Administrator's guide

Welcome to the Vertica Administrator's Guide. This document describes how to set up and maintain a Vertica Analytics Platform database.

Prerequisites

This document makes the following assumptions:

1 - Administration overview

This document describes the functions performed by a Vertica database administrator (DBA). Perform these tasks using only the dedicated database administrator account that was created when you installed Vertica. The examples in this documentation set assume that the administrative account name is dbadmin.

- To perform certain cluster configuration and administration tasks, the DBA (a user of the administrative account) must be able to supply the root password for those hosts. If this requirement conflicts with your organization's security policies, these functions must be performed by your IT staff.

- If you perform administrative functions using an account other than the one provided during installation, Vertica encounters file ownership problems.

- If you share the administrative account password, make sure that only one user runs the Administration Tools at any time. Otherwise, automatic configuration propagation does not work correctly.

- The Administration Tools require that the calling user's shell be /bin/bash. Other shells give unexpected results and are not supported.

2 - Managing licenses

You must license Vertica in order to use it. Vertica supplies your license in the form of one or more license files, which encode the terms of your license.

Do not open the license files in an editor. Doing so can introduce special characters, such as line endings and file terminators, that may not be visible within the editor. Whether visible or not, these characters invalidate the license.

Applying license files

Be careful not to change the license key file in any way when copying the file between Windows and Linux, or to any other location. To help prevent applications from trying to alter the file, enclose the license file in an archive file (such as a .zip or .tar file). Keep a backup of your license key file. OpenText recommends that you keep the backup in /opt/vertica.

After copying the license file from one location to another, check that the copied file size is identical to that of the one you received from Vertica.
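The size check described above can be scripted. The following is a minimal Python sketch, not part of Vertica: the helper names `file_digest` and `copies_match` are hypothetical, and the SHA-256 comparison is an extra safeguard beyond the size check that the text asks for. Files are read in binary mode so the script itself cannot alter line endings.

```python
import hashlib
from pathlib import Path


def file_digest(path: Path) -> str:
    """Return the SHA-256 hex digest of a file, read as raw bytes."""
    return hashlib.sha256(path.read_bytes()).hexdigest()


def copies_match(original: Path, copy: Path) -> bool:
    """True if both files have the same size and the same contents."""
    same_size = original.stat().st_size == copy.stat().st_size
    return same_size and file_digest(original) == file_digest(copy)
```

For example, `copies_match(Path("received/license.dat"), Path("/opt/vertica/license.dat"))` (both paths are placeholders) returns False if a transfer tool changed line endings in the copy.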

2.1 - Obtaining a license key file

Follow these steps to obtain a license key file:

1. Log in to the Software Entitlement Key site using your passport login information. If you do not have a passport login, create one.

2. On the Request Access page, enter your order number and select a role.

3. Enter your request access reasoning.

4. Click Submit.

5. After your request is approved, you will receive a confirmation email. On the site, click the Entitlements tab to see your Vertica software.

6. Under the Action tab, click Activate. You may select more than one product.

7. The License Activation page opens. Enter your Target Name.

8. Select your Vertica version and the quantity you want to activate.

9. Click Next.

10. Confirm your activation details and click Submit.

11. The Activation Results page displays. Follow the instructions in New Vertica license installations or Vertica license changes to complete your installation or upgrade.

Your Vertica Community Edition download package includes the Community Edition license, which allows three nodes and 1TB of data. The Vertica Community Edition license does not expire.

2.2 - Understanding Vertica licenses

Vertica has flexible licensing terms. It can be licensed on the following bases:

- Term-based (valid until a specific date).

- Size-based (valid to store up to a specified amount of raw data).

- Both term- and size-based.

- Unlimited duration and data storage.

- Node-based with an unlimited number of CPUs and users (one node is a server acting as a single computer system, whether physical or virtual).

- A pay-as-you-go model where you pay for only the number of hours you use. This license is available on your cloud provider's marketplace.

Your license key has your licensing bases encoded into it. If you are unsure of your current license, you can view your license information from within Vertica.

Note

Vertica does not support license downgrades.

Vertica Community Edition (CE) is free and allows customers to create databases with the following limits:

- Up to 3 nodes

- Up to 1 terabyte of data

Community Edition licenses cannot be installed co-located in a Hadoop infrastructure and used to query data stored in Hadoop formats.

As part of the CE license, you agree to the collection of some anonymous, non-identifying usage data. This data lets Vertica understand how customers use the product, and helps guide the development of new features. None of your personal data is collected. For details on what is collected, see the Community Edition End User License Agreement.

Vertica for SQL on Apache Hadoop license

Vertica for SQL on Apache Hadoop is a separate product with its own license. This documentation covers both products. Consult your license agreement for details about available features and limitations.

2.3 - Installing or upgrading a license key

The steps you follow to apply your Vertica license key vary, depending on the type of license you are applying and whether you are upgrading your license.

2.3.1 - New Vertica license installations

Follow these steps to install a new Vertica license:

1. Copy the license key file you generated from the Software Entitlement Key site to your Administration host.

2. Ensure the license key's file permissions are set to 400 (read permissions).

3. Install Vertica as described in Installing Vertica, if you have not already done so. The interface prompts you for the license key file.

4. To install Community Edition, leave the default path blank and click OK. To apply your evaluation or Premium Edition license, enter the absolute path of the license key file you downloaded to your Administration host and click OK. The first time you log in as the Database Superuser and run the Administration Tools, the interface prompts you to accept the End-User License Agreement (EULA).

Note

If you installed Management Console, the MC administrator can point to the location of the license key during Management Console configuration.

5. Choose View EULA.

6. Exit the EULA and choose Accept EULA to officially accept the EULA and continue installing the license, or choose Reject EULA to reject the EULA and return to the Advanced Menu.
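The permission requirement in the steps above (mode 400, owner read-only) can be checked before you start the installer. This is a minimal Python sketch for a Linux host; the helper names are hypothetical and the script is not part of Vertica. It does the same thing as running `chmod 400` on the file.

```python
import os
import stat
from pathlib import Path


def is_owner_read_only(path: Path) -> bool:
    """True if the file's permission bits are exactly 0o400."""
    return stat.S_IMODE(path.stat().st_mode) == 0o400


def restrict_to_owner_read(path: Path) -> None:
    """Set the permission bits to 0o400 (equivalent to `chmod 400`)."""
    os.chmod(path, 0o400)
```

A typical use is to call `restrict_to_owner_read()` on the copied license key file only when `is_owner_read_only()` returns False, so the file is not touched unnecessarily.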

2.3.2 - Vertica license changes

If your license is expiring or you want your database to grow beyond your licensed data size, you must renew or upgrade your license. After you obtain your renewal or upgraded license key file, you can install it using Administration Tools or Management Console.

Upgrading does not require a new license unless you are increasing the capacity of your database. You can add capacity to your database using the Software Entitlement Key. You do not need to uninstall and reinstall the license to add capacity.

1. Copy the license key file you generated from the Software Entitlement Key site to your Administration host.

2. Ensure the license key's file permissions are set to 400 (read permissions).

3. Start your database, if it is not already running.

4. In the Administration Tools, select Advanced > Upgrade License Key and click OK.

5. Enter the absolute path to your new license key file and click OK. The interface prompts you to accept the End-User License Agreement (EULA).

6. Choose View EULA.

7. Exit the EULA and choose Accept EULA to officially accept the EULA and continue installing the license, or choose Reject EULA to reject the EULA and return to the Advanced Menu.

Uploading or upgrading a license key using Management Console

1. From your database's Overview page in Management Console, click the License tab. The License page displays. You can view your installed licenses on this page.

2. Click Install New License at the top of the License page.

3. Browse to the location of the license key from your local computer and upload the file.

4. Click Apply at the top of the page. Management Console prompts you to accept the End-User License Agreement (EULA).

5. Select the check box to officially accept the EULA and continue installing the license, or click Cancel to exit.

Note

As soon as you renew or upgrade your license key from either your Administration host or Management Console, Vertica applies the license update. No further warnings appear.

Adding capacity

If you are adding capacity to your database, you do not need to uninstall and reinstall the license. Instead, you can install multiple licenses to increase the size of your database. This additive capacity only works for licenses with the same format, such as adding Premium license capacity to an existing Premium license. When you add capacity, the size of the license is the total of both licenses; the previous license is not overwritten. You cannot add capacity using two different license formats, such as adding Hadoop license capacity to an existing Premium license.

The added capacity is reflected in your license the next time the audit function runs automatically. To verify the added capacity immediately, run the AUDIT() function to refresh the result.

Note

If you have an expired license, you must drop the expired license before you can continue to use Vertica. For more information, see DROP_LICENSE.

2.4 - Viewing your license status

You can use several functions to display your license terms and current status.

Examining your license key

Use the DISPLAY_LICENSE SQL function to display the license information. This function displays the dates for which your license is valid (or Perpetual if your license does not expire) and any raw data allowance. For example:

=> SELECT DISPLAY_LICENSE();

DISPLAY_LICENSE

---------------------------------------------------

Vertica Systems, Inc.

2007-08-03

Perpetual

500GB

(1 row)

You can also query the LICENSES system table to view information about your installed licenses. This table displays your license types, the dates for which your licenses are valid, and the size and node limits your licenses impose.

Alternatively, use the LICENSES table in Management Console. On your database Overview page, click the License tab to view information about your installed licenses.

Viewing your license compliance

If your license includes a raw data size allowance, Vertica periodically audits your database's size to ensure it remains compliant with the license agreement. If your license has a term limit, Vertica also periodically checks to see if the license has expired. You can see the result of the latest audits using the GET_COMPLIANCE_STATUS function.

=> select GET_COMPLIANCE_STATUS();

GET_COMPLIANCE_STATUS

---------------------------------------------------------------------------------

Raw Data Size: 2.00GB +/- 0.003GB

License Size : 4.000GB

Utilization : 50%

Audit Time : 2011-03-09 09:54:09.538704+00

Compliance Status : The database is in compliance with respect to raw data size.

License End Date: 04/06/2011

Days Remaining: 28.59

(1 row)
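If you collect this output from a script, the labelled lines can be split into fields. The following Python sketch assumes that the function result has already been fetched as one multi-line string by whatever client you use, and that the labels match the example output above (the layout may differ between versions). The helper names are hypothetical.

```python
def parse_compliance_status(text: str) -> dict[str, str]:
    """Split 'Label : value' lines into a dictionary.

    Lines without a colon (the column header, the dashed rule, and the
    row count) are skipped. Only the first colon on a line separates the
    label from the value, so timestamps keep their own colons.
    """
    fields = {}
    for line in text.splitlines():
        key, sep, value = line.partition(":")
        if sep and key.strip():
            fields[key.strip()] = value.strip()
    return fields


def utilization_percent(fields: dict[str, str]) -> float:
    """Return the 'Utilization' field as a number, for example 50.0."""
    return float(fields["Utilization"].rstrip("%"))
```

A monitoring job could compare `utilization_percent()` against a threshold of your choosing and alert before the database nears its allowance.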

To see how your ORC/Parquet data is affecting your license compliance, see Viewing license compliance for Hadoop file formats.

Viewing your license status through MC

Information about license usage is on the Settings page. See Monitoring database size for license compliance.

2.5 - Viewing license compliance for Hadoop file formats

You can use the EXTERNAL_TABLE_DETAILS system table to gather information about all of your tables based on Hadoop file formats. This information can help you understand how much of your license's data allowance is used by ORC and Parquet-based data.

Vertica computes the values in this table at query time, so to avoid performance problems, restrict your queries to filter by table_schema, table_name, or source_format. These three columns are the only columns you can use in a predicate, but you may use all of the usual predicate operators.

=> SELECT * FROM EXTERNAL_TABLE_DETAILS

WHERE source_format = 'PARQUET' OR source_format = 'ORC';

-[ RECORD 1 ]---------+-------------------------------------

schema_oid | 45035996273704978

table_schema | public

table_oid | 45035996273760390

table_name | ORC_demo

source_format | ORC

total_file_count | 5

total_file_size_bytes | 789

source_statement | COPY FROM 'ORC_demo/*' ORC

file_access_error |

-[ RECORD 2 ]---------+-------------------------------------

schema_oid | 45035196277204374

table_schema | public

table_oid | 45035996274460352

table_name | Parquet_demo

source_format | PARQUET

total_file_count | 3

total_file_size_bytes | 498

source_statement | COPY FROM 'Parquet_demo/*' PARQUET

file_access_error |

When computing the size of an external table, Vertica counts all data found in the location specified by the COPY FROM clause. If you have a directory that contains ORC and delimited files, for example, and you define your external table with "COPY FROM *" instead of "COPY FROM *.orc", this table includes the size of the delimited files. (You would probably also encounter errors when querying that external table.) When you query this table, Vertica does not validate your table definition; it only uses the path to find files to report.

You can also use the AUDIT function to find the size of a specific table or schema. When you use the AUDIT function on ORC or Parquet external tables, the error tolerance and confidence level parameters are ignored. Instead, AUDIT always returns the size of the ORC or Parquet files on disk.

=> select AUDIT('customers_orc');

AUDIT

-----------

619080883

(1 row)

2.6 - Moving a cloud installation from by the hour (BTH) to bring your own license (BYOL)

Vertica offers two licensing options for some of the entries in the Amazon Web Services Marketplace and Google Cloud Marketplace:

- Bring Your Own License (BYOL): a long-term license that you obtain through an online licensing portal. These deployments also work with a free Community Edition license. Vertica uses a community license automatically if you do not install a license that you purchased. (For more about Vertica licenses, see Managing licenses and Understanding Vertica licenses.)

- Vertica by the Hour (BTH): a pay-as-you-go environment where you are charged an hourly fee for both the use of Vertica and the cost of the instances it runs on. The Vertica by the hour deployment offers an alternative to purchasing a term license. If you want to crunch large volumes of data within a short period of time, this option might work better for you. The BTH license is automatically applied to all clusters you create using a BTH MC instance.

If you start out with an hourly license, you can later decide to use a long-term license for your database. The support for an hourly versus a long-term license is built into the instances running your database. To move your database from an hourly license to a long-term license, you must create a new database cluster with a new set of instances.

To move from an hourly to a long-term license, follow these steps:

1. Purchase a BYOL license. Follow the process described in Obtaining a license key file.

2. Apply the new license to your database.

3. Shut down your database.

4. Create a new database cluster using a BYOL marketplace entry.

5. Revive your database onto the new cluster.

The exact steps you must take depend on your database mode and your preferred tool for managing your database:

Moving an Eon Mode database from BTH to BYOL using the command line

Follow these steps to move an Eon Mode database from an hourly to a long-term license.

1. Obtain a long-term BYOL license from the online licensing portal, described in Obtaining a license key file.

2. Upload the license file to a node in your database. Note the absolute path in the node's filesystem, as you will need this later when installing the license.

3. Connect to the node you uploaded the license file to in the previous step.

4. Connect to your database using vsql and view the licenses table:

=> SELECT * FROM licenses;

Note the name of the hourly license listed in the NAME column, so you can check if it is still present later.

5. Install the license in the database using the INSTALL_LICENSE function with the absolute path to the license file you uploaded in step 2:

=> SELECT install_license('absolute path to BYOL license');

6. View the licenses table again:

=> SELECT * FROM licenses;

If only the new BYOL license appears in the table, skip to step 8. If the hourly license whose name you noted in step 4 is still in the table, copy the name and proceed to step 7.

7. Call the DROP_LICENSE function to drop the hourly license:

=> SELECT drop_license('hourly license name');

8. You will need the path for your cluster's communal storage in a later step. If you do not already know the path, you can find this information by executing this query:

=> SELECT location_path FROM V_CATALOG.STORAGE_LOCATIONS

WHERE sharing_type = 'COMMUNAL';

9. Synchronize your database's metadata. See Synchronizing metadata.

10. Shut down the database by calling the SHUTDOWN function:

=> SELECT SHUTDOWN();

11. Create a new BYOL cluster onto which you will revive your database. Deploy a new cluster, including a new MC instance, using a BYOL entry in the marketplace of your chosen cloud platform. For instructions, see the deployment documentation for your cloud platform.

Important

Your new BYOL cluster must have the same number of primary nodes as your existing hourly license cluster.

12. Revive your database onto the new cluster. For instructions, see Reviving an Eon Mode database cluster. Because you created the new cluster using a BYOL entry in the marketplace, the database uses the BYOL license you applied earlier.

13. After reviving the database on your new BYOL cluster, terminate the instances for your hourly license cluster and MC. For instructions, see your cloud provider's documentation.

Moving an Eon Mode database from BTH to BYOL using the MC

Follow this procedure to move to BYOL and revive your database using MC:

1. Purchase a long-term BYOL license from the online licensing portal, following the steps detailed in Obtaining a license key file. Save the file to a location on your computer.

2. You now need to install the new license on your database. Log into MC and click your database in the Recent Databases list.

3. At the bottom of your database's Overview page, click the License tab.

4. Under the Installed Licenses list, note the name of the BTH license in the License Name column. You will need this later to check whether it is still present after installing the new long-term license.

5. In the ribbon at the top of the License History page, click the Install New License button. The Settings: License page opens.

6. Click the Browse button next to the Upload a new license box.

7. Locate the license file you obtained in step 1, and click Open.

8. Click the Apply button on the top right of the page.

9. Select the checkbox to agree to the EULA terms and click OK.

10. After Vertica installs the license, click the Close button.

11. Click the License tab at the bottom of the page.

12. If only the new long-term license appears in the Installed Licenses list, skip to step 16. If the by-the-hour license also appears in the list, copy down its name from the License Name column.

13. You must drop the by-the-hour license before you can proceed. At the bottom of the page, click the Query Execution tab.

14. In the query editor, enter the following statement:

SELECT DROP_LICENSE('hourly license name');

15. Click Execute Query. The query should complete indicating that the license has been dropped.

16. You will need the path for your cluster's communal storage in a later step. If you do not already know the path, you can find this information by executing this query in the Query Execution tab:

SELECT location_path FROM V_CATALOG.STORAGE_LOCATIONS

WHERE sharing_type = 'COMMUNAL';

17. Synchronize your database's metadata. See Synchronizing metadata.

18. You must now stop your by-the-hour database cluster. At the bottom of the page, click the Manage tab.

19. In the banner at the top of the page, click Stop Database and then click OK to confirm.

20. From the Amazon Web Services Marketplace or the Google Cloud Marketplace, deploy a new Vertica Management Console using a BYOL entry. Do not deploy a full cluster. You just need an MC deployment.

21. Log into your new MC instance and revive the database. See Reviving an Eon Mode database on AWS in MC for detailed instructions.

22. After reviving the database on your new environment, terminate the instances for your hourly license environment. To do so, on the AWS CloudFormation Stacks page, select the hourly environment's stack (its collection of AWS resources) and click Actions > Delete Stack.

Moving an Enterprise Mode database from hourly to BYOL using backup and restore

Note

Currently, AWS is the only platform supported for Enterprise Mode databases using hourly licenses.

In an Enterprise Mode database, follow this procedure to move to BYOL, and then back up and restore your database:

1. Obtain a long-term BYOL license from the online licensing portal, described in Obtaining a license key file.

2. Upload the license file to a node in your database. Note the absolute path in the node's filesystem, as you will need this later when installing the license.

3. Connect to the node you uploaded the license file to in the previous step.

4. Connect to your database using vsql and view the licenses table:

=> SELECT * FROM licenses;

Note the name of the hourly license listed in the NAME column, so you can check if it is still present later.

5. Install the license in the database using the INSTALL_LICENSE function with the absolute path to the license file you uploaded in step 2:

=> SELECT install_license('absolute path to BYOL license');

6. View the licenses table again:

=> SELECT * FROM licenses;

If only the new BYOL license appears in the table, skip to step 8. If the hourly license whose name you noted in step 4 is still in the table, copy the name and proceed to step 7.

7. Call the DROP_LICENSE function to drop the hourly license:

=> SELECT drop_license('hourly license name');

8. Back up the database. See Backing up and restoring the database.

9. Deploy a new cluster for your database using one of the BYOL entries in the Amazon Web Services Marketplace.

10. Restore the database from the backup you created earlier. See Backing up and restoring the database. When you restore the database, it uses the BYOL license you loaded earlier.

11. After restoring the database on your new environment, terminate the instances for your hourly license environment. To do so, on the AWS CloudFormation Stacks page, select the hourly environment's stack (its collection of AWS resources) and click Actions > Delete Stack.

After completing one of these procedures, see Viewing your license status to confirm the license drop and install were successful.

2.7 - Auditing database size

You can use your Vertica software until columnar data reaches the maximum raw data size that your license agreement allows. Vertica periodically runs an audit of the columnar data size to verify that your database complies with this agreement. You can also run your own audits of database size with two functions:

- AUDIT: Estimates the raw data size of a database, schema, or table.

- AUDIT_FLEX: Estimates the size of one or more flexible tables in a database, schema, or projection.

The following two examples audit the database and one schema:

=> SELECT AUDIT('', 'database');

AUDIT

----------

76376696

(1 row)

=> SELECT AUDIT('online_sales', 'schema');

AUDIT

----------

35716504

(1 row)

Raw data size

AUDIT and AUDIT_FLEX use statistical sampling to estimate the raw data size of data stored in tables—that is, the uncompressed data that the database stores. For most data types, Vertica evaluates the raw data size as if the data were exported from the database in text format, rather than as compressed data. For details, see Evaluating Data Type Footprint.

By using statistical sampling, the audit minimizes its impact on database performance. The tradeoff between accuracy and performance impact is a small margin of error. Reports on your database size include the margin of error, so you can assess the accuracy of the estimate.
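To illustrate the tradeoff described above, the following Python sketch estimates a total size from a simple random sample and reports a margin of error. It is a generic textbook estimator and an assumption for illustration only; it is not Vertica's audit algorithm. The function name, the `z` value (2.576, roughly a 99% interval under a normal approximation), and the fixed seed are all hypothetical choices.

```python
import math
import random


def estimate_total_size(row_sizes, sample_size, z=2.576, seed=0):
    """Estimate sum(row_sizes) from a simple random sample.

    row_sizes must be a list of per-row byte counts, and sample_size
    must be at least 2 and no larger than the list. Returns
    (estimate, margin), where margin is the half-width of an
    approximate confidence interval around the estimate.
    """
    rng = random.Random(seed)
    sample = rng.sample(row_sizes, sample_size)
    n, population = len(sample), len(row_sizes)
    mean = sum(sample) / n
    # Sample variance with Bessel's correction (n - 1).
    variance = sum((x - mean) ** 2 for x in sample) / (n - 1)
    standard_error = math.sqrt(variance / n)
    return population * mean, population * z * standard_error
```

A larger sample narrows the margin at the cost of reading more rows, which is the same accuracy-versus-impact tradeoff that the audit makes.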

Data in ORC and Parquet-based external tables is also audited, whether it is stored locally in the Vertica cluster's file system or remotely in S3 or on a Hadoop cluster. AUDIT always uses the file size of the underlying data files as the amount of data in the table. For example, suppose you have an external table based on 1GB of ORC files stored in HDFS. Then an audit of the table reports it as being 1GB in size.

Note

The Vertica audit does not verify that these files contain actual ORC or Parquet data. It just checks the size of the files that correspond to the external table definition.

Unaudited data

Table data that appears in multiple projections is counted only once. An audit also excludes the following data:

- Temporary table data.

- Data in SET USING columns.

- Non-columnar data accessible through external table definitions. Data in columnar formats such as ORC and Parquet count against your totals.

- Data that was deleted but not yet purged.

- Data stored in system and work tables such as monitoring tables, Data collector tables, and Database Designer tables.

- Delimiter characters.

Vertica evaluates the footprint of different data types as follows:

- String and binary types (CHAR, VARCHAR, BINARY, VARBINARY) are counted as their actual size in bytes using UTF-8 encoding.

- Numeric data types are evaluated as if they were printed. Each digit counts as a byte, as does any decimal point, sign, or scientific notation. For example, -123.456 counts as eight bytes: six digits plus the decimal point and minus sign.

- Date/time data types are evaluated as if they were converted to text, including hyphens, spaces, and colons. For example, vsql prints a timestamp value of 2011-07-04 12:00:00 as 19 characters, or 19 bytes.

- Complex types are evaluated as the sum of the sizes of their component parts. An array is counted as the total size of all elements, and a ROW is counted as the total size of all fields.
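The footprint rules above can be worked through with a short Python sketch. It is only an approximation of the stated rules, not Vertica's implementation: the function name is hypothetical, a Python list stands in for an array and a dict for a ROW, numbers are limited to `int` and `Decimal` so that their printed form is unambiguous, and timestamps are assumed to print without fractional seconds or a time zone.

```python
from datetime import datetime
from decimal import Decimal


def footprint(value) -> int:
    """Approximate the bytes counted for one value under the rules above."""
    if isinstance(value, str):
        return len(value.encode("utf-8"))  # strings: UTF-8 byte length
    if isinstance(value, (bytes, bytearray)):
        return len(value)  # binary types: actual size in bytes
    if isinstance(value, datetime):
        # Date/time: length of the text form, e.g. '2011-07-04 12:00:00'.
        return len(value.strftime("%Y-%m-%d %H:%M:%S"))
    if isinstance(value, (int, Decimal)) and not isinstance(value, bool):
        return len(str(value))  # numerics: digits, sign, decimal point
    if isinstance(value, (list, tuple)):
        return sum(footprint(v) for v in value)  # array: sum of elements
    if isinstance(value, dict):
        return sum(footprint(v) for v in value.values())  # ROW: sum of fields
    raise TypeError(f"unsupported type: {type(value).__name__}")
```

For instance, `footprint(Decimal("-123.456"))` gives 8 and `footprint(datetime(2011, 7, 4, 12, 0, 0))` gives 19, matching the two worked examples in the list.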

Controlling audit accuracy

AUDIT lets you specify an audit's error tolerance and confidence level, which are set by default to 5 and 99 percent, respectively. For example, you can obtain a high level of audit accuracy by setting error tolerance and confidence level to 0 and 100 percent, respectively. With these settings, instead of estimating raw data size with statistical sampling, Vertica dumps all audited data to a raw format to calculate its size.

Caution

Vertica discourages database-wide audits at this level. Doing so can have a significant adverse impact on database performance.

The following example audits the database with 25% error tolerance:

=> SELECT AUDIT('', 25);

AUDIT

----------

75797126

(1 row)

The following example audits the database with 25% error tolerance and a 90% confidence level:

=> SELECT AUDIT('',25,90);

AUDIT

----------

76402672

(1 row)

Note

These accuracy settings have no effect on audits of external tables based on ORC or Parquet files. Audits of external tables based on these formats always use the file size of ORC or Parquet files.

2.8 - Monitoring database size for license compliance

Your Vertica license can include a data storage allowance. The allowance can consist of data in columnar tables, flex tables, or both types of data. The AUDIT() function estimates the columnar table data size and any flex table materialized columns. The AUDIT_FLEX() function estimates the amount of __raw__ column data in flex or columnar tables. For license data limits, data in __raw__ columns is calculated at 1/10th the size of structured data. Monitoring data sizes for columnar and flex tables lets you plan either to schedule deleting old data to keep your database in compliance with your license, or to consider a license upgrade for additional data storage.

Note

An audit of columnar data includes flex table real and materialized columns, but not __raw__ column data.

Viewing your license compliance status

Vertica periodically runs an audit of the columnar data size to verify that your database is compliant with your license terms. You can view the results of the most recent audit by calling the GET_COMPLIANCE_STATUS function.

=> select GET_COMPLIANCE_STATUS();

GET_COMPLIANCE_STATUS

---------------------------------------------------------------------------------

Raw Data Size: 2.00GB +/- 0.003GB

License Size : 4.000GB

Utilization : 50%

Audit Time : 2011-03-09 09:54:09.538704+00

Compliance Status : The database is in compliance with respect to raw data size.

License End Date: 04/06/2011

Days Remaining: 28.59

(1 row)

Periodically running GET_COMPLIANCE_STATUS to monitor your database's license status is usually enough to ensure that your database remains compliant with your license. If your database begins to near its columnar data allowance, you can use the other auditing functions described below to determine where your database is growing and how recent deletes affect the database size.

Manually auditing columnar data usage

You can manually check license compliance for all columnar data in your database using the AUDIT_LICENSE_SIZE function. This function performs the same audit that Vertica periodically performs automatically. The AUDIT_LICENSE_SIZE check runs in the background, so the function returns immediately. You can then query the results using GET_COMPLIANCE_STATUS.

Note

When you audit columnar data, the results include any flex table real and materialized columns, but not data in the __raw__ column. Materialized columns are virtual columns that you have promoted to real columns. Columns that you define when creating a flex table, or which you add with ALTER TABLE...ADD COLUMN statements are real columns. All __raw__ columns are real columns. However, since they consist of unstructured or semi-structured data, they are audited separately.

An alternative to AUDIT_LICENSE_SIZE is to use the AUDIT function to audit the size of the columnar tables in your entire database by passing an empty string to the function. This function operates synchronously, returning when it has estimated the size of the database.

=> SELECT AUDIT('');

AUDIT

----------

76376696

(1 row)

The size of the database is reported in bytes. The AUDIT function also allows you to control the accuracy of the estimated database size using additional parameters. See the entry for the AUDIT function for full details. Vertica does not count the AUDIT function results as an official audit. It takes no license compliance actions based on the results.

Note

The results of the AUDIT function do not include flex table data in __raw__ columns. Use the AUDIT_FLEX function to monitor data usage in flex tables.

Manually auditing __raw__ column data

You can use the AUDIT_FLEX function to manually audit data usage for flex or columnar tables with a __raw__ column. The function calculates the encoded, compressed data stored in ROS containers for any __raw__ columns. Materialized columns in flex tables are calculated by the AUDIT function. The AUDIT_FLEX results do not include data in the __raw__ columns of temporary flex tables.

Targeted auditing

If audits determine that the columnar table estimates are unexpectedly large, consider schemas, tables, or partitions that are using the most storage. You can use the AUDIT function to perform targeted audits of schemas, tables, or partitions by supplying the name of the entity whose size you want to find. For example, to find the size of the online_sales schema in the VMart example database, run the following command:

=> SELECT AUDIT('online_sales');

AUDIT

----------

35716504

(1 row)

You can also change the granularity of an audit to report the size of each object in a larger entity (for example, each table in a schema) by using the granularity argument of the AUDIT function. See the AUDIT function.
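To compare targeted audit results, it can help to express each one as a share of the whole-database estimate. The following Python sketch uses the byte counts from the two AUDIT examples in this section. It assumes that both estimates describe the same state of the database, and the function name is hypothetical.

```python
def audit_shares(total_bytes: int, entity_bytes: dict) -> dict:
    """Return each entity's audited size as a percentage of the total."""
    if total_bytes <= 0:
        raise ValueError("total_bytes must be positive")
    return {name: 100.0 * size / total_bytes for name, size in entity_bytes.items()}


if __name__ == "__main__":
    # Figures from the AUDIT('') and AUDIT('online_sales') examples above.
    shares = audit_shares(76376696, {"online_sales": 35716504})
    for name, pct in shares.items():
        print(f"{name}: {pct:.1f}% of the audited database size")
```

Because AUDIT returns estimates, treat the resulting percentages as approximate.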

Using Management Console to monitor license compliance

You can also get information about data storage of columnar data (for columnar tables and for materialized columns in flex tables) through the Management Console. This information is available in the database Overview page, which displays a grid view of the database's overall health.

-

The needle in the license meter adjusts to reflect the amount used in megabytes.

-

The grace period represents the term portion of the license.

-

The Audit button returns the same information as the AUDIT() function in a graphical representation.

-

The Details link within the License grid (next to the Audit button) provides historical information about license usage. This page also shows a progress meter of percent used toward your license limit.

2.9 - Managing license warnings and limits

Term license warnings and expiration

The term portion of a Vertica license is easy to manage—you are licensed to use Vertica until a specific date. If the term of your license expires, Vertica alerts you with messages appearing in the Administration tools and vsql. For example:

=> CREATE TABLE T (A INT);

NOTICE 8723: Vertica license 432d8e57-5a13-4266-a60d-759275416eb2 is in its grace period; grace period expires in 28 days

HINT: Renew at https://softwaresupport.softwaregrp.com/

CREATE TABLE

Contact Vertica at https://softwaresupport.softwaregrp.com/ as soon as possible to renew your license, and then install the new license. After the grace period expires, Vertica stops processing DML queries and allows DDL queries with a warning message. If a license expires and one or more valid alternative licenses are installed, Vertica uses the alternative licenses.

Data size license warnings and remedies

If your Vertica columnar license includes a raw data size allowance, Vertica periodically audits the size of your database to ensure it remains compliant with the license agreement. For details of this audit, see Auditing database size. You should also monitor your database size to know when it will approach licensed usage. Monitoring the database size helps you plan to either upgrade your license to allow for continued database growth or delete data from the database so you remain compliant with your license. See Monitoring database size for license compliance for details.

If your database's size approaches your licensed usage allowance (above 75% of license limits), you will see warnings in the Administration tools, vsql, and Management Console. You have two options to eliminate these warnings:

-

Upgrade your license to a larger data size allowance.

-

Delete data from your database to remain under your licensed raw data size allowance. The warnings disappear after Vertica's next audit of the database size shows that it is no longer close to or over the licensed amount. You can also manually run a database audit (see Monitoring database size for license compliance for details).

If your database continues to grow after you receive warnings that its size is approaching your licensed size allowance, Vertica displays additional warnings in more parts of the system after a grace period passes. Use the GET_COMPLIANCE_STATUS function to check the status of your license.
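The 75% warning threshold can be expressed as a simple check in a monitoring script. The following Python sketch is only an illustration: the labels "ok", "warning", and "over" are hypothetical, and it assumes you already have the database size and license size in bytes (for example, from the license audit results).

```python
WARNING_THRESHOLD = 0.75  # warnings start above 75% of the license limit


def compliance_level(database_bytes: int, license_bytes: int) -> str:
    """Classify usage as 'ok', 'warning', or 'over' (labels are illustrative)."""
    if license_bytes <= 0:
        raise ValueError("license_bytes must be positive")
    usage = database_bytes / license_bytes
    if usage > 1.0:
        return "over"
    if usage > WARNING_THRESHOLD:
        return "warning"
    return "ok"
```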

If your Vertica premium edition database size exceeds your licensed limits

If your Premium Edition database size exceeds your licensed data allowance, all successful queries from ODBC and JDBC clients return with a status of SUCCESS_WITH_INFO instead of the usual SUCCESS. The message sent with the results contains a warning about the database size. Your ODBC and JDBC clients should be prepared to handle these messages instead of assuming that successful requests always return SUCCESS.

Note

These warnings for Premium Edition are in addition to any warnings you see in Administration Tools, vsql, and Management Console.
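Client code that compares the return status only with SUCCESS treats these warnings as failures. The following Python sketch shows the intended logic with the standard ODBC return code values (SQL_SUCCESS is 0 and SQL_SUCCESS_WITH_INFO is 1). The helper names are hypothetical; adapt the idea to the way your client library reports status.

```python
# Standard ODBC return codes, as defined in the ODBC headers (sql.h).
SQL_SUCCESS = 0
SQL_SUCCESS_WITH_INFO = 1


def succeeded(return_code: int) -> bool:
    """Treat both SUCCESS and SUCCESS_WITH_INFO as a successful call."""
    return return_code in (SQL_SUCCESS, SQL_SUCCESS_WITH_INFO)


def has_info(return_code: int) -> bool:
    """True when the driver attached a message worth logging or surfacing."""
    return return_code == SQL_SUCCESS_WITH_INFO
```

When has_info is true, log the accompanying message so that the database size warning reaches an administrator.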

If your Community Edition database size exceeds the limit of 1 terabyte, Vertica stops processing DML queries and allows DDL queries with a warning message.

To bring your database under compliance, you can choose to:

2.10 - Exporting license audit results to CSV

You can use admintools to audit a database for license compliance and export the results in CSV format, as follows:

admintools -t license_audit [--password=password] --database=database [--file=csv-file] [--quiet]

where:

-

database must be a running database. If the database is password protected, you must also supply the password.

-

--file csv-file directs output to the specified file. If csv-file already exists, the tool returns an error message. If this option is unspecified, output is directed to stdout.

-

--quiet specifies that the tool should run in quiet mode; if unspecified, status messages are sent to stdout.
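If you schedule the audit from a script, you can assemble the command from the options described above. The following Python sketch only builds and prints the command line; it does not run it. The function name and the sample values are hypothetical.

```python
import shlex


def license_audit_command(database, password=None, csv_file=None, quiet=False):
    """Build the admintools argument list using only the options listed above."""
    cmd = ["admintools", "-t", "license_audit", f"--database={database}"]
    if password is not None:
        cmd.append(f"--password={password}")
    if csv_file is not None:
        cmd.append(f"--file={csv_file}")
    if quiet:
        cmd.append("--quiet")
    return cmd


if __name__ == "__main__":
    # Placeholder values: substitute your own database name and output file.
    print(shlex.join(license_audit_command("VMart", csv_file="audit.csv", quiet=True)))
```

Because the tool returns an error when the CSV file already exists, choose a new file name for each run, for example one that includes the date.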

Running the license_audit tool is equivalent to invoking the following SQL statements:

select audit('');

select audit_flex('');

select * from dc_features_used;

select * from v_catalog.license_audits;

select * from v_catalog.user_audits;

Audit results include the following information:

-

Log of used Vertica features

-

Estimated database size

-

Raw data size allowed by your Vertica license

-

Percentage of licensed allowance that the database currently uses

-

Audit timestamps

The following truncated example shows the raw CSV output that license_audit generates:

FEATURES_USED

features_used,feature,date,sum

features_used,metafunction::get_compliance_status,2014-08-04,1

features_used,metafunction::bootstrap_license,2014-08-04,1

...

LICENSE_AUDITS

license_audits,database_size_bytes,license_size_bytes,usage_percent,audit_start_timestamp,audit_end_timestamp,confidence_level_percent,error_tolerance_percent,used_sampling,confidence_interval_lower_bound_bytes,confidence_interval_upper_bound_bytes,sample_count,cell_count,license_name

license_audits,808117909,536870912000,0.00150523690320551,2014-08-04 23:59:00.024874-04,2014-08-04 23:59:00.578419-04,99,5,t,785472097,830763721,10000,174754646,vertica

...

USER_AUDITS

user_audits,size_bytes,user_id,user_name,object_id,object_type,object_schema,object_name,audit_start_timestamp,audit_end_timestamp,confidence_level_percent,error_tolerance_percent,used_sampling,confidence_interval_lower_bound_bytes,confidence_interval_upper_bound_bytes,sample_count,cell_count

user_audits,812489249,45035996273704962,dbadmin,45035996273704974,DATABASE,,VMart,2014-10-14 11:50:13.230669-04,2014-10-14 11:50:14.069057-04,99,5,t,789022736,835955762,10000,174755178

AUDIT_SIZE_BYTES

audit_size_bytes,now,audit

audit_size_bytes,2014-10-14 11:52:14.015231-04,810584417

FLEX_SIZE_BYTES

flex_size_bytes,now,audit_flex

flex_size_bytes,2014-10-14 11:52:15.117036-04,11850
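To work with this output in a script, you can group the rows by section and map each row to its column names. The following Python sketch assumes the layout shown in the example above: an upper-case title line per section, followed by a row of column names and then data rows, each prefixed with the lower-case section name. The function name is hypothetical.

```python
import csv
import io


def parse_license_audit(text: str) -> dict:
    """Group license_audit CSV rows by section and map them to column names.

    Assumption: each section starts with an upper-case title line, and the
    first row after it holds the column names, as in the sample above.
    """
    sections = {}
    current = None
    columns = None
    for row in csv.reader(io.StringIO(text)):
        if not row:
            continue  # blank line
        if len(row) == 1:
            if row[0].isupper():
                current, columns = row[0], None
                sections[current] = []
            continue  # section title, or a truncation marker such as "..."
        if current is None or row[0] != current.lower():
            continue  # row does not belong to the open section
        if columns is None:
            columns = row[1:]  # first row of a section names the columns
        else:
            sections[current].append(dict(zip(columns, row[1:])))
    return sections
```

All values are returned as strings; convert sizes and timestamps as needed.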

3 - Configuring the database

Before reading the topics in this section, you should be familiar with the material in Getting started and with creating and configuring a fully functioning example database.

3.1 - Configuration procedure

This section describes the tasks required to set up a Vertica database. It assumes that you have a valid license key file, installed the Vertica rpm package, and ran the installation script as described.

You complete the configuration procedure using:

Note

You can also perform certain tasks using Management Console. Those tasks point to the appropriate topic.

Continuing configuring

Follow the configuration procedure sequentially as this section describes.

Vertica strongly recommends that you first experiment with creating and configuring a database.

You can use this generic configuration procedure several times during the development process, modifying it to fit your changing goals. You can omit steps such as preparing actual data files and sample queries, and run the Database Designer without optimizing for queries. For example, you can create, load, and query a database several times for development and testing purposes, then one final time to create and load the production database.

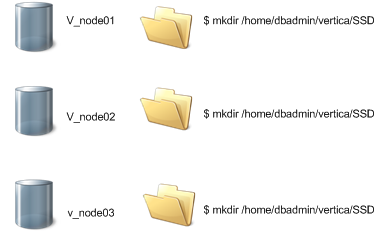

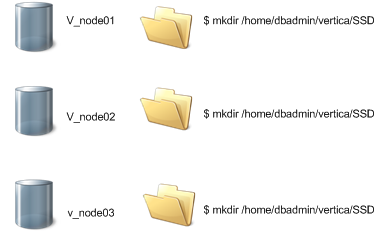

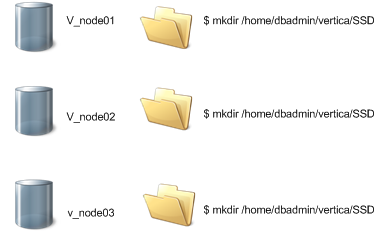

3.1.1 - Prepare disk storage locations

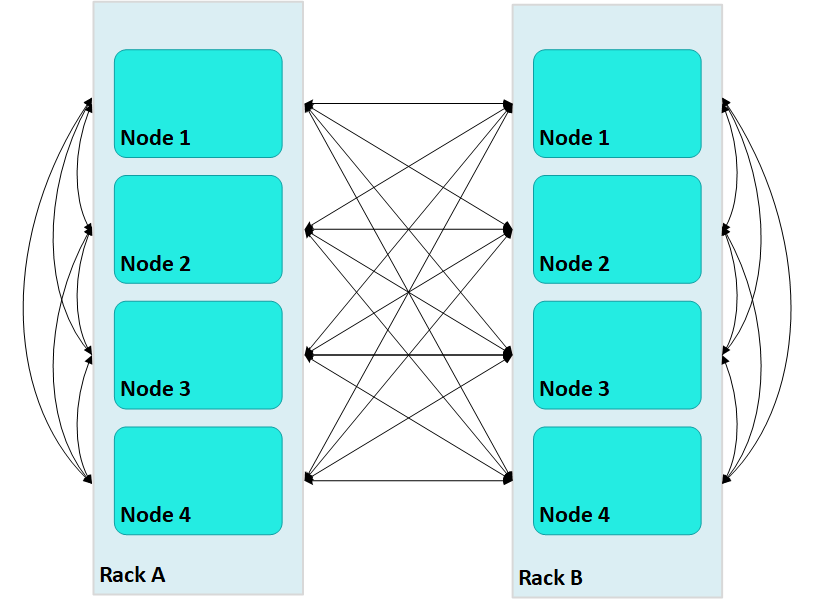

You must create and specify directories in which to store your catalog and data files (physical schema). You can specify these locations when you install or configure the database, or later during database operations. Both the catalog and data directories must be owned by the database superuser.

The directory you specify for database catalog files (the catalog path) is used across all nodes in the cluster. For example, if you specify /home/catalog as the catalog directory, Vertica uses that catalog path on all nodes. The catalog directory should always be separate from any data file directories.

Note

Do not use a shared directory for more than one node. Data and catalog directories must be distinct for each node. Multiple nodes must not be allowed to write to the same data or catalog directory.

The data path you designate is also used across all nodes in the cluster. For example, if you specify that data should be stored in /home/data, Vertica uses this path on all database nodes.

Do not use a single directory to contain both catalog and data files. You can store the catalog and data directories on different drives, which can be either on drives local to the host (recommended for the catalog directory) or on a shared storage location, such as an external disk enclosure or a SAN.

Before you specify a catalog or data path, be sure the parent directory exists on all nodes of your database. Creating a database in admintools also creates the catalog and data directories, but the parent directory must exist on each node.

You do not need to specify a disk storage location during installation. However, you can do so by using the --data-dir parameter to the install_vertica script. See Specifying disk storage location during installation.

3.1.1.1 - Specifying disk storage location during database creation

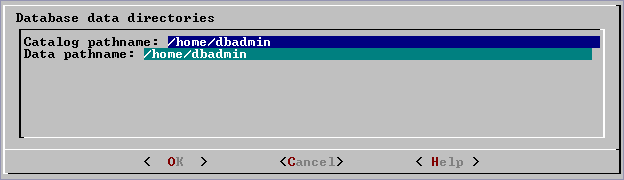

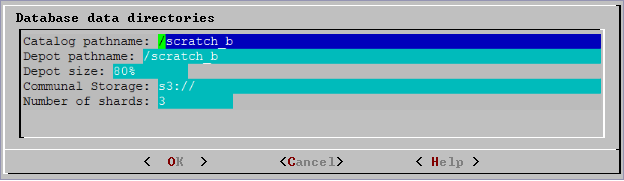

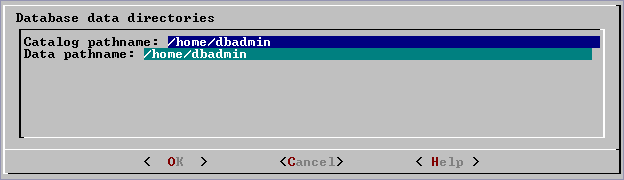

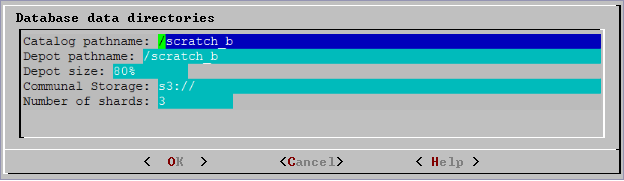

When you invoke the Create Database command in the Administration tools, a dialog box allows you to specify the catalog and data locations. These locations must exist on each host in the cluster and must be owned by the database administrator.

When you click OK, Vertica automatically creates the following subdirectories:

catalog-pathname/database-name/node-name_catalog/

data-pathname/database-name/node-name_data/

For example, if you use the default value (the database administrator's home directory) of /home/dbadmin for the Stock Exchange example database, the catalog and data directories are created on each node in the cluster as follows:

/home/dbadmin/Stock_Schema/stock_schema_node1_host01_catalog

/home/dbadmin/Stock_Schema/stock_schema_node1_host01_data
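The subdirectory layout described in this section can be sketched in Python as follows. The function name is hypothetical, and the sketch only builds path strings from the pattern given here; it does not create directories or query Vertica.

```python
import posixpath


def node_directories(catalog_path, data_path, database_name, node_name):
    """Return the (catalog, data) directories for one node.

    Follows the layout described in this section:
    catalog-pathname/database-name/node-name_catalog and
    data-pathname/database-name/node-name_data.
    """
    catalog_dir = posixpath.join(catalog_path, database_name, f"{node_name}_catalog")
    data_dir = posixpath.join(data_path, database_name, f"{node_name}_data")
    return catalog_dir, data_dir


if __name__ == "__main__":
    for path in node_directories(
        "/home/dbadmin", "/home/dbadmin", "Stock_Schema", "stock_schema_node1_host01"
    ):
        print(path)
```

A helper like this can be useful when you verify, before you reuse a database name, that directories left over from an earlier database have been removed.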

Notes

-

Catalog and data path names must contain only alphanumeric characters and cannot have leading space characters. Failure to comply with these restrictions will result in database creation failure.

-

Vertica refuses to overwrite a directory if it appears to be in use by another database. Therefore, if you created a database for evaluation purposes, dropped the database, and want to reuse the database name, make sure that the disk storage location previously used has been completely cleaned up. See Managing storage locations for details.

3.1.1.2 - Specifying disk storage location on MC

You can use the MC interface to specify where you want to store database metadata on the cluster in the following ways:

See also

Configuring Management Console.

3.1.1.3 - Configuring disk usage to optimize performance

Once you have created your initial storage location, you can add additional storage locations to the database later. Not only does this provide additional space, it lets you control disk usage and increase I/O performance by isolating files that have different I/O or access patterns. For example, consider:

-

Isolating execution engine temporary files from data files by creating a separate storage location for temp space.

-

Creating labeled storage locations and storage policies, in which selected database objects are stored on different storage locations based on measured performance statistics or predicted access patterns.

See also

Managing storage locations

3.1.1.4 - Using shared storage with Vertica

If using shared SAN storage, ensure there is no contention among the nodes for disk space or bandwidth.

-

Each host must have its own catalog and data locations. Hosts cannot share catalog or data locations.

-

Configure the storage so that there is enough I/O bandwidth for each node to access the storage independently.

3.1.1.5 - Viewing database storage information

You can view node-specific information on your Vertica cluster through the Management Console. See Monitoring Vertica Using Management Console for details.

3.1.1.6 - Anti-virus scanning exclusions

You should exclude the Vertica catalog and data directories from anti-virus scanning. Certain anti-virus products have been identified as targeting Vertica directories, and sometimes lock or delete files in them. This can adversely affect Vertica performance and data integrity.

Identified anti-virus products include the following:

-

ClamAV

-

SentinelOne

-

Sophos

-

Symantec

-

Twistlock

Important

This list is not comprehensive.

3.1.2 - Disk space requirements for Vertica

In addition to actual data stored in the database, Vertica requires disk space for several data reorganization operations, such as mergeout and managing nodes in the cluster. For best results, Vertica recommends that disk utilization per node be no more than sixty percent (60%) for a K-Safe=1 database to allow such operations to proceed.
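A quick way to compare a node against the 60% guideline is to read the usage of the file system that holds the data directory. The following Python sketch uses the standard library's shutil.disk_usage; the function names are hypothetical, and the path you pass in is whatever data location you configured.

```python
import shutil

MAX_UTILIZATION = 0.60  # recommended ceiling for a K-Safe=1 database


def utilization(total_bytes: int, used_bytes: int) -> float:
    """Return used space as a fraction of total space."""
    if total_bytes <= 0:
        raise ValueError("total_bytes must be positive")
    return used_bytes / total_bytes


def exceeds_recommendation(path: str) -> bool:
    """Check the file system that holds `path` against the 60% guideline."""
    usage = shutil.disk_usage(path)  # named tuple: total, used, free
    return utilization(usage.total, usage.used) > MAX_UTILIZATION
```

Run the check on each node, because disk utilization is a per-node recommendation.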

In addition, disk space is temporarily required by certain query execution operators, such as hash joins and sorts, in the case when they cannot be completed in memory (RAM). Such operators might be encountered during queries, recovery, refreshing projections, and so on. The amount of disk space needed (known as temp space) depends on the nature of the queries, amount of data on the node and number of concurrent users on the system. By default, any unused disk space on the data disk can be used as temp space. However, Vertica recommends provisioning temp space separate from data disk space.

See also

Configuring disk usage to optimize performance.

3.1.3 - Disk space requirements for Management Console

You can install Management Console on any node in the cluster, so it has no special disk requirements other than disk space you allocate for your database cluster.

3.1.4 - Prepare the logical schema script

Designing a logical schema for a Vertica database is no different from designing one for any other SQL database. Details are described more fully in Designing a logical schema.

To create your logical schema, prepare a SQL script (plain text file, typically with an extension of .sql) that:

-

Creates additional schemas (as necessary). See Using multiple schemas.

-

Creates the tables and column constraints in your database using the CREATE TABLE command.

-

Defines the necessary table constraints using the ALTER TABLE command.

-

Defines any views on the table using the CREATE VIEW command.

You can generate a script file using:

-

A schema designer application.

-

A schema extracted from an existing database.

-

A text editor.

-

One of the example database example-name_define_schema.sql scripts as a template. (See the example database directories in /opt/vertica/examples.)

In your script file, make sure that:

-

Each statement ends with a semicolon.

-

You use data types supported by Vertica, as described in the SQL Reference Manual.

Once you have created a database, you can test your schema script by executing it as described in Create the logical schema. If you encounter errors, drop all tables, correct the errors, and run the script again.

3.1.5 - Prepare data files

Prepare two sets of data files:

-

Test data files. Use test files to test the database after the partial data load. If possible, use part of the actual data files to prepare the test data files.

-

Actual data files. Once the database has been tested and optimized, use your data files for your initial Data load.

How to name data files

Name each data file to match the corresponding table in the logical schema. Case does not matter.

Use the extension .tbl or whatever you prefer. For example, if a table is named Stock_Dimension, name the corresponding data file stock_dimension.tbl. When using multiple data files, append _nnn (where nnn is a positive integer in the range 001 to 999) to the file name. For example, stock_dimension.tbl_001, stock_dimension.tbl_002, and so on.
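The naming convention above can be sketched in Python as follows. The function name is hypothetical. Lowercasing the table name mirrors the example rather than a requirement, because case does not matter.

```python
def data_file_names(table_name: str, file_count: int, extension: str = "tbl"):
    """Return the data file names for a table, following the convention above."""
    if not 1 <= file_count <= 999:
        raise ValueError("file_count must be between 1 and 999")
    base = f"{table_name.lower()}.{extension}"
    if file_count == 1:
        return [base]
    # Multiple files get a zero-padded _nnn suffix: _001, _002, and so on.
    return [f"{base}_{n:03d}" for n in range(1, file_count + 1)]


if __name__ == "__main__":
    for name in data_file_names("Stock_Dimension", 3):
        print(name)
```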

3.1.6 - Prepare load scripts

Note

You can postpone this step if your goal is to test a logical schema design for validity.

Prepare SQL scripts to load data directly into physical storage using COPY on vsql, or through ODBC.

You need scripts that load:

-

Large tables

-

Small tables

Vertica recommends that you load large tables using multiple files. To test the load process, use files of 10GB to 50GB in size. This size provides several advantages:

-

You can use one of the data files as a sample data file for the Database Designer.

-

You can load just enough data to perform a partial data load before you load the remainder.

-

If a single load fails and rolls back, you do not lose an excessive amount of time.

-

Once the load process is tested, for multi-terabyte tables, break up the full load in file sizes of 250–500GB.

Tip

You can use the load scripts included in the example databases as templates.

3.1.7 - Create an optional sample query script

The purpose of a sample query script is to test your schema and load scripts for errors.

Include a sample of queries your users are likely to run against the database. If you don't have any real queries, just write simple SQL that collects counts on each of your tables. Alternatively, you can skip this step.
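If you have no real queries yet, you can generate the row-count script from a list of table names. The following Python sketch is one way to do that. The function name and the table names are placeholders, and the names are inserted into the SQL text as given, so pass only names that you trust.

```python
def count_queries(table_names):
    """Build one SELECT COUNT(*) statement per table, each ending in a semicolon."""
    return "\n".join(f"SELECT COUNT(*) FROM {name};" for name in table_names) + "\n"


if __name__ == "__main__":
    # Placeholder table names: replace them with the tables in your schema.
    print(count_queries(["public.customers", "public.orders"]), end="")
```

Save the output to a .sql file so that you can run it in the same way as your other scripts.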

3.1.8 - Create an empty database

Two options are available for creating an empty database:

Although you can create more than one database (for example, one for production and one for testing), there can be only one active database for each installation of Vertica Analytic Database.

3.1.8.1 - Creating a database name and password

Database names

Database names must conform to the following rules:

-

Be between 1 and 30 characters long

-

Begin with a letter

-

Follow with any combination of letters (upper and lowercase), numbers, and/or underscores.

Database names are case sensitive; however, Vertica strongly recommends that you do not create databases with names that differ only in case. For example, do not create a database called mydatabase and another called MyDataBase.
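The naming rules above can be checked before you create a database. The following Python sketch is a minimal illustration that assumes "letters" means the ASCII letters A-Z and a-z; the function names are hypothetical.

```python
import re

# A letter first, then up to 29 letters, digits, or underscores (1 to 30 in total).
_DB_NAME = re.compile(r"[A-Za-z][A-Za-z0-9_]{0,29}")


def is_valid_database_name(name: str) -> bool:
    """Return True when the name satisfies the rules listed above."""
    return _DB_NAME.fullmatch(name) is not None


def differ_only_in_case(names):
    """Return groups of names that collide when case is ignored."""
    groups = {}
    for name in names:
        groups.setdefault(name.lower(), []).append(name)
    return [group for group in groups.values() if len(group) > 1]
```

The second function flags pairs such as mydatabase and MyDataBase, which are permitted but not recommended.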

Database passwords

Database passwords can contain letters, digits, and the special characters listed below. Passwords cannot include non-ASCII Unicode characters.

The allowed password length is between 0 and 100 characters. The database superuser can change a Vertica user's maximum password length using ALTER PROFILE.

You use Profiles to specify and control password definitions. For instance, a profile can define the maximum length, reuse time, and the minimum number of required digits for a password, as well as other details.

The following special (ASCII) characters are valid in passwords. Special characters can appear anywhere in a password string. For example, mypas$word or $mypassword are both valid, while ±mypassword is not. Using special characters other than the ones listed below can cause database instability.

-

#

-

?

-

=

-

_

-

'

-

)

-

(

-

@

-

\

-

/

-

!

-

,

-

~

-

:

-

%

-

;

-

`

-

^

-

+

-

.

-

-

-

space

-

&

-

<

-

>

-

[

-

]

-

{

-

}

-

|

-

*

-

$

-

"

See also

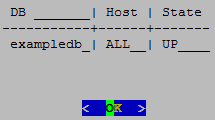
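The password rules in this section can be sketched as a pre-check in Python. The function name is hypothetical. The check covers only the character set and the default length limit described here, not any additional rules that a profile defines.

```python
import string

# Special characters copied from the list in this section, including the space.
ALLOWED_SPECIALS = "#?=_')(@\\/!,~:%;`^+.- &<>[]{}|*$\""
ALLOWED = set(string.ascii_letters + string.digits + ALLOWED_SPECIALS)
MAX_LENGTH = 100  # default upper bound; a profile may change it


def is_valid_password(password: str, max_length: int = MAX_LENGTH) -> bool:
    """Check length and character set; an empty password is permitted."""
    return len(password) <= max_length and all(ch in ALLOWED for ch in password)
```

For example, mypas$word and $mypassword pass the check, while ±mypassword does not.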

3.1.8.2 - Create a database using administration tools

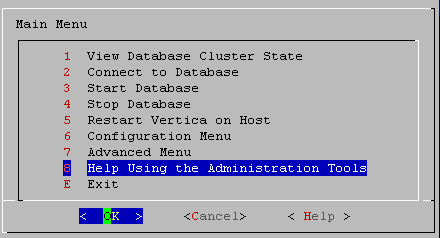

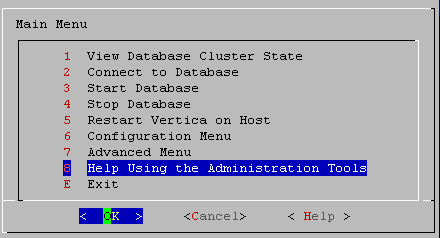

-

Run the Administration tools from your Administration host as follows:

$ /opt/vertica/bin/admintools

If you are using a remote terminal application, such as PuTTY or a Cygwin bash shell, see Notes for remote terminal users.

-

Accept the license agreement and specify the location of your license file. For more information, see Managing licenses.

This step is necessary only if it is the first time you have run the Administration Tools.

-

On the Main Menu, click Configuration Menu, and click OK.

-

On the Configuration Menu, click Create Database, and click OK.

-

Enter the name of the database and an optional comment, and click OK. See Creating a database name and password for naming guidelines and restrictions.

-

Establish the superuser password for your database.

-

To provide a password, enter the password and click OK. Confirm the password by entering it again, and then click OK.

-

If you don't want to provide the password, leave it blank and click OK. If you don't set a password, Vertica prompts you to verify that you truly do not want to establish a superuser password for this database. Click Yes to create the database without a password or No to establish the password.

Caution

If you do not enter a password at this point, the superuser password is set to empty. Unless the database is for evaluation or academic purposes, Vertica strongly recommends that you enter a superuser password. See Creating a database name and password for guidelines.

-

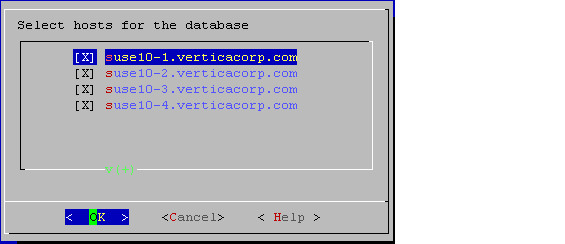

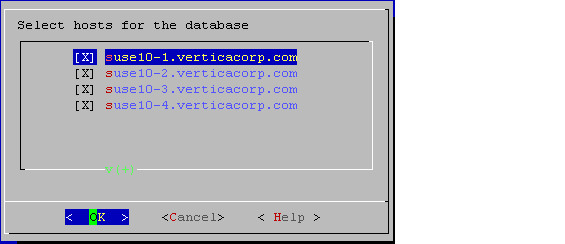

Select the hosts to include in the database from the list of hosts specified when Vertica was installed (install_vertica -s), and click OK.

-

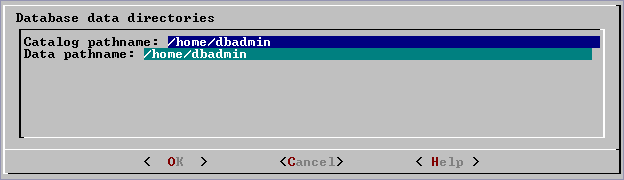

Specify the directories in which to store the data and catalog files, and click OK.

Note

Do not use a shared directory for more than one node. Data and catalog directories must be distinct for each node. Multiple nodes must not be allowed to write to the same data or catalog directory.

-

Catalog and data path names must contain only alphanumeric characters and cannot have leading spaces. Failure to comply with these restrictions results in database creation failure.

For example:

Catalog pathname: /home/dbadmin

Data Pathname: /home/dbadmin

-

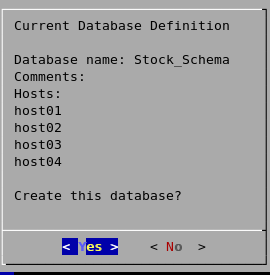

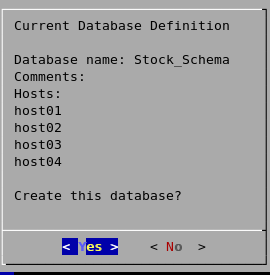

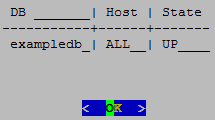

Review the Current Database Definition screen to verify that it represents the database you want to create, and then click Yes to proceed or No to modify the database definition.

-

If you click Yes, Vertica creates the database you defined and then displays a message to indicate that the database was successfully created.

Note

For databases created with 3 or more nodes, Vertica automatically sets K-safety to 1 to ensure that the database is fault tolerant in case a node fails. For more information, see Failure recovery in the Administrator's Guide and MARK_DESIGN_KSAFE.

-

Click OK to acknowledge the message.

3.1.9 - Create the logical schema

-

Connect to the database.

In the Administration Tools Main Menu, click Connect to Database and click OK.

See Connecting to the Database for details.

The vsql welcome script appears:

Welcome to vsql, the Vertica Analytic Database interactive terminal.

Type: \h or \? for help with vsql commands

\g or terminate with semicolon to execute query

\q to quit

=>

-

Run the logical schema script

Use the \i meta-command in vsql to run the SQL logical schema script that you prepared earlier.

-

Disconnect from the database

Use the \q meta-command in vsql to return to the Administration Tools.

3.1.10 - Perform a partial data load

Vertica recommends that for large tables, you perform a partial data load and then test your database before completing a full data load. This load should load a representative amount of data.

-

Load the small tables.

Load the small table data files using the SQL load scripts and data files you prepared earlier.

-

Partially load the large tables.

Load 10GB to 50GB of table data for each table using the SQL load scripts and data files that you prepared earlier.

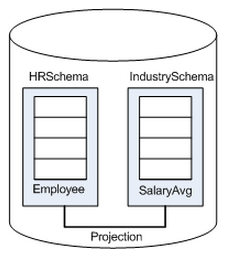

For more information about projections, see Projections.

3.1.11 - Test the database

Test the database to verify that it is running as expected.

Check queries for syntax errors and execution times.

-

Use the vsql \timing meta-command to enable the display of query execution time in milliseconds.

-

Execute the SQL sample query script that you prepared earlier.

-

Execute several ad hoc queries.

3.1.12 - Optimize query performance

Optimizing the database consists of optimizing for compression and tuning for queries. (See Creating a database design.)

To optimize the database, use the Database Designer to create and deploy a design for optimizing the database. See Using Database Designer to create a comprehensive design.

After you run the Database Designer, use the techniques described in Query optimization to improve the performance of certain types of queries.

Note

The database response time depends on factors such as type and size of the application query, database design, data size and data types stored, available computational power, and network bandwidth. Adding nodes to a database cluster does not necessarily improve the system response time for every query, especially if the response time is already short, for example, less than 10 seconds, or the response time is not hardware bound.

3.1.13 - Complete the data load

To complete the load:

-

Monitor system resource usage.

Continue to run the top, free, and df utilities and watch them while your load scripts are running (as described in Monitoring Linux resource usage). You can do this on any or all nodes in the cluster. Make sure that the system is not swapping excessively (watch kswapd in top) or running out of swap space (watch for a large amount of used swap space in free).

Note

Vertica requires a dedicated server. If your loader or other processes take up significant amounts of RAM, it can result in swapping.

-

Complete the large table loads.

Run the remainder of the large table load scripts.
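If you prefer to log swap usage during the load instead of watching free by hand, you can read the counters from /proc/meminfo on Linux. This is an alternative to the utilities named above, not a Vertica feature, and the function name in this Python sketch is hypothetical.

```python
def swap_used_fraction(meminfo_text: str) -> float:
    """Return used swap as a fraction of total swap, from /proc/meminfo text."""
    values = {}
    for line in meminfo_text.splitlines():
        key, sep, rest = line.partition(":")
        if sep and key in ("SwapTotal", "SwapFree"):
            values[key] = int(rest.split()[0])  # value is reported in kB
    total = values.get("SwapTotal", 0)
    if total == 0:
        return 0.0  # no swap configured
    free = values.get("SwapFree", total)
    return (total - free) / total
```

To use it, pass the contents of /proc/meminfo to the function at intervals while the load scripts run, and watch for a fraction that keeps rising.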

3.1.14 - Test the optimized database

Check query execution times to test your optimized design:

-

Use the vsql \timing meta-command to enable the display of query execution time in milliseconds.

Execute a SQL sample query script to test your schema and load scripts for errors.

Note

Include a sample of queries your users are likely to run against the database. If you don't have any real queries, just write simple SQL that collects counts on each of your tables. Alternatively, you can skip this step.

-

Execute several ad hoc queries

-

Run Administration tools and select Connect to Database.

-

Use the \i meta-command to execute the query script; for example:

vmartdb=> \i vmart_query_03.sql

customer_name | annual_income

------------------+---------------

James M. McNulty | 999979

Emily G. Vogel | 999998

(2 rows)

Time: First fetch (2 rows): 58.411 ms. All rows formatted: 58.448 ms

vmartdb=> \i vmart_query_06.sql

store_key | order_number | date_ordered

-----------+--------------+--------------

45 | 202416 | 2004-01-04

113 | 66017 | 2004-01-04

121 | 251417 | 2004-01-04

24 | 250295 | 2004-01-04

9 | 188567 | 2004-01-04

166 | 36008 | 2004-01-04

27 | 150241 | 2004-01-04

148 | 182207 | 2004-01-04

198 | 75716 | 2004-01-04

(9 rows)

Time: First fetch (9 rows): 25.342 ms. All rows formatted: 25.383 ms

Once the database is optimized, it should run queries efficiently. If you discover queries that you want to optimize, you can modify and update the design incrementally.
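To compare timings across many test queries, you can collect the Time: lines that vsql prints when \timing is enabled. The following is a minimal sketch that assumes the output format shown in the example above. The function name and the regular expression are illustrative, not part of any Vertica tool.

```python
import re

# Matches lines such as:
# Time: First fetch (2 rows): 58.411 ms. All rows formatted: 58.448 ms
TIMING = re.compile(
    r"Time: First fetch \((\d+) rows?\): ([\d.]+) ms\. "
    r"All rows formatted: ([\d.]+) ms"
)


def parse_timings(vsql_output):
    """Return a list of (rows, first_fetch_ms, all_formatted_ms) tuples."""
    results = []
    for match in TIMING.finditer(vsql_output):
        rows, first_fetch, formatted = match.groups()
        results.append((int(rows), float(first_fetch), float(formatted)))
    return results


sample = """Time: First fetch (2 rows): 58.411 ms. All rows formatted: 58.448 ms
Time: First fetch (9 rows): 25.342 ms. All rows formatted: 25.383 ms
"""
timings = parse_timings(sample)
slowest = max(timings, key=lambda t: t[2])
print("slowest query took", slowest[2], "ms")
```

Saving these figures before and after a design change gives a simple baseline for deciding whether an incremental design update helped.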

3.1.15 - Implement locales for international data sets

Vertica uses the ICU library for locale support; you must specify locale using the ICU locale syntax.

Locale specifies the user's language, country, and any special variant preferences, such as collation. Vertica uses locale to determine the behavior of certain string functions. Locale also determines the collation for various SQL commands that require ordering and comparison, such as aggregate GROUP BY and ORDER BY clauses, joins, and the analytic ORDER BY clause.

The default locale for a Vertica database is en_US@collation=binary (English US). You can define a new default locale that is used for all sessions on the database. You can also override the locale for individual sessions. However, projections are always collated using the default en_US@collation=binary collation, regardless of the session collation. Any locale-specific collation is applied at query time.

If you set the locale to null, Vertica sets the locale to en_US_POSIX. You can set the locale back to the default locale and collation by issuing the vsql meta-command \locale. For example:

=> set locale to '';

INFO 2567: Canonical locale: 'en_US_POSIX'

Standard collation: 'LEN'

English (United States, Computer)

SET

=> \locale en_US@collation=binary;

INFO 2567: Canonical locale: 'en_US'

Standard collation: 'LEN_KBINARY'

English (United States)

=> \locale

en_US@collation=binary;

You can set locale through ODBC, JDBC, and ADO.NET.

ICU locale support

Vertica uses the ICU library for locale support; you must specify locale using the ICU locale syntax. The locale used by the database session is not derived from the operating system (through the LANG variable), so Vertica recommends that you set the LANG for each node running vsql, as described in the next section.

While ICU library services can specify collation, currency, and calendar preferences, Vertica supports only the collation component. Any keywords not relating to collation are rejected. Projections are always collated using the en_US@collation=binary collation regardless of the session collation. Any locale-specific collation is applied at query time.

The SET DATESTYLE TO ... command provides some aspects of the calendar, but Vertica supports only dollars as currency.
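An ICU locale identifier has the form base@keyword=value;keyword=value. The following sketch splits an identifier into its parts and flags keywords outside an allowed set, so that a script can catch them before sending the identifier to the server. The function names and the allowed set (only collation) are assumptions for illustration. The full list of collation keywords that Vertica accepts is not reproduced here.

```python
def parse_locale(identifier):
    """Split 'base@key=value;key=value' into (base, {key: value})."""
    base, _, keyword_part = identifier.partition("@")
    keywords = {}
    for item in keyword_part.split(";"):
        if not item:
            continue  # tolerate a trailing semicolon
        key, sep, value = item.partition("=")
        if not sep:
            raise ValueError(f"malformed keyword: {item!r}")
        keywords[key.strip().lower()] = value.strip()
    return base, keywords


def unsupported_keywords(identifier, allowed=("collation",)):
    """Return keywords not in `allowed` (a hypothetical, minimal allow-list)."""
    _, keywords = parse_locale(identifier)
    return sorted(k for k in keywords if k not in allowed)


print(parse_locale("en_US@collation=binary"))
print(unsupported_keywords("de_DE@collation=phonebook;currency=EUR"))
```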

Changing DB locale for a session

This example sets the session locale to Thai.

-

At the operating-system level for each node running vsql, set the LANG variable to the locale language as follows:

export LANG=th_TH.UTF-8

Note

If setting LANG as shown does not work, the operating system support for locales may not be installed.

-

For each Vertica session (from ODBC/JDBC or vsql) set the language locale.

From vsql:

\locale th_TH

-

From ODBC/JDBC:

"SET LOCALE TO th_TH;"

-

In PuTTY (or another SSH terminal), change the settings as follows:

settings > window > translation > UTF-8

-

Click Apply and then click Save.

All data loaded must be in UTF-8 format, not an ISO format, as described in Delimited data. Character sets like ISO 8859-1 (Latin1), which are incompatible with UTF-8, are not supported, so functions like SUBSTRING do not work correctly for multibyte characters. As a result, locale settings do not work correctly with such data. If the translation setting ISO-8859-11:2001 (Latin/Thai) appears to work, the data was loaded incorrectly. To convert data correctly, use a utility program such as Linux iconv.
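The same conversion can be done in a script before loading. The following is a minimal sketch using Python's built-in codecs in place of iconv. The helper names are illustrative, and the source encoding (ISO 8859-11 here) is an assumption that you must know in advance, because it cannot be reliably detected from the bytes alone.

```python
def is_valid_utf8(raw):
    """Return True if the bytes decode cleanly as UTF-8."""
    try:
        raw.decode("utf-8")
        return True
    except UnicodeDecodeError:
        return False


def to_utf8(raw, source_encoding):
    """Re-encode bytes from a known legacy encoding to UTF-8."""
    return raw.decode(source_encoding).encode("utf-8")


# A Thai greeting, written with escapes so the script itself stays ASCII.
thai_text = "\u0e2a\u0e27\u0e31\u0e2a\u0e14\u0e35"

# Simulate a legacy export in ISO 8859-11 (Latin/Thai).
legacy_bytes = thai_text.encode("iso8859_11")
print("legacy bytes valid UTF-8?", is_valid_utf8(legacy_bytes))

utf8_bytes = to_utf8(legacy_bytes, "iso8859_11")
print("converted bytes valid UTF-8?", is_valid_utf8(utf8_bytes))
```

Checking files with a validity test like is_valid_utf8 before loading catches legacy-encoded data early, rather than after string functions return unexpected results.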

Note

The maximum length parameter for VARCHAR and CHAR data type refers to the number of octets (bytes) that can be stored in that field, not the number of characters. When using multi-byte UTF-8 characters, make sure to size fields to accommodate from 1 to 4 bytes per character, depending on the data.
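To see how character count and octet count diverge, the following sketch measures a few sample strings. The helper names are illustrative, and the sample values are arbitrary.

```python
def utf8_octets(text):
    """Number of bytes the text occupies when encoded as UTF-8."""
    return len(text.encode("utf-8"))


def fits(text, max_octets):
    """True if the text fits in a field declared with max_octets bytes."""
    return utf8_octets(text) <= max_octets


samples = [
    "Vogel",                                   # ASCII: 1 byte per character
    "Z\u00fcrich",                             # one 2-byte character
    "\u0e2a\u0e27\u0e31\u0e2a\u0e14\u0e35",    # Thai: 3 bytes per character
    "\U0001F600",                              # outside the BMP: 4 bytes
]
for s in samples:
    print(len(s), "characters,", utf8_octets(s), "octets")
```

For example, the six-character Thai string needs 18 octets, so it does not fit in a field declared with a maximum length of 6.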

3.1.15.1 - Specify the default locale for the database

After you start the database, the default locale configuration parameter, DefaultSessionLocale, sets the initial locale. You can override this value for individual sessions.

To set the locale for the database, use the configuration parameter as follows:

=> ALTER DATABASE DEFAULT SET DefaultSessionLocale = 'ICU-locale-identifier';

For example:

=> ALTER DATABASE DEFAULT SET DefaultSessionLocale = 'en_GB';

3.1.15.2 - Override the default locale for a session

You can override the default locale for the current session in two ways:

-

vsql command \locale. For example:

=> \locale en_GB

INFO 2567: Canonical locale: 'en_GB'

Standard collation: 'LEN'

English (United Kingdom)

-

SQL statement SET LOCALE. For example:

=> SET LOCALE TO en_GB;

INFO 2567: Canonical locale: 'en_GB'

Standard collation: 'LEN'

English (United Kingdom)

Both methods accept locale short and long forms. For example:

=> SET LOCALE TO LEN;

INFO 2567: Canonical locale: 'en'

Standard collation: 'LEN'

English

=> \locale LEN

INFO 2567: Canonical locale: 'en'

Standard collation: 'LEN'

English

3.1.15.3 - Server versus client locale settings

Vertica differentiates database server locale settings from client application locale settings.

The following sections describe best practices to ensure predictable results.

Server locale

The server session locale should be set as described in Specify the default locale for the database. If locales vary across different sessions, set the server locale at the start of each session from your client.

vsql client

-

If the database does not have a default session locale, set the server locale for the session to the desired locale.

-

The locale setting in the terminal emulator where the vsql client runs should be equivalent to the session locale setting on the server side (ICU locale). By doing so, the data is collated correctly on the server and displayed correctly on the client.

-

All input data for vsql should be in UTF-8, and all output data is encoded in UTF-8.

-

Vertica does not support non-UTF-8 encodings and associated locale values.

-

For instructions on setting locale and encoding, refer to your terminal emulator documentation.

ODBC clients

-

ODBC applications can be either in ANSI or Unicode mode. If the user application is Unicode, the encoding used by ODBC is UCS-2. If the user application is ANSI, the data must be in single-byte ASCII, which is compatible with UTF-8 used on the database server. The ODBC driver converts UCS-2 to UTF-8 when passing to the Vertica server and converts data sent by the Vertica server from UTF-8 to UCS-2.

-

If the user application is not already in UCS-2, the application must convert the input data to UCS-2, or unexpected results could occur. For example:

-

Non-UCS-2 data passed to ODBC APIs is interpreted as UCS-2. This can result in an invalid UCS-2 symbol being passed to the APIs, causing errors.

-

The symbol provided in the alternate encoding could be a valid UCS-2 symbol. If this occurs, incorrect data is inserted into the database.

-

If the database does not have a default session locale, ODBC applications should set the desired server session locale using SQLSetConnectAttr (if different from the database-wide setting). By doing so, you get the expected collation and string function behavior on the server.
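The two failure modes described above, an error or silently incorrect data, can be reproduced without a database. The following sketch decodes bytes as little-endian UTF-16, which is identical to UCS-2 for characters in the Basic Multilingual Plane. It only simulates how a Unicode-mode API might read a buffer. It does not call or model the Vertica ODBC driver, and the function name is illustrative.

```python
def interpret_as_utf16(raw):
    """Decode bytes as little-endian UTF-16, as a Unicode-mode API might."""
    return raw.decode("utf-16-le")


# Correctly prepared input: the application encoded its text as UTF-16.
good = "caf\u00e9".encode("utf-16-le")
print(interpret_as_utf16(good))

# Failure mode 1: UTF-8 bytes that do not form valid UTF-16 raise an error.
# "cafe" with an accented e is 5 bytes in UTF-8, an odd count.
odd = "caf\u00e9".encode("utf-8")
try:
    interpret_as_utf16(odd)
    outcome = "decoded"
except UnicodeDecodeError:
    outcome = "error"
print("odd-length UTF-8 buffer:", outcome)

# Failure mode 2: UTF-8 bytes that happen to form valid UTF-16 code units
# decode without error, but to different characters than the ones intended.
even = "caf\u00e9!".encode("utf-8")  # 6 bytes
garbled = interpret_as_utf16(even)
print("silently garbled:", repr(garbled))
```

The second case is the more dangerous one, because nothing fails at insert time and the incorrect data is discovered only when it is read back.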

JDBC and ADO.NET clients

-

JDBC and ADO.NET applications use a UTF-16 character set encoding and are responsible for converting any non-UTF-16 encoded data to UTF-16. The same cautions apply as for ODBC if this encoding is violated.

-

The JDBC and ADO.NET drivers convert UTF-16 data to UTF-8 when passing to the Vertica server and convert data sent by Vertica server from UTF-8 to UTF-16.

-

If there is no default session locale at the database level, JDBC and ADO.NET applications should set the correct server session locale by executing the SET LOCALE TO command in order to get the expected collation and string functions behavior on the server. For more information, see SET LOCALE.

3.1.16 - Using time zones with Vertica

Vertica uses the public-domain tz database (time zone database), which contains code and data that represent the history of local time for locations around the globe. This database organizes time zone and daylight saving time data by partitioning the world into timezones whose clocks all agree on timestamps that are later than the POSIX Epoch (1970-01-01 00:00:00 UTC). Each timezone has a unique identifier. Identifiers typically follow the convention area/location, where area is a continent or ocean, and location is a specific location within the area—for example, Africa/Cairo, America/New_York, and Pacific/Honolulu.

Important

IANA acknowledges that 1970 is an arbitrary cutoff. It notes the problems of moving the cutoff earlier "due to the wide variety of local practices before computer timekeeping became prevalent." IANA's own description of the tz database suggests that users should regard historical dates and times, especially those that predate the POSIX epoch date, with a healthy measure of skepticism. For details, see Theory and pragmatics of the tz code and data.

Vertica uses the TZ environment variable (if set) on each node for the default current time zone. Otherwise, Vertica uses the operating system time zone.

The TZ variable can be set by the operating system during login (see /etc/profile, /etc/profile.d, or /etc/bashrc) or by the user in .profile, .bashrc, or .bash_profile. TZ must be set to the same value on each node when you start Vertica.

The following command returns the current time zone for your database:

=> SHOW TIMEZONE;

name | setting

----------+------------------

timezone | America/New_York

(1 row)

You can also set the time zone for a single session with SET TIME ZONE.

Conversion and storage of date/time data

There is no database default time zone. TIMESTAMPTZ (TIMESTAMP WITH TIMEZONE) data is converted from the current local time and stored as GMT/UTC (Greenwich Mean Time/Coordinated Universal Time).

When TIMESTAMPTZ data is used, data is converted back to the current local time zone, which might be different from the local time zone where the data was stored. This conversion takes into account daylight saving time (summer time), depending on the year and date to determine when daylight saving time begins and ends.
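The store-as-UTC, display-in-session-zone behavior can be illustrated outside the database. The following is a minimal sketch that uses fixed UTC offsets for simplicity, so it does not model daylight saving time. Real conversions rely on tz database rules. The function names and the two offsets are illustrative assumptions.

```python
from datetime import datetime, timedelta, timezone

# Fixed offsets chosen for illustration only (roughly New York and Cairo
# in winter). Real time zones need tz database rules to handle DST.
UTC_MINUS_5 = timezone(timedelta(hours=-5))
UTC_PLUS_2 = timezone(timedelta(hours=2))


def store(local_value):
    """Convert a zone-aware local timestamp to UTC for storage."""
    return local_value.astimezone(timezone.utc)


def display(stored_utc, session_zone):
    """Convert a stored UTC timestamp to the reader's session time zone."""
    return stored_utc.astimezone(session_zone)


entered = datetime(2024, 1, 15, 9, 30, tzinfo=UTC_MINUS_5)
stored = store(entered)
shown = display(stored, UTC_PLUS_2)

print("entered:", entered.isoformat())
print("stored: ", stored.isoformat())
print("shown:  ", shown.isoformat())
```

All three values represent the same instant. Only the wall-clock rendering differs between the session that wrote the value and the session that reads it.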