This is the multi-page printable view of this section.

Click here to print.

Return to the regular view of this page.

Developing user-defined extensions (UDxs)

The primary strengths of UDxs are:.

User-defined extensions (UDxs) are functions contained in external libraries that are developed in C++, Python, Java, or R using the Vertica SDK. The external libraries are defined in the Vertica catalog using the CREATE LIBRARY statement. They are best suited for analytic operations that are difficult to perform in SQL, or that need to be performed frequently enough that their speed is a major concern.

The primary strengths of UDxs are:

-

They can be used anywhere an internal function can be used.

-

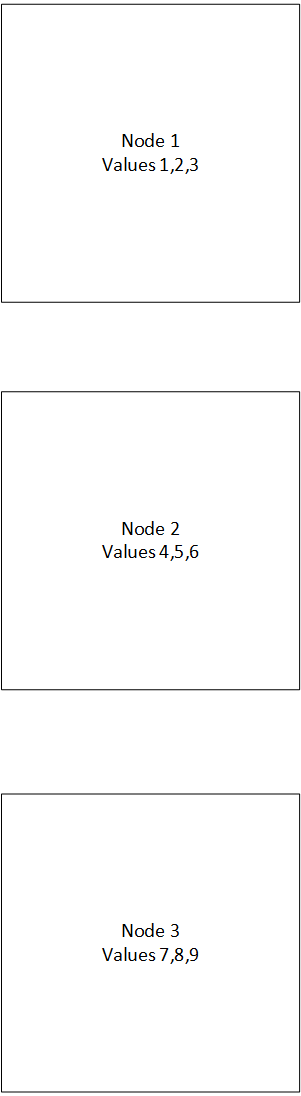

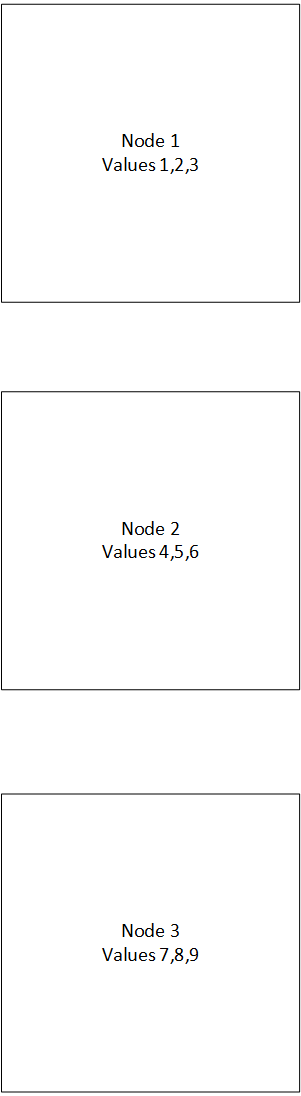

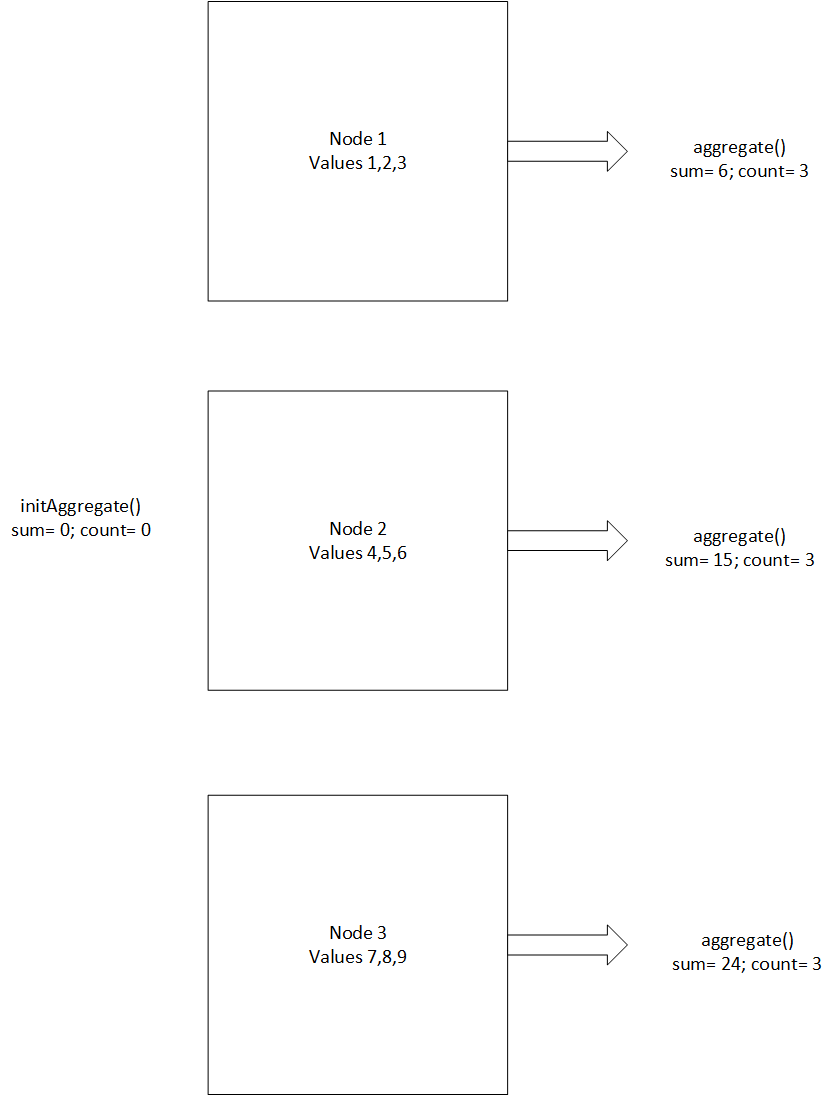

They take full advantage of Vertica's distributed computing features. The extensions usually execute in parallel on each node in the cluster.

-

They are distributed to all nodes by Vertica. You only need to copy the library to the initiator node.

-

All of the complicated aspects of developing a distributed piece of analytic code are handled for you by Vertica. Your main programming task is to read in data, process it, and then write it out using the Vertica SDK APIs.

There are a few things to keep in mind about developing UDxs:

-

UDxs can be developed in the programming languages C++, Python, Java, and R. (Not all UDx types support all languages.)

-

UDxs written in Java always run in fenced mode, because the Java Virtual Machine that executes Java programs cannot run directly within the Vertica process.

-

UDxs written in Python and R always run in fenced mode.

-

UDxs developed in C++ have the option of running in unfenced mode, which means they load and run directly in the Vertica database process. This option provides the lowest overhead and highest speed. However, any bugs in the UDx's code can cause database instability. You must thoroughly test any UDxs you intend to run in unfenced mode before deploying them in a live environment. Consider whether the performance boost of running a C++ UDx unfenced is worth the potential database instability that a buggy UDx can cause.

-

Because a UDx runs on the Vertica cluster, it can take processor time and memory away from the database processes. A UDx that consumes large amounts of computing resources can negatively impact database performance.

Types of UDxs

Vertica supports five types of user-defined extensions:

-

User-defined scalar functions (UDSFs) take in a single row of data and return a single value. These functions can be used anywhere a native function can be used, except CREATE TABLE BY PARTITION and SEGMENTED BY expressions. UDSFs can be developed in C++, Python, Java, and R.

-

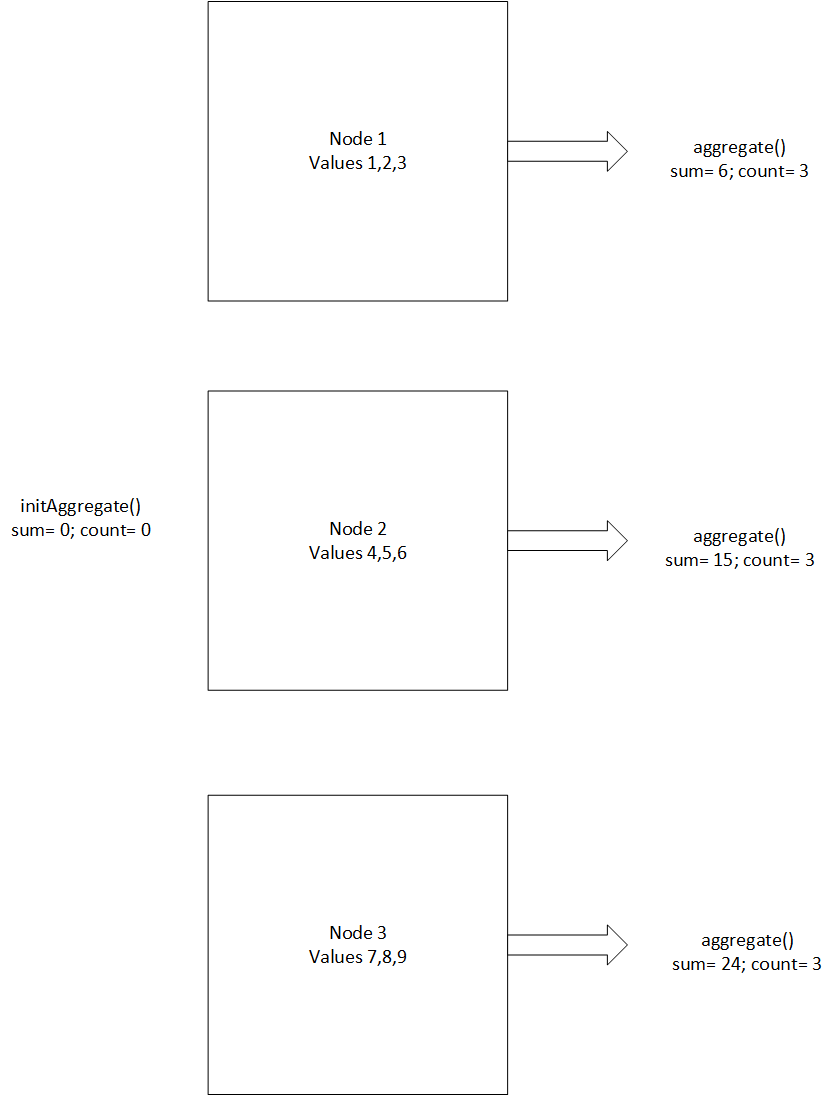

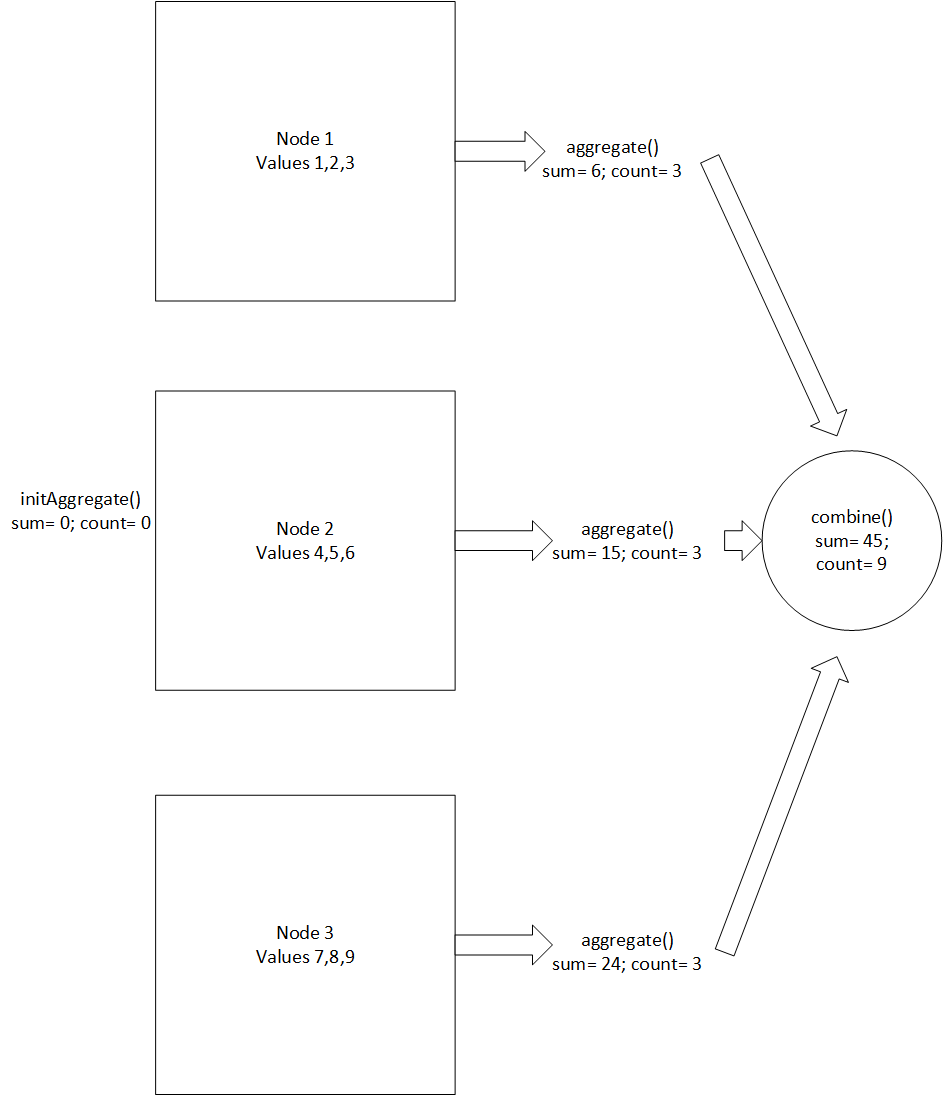

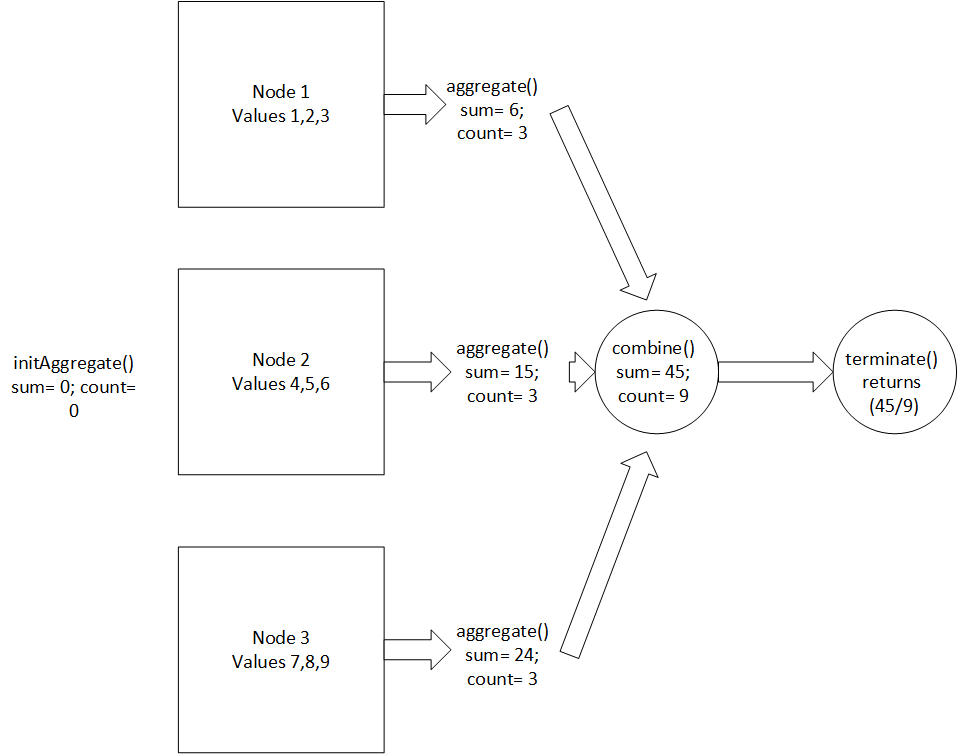

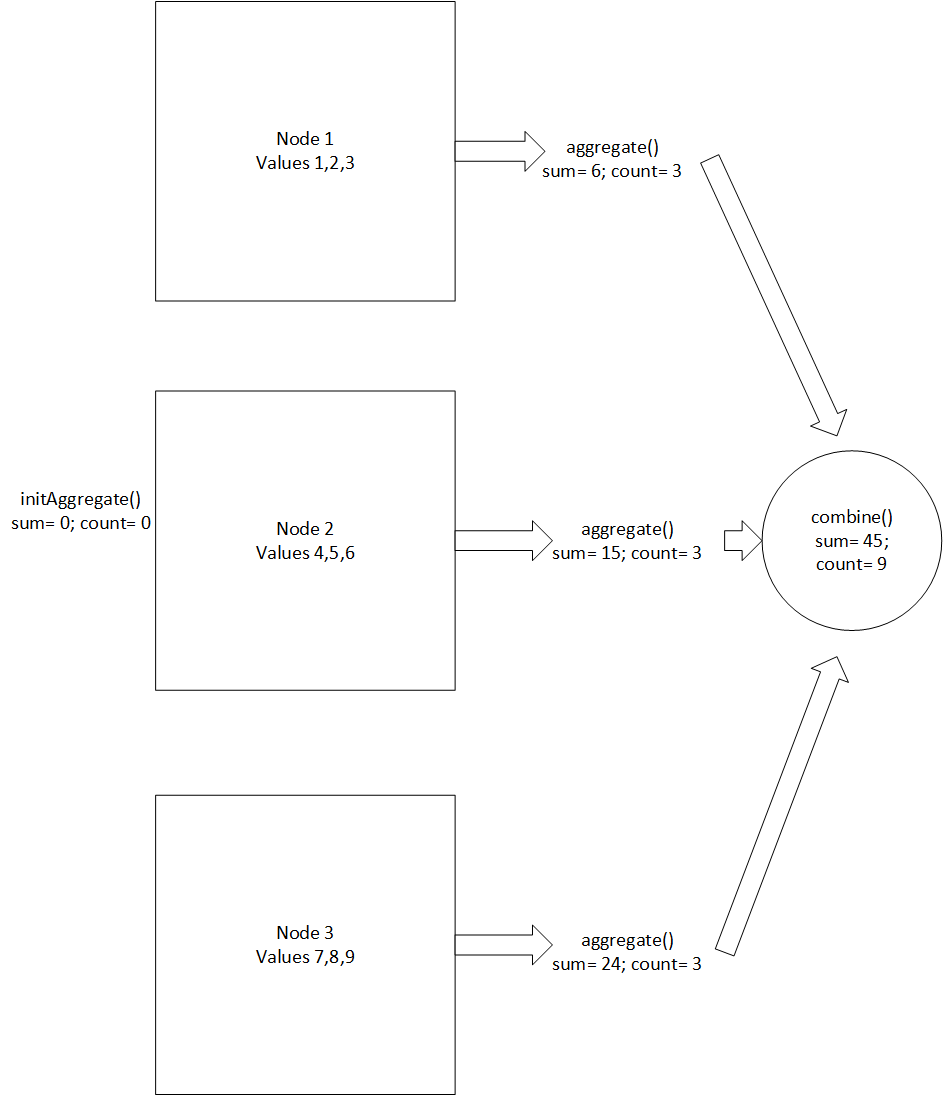

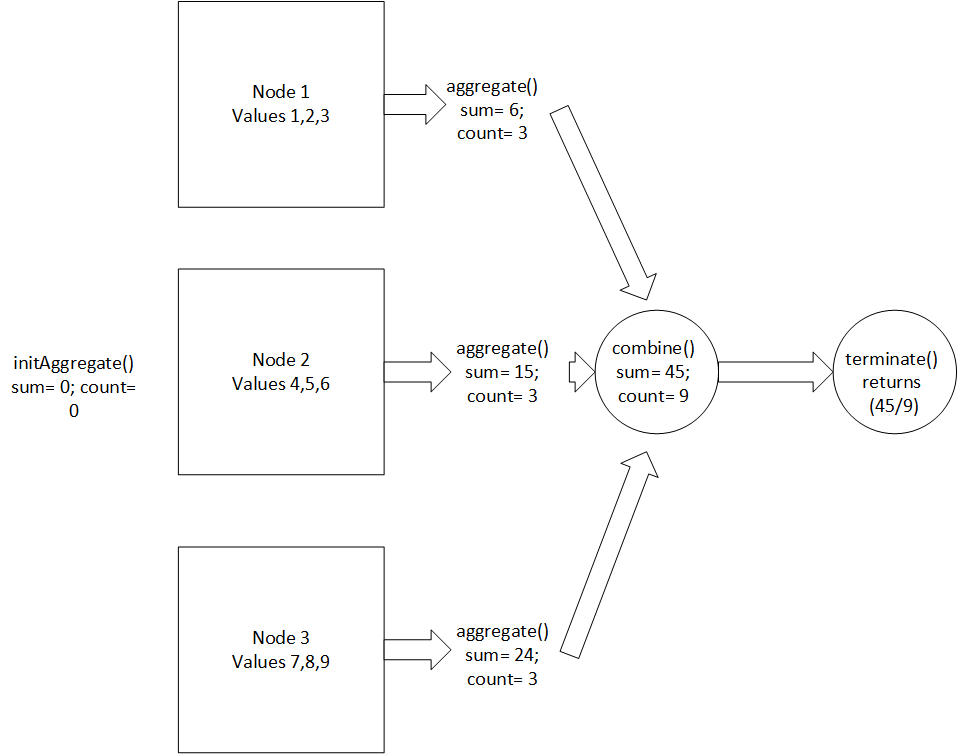

User-defined aggregate functions (UDAF) allow you to create custom Aggregate functions specific to your needs. They read one column of data, and return one output column. UDAFs can be developed in C++.

-

User-defined analytic functions (UDAnF) are similar to UDSFs, in that they read a row of data and return a single row. However, the function can read input rows independently of outputting rows, so that the output values can be calculated over several input rows. The function can be used with the query's OVER() clause to partition rows. UDAnFs can be developed in C++ and Java.

-

User-defined transform functions (UDTFs) operate on table partitions (as specified by the query's OVER() clause) and return zero or more rows of data. The data they return can be an entirely new table, unrelated to the schema of the input table, with its own ordering and segmentation expressions. They can only be used in the SELECT list of a query. UDTFs can be developed in C++, Python, Java, and R.

To optimize query performance, you can use live aggregate projections to pre-aggregate the data that a UDTF returns. For more information, see Pre-aggregating UDTF results.

-

User-defined load allows you to create custom sources, filters, and parsers to load data. These extensions can be used in COPY statements. UDLs can be developed C++, Java and Python.

While each UDx type has a unique base class, developing them is similar in many ways. Different UDx types can also share the same library.

Structure

Each UDx type consists of two primary classes. The main class does the actual work (a transformation, an aggregation, and so on). The class usually has at least three methods: one to set up, one to tear down (release reserved resources), and one to do the work. Sometimes additional methods are defined.

The main processing method receives an instance of the ServerInterface class as an argument. This object is used by the underlying Vertica SDK code to make calls back into the Vertica process, for example to allocate memory. You can use this class to write to the server log during UDx execution.

The second class is a singleton factory. It defines one method that produces instances of the first class, and might define other methods to manage parameters.

When implementing a UDx you must subclass both classes.

Conventions

The C++, Python, and Java APIs are nearly identical. Where possible, this documentation describes these interfaces without respect to language. Documentation specific to C++, Python, or Java is covered in language-specific sections.

Because some documentation is language-independent, it is not always possible to use ideal, language-based terminology. This documentation uses the term "method" to refer to a Java method or a C++ member function.

See also

Loading UDxs

1 - Developing with the Vertica SDK

Before you can write a user-defined extension you must set up a development environment.

Before you can write a user-defined extension you must set up a development environment. After you do so, a good test is to download, build, and run the published examples.

In addition to covering how to set up your environment, this section covers general information about working with the Vertica SDK, including language-specific considerations.

1.1 - Setting up a development environment

Before you start developing your UDx, you need to configure your development and test environments.

Before you start developing your UDx, you need to configure your development and test environments. Development and test environments must use the same operating system and Vertica version as the production environment.

For additional language-specific requirements, see the following topics:

Development environment options

The language that you use to develop your UDx determines the setup options and requirements for your development environment. C++ developers can use the C++ UDx container, and all developers can use a non-production Vertica environment.

C++ UDx container

C++ developers can develop with the C++ UDx container. The UDx-container GitHub repository provides the tools to build a container that packages the binaries, libraries, and compilers required to develop C++ Vertica extensions. The C++ UDx container has the following build options:

For requirement, build, and test details, see the repository README.

Non-production Vertica environments

You can use a node in a non-production Vertica database or another machine that runs the same operating system and Vertica version as your production environment. For specific requirements and dependencies, refer to Operating System Requirements and Language Requirements.

Test environment options

To test your UDx, you need access to a non-production Vertica database. You have the following options:

- Install a single-node Vertica database on your development machine.

- Download and build a containerized test environment.

Containerized test environments

Vertica provides the following containerized options to simplify your test environment setup:

Operating system requirements

Develop your UDx code on the same Linux platform that you use for your production Vertica database cluster. Centos- and Debian-based operating systems each require that you download additional packages.

CentOS-based operating systems

Installations on the following CentOS-based operating systems require the devtoolset-7 package:

-

CentOS

-

Red Hat Enterprise Linux

-

Oracle Enterprise Linux

Consult the documentation for your operating system for the specific installation command.

Debian-based operating systems

Installations on the following Debian-based operating systems require the GCC package version 7 or later:

Note

Vertica has not tested UDx builds that use GCC versions later than GCC 8.

-

Debian

-

Ubuntu

-

SUSE

-

OpenSUSE

Consult the documentation for your operating system for the specific installation command.

1.2 - Downloading and running UDx example code

You can download all of the examples shown in this documentation, and many more, from the Vertica GitHub repository.

You can download all of the examples shown in this documentation, and many more, from the Vertica GitHub repository. This repository includes examples of all types of UDxs.

You can download the examples in either of two ways:

-

Download the ZIP file. Extract the contents of the file into a directory.

-

Clone the repository. Using a terminal window, run the following command:

$ git clone https://github.com/vertica/UDx-Examples.git

The repository includes a makefile that you can use to compile the C++ and Java examples. It also includes .sql files that load and use the examples. See the README file for instructions on compiling and running the examples. To compile the examples you will need g++ or a JDK and make. See Setting up a development environment for related information.

Running the examples not only helps you understand how a UDx works, but also helps you ensure your development environment is properly set up to compile UDx libraries.

See also

1.3 - C++ SDK

The Vertica SDK supports writing both fenced and unfenced UDxs in C++ 11.

The Vertica SDK supports writing both fenced and unfenced UDxs in C++ 11. You can download, compile, and run the examples; see Downloading and running UDx example code. Running the examples is a good way to verify that your development environment has all needed libraries.

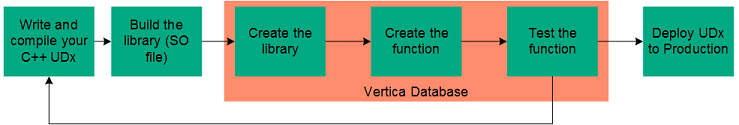

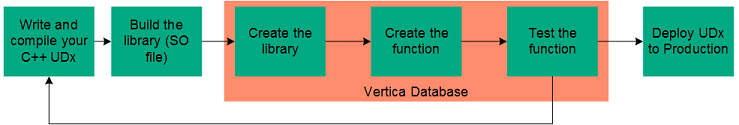

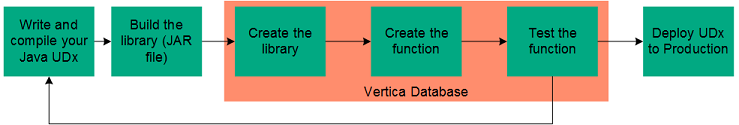

If you do not have access to a Vertica test environment, you can install Vertica on your development machine and run a single node. Each time you rebuild your UDx library, you need to re-install it into Vertica. The following diagram illustrates the typical development cycle.

This section covers C++-specific topics that apply to all UDx types. For information that applies to all languages, see Arguments and return values, UDx parameters, Errors, warnings, and logging, Handling cancel requests and the sections for specific UDx types. For full API documentation, see the C++ SDK Documentation.

1.3.1 - Setting up the C++ SDK

The Vertica C++ Software Development Kit (SDK) is distributed as part of the server installation.

The Vertica C++ Software Development Kit (SDK) is distributed as part of the server installation. It contains the source and header files you need to create your UDx library. For examples that you can compile and run, see Downloading and running UDx example code.

Requirements

At a minimum, install the following on your development machine:

-

devtoolset-7 package (CentOS) or GCC package (Debian), including GCC version 7 or later and an up-to-date libstdc++ package.

Note

Vertica has not tested UDx builds that use GCC versions later than GCC 8.

-

g++ and its associated toolchain, such as ld. Some Linux distributions package g++ separately from GCC.

-

A copy of the Vertica SDK.

Note

The Vertica binaries are compiled using the default version of g++ installed on the supported Linux platforms.

You must compile with a std flag value of c++11 or later.

The following optional software packages can simplify development:

-

make, or some other build-management tool.

-

gdb, or some other debugger.

-

Valgrind, or similar tools that detect memory leaks.

If you want to use any third-party libraries, such as statistical analysis libraries, you need to install them on your development machine. If you do not statically link these libraries into your UDx library, you must install them on every node in the cluster. See Compiling your C++ library for details.

SDK files

The SDK files are located in the sdk subdirectory under the root Vertica server directory (usually,

/opt/vertica/sdk). This directory contains a subdirectory, include, which contains the headers and source files needed to compile UDx libraries.

There are two files in the include directory you need when compiling your UDx:

-

Vertica.h is the main header file for the SDK. Your UDx code needs to include this file in order to find the SDK's definitions.

-

Vertica.cpp contains support code that needs to be compiled into the UDx library.

Much of the Vertica SDK API is defined in the VerticaUDx.h header file (which is included by the Vertica.h file). If you're curious, you might want to review the contents of this file in addition to reading the API documentation.

Finding the current SDK version

You must develop your UDx using the same SDK version as the database in which you plan to use it. To display the SDK version currently installed on your system, run the following command in vsql:

=> SELECT sdk_version();

Running the examples

You can download the examples from the GitHub repository (see Downloading and running UDx example code). Compiling and running the examples helps you to ensure that your development environment is properly set up.

To compile all of the examples, including the Java examples, issue the following command in the Java-and-C++ directory under the examples directory:

$ make

Note

To compile the examples, you must have a g++ development environment installed. To install a g++ development environment on Red Hat systems, run yum install gcc gcc-c++ make.

1.3.2 - Compiling your C++ library

GNU g++ is the only supported compiler for compiling UDx libraries.

GNU g++ is the only supported compiler for compiling UDx libraries. Always compile your UDx code on the same version of Linux that you use on your Vertica cluster.

When compiling your library, you must always:

-

Compile with a -std flag value of c++11 or later.

-

Pass the -shared and -fPIC flags to the linker. The simplest method is to just pass these flags to g++ when you compile and link your library.

-

Use the -Wno-unused-value flag to suppress warnings when macro arguments are not used. If you do not use this flag, you may get "left-hand operand of comma has no effect" warnings.

-

Compile sdk/include/Vertica.cpp and link it into your library. This file contains support routines that help your UDx communicate with Vertica. The easiest way to do this is to include it in the g++ command to compile your library. Vertica supplies this file as C++ source rather than a library to limit library compatibility issues.

-

Add the Vertica SDK include directory in the include search path using the g++ -I flag.

The SDK examples include a working makefile. See Downloading and running UDx example code.

Example of compiling a UDx

The following command compiles a UDx contained in a single source file named MyUDx.cpp into a shared library named MyUDx.so:

g++ -I /opt/vertica/sdk/include -Wall -shared -Wno-unused-value \

-fPIC -o MyUDx.so MyUDx.cpp /opt/vertica/sdk/include/Vertica.cpp

Important

Vertica only supports UDx development on 64-bit architectures.

After you debug your UDx, you are ready to deploy it. Recompile your UDx using the -O3 flag to enable compiler optimization.

You can add additional source files to your library by adding them to the command line. You can also compile them separately and then link them together.

Tip

The examples subdirectory in the Vertica SDK directory contains a make file that you can use as starting point for your own UDx project.

Handling external libraries

You must link your UDx library to any supporting libraries that your UDx code relies on.These libraries might be either ones you developed or others provided by third parties. You have two options for linking:

-

Statically link the support libraries into your UDx. The benefit of this method is that your UDx library does not rely on external files. Having a single UDx library file simplifies deployment because you just transfer a single file to your Vertica cluster. This method's main drawback is that it increases the size of your UDx library file.

-

Dynamically link the library to your UDx. You must sometimes use dynamic linking if a third-party library does not allow static linking. In this case, you must copy the libraries to your Vertica cluster in addition to your UDx library file.

1.3.3 - Adding metadata to C++ libraries

For example, the following code demonstrates adding metadata to the Add2Ints example (see C++ Example: Add2Ints).

You can add metadata, such as author name, the version of the library, a description of your library, and so on to your library. This metadata lets you track the version of your function that is deployed on a Vertica Analytic Database cluster and lets third-party users of your function know who created the function. Your library's metadata appears in the USER_LIBRARIES system table after your library has been loaded into the Vertica Analytic Database catalog.

You declare the metadata for your library by calling the RegisterLibrary() function in one of the source files for your UDx. If there is more than one function call in the source files for your UDx, whichever gets interpreted last as Vertica Analytic Database loads the library is used to determine the library's metadata.

The RegisterLibrary() function takes eight string parameters:

RegisterLibrary(author,

library_build_tag,

library_version,

library_sdk_version,

source_url,

description,

licenses_required,

signature);

-

author contains whatever name you want associated with the creation of the library (your own name or your company's name for example).

-

library_build_tag is a string you want to use to represent the specific build of the library (for example, the SVN revision number or a timestamp of when the library was compiled). This is useful for tracking instances of your library as you are developing them.

-

library_version is the version of your library. You can use whatever numbering or naming scheme you want.

-

library_sdk_version is the version of the Vertica Analytic Database SDK Library for which you've compiled the library.

Note

This field isn't used to determine whether a library is compatible with a version of the Vertica Analytic Database server. The version of the Vertica Analytic Database SDK you use to compile your library is embedded in the library when you compile it. It is this information that Vertica Analytic Database server uses to determine if your library is compatible with it.

-

source_url is a URL where users of your function can find more information about it. This can be your company's website, the GitHub page hosting your library's source code, or whatever site you like.

-

description is a concise description of your library.

-

licenses_required is a placeholder for licensing information. You must pass an empty string for this value.

-

signature is a placeholder for a signature that will authenticate your library. You must pass an empty string for this value.

For example, the following code demonstrates adding metadata to the Add2Ints example (see C++ example: Add2Ints).

// Register the factory with Vertica

RegisterFactory(Add2IntsFactory);

// Register the library's metadata.

RegisterLibrary("Whizzo Analytics Ltd.",

"1234",

"2.0",

"7.0.0",

"http://www.example.com/add2ints",

"Add 2 Integer Library",

"",

"");

Loading the library and querying the USER_LIBRARIES system table shows the metadata supplied in the call to RegisterLibrary():

=> CREATE LIBRARY add2intslib AS '/home/dbadmin/add2ints.so';

CREATE LIBRARY

=> \x

Expanded display is on.

=> SELECT * FROM USER_LIBRARIES WHERE lib_name = 'add2intslib';

-[ RECORD 1 ]-----+----------------------------------------

schema_name | public

lib_name | add2intslib

lib_oid | 45035996273869808

author | Whizzo Analytics Ltd.

owner_id | 45035996273704962

lib_file_name | public_add2intslib_45035996273869808.so

md5_sum | 732c9e145d447c8ac6e7304313d3b8a0

sdk_version | v7.0.0-20131105

revision | 125200

lib_build_tag | 1234

lib_version | 2.0

lib_sdk_version | 7.0.0

source_url | http://www.example.com/add2ints

description | Add 2 Integer Library

licenses_required |

signature |

1.3.4 - C++ SDK data types

The Vertica SDK has typedefs and classes for representing Vertica data types within your UDx code.

The Vertica SDK has typedefs and classes for representing Vertica data types within your UDx code. Using these typedefs ensures data type compatibility between the data your UDx processes and generates and the Vertica database. The following table describes some of the typedefs available. Consult the C++ SDK Documentation for a complete list, as well as lists of helper functions to convert and manipulate these data types.

For information about SDK support for complex data types, see Complex Types as Arguments and Return Values.

|

Type Definition |

Description |

|

Interval |

A Vertica interval |

|

IntervalYM |

A Vertica year-to-month interval. |

|

Timestamp |

A Vertica timestamp |

|

vint |

A standard Vertica 64-bit integer |

|

vbool |

A Boolean value in Vertica |

|

vbool_null |

A null value for a Boolean data types |

|

vfloat |

A Vertica floating point value |

|

VString |

String data types (such as varchar and char)

Note: Do not use a VString object to hold an intermediate result. Use a std::string or char[] instead.

|

|

VNumeric |

Fixed-point data types from Vertica |

|

VUuid |

A Vertica universally unique identifier |

Notes

-

When making some Vertica SDK API calls (such as VerticaType::getNumericLength()) on objects, make sure they have the correct data type. To minimize overhead and improve performance, most of the APIs do not check the data types of the objects on which they are called. Calling a function on an incorrect data type can result in an error.

-

You cannot create instances of VString or VNumeric yourself. You can manipulate the values of existing objects of these classes that Vertica passes to your UDx, and extract values from them. However, only Vertica can instantiate these classes.

1.3.5 - Resource use for C++ UDxs

Your UDxs consume at least a small amount of memory by instantiating classes and creating local variables.

Your UDxs consume at least a small amount of memory by instantiating classes and creating local variables. This basic memory usage by UDxs is small enough that you do not need to be concerned about it.

If your UDx needs to allocate more than one or two megabytes of memory for data structures, or requires access to additional resources such as files, you must inform Vertica about its resource use. Vertica can then ensure that the resources your UDx requires are available before running a query that uses it. Even moderate memory use (10MB per invocation of a UDx, for example) can become an issue if there are many simultaneous queries that call it.

Note

If your UDx allocates its own memory, you must make

absolutely sure it properly frees it. Failing to free even a single byte of allocated memory can have significant consequences at scale. Instead of having your code allocate its own memory, you should use the C++

vt_alloc macro, which uses Vertica's own memory manager to allocate and track memory. This memory is guaranteed to be properly disposed of when your UDx completes execution. See

Allocating resources for UDxs for more information.

1.3.5.1 - Allocating resources for UDxs

You have two options for allocating memory and file handles for your user-defined extensions (UDxs):.

You have two options for allocating memory and file handles for your user-defined extensions (UDxs):

-

Use Vertica SDK macros to allocate resources. This is the best method, since it uses Vertica's own resource manager, and guarantees that resources used by your UDx are reclaimed. See Allocating resources with the SDK macros.

-

While not the recommended option, you can allocate resources in your UDxs yourself using standard C++ methods (instantiating objects using new, allocating memory blocks using malloc(), etc.). You must manually free these resources before your UDx exits.

Note

You must be extremely careful if you choose to allocate your own resources in your UDx. Failing to free resources properly will have significant negative impact, especially if your UDx is running in unfenced mode.

Whichever method you choose, you usually allocate resources in a function named setup() in your UDx class. This function is called after your UDx function object is instantiated, but before Vertica calls it to process data.

If you allocate memory on your own in the setup() function, you must free it in a corresponding function named destroy(). This function is called after your UDx has performed all of its processing. This function is also called if your UDx returns an error (see Handling errors).

Note

Always use the setup() and destroy() functions to allocate and free resources instead of your own constructors and destructors. The memory for your UDx object is allocated from one of Vertica's own memory pools. Vertica always calls your UDx's destroy() function before it deallocates the object's memory. There is no guarantee that your UDx's destructor is will be called before the object is deallocated. Using the destroy() function ensures that your UDx has a chance to free its allocated resources before it is destroyed.

The following code fragment demonstrates allocating and freeing memory using a setup() and destroy() function.

class MemoryAllocationExample : public ScalarFunction

{

public:

uint64* myarray;

// Called before running the UDF to allocate memory used throughout

// the entire UDF processing.

virtual void setup(ServerInterface &srvInterface, const SizedColumnTypes

&argTypes)

{

try

{

// Allocate an array. This memory is directly allocated, rather than

// letting Vertica do it. Remember to properly calculate the amount

// of memory you need based on the data type you are allocating.

// This example divides 500MB by 8, since that's the number of

// bytes in a 64-bit unsigned integer.

myarray = new uint64[1024 * 1024 * 500 / 8];

}

catch (std::bad_alloc &ba)

{

// Always check for exceptions caused by failed memory

// allocations.

vt_report_error(1, "Couldn't allocate memory :[%s]", ba.what());

}

}

// Called after the UDF has processed all of its information. Use to free

// any allocated resources.

virtual void destroy(ServerInterface &srvInterface, const SizedColumnTypes

&argTypes)

{

// srvInterface.log("RowNumber processed %d records", *count_ptr);

try

{

// Properly dispose of the allocated memory.

delete[] myarray;

}

catch (std::bad_alloc &ba)

{

// Always check for exceptions caused by failed memory

// allocations.

vt_report_error(1, "Couldn't free memory :[%s]", ba.what());

}

}

1.3.5.2 - Allocating resources with the SDK macros

The Vertica SDK provides three macros to allocate memory:.

The Vertica SDK provides three macros to allocate memory:

-

vt_alloc allocates a block of memory to fit a specific data type (vint, struct, etc.).

-

vt_allocArray allocates a block of memory to hold an array of a specific data type.

-

vt_allocSize allocates an arbitrarily-sized block of memory.

All of these macros allocate their memory from memory pools managed by Vertica. The main benefit of allowing Vertica to manage your UDx's memory is that the memory is automatically reclaimed after your UDx has finished. This ensures there is no memory leaks in your UDx.

Because Vertica frees this memory automatically, do not attempt to free any of the memory you allocate through any of these macros. Attempting to free this memory results in run-time errors.

1.3.5.3 - Informing Vertica of resource requirements

When you run your UDx in fenced mode, Vertica monitors its use of memory and file handles.

When you run your UDx in fenced mode, Vertica monitors its use of memory and file handles. If your UDx uses more than a few megabytes of memory or any file handles, it should tell Vertica about its resource requirements. Knowing the resource requirements of your UDx allows Vertica to determine whether it can run the UDx immediately or needs to queue the request until enough resources become available to run it.

Determining how much memory your UDx requires can be difficult in some cases. For example, if your UDx extracts unique data elements from a data set, there is potentially no bound on the number of data items. In this case, a useful technique is to run your UDx in a test environment and monitor its memory use on a node as it handles several differently-sized queries, then extrapolate its memory use based on the worst-case scenario it may face in your production environment. In all cases, it's usually a good idea to add a safety margin to the amount of memory you tell Vertica your UDx uses.

Note

The information on your UDx's resource needs that you pass to Vertica is used when planning the query execution. There is no way to change the amount of resources your UDx requests from Vertica while the UDx is actually running.

Your UDx informs Vertica of its resource needs by implementing the getPerInstanceResources() function in its factory class (see Vertica::UDXFactory::getPerInstanceResources() in the SDK documentation). If your UDx's factory class implements this function, Vertica calls it to determine the resources your UDx requires.

The getPerInstanceResources() function receives an instance of the Vertica::VResources struct. This struct contains fields that set the amount of memory and the number of file handles your UDx needs. By default, the Vertica server allocates zero bytes of memory and 100 file handles for each instance of your UDx.

Your implementation of the getPerInstanceResources() function sets the fields in the VResources struct based on the maximum resources your UDx may consume for each instance of the UDx function. So, if your UDx's processBlock() function creates a data structure that uses at most 100MB of memory, your UDx must set the VResources.scratchMemory field to at least 104857600 (the number of bytes in 100MB). Leave yourself a safety margin by increasing the number beyond what your UDx should normally consume. In this example, allocating 115000000 bytes (just under 110MB) is a good idea.

The following ScalarFunctionFactory class demonstrates calling getPerInstanceResources() to inform Vertica about the memory requirements of the MemoryAllocationExample class shown in Allocating resources for UDxs. It tells Vertica that the UDSF requires 510MB of memory (which is a bit more than the UDSF actually allocates, to be on the safe size).

class MemoryAllocationExampleFactory : public ScalarFunctionFactory

{

virtual Vertica::ScalarFunction *createScalarFunction(Vertica::ServerInterface

&srvInterface)

{

return vt_createFuncObj(srvInterface.allocator, MemoryAllocationExample);

}

virtual void getPrototype(Vertica::ServerInterface &srvInterface,

Vertica::ColumnTypes &argTypes,

Vertica::ColumnTypes &returnType)

{

argTypes.addInt();

argTypes.addInt();

returnType.addInt();

}

// Tells Vertica the amount of resources that this UDF uses.

virtual void getPerInstanceResources(ServerInterface &srvInterface,

VResources &res)

{

res.scratchMemory += 1024LL * 1024 * 510; // request 510MB of memory

}

};

1.3.5.4 - Setting memory limits for fenced-mode UDxs

Vertica calls a fenced-mode UDx's implementation of Vertica::UDXFactory::getPerInstanceResources() to determine if there are enough free resources to run the query containing the UDx (see Informing [%=Vertica.DBMS_SHORT%] of Resource Requirements).

Vertica calls a fenced-mode UDx's implementation of Vertica::UDXFactory::getPerInstanceResources() to determine if there are enough free resources to run the query containing the UDx (see Informing Vertica of resource requirements). Since these reports are not generated by actual memory use, they can be inaccurate. Once started by Vertica, a UDx could allocate far more memory or file handles than it reported it needs.

The FencedUDxMemoryLimitMB configuration parameter lets you create an absolute memory limit for UDxs. Any attempt by a UDx to allocate more memory than this limit results in a bad_alloc exception. For an example of setting FencedUDxMemoryLimitMB, see How resource limits are enforced.

1.3.5.5 - How resource limits are enforced

Before running a query, Vertica determines how much memory it requires to run.

Before running a query, Vertica determines how much memory it requires to run. If the query contains a fenced-mode UDx which implements the getPerInstanceResources() function in its factory class, Vertica calls it to determine the amount of memory the UDx needs and adds this to the total required for the query. Based on these requirements, Vertica decides how to handle the query:

-

If the total amount of memory required (including the amount that the UDxs report that they need) is larger than the session's MEMORYCAP or resource pool's MAXMEMORYSIZE setting, Vertica rejects the query. For more information about resource pools, see Resource pool architecture.

-

If the amount of memory is below the limit set by the session and resource pool limits, but there is currently not enough free memory to run the query, Vertica queues it until enough resources become available.

-

If there are enough free resources to run the query, Vertica executes it.

Note

Vertica has no other way to determine the amount of resources a UDx requires other than the values it reports using the

getPerInstanceResources() function. A UDx could use more resources than it claims, which could cause performance issues for other queries that are denied resources. You can set an absolute limit on the amount of memory UDxs can allocate. See

Setting memory limits for fenced-mode UDxs for more information.

If the process executing your UDx attempts to allocate more memory than the limit set by the FencedUDxMemoryLimitMB configuration parameter, it receives a bad_alloc exception. For more information about FencedUDxMemoryLimitMB, see Setting memory limits for fenced-mode UDxs.

Below is the output of loading a UDSF that consumes 500MB of memory, then changing the memory settings to cause out-of-memory errors. The MemoryAllocationExample UDSF in the following example is just the Add2Ints UDSF example altered as shown in Allocating resources for UDxs and Informing Vertica of resource requirements to allocate 500MB of RAM.

=> CREATE LIBRARY mylib AS '/home/dbadmin/MemoryAllocationExample.so';

CREATE LIBRARY

=> CREATE FUNCTION usemem AS NAME 'MemoryAllocationExampleFactory' LIBRARY mylib

-> FENCED;

CREATE FUNCTION

=> SELECT usemem(1,2);

usemem

--------

3

(1 row)

The following statements demonstrate setting the session's MEMORYCAP to lower than the amount of memory that the UDSF reports it uses. This causes Vertica to return an error before it executes the UDSF.

=> SET SESSION MEMORYCAP '100M';

SET

=> SELECT usemem(1,2);

ERROR 3596: Insufficient resources to execute plan on pool sysquery

[Request exceeds session memory cap: 520328KB > 102400KB]

=> SET SESSION MEMORYCAP = default;

SET

The resource pool can also prevent a UDx from running if it requires more memory than is available in the pool. The following statements demonstrate the effect of creating and using a resource pool that has too little memory for the UDSF to run. Similar to the session's MAXMEMORYCAP limit, the pool's MAXMEMORYSIZE setting prevents Vertica from executing the query containing the UDSF.

=> CREATE RESOURCE POOL small MEMORYSIZE '100M' MAXMEMORYSIZE '100M';

CREATE RESOURCE POOL

=> SET SESSION RESOURCE POOL small;

SET

=> CREATE TABLE ExampleTable(a int, b int);

CREATE TABLE

=> INSERT /*+direct*/ INTO ExampleTable VALUES (1,2);

OUTPUT

--------

1

(1 row)

=> SELECT usemem(a, b) FROM ExampleTable;

ERROR 3596: Insufficient resources to execute plan on pool small

[Request Too Large:Memory(KB) Exceeded: Requested = 523136, Free = 102400 (Limit = 102400, Used = 0)]

=> DROP RESOURCE POOL small; --Dropping the pool resets the session's pool

DROP RESOURCE POOL

Finally, setting the FencedUDxMemoryLimitMB configuration parameter to lower than the UDx actually allocates results in the UDx throwing an exception. This is a different case than either of the previous two examples, since the query actually executes. The UDx's code needs to catch and handle the exception. In this example, it uses the vt_report_error macro to report the error back to Vertica and exit.

=> ALTER DATABASE DEFAULT SET FencedUDxMemoryLimitMB = 300;

=> SELECT usemem(1,2);

ERROR 3412: Failure in UDx RPC call InvokeSetup(): Error calling setup() in

User Defined Object [usemem] at [MemoryAllocationExample.cpp:32], error code:

1, message: Couldn't allocate memory :[std::bad_alloc]

=> ALTER DATABASE DEFAULT SET FencedUDxMemoryLimitMB = -1;

=> SELECT usemem(1,2);

usemem

--------

3

(1 row)

See also

1.4 - Java SDK

The Vertica SDK supports writing Java UDxs of all types except aggregate functions.

The Vertica SDK supports writing Java UDxs of all types except aggregate functions. All Java UDxs are fenced.

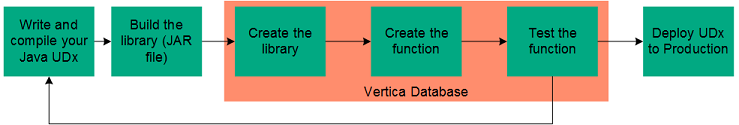

You can download, compile, and run the examples; see Downloading and running UDx example code. Running the examples is a good way to verify that your development environment has all needed libraries.

If you do not have access to a Vertica test environment, you can install Vertica on your development machine and run a single node. Each time you rebuild your UDx library, you need to re-install it into Vertica. The following diagram illustrates the typical development cycle.

This section covers Java-specific topics that apply to all UDx types. For information that applies to all languages, see Arguments and return values, UDx parameters, Errors, warnings, and logging, Handling cancel requests and the sections for specific UDx types. For full API documentation, see the Java SDK Documentation.

1.4.1 - Setting up the Java SDK

The Vertica Java Software Development Kit (SDK) is distributed as part of the server installation.

The Vertica Java Software Development Kit (SDK) is distributed as part of the server installation. It contains the source and JAR files you need to create your UDx library. For examples that you can compile and run, see Downloading and running UDx example code.

Requirements

At a minimum, install the following on your development machine:

Optionally, you can simplify development with a build-management tool, such as make.

SDK files

To use the SDK you need two files from the Java support package:

-

/opt/vertica/bin/VerticaSDK.jar contains the Vertica Java SDK and other supporting files.

-

/opt/vertica/sdk/BuildInfo.java contains version information about the SDK. You must compile this file and include it within your Java UDx JAR files.

If you are not doing your development on a database node, you can copy these two files from one of the database nodes to your development system.

The BuildInfo.java and VerticaSDK.jar files that you use to compile your UDx must be from the same SDK version. Both files must also match the version of the SDK files on your Vertica hosts. Versioning is only an issue if you are not compiling your UDxs on a Vertica host. If you are compiling on a separate development system, always refresh your copies of these two files and recompile your UDxs just before deploying them.

Finding the current SDK version

You must develop your UDx using the same SDK version as the database in which you plan to use it. To display the SDK version currently installed on your system, run the following command in vsql:

=> SELECT sdk_version();

Compiling BuildInfo.java

You need to compile the BuildInfo.java file into a class file, so you can include it in your Java UDx JAR library. If you are using a Vertica node as a development system, you can either:

-

Copy the BuildInfo.java file to another location on your host.

-

If you have root privileges, compile the BuildInfo.java file in place. (Only the root user has privileges to write files to the /opt/vertica/sdk directory.)

Compile the file using the following command. Replace path with the path to the file and output-directory with the directory where you will compile your UDxs.

$ javac -classpath /opt/vertica/bin/VerticaSDK.jar \

/path/BuildInfo.java -d output-directory

If you use an IDE such as Eclipse, you can include the BuildInfo.java file in your project instead of compiling it separately. You must also add the VerticaSDK.jar file to the project's build path. See your IDE's documentation for details on how to include files and libraries in your projects.

Running the examples

You can download the examples from the GitHub repository (see Downloading and running UDx example code). Compiling and running the examples helps you to ensure that your development environment is properly set up.

If you have not already done so, set the JAVA_HOME environment variable to your JDK (not JRE) directory.

To compile all of the examples, including the Java examples, issue the following command in the Java-and-C++ directory under the examples directory:

$ make

To compile only the Java examples, issue the following command in the Java-and-C++ directory under the examples directory:

$ make JavaFunctions

1.4.2 - Compiling and packaging a Java library

Before you can use your Java UDx, you need to compile it and package it into a JAR file.

Before you can use your Java UDx, you need to compile it and package it into a JAR file.

The SDK examples include a working makefile. See Downloading and running UDx example code.

Compile your Java UDx

You must include the SDK JAR file in the CLASSPATH when you compile your Java UDx source files so the Java compiler can resolve the Vertica API calls. If you are using the command-line Java compiler on a host in your database cluster, enter this command:

$ javac -classpath /opt/vertica/bin/VerticaSDK.jar factorySource.java \

[functionSource.java...] -d output-directory

If all of your source files are in the same directory, you can use *.java on the command line instead of listing the files individually.

If you are using an IDE, verify that a copy of the VerticaSDK.jar file is in the build path.

UDx class file organization

After you compile your UDx, you must package its class files and the BuildInfo.class file into a JAR file.

Note

You can package as many UDxs as you want into the same JAR file. Bundling your UDxs together saves you from having to load multiple libraries.

To use the jar command packaged as part of the JDK, you must organize your UDx class files into a directory structure matching your class package structure. For example, suppose your UDx's factory class has a fully-qualified name of com.mycompany.udfs.Add2ints. In this case, your class files must be in the directory hierarchy com/mycompany/udfs relative to your project's base directory. In addition, you must have a copy of the BuildInfo.class file in the path com/vertica/sdk so that it can be included in the JAR file. This class must appear in your JAR file to indicate the SDK version that was used to compile your Java UDx.

The JAR file for the Add2ints UDSF example has the following directory structure after compilation:

com/vertica/sdk/BuildInfo.class

com/mycompany/example/Add2intsFactory.class

com/mycompany/example/Add2intsFactory$Add2ints.class

Package your UDx into a JAR file

To create a JAR file from the command line:

-

Change to the root directory of your project.

-

Use the jar command to package the BuildInfo.class file and all of the classes in your UDx:

# jar -cvf libname.jar com/vertica/sdk/BuildInfo.class \

packagePath/*.class

When you type this command, libname is the filename you have chosen for your JAR file (choose whatever name you like), and packagePath is the path to the directory containing your UDx's class files.

-

For example, to package the files from the Add2ints example, you use the command:

# jar -cvf Add2intsLib.jar com/vertica/sdk/BuildInfo.class \

com/mycompany/example/*.class

-

More simply, if you compiled BuildInfo.class and your class files into the same root directory, you can use the following command:

# jar -cvf Add2intsLib.jar .

You must include all of the class files that make up your UDx in your JAR file. Your UDx always consists of at least two classes (the factory class and the function class). Even if you defined your function class as an inner class of your factory class, Java generates a separate class file for the inner class.

After you package your UDx into a JAR file, you are ready to deploy it to your Vertica database.

1.4.3 - Handling Java UDx dependencies

If your Java UDx relies on one or more external libraries, you can handle the dependencies in one of three ways:.

If your Java UDx relies on one or more external libraries, you can handle the dependencies in one of three ways:

-

Bundle the JAR files into your UDx JAR file using a tool such as One-JAR or Eclipse Runnable JAR Export Wizard.

-

Unpack the JAR file and then repack its contents in your UDx's JAR file.

-

Copy the libraries to your Vertica cluster in addition to your UDx library.Then, use the DEPENDS keyword of the CREATE LIBRARY statement to tell Vertica that the UDx library depends on the external libraries. This keyword acts as a library-specific CLASSPATH setting. Vertica distributes the support libraries to all of the nodes in the cluster and sets the class path for the UDx so it can find them.

If your UDx depends on native libraries (SO files), use the DEPENDS keyword to specify their path. When you call System.loadLibrary in your UDx (which you must do before using a native library), this function uses the DEPENDS path to find them. You do not need to also set the LD_LIBRARY_PATH environment variable.

External library example

The following example demonstrates using an external library with a Java UDx.

The following sample code defines a simple class, named VowelRemover. It contains a single method, named removevowels, that removes all of the vowels (the letters a, e, i, o u, and y) from a string.

package com.mycompany.libs;

public class VowelRemover {

public String removevowels(String input) {

return input.replaceAll("(?i)[aeiouy]", "");

}

};

You can compile this class and package it into a JAR file with the following commands:

$ javac -g com/mycompany/libs/VowelRemover.java

$ jar cf mycompanylibs.jar com/mycompany/libs/VowelRemover.class

The following code defines a Java UDSF, named DeleteVowels, that uses the library defined in the preceding example code. DeleteVowels accepts a single VARCHAR as input, and returns a VARCHAR.

package com.mycompany.udx;

// Import the support class created earlier

import com.mycompany.libs.VowelRemover;

// Import the Vertica SDK

import com.vertica.sdk.*;

public class DeleteVowelsFactory extends ScalarFunctionFactory {

@Override

public ScalarFunction createScalarFunction(ServerInterface arg0) {

return new DeleteVowels();

}

@Override

public void getPrototype(ServerInterface arg0, ColumnTypes argTypes,

ColumnTypes returnTypes) {

// Accept a single string and return a single string.

argTypes.addVarchar();

returnTypes.addVarchar();

}

@Override

public void getReturnType(ServerInterface srvInterface,

SizedColumnTypes argTypes,

SizedColumnTypes returnType){

returnType.addVarchar(

// Output will be no larger than the input.

argTypes.getColumnType(0).getStringLength(), "RemovedVowels");

}

public class DeleteVowels extends ScalarFunction

{

@Override

public void processBlock(ServerInterface arg0, BlockReader argReader,

BlockWriter resWriter) throws UdfException, DestroyInvocation {

// Create an instance of the VowelRemover object defined in

// the library.

VowelRemover remover = new VowelRemover();

do {

String instr = argReader.getString(0);

// Call the removevowels method defined in the library.

resWriter.setString(remover.removevowels(instr));

resWriter.next();

} while (argReader.next());

}

}

}

Use the following commands to build the example UDSF and package it into a JAR:

-

The first javac command compiles the SDK’s BuildInfo class. Vertica requires all UDx libraries to contain this class. The javac command’s -d option outputs the class file in the directory structure of your UDSF’s source.

-

The second javac command compiles the UDSF class. It adds the previously-created mycompanylibs.jar file to the class path so compiler can find the the VowelRemover class.

-

The jar command packages the BuildInfo and the classes for the UDx library together.

$ javac -g -cp /opt/vertica/bin/VerticaSDK.jar\

/opt/vertica/sdk/com/vertica/sdk/BuildInfo.java -d .

$ javac -g -cp mycompanylibs.jar:/opt/vertica/bin/VerticaSDK.jar\

com/mycompany/udx/DeleteVowelsFactory.java

$ jar cf DeleteVowelsLib.jar com/mycompany/udx/*.class \

com/vertica/sdk/*.class

To install the UDx library, you must copy both of the JAR files to a node in the Vertica cluster. Then, connect to the node to execute the CREATE LIBRARY statement.

The following example demonstrates how to load the UDx library after you copy the JAR files to the home directory of the dbadmin user. The DEPENDS keyword tells Vertica that the UDx library depends on the mycompanylibs.jar file.

=> CREATE LIBRARY DeleteVowelsLib AS

'/home/dbadmin/DeleteVowelsLib.jar' DEPENDS '/home/dbadmin/mycompanylibs.jar'

LANGUAGE 'JAVA';

CREATE LIBRARY

=> CREATE FUNCTION deleteVowels AS language 'java' NAME

'com.mycompany.udx.DeleteVowelsFactory' LIBRARY DeleteVowelsLib;

CREATE FUNCTION

=> SELECT deleteVowels('I hate vowels!');

deleteVowels

--------------

ht vwls!

(1 row)

1.4.4 - Java and Vertica data types

The Vertica Java SDK converts Vertica's native data types into the appropriate Java data type.

The Vertica Java SDK converts Vertica's native data types into the appropriate Java data type. The following table lists the Vertica data types and their corresponding Java data types.

|

Vertica Data Type |

Java Data Type |

|

INTEGER |

long |

|

FLOAT |

double |

|

NUMERIC |

com.vertica.sdk.VNumeric |

|

DATE |

java.sql.Date |

|

CHAR, VARCHAR, LONG VARCHAR |

com.vertica.sdk.VString |

|

BINARY, VARBINARY, LONG VARBINARY |

com.vertica.sdk.VString |

|

TIMESTAMP |

java.sql.Timestamp |

Note

Some Vertica data types are not supported.

Setting BINARY, VARBINARY, and LONG VARBINARY values

The Vertica BINARY, VARBINARY, and LONG VARBINARY data types are converted as the Java UDx SDK 's VString class. You can also set the value of a column with one of these data types with a ByteBuffer object (or a byte array wrapped in a ByteBuffer) using the PartitionWriter.setStringBytes() method. See the Java API UDx entry for PartitionWriter.setStringBytes() for more information.

Timestamps and time zones

When the SDK converts a Vertica timestamp into a Java timestamp, it uses the time zone of the JVM. If the JVM is running in a different time zone than the one used by Vertica, the results can be confusing.

Vertica stores timestamps in the database in UTC. (If a database time zone is set, the conversion is done at query time.) To prevent errors from the JVM time zone, add the following code to the processing method of your UDx:

TimeZone.setDefault(TimeZone.getTimeZone("UTC"));

Strings

The Java SDK contains a class named StringUtils that assists you when manipulating string data. One of its more useful features is its getStringBytes() method. This method extracts bytes from a String in a way that prevents the creation of invalid strings. If you attempt to extract a substring that would split part of a multi-byte UTF-8 character, getStringBytes() truncates it to the nearest whole character.

1.4.5 - Handling NULL values

Your UDxs must be prepared to handle NULL values.

Your UDxs must be prepared to handle NULL values. These values usually must be handled separately from regular values.

Reading NULL values

Your UDx reads data from instances of the the BlockReader or PartitionReader classes. If the value of a column is NULL, the methods you use to get data (such as getLong) return a Java null reference. If you attempt to use the value without checking for NULL, the Java runtime will throw a null pointer exception.

You can test for null values before reading columns by using the data-type-specific methods (such as isLongNull, isDoubleNull, and isBooleanNull). For example, to test whether the INTEGER first column of your UDx's input is a NULL, you would use the statement:

// See if the Long value in column 0 is a NULL

if (inputReader.isLongNull(0)) {

// value is null

. . .

Writing NULL values

You output NULL values using type-specific methods on the BlockWriter and PartitionWriter classes (such as setLongNull and setStringNull). These methods take the column number to receive the NULL value. In addition, the PartitionWriter class has data-type specific set value methods (such as setLongValue and setStringValue). If you pass these methods a value, they set the output column to that value. If you pass them a Java null reference, they set the output column to NULL.

1.4.6 - Adding metadata to Java UDx libraries

To add metadata to your Java UDx library, you create a subclass of the UDXLibrary class that contains your library's metadata.

You can add metadata, such as author name, the version of the library, a description of your library, and so on to your library. This metadata lets you track the version of your function that is deployed on a Vertica Analytic Database cluster and lets third-party users of your function know who created the function. Your library's metadata appears in the USER_LIBRARIES system table after your library has been loaded into the Vertica Analytic Database catalog.

To add metadata to your Java UDx library, you create a subclass of the UDXLibrary class that contains your library's metadata. You then include this class within your JAR file. When you load your class into the Vertica Analytic Database catalog using the CREATE LIBRARY statement, looks for a subclass of UDXLibrary for the library's metadata.

In your subclass of UDXLibrary, you need to implement eight getters that return String values containing the library's metadata. The getters in this class are:

-

getAuthor() returns the name you want associated with the creation of the library (your own name or your company's name for example).

-

getLibraryBuildTag() returns whatever String you want to use to represent the specific build of the library (for example, the SVN revision number or a timestamp of when the library was compiled). This is useful for tracking instances of your library as you are developing them.

-

getLibraryVersion() returns the version of your library. You can use whatever numbering or naming scheme you want.

-

getLibrarySDKVersion() returns the version of the Vertica Analytic Database SDK Library for which you've compiled the library.

Note

This field isn't used to determine whether a library is compatible with a version of the Vertica Analytic Database server. The version of the Vertica Analytic Database SDK you use to compile your library is embedded in the library when you compile it. It is this information that Vertica Analytic Database server uses to determine if your library is compatible with it.

-

getSourceUrl() returns a URL where users of your function can find more information about it. This can be your company's website, the GitHub page hosting your library's source code, or whatever site you like.

-

getDescription() returns a concise description of your library.

-

getLicensesRequired() returns a placeholder for licensing information. You must pass an empty string for this value.

-

getSignature() returns a placeholder for a signature that will authenticate your library. You must pass an empty string for this value.

For example, the following code demonstrates creating a UDXLibrary subclass to be included in the Add2Ints UDSF example JAR file (see /opt/vertica/sdk/examples/JavaUDx/ScalarFunctions on any Vertica node).

// Import the UDXLibrary class to hold the metadata

import com.vertica.sdk.UDXLibrary;

public class Add2IntsLibrary extends UDXLibrary

{

// Return values for the metadata about this library.

@Override public String getAuthor() {return "Whizzo Analytics Ltd.";}

@Override public String getLibraryBuildTag() {return "1234";}

@Override public String getLibraryVersion() {return "1.0";}

@Override public String getLibrarySDKVersion() {return "7.0.0";}

@Override public String getSourceUrl() {

return "http://example.com/add2ints";

}

@Override public String getDescription() {

return "My Awesome Add 2 Ints Library";

}

@Override public String getLicensesRequired() {return "";}

@Override public String getSignature() {return "";}

}

When the library containing the Add2IntsLibrary class loaded, the metadata appears in the USER_LIBRARIES system table:

=> CREATE LIBRARY JavaAdd2IntsLib AS :libfile LANGUAGE 'JAVA';

CREATE LIBRARY

=> CREATE FUNCTION JavaAdd2Ints as LANGUAGE 'JAVA' name 'com.mycompany.example.Add2IntsFactory' library JavaAdd2IntsLib;

CREATE FUNCTION

=> \x

Expanded display is on.

=> SELECT * FROM USER_LIBRARIES WHERE lib_name = 'JavaAdd2IntsLib';

-[ RECORD 1 ]-----+---------------------------------------------

schema_name | public

lib_name | JavaAdd2IntsLib

lib_oid | 45035996273869844

author | Whizzo Analytics Ltd.

owner_id | 45035996273704962

lib_file_name | public_JavaAdd2IntsLib_45035996273869844.jar

md5_sum | f3bfc76791daee95e4e2c0f8a8d2737f

sdk_version | v7.0.0-20131105

revision | 125200

lib_build_tag | 1234

lib_version | 1.0

lib_sdk_version | 7.0.0

source_url | http://example.com/add2ints

description | My Awesome Add 2 Ints Library

licenses_required |

signature |

1.4.7 - Java UDx resource management

Java Virtual Machines (JVMs) allocate a set amount of memory when they start.

Java Virtual Machines (JVMs) allocate a set amount of memory when they start. This set memory allocation complicates memory management for Java UDxs, because memory cannot be dynamically allocated and freed by the UDx as it is processing data. This is differs from C++ UDxs which can dynamically allocate resources.

To control the amount of memory consumed by Java UDxs, Vertica has a memory pool named jvm that it uses to allocate memory for JVMs. If this memory pool is exhausted, queries that call Java UDxs block until enough memory in the pool becomes free to start a new JVM.

By default, the jvm pool has:

-

no memory of its own assigned to it, so it borrows memory from the GENERAL pool.

-

its MAXMEMORYSIZE set to either 10% of system memory or 2GB, whichever is smaller.

-

its PLANNEDCONCURRENCY set to AUTO, so that it inherits the GENERAL pool's PLANNEDCONCURRENCY setting.

You can view the current settings for the jvm pool by querying the RESOURCE_POOLS table:

=> SELECT MAXMEMORYSIZE,PLANNEDCONCURRENCY FROM V_CATALOG.RESOURCE_POOLS WHERE NAME = 'jvm';

MAXMEMORYSIZE | PLANNEDCONCURRENCY

---------------+--------------------

10% | AUTO

When a SQL statement calls a Java UDx, Vertica checks if the jvm memory pool has enough memory to start a new JVM instance to execute the function call. Vertica starts each new JVM with its heap memory size set to approximately the jvm pool's MAXMEMORYSIZE parameter divided by its PLANNEDCONCURRENCY parameter. If the memory pool does not contain enough memory, the query blocks until another JVM exits and return their memory to the pool.

If your Java UDx attempts to consume more memory than has been allocated to the JVM's heap size, it exits with a memory error. You can attempt to resolve this issue by:

-

increasing the jvm pool's MAXMEMORYSIZE parameter.

-

decreasing the jvm pool's PLANNEDCONCURRENCY parameter.

-

changing your Java UDx's code to consume less memory.

Adjusting the jvm pool

When adjusting the jvm pool to your needs, you must consider two factors:

You can learn the amount of memory your Java UDx needs using several methods. For example, your code can use Java's Runtime class to get an estimate of the total memory it has allocated and then log the value using ServerInterface.log(). (An instance of this class is passed to your UDx.) If you have multiple Java UDxs in your database, set the jvm pool memory size based on the UDx that uses the most memory.

The number of concurrent sessions that need to run Java UDxs may not be the same as the global PLANNEDCONCURRENCY setting. For example, you may have just a single user who runs a Java UDx, which means you can lower the jvm pool's PLANNEDCONCURRENCY setting to 1.

When you have an estimate for the amount of RAM and the number of concurrent user sessions that need to run Java UDXs, you can adjust the jvm pool to an appropriate size. Set the pool's MAXMEMORYSIZE to the maximum amount of RAM needed by the most demanding Java UDx multiplied by the number of concurrent user sessions that need to run Java UDxs. Set the pool's PLANNEDCONCURENCY to the numebr of simultaneous user sessions that need to run Java UDxs.

For example, suppose your Java UDx requires up to 4GB of memory to run and you expect up to two user sessions use Java UDx's. You would use the following command to adjust the jvm pool:

=> ALTER RESOURCE POOL jvm MAXMEMORYSIZE '8G' PLANNEDCONCURRENCY 2;

The MEMORYSIZE is set to 8GB, which is the 4GB maximum memory use by the Java UDx multiplied by the 2 concurrent user sessions.

Note

The PLANNEDCONCURRENCY value is not the number of calls to Java UDx that you expect to happen simultaneously. Instead, it is the number of concurrently open user sessions that call Java UDxs at any time during the session. See below for more information.

See Managing workloads for more information on tuning the jvm and other resource pools.

Freeing JVM memory

The first time users call a Java UDx during their session, Vertica allocates memory from the jvm pool and starts a new JVM. This JVM remains running for as long as the user's session is open so it can process other Java UDx calls. Keeping the JVM running lowers the overhead of executing multiple Java UDxs by the same session. If the JVM did not remain open, each call to a Java UDx would require additional time for Vertica to allocate resources and start a new JVM. However, having the JVM remain open means that the JVM's memory remains allocated for the life of the session whether or not it will be used again.

If the jvm memory pool is depleted, queries containing Java UDxs either block until memory becomes available or eventually fail due a lack of resources. If you find queries blocking or failing for this reason, you can allocate more memory to the jvm pool and increase its PLANNEDCONCURRENCY. Another option is to ask users to call the RELEASE_JVM_MEMORY function when they no longer need to run Java UDxs. This function closes any JVM belonging to the user's session and returns its allocated memory to the jvm memory pool.

The following example demonstrates querying V_MONITOR.SESSIONS to find the memory allocated to JVMs by all sessions. It also demonstrates how the memory is allocated by a call to a Java UDx, and then freed by calling RELEASE_JVM_MEMORY.

=> SELECT USER_NAME,EXTERNAL_MEMORY_KB FROM V_MONITOR.SESSIONS;

user_name | external_memory_kb

-----------+---------------

dbadmin | 0

(1 row)

=> -- Call a Java UDx

=> SELECT add2ints(123,456);

add2ints

----------

579

(1 row)

=> -- JVM is now running and memory is allocated to it.

=> SELECT USER_NAME,EXTERNAL_MEMORY_KB FROM V_MONITOR.SESSIONS;

USER_NAME | EXTERNAL_MEMORY_KB

-----------+---------------

dbadmin | 79705

(1 row)

=> -- Shut down the JVM and deallocate memory

=> SELECT RELEASE_JVM_MEMORY();

RELEASE_JVM_MEMORY

-----------------------------------------

Java process killed and memory released

(1 row)

=> SELECT USER_NAME,EXTERNAL_MEMORY_KB FROM V_MONITOR.SESSIONS;

USER_NAME | EXTERNAL_MEMORY_KB

-----------+---------------

dbadmin | 0

(1 row)

In rare cases, you may need to close all JVMs. For example, you may need to free memory for an important query, or several instances of a Java UDx may be taking too long to complete. You can use the RELEASE_ALL_JVM_MEMORY to close all of the JVMs in all user sessions:

=> SELECT USER_NAME,EXTERNAL_MEMORY_KB FROM V_MONITOR.SESSIONS;

USER_NAME | EXTERNAL_MEMORY_KB

-------------+---------------

ExampleUser | 79705

dbadmin | 79705

(2 rows)

=> SELECT RELEASE_ALL_JVM_MEMORY();

RELEASE_ALL_JVM_MEMORY

-----------------------------------------------------------------------------

Close all JVM sessions command sent. Check v_monitor.sessions for progress.

(1 row)

=> SELECT USER_NAME,EXTERNAL_MEMORY_KB FROM V_MONITOR.SESSIONS;

USER_NAME | EXTERNAL_MEMORY_KB

-----------+---------------

dbadmin | 0

(1 row)

Caution

This function terminates all JVMs, including ones that are currently executing Java UDXs. This will cause any query that is currently executing a Java UDx to return an error.

Notes

-

The jvm resource pool is used only to allocate memory for the Java UDx function calls in a statement. The rest of the resources required by the SQL statement come from other memory pools.

-

The first time a Java UDx is called, Vertica starts a JVM to execute some Java methods to get metadata about the UDx during the query planning phase. The memory for this JVM is also taken from the jvm memory pool.

1.5 - Python SDK

The Vertica SDK supports writing UDxs of some types in Python 3.

The Vertica SDK supports writing UDxs of some types in Python 3.

The Python SDK does not require any additional system configuration or header files. This low overhead allows you to develop and deploy new capabilities to your Vertica cluster in a short amount of time.

The following workflow is typical for the Python SDK:

Because Python has an interpreter, you do not have to compile your program before loading the UDx in Vertica. However, you should expect to do some debugging of your code after you create your function and begin testing it in Vertica.

When Vertica calls your UDx, it starts a side process that manages the interaction between the server and the Python interpreter.

This section covers Python-specific topics that apply to all UDx types. For information that applies to all languages, see Arguments and return values, UDx parameters, Errors, warnings, and logging, Handling cancel requests and the sections for specific UDx types. For full API documentation, see the Python SDK.

Important

Your UDx must be able to run with the version of Python bundled with Vertica. You can find this with /opt/vertica/sbin/python3 --version. You cannot change the version used by the Vertica Python interpreter.

1.5.1 - Setting up a Python development environment

To avoid problems when loading and executing your UDxs, develop your UDxs using the same version of Python that Vertica uses.

To avoid problems when loading and executing your UDxs, develop your UDxs using the same version of Python that Vertica uses. To do this without changing your environment for projects that might require other Python versions, you can use a Python virtual environment (venv). You can install libraries that your UDx depends on into your venv and use that path when you create your UDx library with CREATE LIBRARY.

Setting up venv

Set up venv using the Python version bundled with Vertica. If you have direct access to a database node, you can use that Python binary directly to create your venv:

$ /opt/vertica/sbin/python3 -m venv /path/to/new/environment

The result is a directory with a default environment, including a site-packages directory:

$ ls venv/lib/

python3.9

$ ls venv/lib/python3.9/

site-packages

If your UDx depends on libraries that are not packaged with Vertica, install them into this directory:

$ source venv/bin/activate

(venv) $ pip install numpy

...

The lib/python3.9/site-packages directory now contains the installed library. The change affects only your virtual environment.

UDx imports

Your UDx code must import, in addition to any libraries you add, the vertica_sdk library:

# always required:

import vertica_sdk

# other libs:

import numpy as np

# ...

The vertica_sdk library is included as a part of the Vertica server. You do not need to add it to site-packages or declare it as a dependency.

Deployment

For libraries you add, you must declare dependencies when using CREATE LIBRARY. This declaration allows Vertica to find the libraries and distribute them to all database nodes. You can supply a path instead of enumerating the libraries:

=> CREATE OR REPLACE LIBRARY pylib AS

'/path/to/udx/add2ints.py'

DEPENDS '/path/to/new/environment/lib/python3.9/site-packages/*'

LANGUAGE 'Python';

=> CREATE OR REPLACE FUNCTION add2ints AS LANGUAGE 'Python'

NAME 'add2ints_factory' LIBRARY pylib;

CREATE LIBRARY copies the UDx and the contents of the DEPENDS path and stores them with the database. Vertica then distributes copies to all database nodes.

1.5.2 - Python and Vertica data types

The Vertica Python SDK converts native Vertica data types into the appropriate Python data types.

The Vertica Python SDK converts native Vertica data types into the appropriate Python data types. The following table describes some of the data type conversions. Consult the Python SDK for a complete list, as well as lists of helper functions to convert and manipulate these data types.

For information about SDK support for complex data types, see Complex Types as Arguments and Return Values.

|

Vertica Data Type |

Python Data Type |

|

INTEGER |

int |

|

FLOAT |

float |

|

NUMERIC |

decimal.Decimal |

|

DATE |

datetime.date |

|

CHAR, VARCHAR, LONG VARCHAR |

string (UTF-8 encoded) |

|

BINARY, VARBINARY, LONG VARBINARY |

binary |

|

TIMESTAMP |

datetime.datetime |

|

TIME |

datetime.time |

|

ARRAY |

list

Note: Nested ARRAY types are also converted into lists.

|

|

ROW |

collections.OrderedDict

Note: Nested ROW types are also converted into collections.OrderedDicts.

|

Note

Some Vertica data types are not supported in Python. For a list of all Vertica data types, see

Data types.

1.6 - R SDK

The Vertica R SDK extends the capabilities of the Vertica Analytic Database so you can leverage additional R libraries.

The Vertica R SDK extends the capabilities of the Vertica Analytic Database so you can leverage additional R libraries. Before you can begin developing User Defined Extensions (UDxs) in R, you must install the R Language Pack for Vertica on each of the nodes in your cluster. The R SDK supports scalar and transform functions in fenced mode. Other UDx types are not supported.

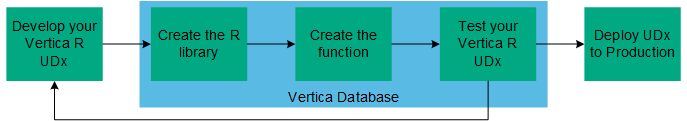

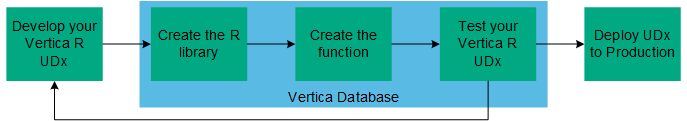

The following workflow is typical for the R SDK:

You can find detailed documentation of all of the classes in the Vertica R SDK.

1.6.1 - Installing/upgrading the R language pack for Vertica

To create R UDxs in Vertica, install the R Language Pack package that matches your server version.

To create R UDxs in Vertica, install the R Language Pack package that matches your server version. The R Language Pack includes the R runtime and associated libraries for interfacing with Vertica. You must use this version of the R runtime; you cannot upgrade it.

You must install the R Language Pack on each node in the cluster. The Vertica R Language Pack must be the only R Language Pack installed on the node.

Vertica R language pack prerequisites

The R Language Pack package requires a number of packages for installation and execution. The names of these dependencies vary among Linux distributions. For Vertica-supported Linux platforms the packages are:

-

RHEL/CentOS: libgfortran, xz-libs, libgomp

-

SUSE Linux Enterprise Server: libgfortran, liblzma5, libgomp1

-

Debian/Ubuntu: libgfortran, liblzma5, libgomp1

Vertica requires version 5 of the libgfortran library later than 8.3.0 to create R extensions. The libgfortran library is included by default with the devtool and gcc packages.

Installing the Vertica R language pack

If you use your operating systems package manager, rather than the rpm or dpkg command, for installation, you do not need to manually install the R Language Pack. The native package managers for each supported Linux version are:

-

Download the R language package by browsing to the Vertica website.

-

On the Support tab, select Customer Downloads.

-

When prompted, log in using your Micro Focus credentials.

-

Locate and select the vertica-R-lang_version.rpm or vertica-R-lang_version.deb file for your server version. The R language package version must match your server version to three decimal points.

-

Install the package as root or using sudo:

-

RHEL/CentOS

$ yum install vertica-R-lang-<version>.rpm

-

SUSE Linux Enterprise Server

$ zypper install vertica-R-lang-<version>.rpm

-

Debian

$ apt-get install ./vertica-R-lang_<version>.deb

The installer puts the R binary in /opt/vertica/R.

Upgrading the Vertica R language pack

When upgrading, some R packages you have manually installed may not work and may have to be reinstalled. If you do not update your package(s), then R returns an error if the package cannot be used. Instructions for upgrading these packages are below.

Note

The R packages provided in the R Language Pack are automatically upgraded and do not need to be reinstalled.

-

You must uninstall the R Language package before upgrading Vertica. Any additional R packages you manually installed remain in /opt/vertica/R and are not removed when you uninstall the package.

-

Upgrade your server package as detailed in Upgrading Vertica to a New Version.

-

After the server package has been updated, install the new R Language package on each host.

If you have installed additional R packages, on each node:

-

As root run /opt/vertica/R/bin/R and issue the command:

> update.packages(checkBuilt=TRUE)

-

Select a CRAN mirror from the list displayed.

-

You are prompted to update each package that has an update available for it. You must update any packages that you manually installed and are not compatible with the current version of R in the R Language Pack.

Do NOT update:

The packages you selected to be updated are installed. Quit R with the command:

> quit()

Vertica UDx functions written in R do not need to be compiled and you do not need to reload your Vertica-R libraries and functions after an upgrade.

1.6.2 - R packages

The Vertica R Language Pack includes the following R packages in addition to the default packages bundled with R:.