This guide explains how to query and analyze data in your Vertica database.

This is the multi-page printable view of this section. Click here to print.

Data analysis

- 1: Queries

- 1.1: Historical queries

- 1.2: Temporary tables

- 1.3: SQL queries

- 1.4: Arrays and sets (collections)

- 1.5: Rows (structs)

- 1.6: Subqueries

- 1.6.1: Subqueries used in search conditions

- 1.6.2: Subqueries in the SELECT list

- 1.6.3: Noncorrelated and correlated subqueries

- 1.6.4: Flattening FROM clause subqueries

- 1.6.5: Subqueries in UPDATE and DELETE statements

- 1.6.6: Subquery examples

- 1.6.7: Subquery restrictions

- 1.7: Joins

- 1.7.1: Join syntax

- 1.7.2: Join conditions vs. filter conditions

- 1.7.3: Inner joins

- 1.7.3.1: Equi-joins and non equi-joins

- 1.7.3.2: Natural joins

- 1.7.3.3: Cross joins

- 1.7.4: Outer joins

- 1.7.5: Controlling join inputs

- 1.7.6: Range joins

- 1.7.7: Event series joins

- 1.7.7.1: Sample schema for event series joins examples

- 1.7.7.2: Writing event series joins

- 2: Query optimization

- 2.1: Initial process for improving query performance

- 2.2: Column encoding

- 2.2.1: Improving column compression

- 2.2.2: Using run length encoding

- 2.3: Projections for queries with predicates

- 2.4: GROUP BY queries

- 2.4.1: GROUP BY implementation options

- 2.4.2: Avoiding resegmentation during GROUP BY optimization with projection design

- 2.5: DISTINCT in a SELECT query list

- 2.5.1: Query has no aggregates in SELECT list

- 2.5.2: COUNT (DISTINCT) and other DISTINCT aggregates

- 2.5.3: Approximate count distinct functions

- 2.5.4: Single DISTINCT aggregates

- 2.5.5: Multiple DISTINCT aggregates

- 2.6: JOIN queries

- 2.6.1: Hash joins versus merge joins

- 2.6.2: Identical segmentation

- 2.6.3: Joining variable length string data

- 2.7: ORDER BY queries

- 2.8: Analytic functions

- 2.8.1: Empty OVER clauses

- 2.8.2: NULL sort order

- 2.8.3: Runtime sorting of NULL values in analytic functions

- 2.9: LIMIT queries

- 2.10: INSERT-SELECT operations

- 2.10.1: Matching sort orders

- 2.10.2: Identical segmentation

- 2.11: DELETE and UPDATE queries

- 2.12: Data collector table queries

- 3: Views

- 3.1: Creating views

- 3.2: Using views

- 3.3: View execution

- 3.4: Managing views

- 4: Flattened tables

- 4.1: Flattened table example

- 4.2: Creating flattened tables

- 4.3: Required privileges

- 4.4: DEFAULT versus SET USING

- 4.5: Modifying SET USING and DEFAULT columns

- 4.6: Rewriting SET USING queries

- 4.7: Impact of SET USING columns on license limits

- 5: SQL analytics

- 5.1: Invoking analytic functions

- 5.2: Analytic functions versus aggregate functions

- 5.3: Window partitioning

- 5.4: Window ordering

- 5.5: Window framing

- 5.5.1: Windows with a physical offset (ROWS)

- 5.5.2: Windows with a logical offset (RANGE)

- 5.5.3: Reporting aggregates

- 5.6: Named windows

- 5.7: Analytic query examples

- 5.7.1: Calculating a median value

- 5.7.2: Getting price differential for two stocks

- 5.7.3: Calculating the moving average

- 5.7.4: Getting latest bid and ask results

- 5.8: Event-based windows

- 5.9: Sessionization with event-based windows

- 6: Machine learning for predictive analytics

- 6.1: Download the machine learning example data

- 6.2: Data preparation

- 6.2.1: Balancing imbalanced data

- 6.2.2: Detect outliers

- 6.2.3: Encoding categorical columns

- 6.2.4: Imputing missing values

- 6.2.5: Normalizing data

- 6.2.6: PCA (principal component analysis)

- 6.2.6.1: Dimension reduction using PCA

- 6.2.7: Sampling data

- 6.2.8: SVD (singular value decomposition)

- 6.2.8.1: Computing SVD

- 6.3: Regression algorithms

- 6.3.1: Autoregression

- 6.3.2: Linear regression

- 6.3.2.1: Building a linear regression model

- 6.3.3: Poisson regression

- 6.3.4: Random forest for regression

- 6.3.5: SVM (support vector machine) for regression

- 6.3.5.1: Building an SVM for regression model

- 6.3.6: XGBoost for regression

- 6.4: Classification algorithms

- 6.4.1: Logistic regression

- 6.4.1.1: Building a logistic regression model

- 6.4.2: Naive bayes

- 6.4.2.1: Classifying data using naive bayes

- 6.4.3: Random forest for classification

- 6.4.3.1: Classifying data using random forest

- 6.4.4: SVM (support vector machine) for classification

- 6.4.5: XGBoost for classification

- 6.5: Clustering algorithms

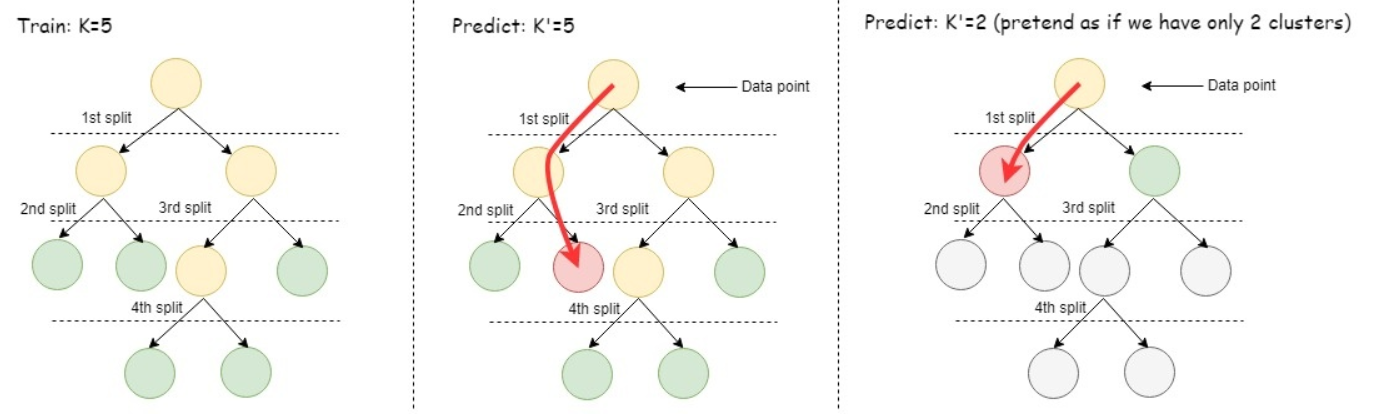

- 6.5.1: K-means

- 6.5.1.1: Clustering data using k-means

- 6.5.2: K-prototypes

- 6.5.3: Bisecting k-means

- 6.6: Time series forecasting

- 6.6.1: ARIMA model example

- 6.6.2: Autoregressive model example

- 6.6.3: Moving-average model example

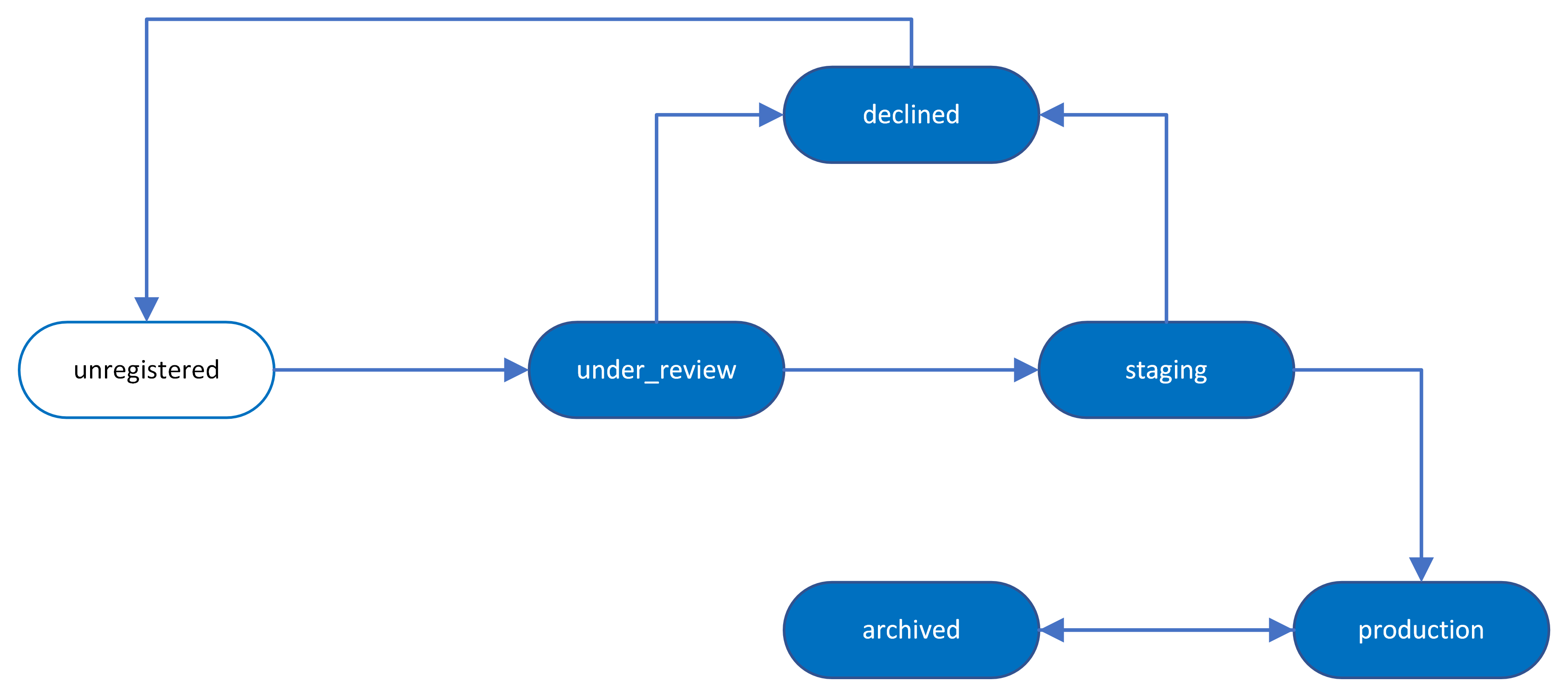

- 6.7: Model management

- 6.7.1: Model versioning

- 6.7.2: Altering models

- 6.7.2.1: Changing model ownership

- 6.7.2.2: Moving models to another schema

- 6.7.2.3: Renaming a model

- 6.7.3: Dropping models

- 6.7.4: Managing model security

- 6.7.5: Viewing model attributes

- 6.7.6: Summarizing models

- 6.7.7: Viewing models

- 6.8: Using external models with Vertica

- 6.8.1: TensorFlow models

- 6.8.1.1: TensorFlow integration and directory structure

- 6.8.1.2: TensorFlow example

- 6.8.1.3: tf_model_desc.json overview

- 6.8.2: Using PMML models

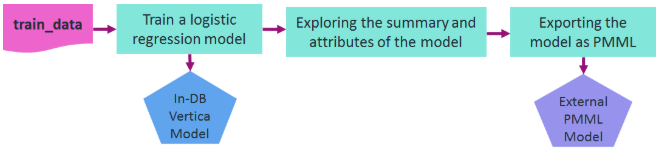

- 6.8.2.1: Exporting Vertica models in PMML format

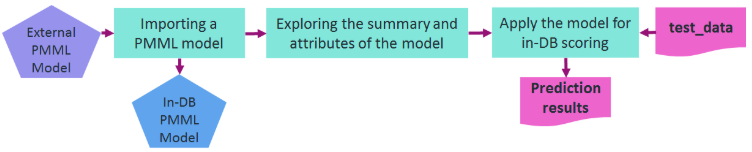

- 6.8.2.2: Importing and predicting with PMML models

- 6.8.2.3: PMML features and attributes

- 7: Geospatial analytics

- 7.1: Best practices for geospatial analytics

- 7.2: Spatial objects

- 7.3: Working with spatial objects in tables

- 7.3.1: Defining table columns for spatial data

- 7.3.2: Exporting spatial data from a table

- 7.3.3: Identifying null spatial objects

- 7.3.4: Loading spatial data from shapefiles

- 7.3.5: Loading spatial data into tables using COPY

- 7.3.6: Retrieving spatial data from a table as well-known text (WKT)

- 7.3.7: Working with GeoHash data

- 7.3.8: Spatial joins with ST_Intersects and STV_Intersect

- 7.3.8.1: Best practices for spatial joins

- 7.3.8.2: Ensuring polygon validity before creating or refreshing an index

- 7.3.8.3: STV_Intersect: scalar function vs. transform function

- 7.3.8.4: Performing spatial joins with STV_Intersect functions

- 7.3.8.4.1: Spatial indexes and STV_Intersect

- 7.3.8.5: When to use ST_Intersects vs. STV_Intersect

- 7.3.8.5.1: Performing spatial joins with ST_Intersects

- 7.4: Working with spatial objects from client applications

- 7.4.1: Using LONG VARCHAR and LONG VARBINARY data types with ODBC

- 7.4.2: Using LONG VARCHAR and LONG VARBINARY data types with JDBC

- 7.4.3: Using GEOMETRY and GEOGRAPHY data types in ODBC

- 7.4.4: Using GEOMETRY and GEOGRAPHY data types in JDBC

- 7.4.5: Using GEOMETRY and GEOGRAPHY data types in ADO.NET

- 7.5: OGC spatial definitions

- 7.5.1: Spatial classes

- 7.5.1.1: Point

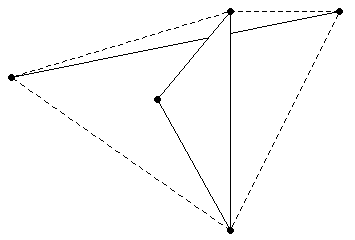

- 7.5.1.2: Multipoint

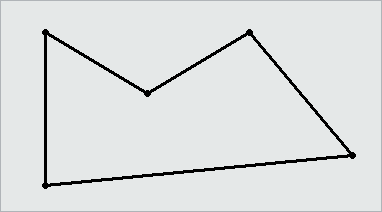

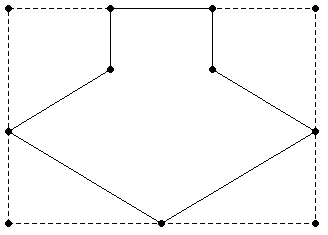

- 7.5.1.3: Linestring

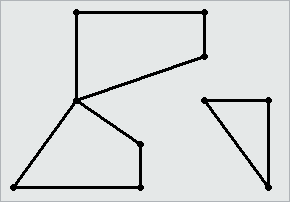

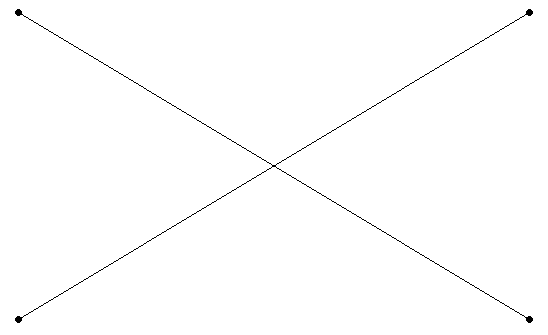

- 7.5.1.4: Multilinestring

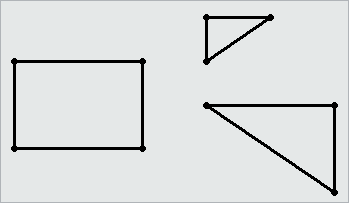

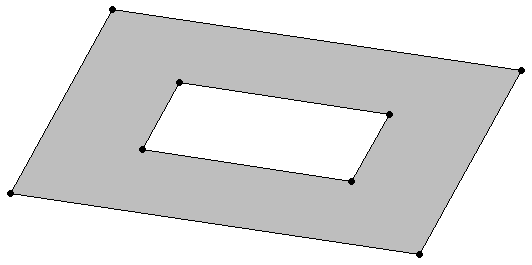

- 7.5.1.5: Polygon

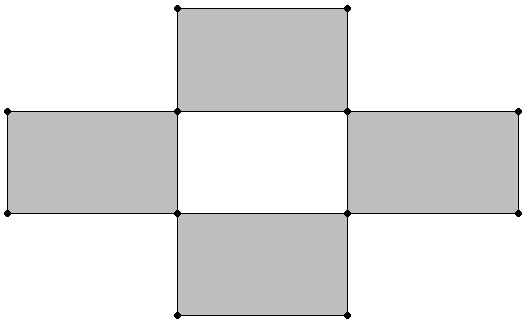

- 7.5.1.6: Multipolygon

- 7.5.2: Spatial object representations

- 7.5.2.1: Well-known text (WKT)

- 7.5.2.2: Well-known binary (WKB)

- 7.5.3: Spatial definitions

- 7.5.3.1: Boundary

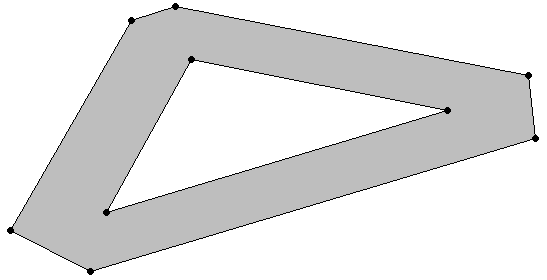

- 7.5.3.2: Buffer

- 7.5.3.3: Contains

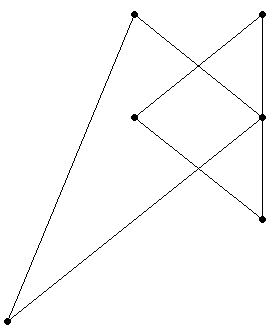

- 7.5.3.4: Convex hull

- 7.5.3.5: Crosses

- 7.5.3.6: Disjoint

- 7.5.3.7: Envelope

- 7.5.3.8: Equals

- 7.5.3.9: Exterior

- 7.5.3.10: GeometryCollection

- 7.5.3.11: Interior

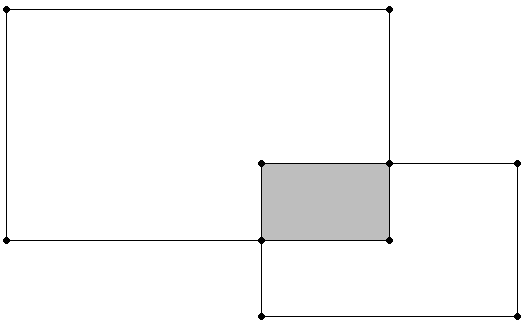

- 7.5.3.12: Intersection

- 7.5.3.13: Overlaps

- 7.5.3.14: Relates

- 7.5.3.15: Simple

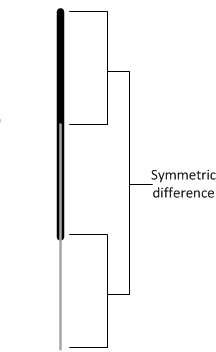

- 7.5.3.16: Symmetric difference

- 7.5.3.17: Union

- 7.5.3.18: Validity

- 7.5.3.19: Within

- 7.6: Spatial data type support limitations

- 8: Time series analytics

- 8.1: Gap filling and interpolation (GFI)

- 8.1.1: Constant interpolation

- 8.1.2: TIMESERIES clause and aggregates

- 8.1.3: Time series rounding

- 8.1.4: Linear interpolation

- 8.1.5: GFI examples

- 8.2: Null values in time series data

- 9: Data aggregation

- 9.1: Single-level aggregation

- 9.2: Multi-level aggregation

- 9.3: Aggregates and functions for multilevel grouping

- 9.4: Aggregate expressions for GROUP BY

- 9.5: Pre-aggregating data in projections

- 9.5.1: Live aggregate projections

- 9.5.1.1: Functions supported for live aggregate projections

- 9.5.1.2: Creating live aggregate projections

- 9.5.1.3: Live aggregate projection example

- 9.5.2: Top-k projections

- 9.5.2.1: Creating top-k projections

- 9.5.2.2: Top-k projection examples

- 9.5.3: Pre-aggregating UDTF results

- 9.5.4: Aggregating data through expressions

- 9.5.5: Aggregation information in system tables

1 - Queries

Queries are database operations that retrieve data from one or more tables or views. In Vertica, the top-level SELECT statement is the query, and a query nested within another SQL statement is called a subquery.

Vertica is designed to run the same SQL standard queries that run on other databases. However, there are some differences between Vertica queries and queries used in other relational database management systems.

The Vertica transaction model is different from the SQL standard in a way that has a profound effect on query performance. You can:

-

Run a query on a static backup of the database from any specific date and time. Doing so avoids holding locks or blocking other database operations.

-

Use a subset of the standard SQL isolation levels and access modes (read/write or read-only) for a user session.

In Vertica, the primary structure of a SQL query is its statement. Each statement ends with a semicolon, and you can write multiple queries separated by semicolons; for example:

=> CREATE TABLE t1( ..., date_col date NOT NULL, ...);

=> CREATE TABLE t2( ..., state VARCHAR NOT NULL, ...);

1.1 - Historical queries

Vertica can execute historical queries, which execute on a snapshot of the database taken at a specific timestamp or epoch. Historical queries can be used to evaluate and possibly recover data that was deleted but has not yet been purged.

You specify a historical query by qualifying the

SELECT statement with an AT epoch clause, where epoch is one of the following:

-

EPOCH LATEST: Return data up to but not including the current epoch. The result set includes data from the latest committed DML transaction.

-

EPOCH

integer: Return data up to and including theinteger-specified epoch. -

TIME '

timestamp': Return data from thetimestamp-specified epoch.

Note

These options are ignored if used to query temporary or external tables.See Epochs for additional information about how Vertica uses epochs.

Historical queries return data only from the specified epoch. Because they do not return the latest data, historical queries hold no locks or blocking write operations.

Query results are private to the transaction and valid only for the length of the transaction. Query execution is the same regardless of the transaction isolation level.

Restrictions

-

The specified epoch, or epoch of the specified timestamp, cannot be less than the Ancient History Mark epoch.

-

Vertica does not support running historical queries on temporary tables.

Important

Any changes to a table schema are reflected across all epochs. For example, if you add a column to a table and specify a default value for it, all historical queries on that table display the new column and its default value.1.2 - Temporary tables

You can use the CREATE TEMPORARY TABLE statement to implement certain queries using multiple steps:

-

Create one or more temporary tables.

-

Execute queries and store the result sets in the temporary tables.

-

Execute the main query using the temporary tables as if they were a normal part of the logical schema.

See CREATE TEMPORARY TABLE in the SQL Reference Manual for details.

1.3 - SQL queries

All DML (Data Manipulation Language) statements can contain queries. This section introduces some of the query types in Vertica, with additional details in later sections.

Note

Many of the examples in this chapter use the VMart schema.Simple queries

Simple queries contain a query against one table. Minimal effort is required to process the following query, which looks for product keys and SKU numbers in the product table:

=> SELECT product_key, sku_number FROM public.product_dimension;

product_key | sku_number

-------------+-----------

43 | SKU-#129

87 | SKU-#250

42 | SKU-#125

49 | SKU-#154

37 | SKU-#107

36 | SKU-#106

86 | SKU-#248

41 | SKU-#121

88 | SKU-#257

40 | SKU-#120

(10 rows)

Tables can contain arrays. You can select the entire array column, an index into it, or the results of a function applied to the array. For more information, see Arrays and sets (collections).

Joins

Joins use a relational operator that combines information from two or more tables. The query's ON clause specifies how tables are combined, such as by matching foreign keys to primary keys. In the following example, the query requests the names of stores with transactions greater than 70 by joining the store key ID from the store schema's sales fact and sales tables:

=> SELECT store_name, COUNT(*) FROM store.store_sales_fact

JOIN store.store_dimension ON store.store_sales_fact.store_key = store.store_dimension.store_key

GROUP BY store_name HAVING COUNT(*) > 70 ORDER BY store_name;

store_name | count

------------+-------

Store49 | 72

Store83 | 78

(2 rows)

For more detailed information, see Joins. See also the Multicolumn subqueries section in Subquery examples.

Cross joins

Also known as the Cartesian product, a cross join is the result of joining every record in one table with every record in another table. A cross join occurs when there is no join key between tables to restrict records. The following query, for example, returns all instances of vendor and store names in the vendor and store tables:

=> SELECT vendor_name, store_name FROM public.vendor_dimension

CROSS JOIN store.store_dimension;

vendor_name | store_name

--------------------+------------

Deal Warehouse | Store41

Deal Warehouse | Store12

Deal Warehouse | Store46

Deal Warehouse | Store50

Deal Warehouse | Store15

Deal Warehouse | Store48

Deal Warehouse | Store39

Sundry Wholesale | Store41

Sundry Wholesale | Store12

Sundry Wholesale | Store46

Sundry Wholesale | Store50

Sundry Wholesale | Store15

Sundry Wholesale | Store48

Sundry Wholesale | Store39

Market Discounters | Store41

Market Discounters | Store12

Market Discounters | Store46

Market Discounters | Store50

Market Discounters | Store15

Market Discounters | Store48

Market Discounters | Store39

Market Suppliers | Store41

Market Suppliers | Store12

Market Suppliers | Store46

Market Suppliers | Store50

Market Suppliers | Store15

Market Suppliers | Store48

Market Suppliers | Store39

... | ...

(4000 rows)

This example's output is truncated because this particular cross join returned several thousand rows. See also Cross joins.

Subqueries

A subquery is a query nested within another query. In the following example, we want a list of all products containing the highest fat content. The inner query (subquery) returns the product containing the highest fat content among all food products to the outer query block (containing query). The outer query then uses that information to return the names of the products containing the highest fat content.

=> SELECT product_description, fat_content FROM public.product_dimension

WHERE fat_content IN

(SELECT MAX(fat_content) FROM public.product_dimension

WHERE category_description = 'Food' AND department_description = 'Bakery')

LIMIT 10;

product_description | fat_content

-------------------------------------+-------------

Brand #59110 hotdog buns | 90

Brand #58107 english muffins | 90

Brand #57135 english muffins | 90

Brand #54870 cinnamon buns | 90

Brand #53690 english muffins | 90

Brand #53096 bagels | 90

Brand #50678 chocolate chip cookies | 90

Brand #49269 wheat bread | 90

Brand #47156 coffee cake | 90

Brand #43844 corn muffins | 90

(10 rows)

For more information, see Subqueries.

Sorting queries

Use the ORDER BY clause to order the rows that a query returns.

Special note about query results

You could get different results running certain queries on one machine or another for the following reasons:

-

Partitioning on a

FLOATtype could return nondeterministic results because of the precision, especially when the numbers are close to one another, such as results from theRADIANS()function, which has a very small range of output.To get deterministic results, use

NUMERICif you must partition by data that is not anINTEGERtype. -

Most analytics (with analytic aggregations, such as

MIN()/MAX()/SUM()/COUNT()/AVG()as exceptions) rely on a unique order of input data to get deterministic result. If the analytic window-order clause cannot resolve ties in the data, results could be different each time you run the query.For example, in the following query, the analytic

ORDER BYdoes not include the first column in the query,promotion_key. So for a tie ofAVG(RADIANS(cost_dollar_amount)), product_version, the samepromotion_keycould have different positions within the analytic partition, resulting in a differentNTILE()number. Thus,DISTINCTcould also have a different result:=> SELECT COUNT(*) FROM (SELECT DISTINCT SIN(FLOOR(MAX(store.store_sales_fact.promotion_key))), NTILE(79) OVER(PARTITION BY AVG (RADIANS (store.store_sales_fact.cost_dollar_amount )) ORDER BY store.store_sales_fact.product_version) FROM store.store_sales_fact GROUP BY store.store_sales_fact.product_version, store.store_sales_fact.sales_dollar_amount ) AS store; count ------- 1425 (1 row)If you add

MAX(promotion_key)to analyticORDER BY, the results are the same on any machine:=> SELECT COUNT(*) FROM (SELECT DISTINCT MAX(store.store_sales_fact.promotion_key), NTILE(79) OVER(PARTITION BY MAX(store.store_sales_fact.cost_dollar_amount) ORDER BY store.store_sales_fact.product_version, MAX(store.store_sales_fact.promotion_key)) FROM store.store_sales_fact GROUP BY store.store_sales_fact.product_version, store.store_sales_fact.sales_dollar_amount) AS store;

1.4 - Arrays and sets (collections)

Tables can include collections (arrays or sets). An ARRAY is an ordered collection of elements that allows duplicate values, and a SET is an unordered collection of unique values.

Consider an orders table with columns for product keys, customer keys, order prices, and order date, with some containing arrays. A basic query in Vertica results in the following:

=> SELECT * FROM orders LIMIT 5;

orderkey | custkey | prodkey | orderprices | orderdate

----------+---------+------------------------+-----------------------------+------------

19626 | 91 | ["P1262","P68","P101"] | ["192.59","49.99","137.49"] | 2021-03-14

25646 | 716 | ["P997","P31","P101"] | ["91.39","29.99","147.49"] | 2021-03-14

25647 | 716 | ["P12"] | ["8.99"] | 2021-03-14

19743 | 161 | ["P68","P101"] | ["49.99","137.49"] | 2021-03-15

19888 | 241 | ["P1262","P101"] | ["197.59","142.49"] | 2021-03-15

(5 rows)

As shown in this example, array values are returned in JSON format. Set values are also returned in JSON array format.

You can access elements of nested arrays (multi-dimensional arrays) with multiple indexes:

=> SELECT host, pingtimes FROM network_tests;

host | pingtimes

------+-------------------------------------------------------

eng1 | [[24.24,25.27,27.16,24.97], [23.97,25.01,28.12,29.50]]

eng2 | [[27.12,27.91,28.11,26.95], [29.01,28.99,30.11,31.56]]

qa1 | [[23.15,25.11,24.63,23.91], [22.85,22.86,23.91,31.52]]

(3 rows)

=> SELECT pingtimes[0] FROM network_tests;

pingtimes

-------------------------

[24.24,25.27,27.16,24.97]

[27.12,27.91,28.11,26.95]

[23.15,25.11,24.63,23.91]

(3 rows)

=> SELECT pingtimes[0][0] FROM network_tests;

pingtimes

-----------

24.24

27.12

23.15

(3 rows)

Vertica supports several functions to manipulate arrays and sets.

Consider the orders table, which has an array of product keys for all items purchased in a single order. You can use the APPLY_COUNT_ELEMENTS function to find out how many items each order contains (excluding null values):

=> SELECT APPLY_COUNT_ELEMENTS(prodkey) FROM orders LIMIT 5;

apply_count_elements

--------------------

3

2

2

3

1

(5 rows)

Vertica also supports aggregate functions for collections. Consider a column with an array of prices for items purchased in a single order. You can use the APPLY_SUM function to find the total amount spent for each order:

=> SELECT APPLY_SUM(orderprices) FROM orders LIMIT 5;

apply_sum

-----------

380.07

187.48

340.08

268.87

8.99

(5 rows)

Most of the array functions operate only on one-dimensional arrays. To use them with multi-dimensional arrays, first dereference one dimension:

=> SELECT APPLY_MAX(pingtimes[0]) FROM network_tests;

apply_max

-----------

27.16

28.11

25.11

(3 rows)

See Collection functions for a comprehensive list of functions.

You can include both column names and literal values in queries. The following example returns the product keys for orders where the number of items in each order is three or more:

=> SELECT prodkey FROM orders WHERE APPLY_COUNT_ELEMENTS(prodkey)>2;

prodkey

------------------------

["P1262","P68","P101"]

["P997","P31","P101"]

(2 rows)

You can use aggregate functions in a WHERE clause:

=> SELECT custkey, cust_custname, cust_email, orderkey, prodkey, orderprices FROM orders

JOIN cust ON custkey = cust_custkey

WHERE APPLY_SUM(orderprices)>150 ;

custkey| cust_custname | cust_email | orderkey | prodkey | orderprices

-------+------------------+---------------------------+--------------+--------------------------------========---+---------------------------

342799 | "Ananya Patel" | "ananyapatel98@gmail.com" | "113-341987" | ["MG-7190","VA-4028","EH-1247","MS-7018"] | [60.00,67.00,22.00,14.99]

342845 | "Molly Benton" | "molly_benton@gmail.com" | "111-952000" | ["ID-2586","IC-9010","MH-2401","JC-1905"] | [22.00,35.00,90.00,12.00]

342989 | "Natasha Abbasi" | "natsabbasi@live.com" | "111-685238" | ["HP-4024"] | [650.00]

342176 | "Jose Martinez" | "jmartinez@hotmail.com" | "113-672238" | ["HP-4768","IC-9010"] | [899.00,60.00]

342845 | "Molly Benton" | "molly_benton@gmail.com" | "113-864153" | ["AE-7064","VA-4028","GW-1808"] | [72.00,99.00,185.00]

(5 rows)

Element data types

Collections support elements of any scalar type, arrays, or structs (ROW). In the following version of the orders table, an array of ROW elements contains information about all shipments for an order:

=> CREATE TABLE orders(

orderid INT,

accountid INT,

shipments ARRAY[

ROW(

shipid INT,

address ROW(

street VARCHAR,

city VARCHAR,

zip INT

),

shipdate DATE

)

]

);

Some orders consist of more than one shipment. Line breaks have been inserted into the following output for legibility:

=> SELECT * FROM orders;

orderid | accountid | shipments

---------+-----------+---------------------------------------------------------------------------------------------------------------

99123 | 17 | [{"shipid":1,"address":{"street":"911 San Marcos St","city":"Austin","zip":73344},"shipdate":"2020-11-05"},

{"shipid":2,"address":{"street":"100 main St Apt 4B","city":"Pasadena","zip":91001},"shipdate":"2020-11-06"}]

99149 | 139 | [{"shipid":3,"address":{"street":"100 main St Apt 4B","city":"Pasadena","zip":91001},"shipdate":"2020-11-06"}]

99162 | 139 | [{"shipid":4,"address":{"street":"100 main St Apt 4B","city":"Pasadena","zip":91001},"shipdate":"2020-11-04"},

{"shipid":5,"address":{"street":"100 Main St Apt 4A","city":"Pasadena","zip":91001},"shipdate":"2020-11-11"}]

(3 rows)

You can use array indexing and ROW field selection together in queries:

=> SELECT orderid, shipments[0].shipdate AS ship1, shipments[1].shipdate AS ship2 FROM orders;

orderid | ship1 | ship2

---------+------------+------------

99123 | 2020-11-05 | 2020-11-06

99149 | 2020-11-06 |

99162 | 2020-11-04 | 2020-11-11

(3 rows)

This example selects specific array indices. To access all entries, use EXPLODE. To search or filter elements, see Searching and Filtering.

Some data formats have a map type, which is a set of key/value pairs. Vertica does not directly support querying maps, but you can define a map column as an array of structs and query that. In the following example, the prods column in the data is a map:

=> CREATE EXTERNAL TABLE orders

(orderkey INT,

custkey INT,

prods ARRAY[ROW(key VARCHAR(10), value DECIMAL(12,2))],

orderdate DATE

) AS COPY FROM '...' PARQUET;

=> SELECT orderkey, prods FROM orders;

orderkey | prods

----------+--------------------------------------------------------------------------------------------------

19626 | [{"key":"P68","value":"49.99"},{"key":"P1262","value":"192.59"},{"key":"P101","value":"137.49"}]

25646 | [{"key":"P997","value":"91.39"},{"key":"P101","value":"147.49"},{"key":"P31","value":"29.99"}]

25647 | [{"key":"P12","value":"8.99"}]

19743 | [{"key":"P68","value":"49.99"},{"key":"P101","value":"137.49"}]

19888 | [{"key":"P1262","value":"197.59"},{"key":"P101","value":"142.49"}]

(5 rows)

You cannot use complex columns in CREATE TABLE AS SELECT (CTAS). This restriction applies for the entire column or for field selection within it.

Ordering and grouping

You can use Comparison operators with collections of scalar values. Null collections are ordered last. Otherwise, collections are compared element by element until there is a mismatch, and then they are ordered based on the non-matching elements. If all elements are equal up to the length of the shorter one, then the shorter one is ordered first.

You can use collections in the ORDER BY and GROUP BY clauses of queries. The following example shows ordering query results by an array column:

=> SELECT * FROM employees

ORDER BY grant_values;

id | department | grants | grant_values

----+------------+--------------------------+----------------

36 | Astronomy | ["US-7376","DARPA-1567"] | [5000,4000]

36 | Physics | ["US-7376","DARPA-1567"] | [10000,25000]

33 | Physics | ["US-7376"] | [30000]

42 | Physics | ["US-7376","DARPA-1567"] | [65000,135000]

(4 rows)

The following example queries the same table using GROUP BY:

=> SELECT department, grants, SUM(apply_sum(grant_values))

FROM employees

GROUP BY grants, department;

department | grants | SUM

------------+--------------------------+--------

Physics | ["US-7376","DARPA-1567"] | 235000

Astronomy | ["US-7376","DARPA-1567"] | 9000

Physics | ["US-7376"] | 30000

(3 rows)

See the "Functions and Operators" section on the ARRAY reference page for information on how Vertica orders collections. (The same information is also on the SET reference page.)

Null semantics

Null semantics for collections are consistent with normal columns in most regards. See NULL sort order for more information on null-handling.

The null-safe equality operator (<=>) behaves differently from equality (=) when the collection is null rather than empty. Comparing a collection to NULL strictly returns null:

=> SELECT ARRAY[1,3] = NULL;

?column?

----------

(1 row)

=> SELECT ARRAY[1,3] <=> NULL;

?column?

----------

f

(1 row)

In the following example, the grants column in the table is null for employee 99:

=> SELECT grants = NULL FROM employees WHERE id=99;

?column?

----------

(1 row)

=> SELECT grants <=> NULL FROM employees WHERE id=99;

?column?

----------

t

(1 row)

Empty collections are not null and behave as expected:

=> SELECT ARRAY[]::ARRAY[INT] = ARRAY[]::ARRAY[INT];

?column?

----------

t

(1 row)

Collections are compared element by element. If a comparison depends on a null element, the result is unknown (null), not false. For example, ARRAY[1,2,null]=ARRAY[1,2,null] and ARRAY[1,2,null]=ARRAY[1,2,3] both return null, but ARRAY[1,2,null]=ARRAY[1,4,null] returns false because the second elements do not match.

Out-of-bound indexes into collections return NULL:

=> SELECT prodkey[2] FROM orders LIMIT 4;

prodkey

---------

"EH-1247"

"MH-2401"

(4 rows)

The results of the query return NULL for two out of four rows, the first and the fourth, because the specified index is greater than the size of those arrays.

Casting

When the data type of an expression value is unambiguous, it is implicitly coerced to match the expected data type. However, there can be ambiguity about the data type of an expression. Write an explicit cast to avoid the default:

=> SELECT APPLY_SUM(ARRAY['1','2','3']);

ERROR 5595: Invalid argument type VarcharArray1D in function apply_sum

=> SELECT APPLY_SUM(ARRAY['1','2','3']::ARRAY[INT]);

apply_sum

-----------

6

(1 row)

You can cast arrays or sets of one scalar type to arrays or sets of other (compatible) types, following the same rules as for casting scalar values. Casting a collection casts each element of that collection. Casting an array to a set also removes any duplicates.

You can cast arrays (but not sets) with elements that are arrays or structs (or combinations):

=> SELECT shipments::ARRAY[ROW(id INT,addr ROW(VARCHAR,VARCHAR,INT),shipped DATE)]

FROM orders;

shipments

---------------------------------------------------------------------------

[{"id":1,"addr":{"street":"911 San Marcos St","city":"Austin","zip":73344},"shipped":"2020-11-05"},

{"id":2,"addr":{"street":"100 main St Apt 4B","city":"Pasadena","zip":91001},"shipped":"2020-11-06"}]

[{"id":3,"addr":{"street":"100 main St Apt 4B","city":"Pasadena","zip":91001},"shipped":"2020-11-06"}]

[{"id":4,"addr":{"street":"100 main St Apt 4B","city":"Pasadena","zip":91001},"shipped":"2020-11-04"},

{"id":5,"addr":{"street":"100 Main St Apt 4A","city":"Pasadena","zip":91001},"shipped":"2020-11-11"}]

(3 rows)

You can change the bound of an array or set by casting. When casting to a bounded native array, inputs that are too long are truncated. When casting to a multi-dimensional array, if the new bounds are too small for the data the cast fails:

=> SELECT ARRAY[1,2,3]::ARRAY[VARCHAR,2];

array

-----------

["1","2"]

(1 row)

=> SELECT ARRAY[ARRAY[1,2,3],ARRAY[4,5,6]]::ARRAY[ARRAY[VARCHAR,2],2];

ERROR 9227: Output array isn't big enough

DETAIL: Type limit is 4 elements, but value has 6 elements

If you cast to a bounded multi-dimensional array, you must specify the bounds at all levels.

An array or set with a single null element must be explicitly cast because no type can be inferred.

See Data type coercion for more information on casting for data types.

Exploding and imploding array columns

To simplify access to elements, you can use the EXPLODE and UNNEST functions. These functions take one or more array columns from a table and expand them, producing one row per element. You can use EXPLODE and UNNEST when you need to perform aggregate operations across all elements of all arrays. You can also use EXPLODE when you need to operate on individual elements. See Manipulating Elements.

EXPLODE and UNNEST differ in their output:

-

EXPLODE returns two columns for each array, one for the element index and one for the value at that position. If the function explodes a single array, these columns are named

positionandvalueby default. If the function explodes two or more arrays, the columns for each array are namedpos_column-nameandval_column-name. -

UNNEST returns only the values. For a single array, the output column is named

value. For multiple arrays, each output column is namedval_column-name.

EXPLODE and UNNEST also differ in their inputs. UNNEST accepts only array arguments and expands all of them. EXPLODE can accept other arguments and passes them through, expanding only as many arrays as requested (default 1).

Consider an orders table with the following contents:

=> SELECT orderkey, custkey, prodkey, orderprices, email_addrs

FROM orders LIMIT 5;

orderkey | custkey | prodkey | orderprices | email_addrs

------------+---------+-----------------------------------------------+-----------------------------------+----------------------------------------------------------------------------------------------------------------

113-341987 | 342799 | ["MG-7190 ","VA-4028 ","EH-1247 ","MS-7018 "] | ["60.00","67.00","22.00","14.99"] | ["bob@example,com","robert.jones@example.com"]

111-952000 | 342845 | ["ID-2586 ","IC-9010 ","MH-2401 ","JC-1905 "] | ["22.00","35.00",null,"12.00"] | ["br92@cs.example.edu"]

111-345634 | 342536 | ["RS-0731 ","SJ-2021 "] | ["50.00",null] | [null]

113-965086 | 342176 | ["GW-1808 "] | ["108.00"] | ["joe.smith@example.com"]

111-335121 | 342321 | ["TF-3556 "] | ["50.00"] | ["789123@example-isp.com","alexjohnson@example.com","monica@eng.example.com","sara@johnson.example.name",null]

(5 rows)

The following query explodes the order prices for a single customer. The other two columns are passed through and are repeated for each returned row:

=> SELECT EXPLODE(orderprices, custkey, email_addrs

USING PARAMETERS skip_partitioning=true)

AS (position, orderprices, custkey, email_addrs)

FROM orders WHERE custkey='342845' ORDER BY orderprices;

position | orderprices | custkey | email_addrs

----------+-------------+---------+------------------------------

2 | | 342845 | ["br92@cs.example.edu",null]

3 | 12.00 | 342845 | ["br92@cs.example.edu",null]

0 | 22.00 | 342845 | ["br92@cs.example.edu",null]

1 | 35.00 | 342845 | ["br92@cs.example.edu",null]

(4 rows)

The previous example uses the skip_partitioning parameter. Instead of setting it for each call to EXPLODE, you can set it as a session parameter. EXPLODE is part of the ComplexTypesLib UDx library. The following example returns the same results:

=> ALTER SESSION SET UDPARAMETER FOR ComplexTypesLib skip_partitioning=true;

=> SELECT EXPLODE(orderprices, custkey, email_addrs)

AS (position, orderprices, custkey, email_addrs)

FROM orders WHERE custkey='342845' ORDER BY orderprices;

You can explode more than one column by specifying the explode_count parameter:

=> SELECT EXPLODE(orderkey, prodkey, orderprices

USING PARAMETERS explode_count=2, skip_partitioning=true)

AS (orderkey,pk_idx,pk_val,ord_idx,ord_val)

FROM orders

WHERE orderkey='113-341987';

orderkey | pk_idx | pk_val | ord_idx | ord_val

------------+--------+----------+---------+---------

113-341987 | 0 | MG-7190 | 0 | 60.00

113-341987 | 0 | MG-7190 | 1 | 67.00

113-341987 | 0 | MG-7190 | 2 | 22.00

113-341987 | 0 | MG-7190 | 3 | 14.99

113-341987 | 1 | VA-4028 | 0 | 60.00

113-341987 | 1 | VA-4028 | 1 | 67.00

113-341987 | 1 | VA-4028 | 2 | 22.00

113-341987 | 1 | VA-4028 | 3 | 14.99

113-341987 | 2 | EH-1247 | 0 | 60.00

113-341987 | 2 | EH-1247 | 1 | 67.00

113-341987 | 2 | EH-1247 | 2 | 22.00

113-341987 | 2 | EH-1247 | 3 | 14.99

113-341987 | 3 | MS-7018 | 0 | 60.00

113-341987 | 3 | MS-7018 | 1 | 67.00

113-341987 | 3 | MS-7018 | 2 | 22.00

113-341987 | 3 | MS-7018 | 3 | 14.99

(16 rows)

If you do not need the element positions, you can use UNNEST:

=> SELECT orderkey, UNNEST(prodkey, orderprices)

FROM orders WHERE orderkey='113-341987';

orderkey | val_prodkey | val_orderprices

------------+-------------+-----------------

113-341987 | MG-7190 | 60.00

113-341987 | MG-7190 | 67.00

113-341987 | MG-7190 | 22.00

113-341987 | MG-7190 | 14.99

113-341987 | VA-4028 | 60.00

113-341987 | VA-4028 | 67.00

113-341987 | VA-4028 | 22.00

113-341987 | VA-4028 | 14.99

113-341987 | EH-1247 | 60.00

113-341987 | EH-1247 | 67.00

113-341987 | EH-1247 | 22.00

113-341987 | EH-1247 | 14.99

113-341987 | MS-7018 | 60.00

113-341987 | MS-7018 | 67.00

113-341987 | MS-7018 | 22.00

113-341987 | MS-7018 | 14.99

(16 rows)

The following example uses a multi-dimensional array:

=> SELECT name, pingtimes FROM network_tests;

name | pingtimes

------+-------------------------------------------------------

eng1 | [[24.24,25.27,27.16,24.97],[23.97,25.01,28.12,29.5]]

eng2 | [[27.12,27.91,28.11,26.95],[29.01,28.99,30.11,31.56]]

qa1 | [[23.15,25.11,24.63,23.91],[22.85,22.86,23.91,31.52]]

(3 rows)

=> SELECT EXPLODE(name, pingtimes USING PARAMETERS explode_count=1) OVER()

FROM network_tests;

name | position | value

------+----------+---------------------------

eng1 | 0 | [24.24,25.27,27.16,24.97]

eng1 | 1 | [23.97,25.01,28.12,29.5]

eng2 | 0 | [27.12,27.91,28.11,26.95]

eng2 | 1 | [29.01,28.99,30.11,31.56]

qa1 | 0 | [23.15,25.11,24.63,23.91]

qa1 | 1 | [22.85,22.86,23.91,31.52]

(6 rows)

You can rewrite the previous query as follows to produce the same results:

=> SELECT name, EXPLODE(pingtimes USING PARAMETERS skip_partitioning=true)

FROM network_tests;

The IMPLODE function is the inverse of EXPLODE and UNNEST: it takes a column and produces an array containing the column's values. You can use WITHIN GROUP ORDER BY to control the order of elements in the imploded array. Combined with GROUP BY, IMPLODE can be used to reverse an EXPLODE operation.

If the output array would be too large for the column, IMPLODE returns an error. To avoid this, you can set the allow_truncate parameter to omit some elements from the results. Truncation never applies to individual elements; for example, the function does not shorten strings.

Searching and filtering

You can search array elements without having to first explode the array using the following functions:

-

CONTAINS: tests whether an array contains an element

-

ARRAY_FIND: returns the position of the first matching element

-

FILTER: returns an array containing only matching elements from an input array

You can use CONTAINS and ARRAY_FIND to search for specific elements:

=> SELECT CONTAINS(email, 'frank@example.com') FROM people;

CONTAINS

----------

f

t

f

f

(4 rows)

Suppose, instead of finding a particular address, you want to find all of the people who use an example.com email address. Instead of specifying a literal value, you can supply a lambda function to test elements. A lambda function has the following syntax:

argument -> expression

The lambda function takes the place of the second argument:

=> SELECT CONTAINS(email, e -> REGEXP_LIKE(e,'example.com','i')) FROM people;

CONTAINS

----------

f

t

f

t

(4 rows)

The FILTER function tests array elements, like ARRAY_FIND and CONTAINS, but then returns an array containing only the elements that match the filter:

=> SELECT name, email FROM people;

name | email

----------------+-------------------------------------------------

Elaine Jackson | ["ejackson@somewhere.org","elaine@jackson.com"]

Frank Adams | ["frank@example.com"]

Lee Jones | ["lee.jones@somewhere.org"]

M Smith | ["msmith@EXAMPLE.COM","ms@MSMITH.COM"]

(4 rows)

=> SELECT name, FILTER(email, e -> NOT REGEXP_LIKE(e,'example.com','i')) AS 'real_email'

FROM people;

name | real_email

----------------+-------------------------------------------------

Elaine Jackson | ["ejackson@somewhere.org","elaine@jackson.com"]

Frank Adams | []

Lee Jones | ["lee.jones@somewhere.org"]

M Smith | ["ms@MSMITH.COM"]

(4 rows)

To filter out entire rows without real email addresses, test the array length after applying the filter:

=> SELECT name, FILTER(email, e -> NOT REGEXP_LIKE(e,'example.com','i')) AS 'real_email'

FROM people

WHERE ARRAY_LENGTH(real_email) > 0;

name | real_email

----------------+-------------------------------------------------

Elaine Jackson | ["ejackson@somewhere.org","elaine@jackson.com"]

Lee Jones | ["lee.jones@somewhere.org"]

M Smith | ["ms@MSMITH.COM"]

(3 rows)

The lambda function has an optional second argument, which is the element index. For an example that uses the index argument, see Lambda functions.

Manipulating elements

You can filter array elements using the FILTER function as explained in Searching and Filtering. Filtering does not allow for operations on the filtered elements. For this case, you can use FILTER, EXPLODE, and IMPLODE together.

Consider a table of people, where each row has a person's name and an array of email addresses, some invalid:

=> SELECT * FROM people;

id | name | email

----+----------------+-------------------------------------------------

56 | Elaine Jackson | ["ejackson@somewhere.org","elaine@jackson.com"]

61 | Frank Adams | ["frank@example.com"]

87 | Lee Jones | ["lee.jones@somewhere.org"]

91 | M Smith | ["msmith@EXAMPLE.COM","ms@MSMITH.COM"]

(4 rows)

Email addresses are case-insensitive but VARCHAR values are not. The following example filters out invalid email addresses and normalizes the remaining ones by converting them to lowercase. The order of operations for each row is:

-

Filter each array to remove addresses using

example.com, partitioning by name. -

Explode the filtered array.

-

Convert each element to lowercase.

-

Implode the lowercase elements, grouping by name.

=> WITH exploded AS

(SELECT EXPLODE(FILTER(email, e -> NOT REGEXP_LIKE(e, 'example.com', 'i')), name)

OVER (PARTITION BEST) AS (pos, addr, name)

FROM people)

SELECT name, IMPLODE(LOWER(addr)) AS email

FROM exploded GROUP BY name;

name | email

----------------+-------------------------------------------------

Elaine Jackson | ["ejackson@somewhere.org","elaine@jackson.com"]

Lee Jones | ["lee.jones@somewhere.org"]

M Smith | ["ms@msmith.com"]

(3 rows)

Because the second row in the original table did not have any remaining email addresses after the filter step, there was nothing to partition by. Therefore, the row does not appear in the results at all.

1.5 - Rows (structs)

Tables can include columns of the ROW data type. A ROW, sometimes called a struct, is a set of typed property-value pairs.

Consider a table of customers with columns for name, address, and an ID. The address is a ROW with fields for the elements of an address (street, city, and postal code). As shown in this example, ROW values are returned in JSON format:

=> SELECT * FROM customers ORDER BY accountID;

name | address | accountID

--------------------+--------------------------------------------------------------------+-----------

Missy Cooper | {"street":"911 San Marcos St","city":"Austin","zipcode":73344} | 17

Sheldon Cooper | {"street":"100 Main St Apt 4B","city":"Pasadena","zipcode":91001} | 139

Leonard Hofstadter | {"street":"100 Main St Apt 4A","city":"Pasadena","zipcode":91001} | 142

Leslie Winkle | {"street":"23 Fifth Ave Apt 8C","city":"Pasadena","zipcode":91001} | 198

Raj Koothrappali | {"street":null,"city":"Pasadena","zipcode":91001} | 294

Stuart Bloom | | 482

(6 rows)

Most values are cast to UTF-8 strings, as shown for street and city here. Integers and booleans are cast to JSON Numerics and thus not quoted.

Use dot notation (column.field) to access individual fields:

=> SELECT address.city FROM customers;

city

----------

Pasadena

Pasadena

Pasadena

Pasadena

Austin

(6 rows)

In the following example, the contact information in the customers table has an email field, which is an array of addresses:

=> SELECT name, contact.email FROM customers;

name | email

--------------------+---------------------------------------------

Missy Cooper | ["missy@mit.edu","mcooper@cern.gov"]

Sheldon Cooper | ["shelly@meemaw.name","cooper@caltech.edu"]

Leonard Hofstadter | ["hofstadter@caltech.edu"]

Leslie Winkle | []

Raj Koothrappali | ["raj@available.com"]

Stuart Bloom |

(6 rows)

You can use ROW columns or specific fields to restrict queries, as in the following example:

=> SELECT address FROM customers WHERE address.city ='Pasadena';

address

--------------------------------------------------------------------

{"street":"100 Main St Apt 4B","city":"Pasadena","zipcode":91001}

{"street":"100 Main St Apt 4A","city":"Pasadena","zipcode":91001}

{"street":"23 Fifth Ave Apt 8C","city":"Pasadena","zipcode":91001}

{"street":null,"city":"Pasadena","zipcode":91001}

(4 rows)

You can use the ROW syntax to specify literal values, such as the address in the WHERE clause in the following example:

=> SELECT name,address FROM customers

WHERE address = ROW('100 Main St Apt 4A','Pasadena',91001);

name | address

--------------------+-------------------------------------------------------------------

Leonard Hofstadter | {"street":"100 Main St Apt 4A","city":"Pasadena","zipcode":91001}

(1 row)

You can join on field values as you would from any other column:

=> SELECT accountID,department from customers JOIN employees

ON customers.name=employees.personal.name;

accountID | department

-----------+------------

139 | Physics

142 | Physics

294 | Astronomy

You can join on full structs. The following example joins the addresses in the employees and customers tables:

=> SELECT employees.personal.name,customers.accountID FROM employees

JOIN customers ON employees.personal.address=customers.address;

name | accountID

--------------------+-----------

Sheldon Cooper | 139

Leonard Hofstadter | 142

(2 rows)

You can cast structs, optionally specifying new field names:

=> SELECT contact::ROW(str VARCHAR, city VARCHAR, zip VARCHAR, email ARRAY[VARCHAR,

20]) FROM customers;

contact

--------------------------------------------------------------------------------

----------------------------------

{"str":"911 San Marcos St","city":"Austin","zip":"73344","email":["missy@mit.ed

u","mcooper@cern.gov"]}

{"str":"100 Main St Apt 4B","city":"Pasadena","zip":"91001","email":["shelly@me

emaw.name","cooper@caltech.edu"]}

{"str":"100 Main St Apt 4A","city":"Pasadena","zip":"91001","email":["hofstadte

r@caltech.edu"]}

{"str":"23 Fifth Ave Apt 8C","city":"Pasadena","zip":"91001","email":[]}

{"str":null,"city":"Pasadena","zip":"91001","email":["raj@available.com"]}

(6 rows)

You can use structs in views and in subqueries, as in the following example:

=> CREATE VIEW neighbors (num_neighbors, area(city, zipcode))

AS SELECT count(*), ROW(address.city, address.zipcode)

FROM customers GROUP BY address.city, address.zipcode;

CREATE VIEW

=> SELECT employees.personal.name, neighbors.area FROM neighbors, employees

WHERE employees.personal.address.zipcode=neighbors.area.zipcode AND neighbors.nu

m_neighbors > 1;

name | area

--------------------+-------------------------------------

Sheldon Cooper | {"city":"Pasadena","zipcode":91001}

Leonard Hofstadter | {"city":"Pasadena","zipcode":91001}

(2 rows)

If a reference is ambiguous, Vertica prefers column names over field names.

You can use many operators and predicates with ROW columns, including JOIN, GROUP BY, ORDER BY, IS [NOT] NULL, and comparison operations in nullable filters. Some operators do not logically apply to structured data and are not supported. See the ROW reference page for a complete list.

1.6 - Subqueries

A subquery is a SELECT statement embedded within another SELECT statement. The embedded subquery is often referenced as the query's inner statement, while the containing query is typically referenced as the query's statement, or outer query block. A subquery returns data that the outer query uses as a condition to determine what data to retrieve. There is no limit to the number of nested subqueries you can create.

Like any query, a subquery returns records from a table that might consist of a single column and record, a single column with multiple records, or multiple columns and records. Subqueries can be noncorrelated or correlated. You can also use them to update or delete table records, based on values in other database tables.

1.6.1 - Subqueries used in search conditions

Subqueries are used as search conditions in order to filter results. They specify the conditions for the rows returned from the containing query's select-list, a query expression, or the subquery itself. The operation evaluates to TRUE, FALSE, or UNKNOWN (NULL).

Syntax

search-condition {

[ { AND | OR | NOT } { predicate | ( search-condition ) } ]

}[,... ]

predicate

{ expression comparison-operator expression

| string-expression [ NOT ] { LIKE | ILIKE | LIKEB | ILIKEB } string-expression

| expression IS [ NOT ] NULL

| expression [ NOT ] IN ( subquery | expression[,... ] )

| expression comparison-operator [ ANY | SOME ] ( subquery )

| expression comparison-operator ALL ( subquery )

| expression OR ( subquery )

| [ NOT ] EXISTS ( subquery )

| [ NOT ] IN ( subquery )

}

Arguments

search-condition |

Specifies the search conditions for the rows returned from one of the following:

If the subquery is used with an UPDATE or DELETE statement, UPDATE specifies the rows to update and DELETE specifies the rows to delete. |

{ AND | OR | NOT } |

Logical operators:

|

predicate |

An expression that returns TRUE, FALSE, or UNKNOWN (NULL). |

expression |

A column name, constant, function, or scalar subquery, or combination of column names, constants, and functions connected by operators or subqueries. |

comparison-operator |

An operator that tests conditions between two expressions, one of the following:

|

string-expression |

A character string with optional wildcard (*) characters. |

[ NOT ] { LIKE | ILIKE | LIKEB | ILIKEB } |

Indicates that the character string following the predicate is to be used (or not used) for pattern matching. |

IS [ NOT ] NULL |

Searches for values that are null or are not null. |

ALL |

Used with a comparison operator and a subquery. Returns TRUE for the left-hand predicate if all values returned by the subquery satisfy the comparison operation, or FALSE if not all values satisfy the comparison or if the subquery returns no rows to the outer query block. |

ANY | SOME |

ANY and SOME are synonyms and are used with a comparison operator and a subquery. Either returns TRUE for the left-hand predicate if any value returned by the subquery satisfies the comparison operation, or FALSE if no values in the subquery satisfy the comparison or if the subquery returns no rows to the outer query block. Otherwise, the expression is UNKNOWN. |

[ NOT ] EXISTS |

Used with a subquery to test for the existence of records that the subquery returns. |

[ NOT ] IN |

Searches for an expression on the basis of an expression's exclusion or inclusion from a list. The list of values is enclosed in parentheses and can be a subquery or a set of constants. |

Expressions as subqueries

Subqueries that return a single value (unlike a list of values returned by IN subqueries) can generally be used anywhere an expression is allowed in SQL: a column name, constant, function, scalar subquery, or a combination of column names, constants, and functions connected by operators or subqueries.

For example:

=> SELECT c1 FROM t1 WHERE c1 = ANY (SELECT c1 FROM t2) ORDER BY c1;

=> SELECT c1 FROM t1 WHERE COALESCE((t1.c1 > ANY (SELECT c1 FROM t2)), TRUE);

=> SELECT c1 FROM t1 GROUP BY c1 HAVING

COALESCE((t1.c1 <> ALL (SELECT c1 FROM t2)), TRUE);

Multi-column expressions are also supported:

=> SELECT c1 FROM t1 WHERE (t1.c1, t1.c2) = ALL (SELECT c1, c2 FROM t2);

=> SELECT c1 FROM t1 WHERE (t1.c1, t1.c2) <> ANY (SELECT c1, c2 FROM t2);

Vertica returns an error on queries where more than one row would be returned by any subquery used as an expression:

=> SELECT c1 FROM t1 WHERE c1 = (SELECT c1 FROM t2) ORDER BY c1;

ERROR: more than one row returned by a subquery used as an expression

See also

1.6.2 - Subqueries in the SELECT list

Subqueries can occur in the select list of the containing query. The results from the following statement are ordered by the first column (customer_name). You could also write ORDER BY 2 and specify that the results be ordered by the select-list subquery.

=> SELECT c.customer_name, (SELECT AVG(annual_income) FROM customer_dimension

WHERE deal_size = c.deal_size) AVG_SAL_DEAL FROM customer_dimension c

ORDER BY 1;

customer_name | AVG_SAL_DEAL

---------------+--------------

Goldstar | 603429

Metatech | 628086

Metadata | 666728

Foodstar | 695962

Verihope | 715683

Veridata | 868252

Bettercare | 879156

Foodgen | 958954

Virtacom | 991551

Inicorp | 1098835

...

Notes

-

Scalar subqueries in the select-list return a single row/column value. These subqueries use Boolean comparison operators: =, >, <, <>, <=, >=.

If the query is correlated, it returns NULL if the correlation results in 0 rows. If the query returns more than one row, the query errors out at run time and Vertica displays an error message that the scalar subquery must only return 1 row.

-

Subquery expressions such as [NOT] IN, [NOT] EXISTS, ANY/SOME, or ALL always return a single Boolean value that evaluates to TRUE, FALSE, or UNKNOWN; the subquery itself can have many rows. Most of these queries can be correlated or noncorrelated.

Note

ALL subqueries cannot be correlated. -

Subqueries in the ORDER BY and GROUP BY clauses are supported; for example, the following statement says to order by the first column, which is the select-list subquery:

=> SELECT (SELECT MAX(x) FROM t2 WHERE y=t1.b) FROM t1 ORDER BY 1;

See also

1.6.3 - Noncorrelated and correlated subqueries

Subqueries can be categorized into two types:

-

A noncorrelated subquery obtains its results independently of its containing (outer) statement.

-

A correlated subquery requires values from its outer query in order to execute.

Noncorrelated subqueries

A noncorrelated subquery executes independently of the outer query. The subquery executes first, and then passes its results to the outer query, For example:

=> SELECT name, street, city, state FROM addresses WHERE state IN (SELECT state FROM states);

Vertica executes this query as follows:

-

Executes the subquery

SELECT state FROM states(in bold). -

Passes the subquery results to the outer query.

A query's WHERE and HAVING clauses can specify noncorrelated subqueries if the subquery resolves to a single row, as shown below:

In WHERE clause

=> SELECT COUNT(*) FROM SubQ1 WHERE SubQ1.a = (SELECT y from SubQ2);

In HAVING clause

=> SELECT COUNT(*) FROM SubQ1 GROUP BY SubQ1.a HAVING SubQ1.a = (SubQ1.a & (SELECT y from SubQ2)

Correlated subqueries

A correlated subquery typically obtains values from its outer query before it executes. When the subquery returns, it passes its results to the outer query. Correlated subqueries generally conform to the following format:

SELECT outer-column[,...] FROM t1 outer

WHERE outer-column comparison-operator

(SELECT sq-column[,...] FROM t2 sq

WHERE sq.expr = outer.expr);

Note

You can use an outer join to obtain the same effect as a correlated subquery.In the following example, the subquery needs values from the addresses.state column in the outer query:

=> SELECT name, street, city, state FROM addresses

WHERE EXISTS (SELECT * FROM states WHERE states.state = addresses.state);

Vertica executes this query as follows:

- Extracts and evaluates each

addresses.statevalue in the outer subquery records. - Using the EXISTS predicate, checks addresses in the inner (correlated) subquery.

- Stops processing when it finds the first match.

When Vertica executes this query, it translates the full query into a JOIN WITH SIPS.

1.6.4 - Flattening FROM clause subqueries

FROM clause subqueries are always evaluated before their containing query. In some cases, the optimizer flattens FROM clause subqueries so the query can execute more efficiently.

For example, in order to create a query plan for the following statement, the Vertica query optimizer evaluates all records in table t1 before it evaluates the records in table t0:

=> SELECT * FROM (SELECT a, MAX(a) AS max FROM (SELECT * FROM t1) AS t0 GROUP BY a);

Given the previous query, the optimizer can internally flatten it as follows:

=> SELECT * FROM (SELECT a, MAX(a) FROM t1 GROUP BY a) AS t0;

Both queries return the same results, but the flattened query runs more quickly.

Flattening views

When a query's FROM clause specifies a view, the optimizer expands the view by replacing it with the query that the view encapsulates. If the view contains subqueries that are eligible for flattening, the optimizer produces a query plan that flattens those subqueries.

Flattening restrictions

The optimizer cannot create a flattened query plan if a subquery or view contains one of the following elements:

-

Aggregate function

-

Analytic function

-

Outer join (left, right or full)

-

GROUP BY,ORDER BY, orHAVINGclause -

DISTINCTkeyword -

LIMITorOFFSETclause -

UNION,EXCEPT, orINTERSECTclause -

EXISTSsubquery

Examples

If a predicate applies to a view or subquery, the flattening operation can allow for optimizations by evaluating the predicates before the flattening takes place. Two examples follow.

View flattening

In this example, view v1 is defined as follows:

=> CREATE VIEW v1 AS SELECT * FROM a;

The following query specifies this view:

=> SELECT * FROM v1 JOIN b ON x=y WHERE x > 10;

Without flattening, the optimizer evaluates the query as follows:

-

Evalutes the subquery.

-

Applies the predicate

WHERE x > 10.

In contrast, the optimizer can create a flattened query plan by applying the predicate before evaluating the subquery. This reduces the optimizer's work because it returns only the records WHERE x > 10 to the containing query.

Vertica internally transforms the previous query as follows:

=> SELECT * FROM (SELECT * FROM a) AS t1 JOIN b ON x=y WHERE x > 10;

The optimizer then flattens the query:

=> SELECT * FROM a JOIN b ON x=y WHERE x > 10;

Subquery flattening

The following example shows how Vertica transforms FROM clause subqueries within a WHERE clause IN subquery. Given the following query:

=> SELECT * FROM a

WHERE b IN (SELECT b FROM (SELECT * FROM t2)) AS D WHERE x=1;

The optimizer flattens it as follows:

=> SELECT * FROM a

WHERE b IN (SELECT b FROM t2) AS D WHERE x=1;

See also

Subquery restrictions1.6.5 - Subqueries in UPDATE and DELETE statements

You can nest subqueries within UPDATE and DELETE statements.

UPDATE subqueries

You can update records in one table according to values in others, by nesting a subquery within an UPDATE statement. The example below illustrates this through a couple of noncorrelated subqueries. You can reproduce this example with the following tables:

=> CREATE TABLE addresses(cust_id INTEGER, address VARCHAR(2000));

CREATE TABLE

dbadmin=> INSERT INTO addresses VALUES(20,'Lincoln Street'),(30,'Booth Hill Road'),(30,'Beach Avenue'),(40,'Mt. Vernon Street'),(50,'Hillside Avenue');

OUTPUT

--------

5

(1 row)

=> CREATE TABLE new_addresses(new_cust_id integer, new_address Boolean DEFAULT 'T');

CREATE TABLE

dbadmin=> INSERT INTO new_addresses VALUES (20),(30),(80);

OUTPUT

--------

3

(1 row)

=> INSERT INTO new_addresses VALUES (60,'F');

OUTPUT

--------

1

=> COMMIT;

COMMIT

Queries on these tables return the following results:

=> SELECT * FROM addresses;

cust_id | address

---------+-------------------

20 | Lincoln Street

30 | Beach Avenue

30 | Booth Hill Road

40 | Mt. Vernon Street

50 | Hillside Avenue

(5 rows)

=> SELECT * FROM new_addresses;

new_cust_id | new_address

-------------+-------------

20 | t

30 | t

80 | t

60 | f

(4 rows)

-

The following UPDATE statement uses a noncorrelated subquery to join

new_addressesandaddressesrecords on customer IDs. UPDATE sets the value 'New Address' in the joinedaddressesrecords. The statement output indicates that three rows were updated:=> UPDATE addresses SET address='New Address' WHERE cust_id IN (SELECT new_cust_id FROM new_addresses WHERE new_address='T'); OUTPUT -------- 3 (1 row) -

Query the

addressestable to see the changes for matching customer ID 20 and 30. Addresses for customer ID 40 and 50 are not updated:=> SELECT * FROM addresses; cust_id | address ---------+------------------- 40 | Mt. Vernon Street 50 | Hillside Avenue 20 | New Address 30 | New Address 30 | New Address (5 rows) =>COMMIT; COMMIT

DELETE subqueries

You can delete records in one table based according to values in others by nesting a subquery within a DELETE statement.

For example, you want to remove records from new_addresses that were used earlier to update records in addresses. The following DELETE statement uses a noncorrelated subquery to join new_addresses and addresses records on customer IDs. It then deletes the joined records from table new_addresses:

=> DELETE FROM new_addresses

WHERE new_cust_id IN (SELECT cust_id FROM addresses WHERE address='New Address');

OUTPUT

--------

2

(1 row)

=> COMMIT;

COMMIT

Querying new_addresses confirms that the records were deleted:

=> SELECT * FROM new_addresses;

new_cust_id | new_address

-------------+-------------

60 | f

80 | t

(2 rows)

1.6.6 - Subquery examples

This topic illustrates some of the subqueries you can write. The examples use the VMart example database.

Single-row subqueries

Single-row subqueries are used with single-row comparison operators (=, >=, <=, <>, and <=>) and return exactly one row.

For example, the following query retrieves the name and hire date of the oldest employee in the Vmart database:

=> SELECT employee_key, employee_first_name, employee_last_name, hire_date

FROM employee_dimension

WHERE hire_date = (SELECT MIN(hire_date) FROM employee_dimension);

employee_key | employee_first_name | employee_last_name | hire_date

--------------+---------------------+--------------------+------------

2292 | Mary | Bauer | 1956-01-11

(1 row)

Multiple-row subqueries

Multiple-row subqueries return multiple records.

For example, the following IN clause subquery returns the names of the employees making the highest salary in each of the six regions:

=> SELECT employee_first_name, employee_last_name, annual_salary, employee_region

FROM employee_dimension WHERE annual_salary IN

(SELECT MAX(annual_salary) FROM employee_dimension GROUP BY employee_region)

ORDER BY annual_salary DESC;

employee_first_name | employee_last_name | annual_salary | employee_region

---------------------+--------------------+---------------+-------------------

Alexandra | Sanchez | 992363 | West

Mark | Vogel | 983634 | South

Tiffany | Vu | 977716 | SouthWest

Barbara | Lewis | 957949 | MidWest

Sally | Gauthier | 927335 | East

Wendy | Nielson | 777037 | NorthWest

(6 rows)

Multicolumn subqueries

Multicolumn subqueries return one or more columns. Sometimes a subquery's result set is evaluated in the containing query in column-to-column and row-to-row comparisons.

Note

Multicolumn subqueries can use the <>, !=, and = operators but not the <, >, <=, >= operators.You can substitute some multicolumn subqueries with a join, with the reverse being true as well. For example, the following two queries ask for the sales transactions of all products sold online to customers located in Massachusetts and return the same result set. The only difference is the first query is written as a join and the second is written as a subquery.

| Join query: | Subquery: |

|

|

The following query returns all employees in each region whose salary is above the average:

=> SELECT e.employee_first_name, e.employee_last_name, e.annual_salary,

e.employee_region, s.average

FROM employee_dimension e,

(SELECT employee_region, AVG(annual_salary) AS average

FROM employee_dimension GROUP BY employee_region) AS s

WHERE e.employee_region = s.employee_region AND e.annual_salary > s.average

ORDER BY annual_salary DESC;

employee_first_name | employee_last_name | annual_salary | employee_region | average

---------------------+--------------------+---------------+-----------------+------------------

Doug | Overstreet | 995533 | East | 61192.786013986

Matt | Gauthier | 988807 | South | 57337.8638902996

Lauren | Nguyen | 968625 | West | 56848.4274914089

Jack | Campbell | 963914 | West | 56848.4274914089

William | Martin | 943477 | NorthWest | 58928.2276119403

Luigi | Campbell | 939255 | MidWest | 59614.9170454545

Sarah | Brown | 901619 | South | 57337.8638902996

Craig | Goldberg | 895836 | East | 61192.786013986

Sam | Vu | 889841 | MidWest | 59614.9170454545

Luigi | Sanchez | 885078 | MidWest | 59614.9170454545

Michael | Weaver | 882685 | South | 57337.8638902996

Doug | Pavlov | 881443 | SouthWest | 57187.2510548523

Ruth | McNulty | 874897 | East | 61192.786013986

Luigi | Dobisz | 868213 | West | 56848.4274914089

Laura | Lang | 865829 | East | 61192.786013986

...

You can also use the EXCEPT, INTERSECT, and UNION [ALL] keywords in FROM, WHERE, and HAVING clauses.

The following subquery returns information about all Connecticut-based customers who bought items through either stores or online sales channel and whose purchases amounted to more than 500 dollars:

=> SELECT DISTINCT customer_key, customer_name FROM public.customer_dimension

WHERE customer_key IN (SELECT customer_key FROM store.store_sales_fact

WHERE sales_dollar_amount > 500

UNION ALL

SELECT customer_key FROM online_sales.online_sales_fact

WHERE sales_dollar_amount > 500)

AND customer_state = 'CT';

customer_key | customer_name

--------------+------------------

200 | Carla Y. Kramer

733 | Mary Z. Vogel

931 | Lauren X. Roy

1533 | James C. Vu

2948 | Infocare

4909 | Matt Z. Winkler

5311 | John Z. Goldberg

5520 | Laura M. Martin

5623 | Daniel R. Kramer

6759 | Daniel Q. Nguyen

...

HAVING clause subqueries

A HAVING clause is used in conjunction with the GROUP BY clause to filter the select-list records that a GROUP BY returns. HAVING clause subqueries must use Boolean comparison operators: =, >, <, <>, <=, >= and take the following form:

SELECT <column, ...>

FROM <table>

GROUP BY <expression>

HAVING <expression>

(SELECT <column, ...>

FROM <table>

HAVING <expression>);

For example, the following statement uses the VMart database and returns the number of customers who purchased lowfat products. Note that the GROUP BY clause is required because the query uses an aggregate (COUNT).

=> SELECT s.product_key, COUNT(s.customer_key) FROM store.store_sales_fact s

GROUP BY s.product_key HAVING s.product_key IN

(SELECT product_key FROM product_dimension WHERE diet_type = 'Low Fat');

The subquery first returns the product keys for all low-fat products, and the outer query then counts the total number of customers who purchased those products.

product_key | count

-------------+-------

15 | 2

41 | 1

66 | 1

106 | 1

118 | 1

169 | 1

181 | 2

184 | 2

186 | 2

211 | 1

229 | 1

267 | 1

289 | 1

334 | 2

336 | 1

(15 rows)

1.6.7 - Subquery restrictions

The following restrictions apply to Vertica subqueries:

-

Subqueries are not allowed in the defining query of a

CREATE PROJECTIONstatement. -

Subqueries can be used in the

SELECTlist, butGROUP BYor aggregate functions are not allowed in the query if the subquery is not part of theGROUP BYclause in the containing query. For example, the following two statement returns an error message:=> SELECT y, (SELECT MAX(a) FROM t1) FROM t2 GROUP BY y; ERROR: subqueries in the SELECT or ORDER BY are not supported if the subquery is not part of the GROUP BY => SELECT MAX(y), (SELECT MAX(a) FROM t1) FROM t2; ERROR: subqueries in the SELECT or ORDER BY are not supported if the query has aggregates and the subquery is not part of the GROUP BY -

Subqueries are supported within

UPDATEstatements with the following exceptions:-

You cannot use

SET column = {expression}to specify a subquery. -

The table specified in the

UPDATElist cannot also appear in theFROMclause (no self joins).

-

-

FROMclause subqueries require an alias but tables do not. If the table has no alias, the query must refer its columns astable-name.column-name. However, column names that are unique among all tables in the query do not need to be qualified by their table name. -

If the

ORDER BYclause is inside aFROMclause subquery, rather than in the containing query, the query is liable to return unexpected sort results. This occurs because Vertica data comes from multiple nodes, so sort order cannot be guaranteed unless the outer query block specifies anORDER BYclause. This behavior complies with the SQL standard, but it might differ from other databases. -

Multicolumn subqueries cannot use the <, >, <=, >= comparison operators. They can use <>, !=, and = operators.

-

WHEREandHAVINGclause subqueries must use Boolean comparison operators: =, >, <, <>, <=, >=. Those subqueries can be noncorrelated and correlated. -

[NOT] INandANYsubqueries nested in another expression are not supported if any of the column values are NULL. In the following statement, for example, if column x from either tablet1ort2contains a NULL value, Vertica returns a run-time error:=> SELECT * FROM t1 WHERE (x IN (SELECT x FROM t2)) IS FALSE; ERROR: NULL value found in a column used by a subquery -

Vertica returns an error message during subquery run time on scalar subqueries that return more than one row.

-

Aggregates and GROUP BY clauses are allowed in subqueries, as long as those subqueries are not correlated.

-

Correlated expressions under

ALLand[NOT] INare not supported. -

Correlated expressions under

ORare not supported. -

Multiple correlations are allowed only for subqueries that are joined with an equality (=) predicate. However,

IN/NOT IN,EXISTS/NOT EXISTSpredicates within correlated subqueries are not allowed:=> SELECT t2.x, t2.y, t2.z FROM t2 WHERE t2.z NOT IN (SELECT t1.z FROM t1 WHERE t1.x = t2.x); ERROR: Correlated subquery with NOT IN is not supported -

Up to one level of correlated subqueries is allowed in the

WHEREclause if the subquery references columns in the immediate outer query block. For example, the following query is not supported because thet2.x = t3.xsubquery can only refer to tablet1in the outer query, making it a correlated expression becauset3.xis two levels out:=> SELECT t3.x, t3.y, t3.z FROM t3 WHERE t3.z IN ( SELECT t1.z FROM t1 WHERE EXISTS ( SELECT 'x' FROM t2 WHERE t2.x = t3.x) AND t1.x = t3.x); ERROR: More than one level correlated subqueries are not supportedThe query is supported if it is rewritten as follows:

=> SELECT t3.x, t3.y, t3.z FROM t3 WHERE t3.z IN (SELECT t1.z FROM t1 WHERE EXISTS (SELECT 'x' FROM t2 WHERE t2.x = t1.x) AND t1.x = t3.x);

1.7 - Joins

Queries can combine records from multiple tables, or multiple instances of the same table. A query that combines records from one or more tables is called a join. Joins are allowed in SELECT statements and subqueries.

Supported join types

Vertica supports the following join types:

-

Inner (including natural, cross) joins

-

Left, right, and full outer joins

-

Optimizations for equality and range joins predicates

Vertica does not support nested loop joins.

Join algorithms

Vertica's query optimizer implements joins with either the hash join or merge join algorithm. For details, see Hash joins versus merge joins.

1.7.1 - Join syntax

Vertica supports the ANSI SQL-92 standard for joining tables, as follows:

table-reference [join-type] JOIN table-reference [ ON join-predicate ]

where join-type can be one of the following:

-

INNER(default) -

LEFT [ OUTER ] -

RIGHT [ OUTER ] -

FULL [ OUTER ] -

NATURAL -

CROSS

For example:

=> SELECT * FROM T1 INNER JOIN T2 ON T1.id = T2.id;

Note

TheON join-predicate clause is invalid for NATURAL and CROSS joins, required for all other join types.

Alternative syntax options

Vertica also supports two older join syntax conventions:

Join specified by WHERE clause join predicate

INNER JOIN is equivalent to a query that specifies its join predicate in a WHERE clause. For example, this example and the previous one return equivalent results. They both specify an inner join between tables T1 and T2 on columns T1.id and T2.id, respectively.

=> SELECT * FROM T1, T2 WHERE T1.id = T2.id;

JOIN USING clause

You can join two tables on identically named columns with a JOIN USING clause. For example:

=> SELECT * FROM T1 JOIN T2 USING(id);

By default, a join that is specified by JOIN USING is always an inner join.

Note

JOIN USING joins the two tables by combining the two join columns into one. Therefore, the two join column data types must be the same or compatible—for example, FLOAT and INTEGER—regardless of the actual data in the joined columns.Benefits of SQL-92 join syntax

Vertica recommends that you use SQL-92 join syntax for several reasons:

-

SQL-92 outer join syntax is portable across databases; the older syntax was not consistent between databases.

-

SQL-92 syntax provides greater control over whether predicates are evaluated during or after outer joins. This was also not consistent between databases when using the older syntax.

-

SQL-92 syntax eliminates ambiguity in the order of evaluating the joins, in cases where more than two tables are joined with outer joins.

1.7.2 - Join conditions vs. filter conditions

If you do not use the SQL-92 syntax, join conditions (predicates that are evaluated during the join) are difficult to distinguish from filter conditions (predicates that are evaluated after the join), and in some cases cannot be expressed at all. With SQL-92, join conditions and filter conditions are separated into two different clauses, the ON clause and the WHERE clause, respectively, making queries easier to understand.

-