Working with native tables

You can create two types of native tables in Vertica (ROS format), columnar and flexible. You can create both types as persistent or temporary. You can also create views that query a specific set of table columns.

The tables described in this section store their data in and are managed by the Vertica database. Vertica also supports external tables, which are defined in the database and store their data externally. For more information about external tables, see Working with external data.

1 - Creating tables

Use the CREATE TABLE statement to create a native table in the Vertica logical schema. You can specify the columns directly, as in the following example, or you can derive a table definition from another table using a LIKE or AS clause. You can specify constraints, partitioning, segmentation, and other factors. For details and restrictions, see the reference page.

The following example shows a basic table definition:

=> CREATE TABLE orders(

orderkey INT,

custkey INT,

prodkey ARRAY[VARCHAR(10)],

orderprices ARRAY[DECIMAL(12,2)],

orderdate DATE

);

Table data storage

Unlike traditional databases that store data in tables, Vertica physically stores table data in projections, which are collections of table columns. Projections store data in a format that optimizes query execution. Similar to materialized views, they store result sets on disk rather than compute them each time they are used in a query.

In order to query or perform any operation on a Vertica table, the table must have one or more projections associated with it. For more information, see Projections.
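As a minimal sketch of this relationship, the following statement defines an explicit projection on the orders table from the earlier example. The projection name orders_p and the segmentation choice are assumptions made for illustration; the statement follows the CREATE PROJECTION form used in other examples in this section.

```sql
-- Hypothetical projection on the orders table defined earlier.
-- orders_p is an illustrative name, not one that Vertica generates.
CREATE PROJECTION orders_p AS
    SELECT * FROM orders
    SEGMENTED BY hash(orderkey) ALL NODES;
```

If you do not create a projection yourself, Vertica typically creates one automatically when data is first loaded into the table.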

Deriving a table definition from the data

You can use the INFER_TABLE_DDL function to inspect Parquet, ORC, JSON, or Avro data and produce a starting point for a table definition. This function returns a CREATE TABLE statement, which might require further editing. For columns where the function could not infer the data type, the function labels the type as unknown and emits a warning. For VARCHAR and VARBINARY columns, you might need to adjust the length. Always review the statement the function returns, but especially for tables with many columns, using this function can save time and effort.

Parquet, ORC, and Avro files include schema information, but JSON files do not. For JSON, the function inspects the raw data to produce one or more candidate table definitions. See the function reference page for JSON examples.

In the following example, the function infers a complete table definition from Parquet input, but the VARCHAR columns use the default size and might need to be adjusted:

=> SELECT INFER_TABLE_DDL('/data/people/*.parquet'

USING PARAMETERS format = 'parquet', table_name = 'employees');

WARNING 9311: This generated statement contains one or more varchar/varbinary columns which default to length 80

INFER_TABLE_DDL

-------------------------------------------------------------------------

create table "employees"(

"employeeID" int,

"personal" Row(

"name" varchar,

"address" Row(

"street" varchar,

"city" varchar,

"zipcode" int

),

"taxID" int

),

"department" varchar

);

(1 row)

For Parquet files, you can use the GET_METADATA function to inspect a file and report metadata including information about columns.
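For instance, a call might look like the following sketch. The file path is a hypothetical placeholder, and the exact shape of the returned metadata depends on the file and the Vertica version.

```sql
-- Inspect the metadata of a single Parquet file.
-- The path below is a placeholder, not a real file.
SELECT GET_METADATA('/data/people/part-0001.parquet');
```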

2 - Creating temporary tables

CREATE TEMPORARY TABLE creates a table whose data persists only during the current session. Temporary table data is never visible to other sessions.

By default, all temporary table data is transaction-scoped—that is, the data is discarded when a COMMIT statement ends the current transaction. If CREATE TEMPORARY TABLE includes the parameter ON COMMIT PRESERVE ROWS, table data is retained until the current session ends.

Temporary tables can be used to divide complex query processing into multiple steps. Typically, a reporting tool holds intermediate results while reports are generated—for example, the tool first gets a result set, then queries the result set, and so on.

When you create a temporary table, Vertica automatically generates a default projection for it. For more information, see Auto-projections.

Global versus local tables

CREATE TEMPORARY TABLE can create tables at two scopes, global and local, through the keywords GLOBAL and LOCAL, respectively:

- Global temporary tables: Vertica creates global temporary tables in the public schema. Definitions of these tables are visible to all sessions, and persist across sessions until they are explicitly dropped. Multiple users can access the table concurrently. Table data is session-scoped, so it is visible only to the session user, and is discarded when the session ends.

- Local temporary tables: Vertica creates local temporary tables in the V_TEMP_SCHEMA namespace and inserts them transparently into the user's search path. These tables are visible only to the session where they are created. When the session ends, Vertica automatically drops the table and its data.
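The two scopes can be sketched as follows. The table names report_stage and session_scratch are hypothetical and chosen only for illustration.

```sql
-- Global: the definition is visible to all sessions and persists until it is
-- explicitly dropped; the data remains private to each session.
CREATE GLOBAL TEMPORARY TABLE report_stage (a INT, b INT);

-- Local: both the definition and the data exist only in the creating session
-- and are dropped automatically when that session ends.
CREATE LOCAL TEMPORARY TABLE session_scratch (a INT, b INT);
```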

Data retention

You can specify whether temporary table data is transaction- or session-scoped:

-

ON COMMIT DELETE ROWS (default): Vertica automatically removes all table data when each transaction ends.

-

ON COMMIT PRESERVE ROWS: Vertica preserves table data across transactions in the current session. Vertica automatically truncates the table when the session ends.

Note

If you create a temporary table with ON COMMIT PRESERVE ROWS, you cannot add projections for that table if it contains data. You must first remove all data from that table with TRUNCATE TABLE.

You can create projections for temporary tables created with ON COMMIT DELETE ROWS, whether populated with data or not. However, CREATE PROJECTION ends any transaction where you might have added data, so projections are always empty.
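The following sketch walks through the sequence described in this note for a table defined with ON COMMIT PRESERVE ROWS. The names temp_stage and temp_stage_p are hypothetical.

```sql
-- A session-scoped temporary table that already holds data.
CREATE TEMPORARY TABLE temp_stage (a INT, b INT) ON COMMIT PRESERVE ROWS;
INSERT INTO temp_stage VALUES (1, 2);
COMMIT;

-- The table contains data, so remove it before adding a projection.
TRUNCATE TABLE temp_stage;

-- temp_stage_p is an illustrative projection name.
CREATE PROJECTION temp_stage_p AS SELECT a, b FROM temp_stage;
```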

ON COMMIT DELETE ROWS

By default, Vertica removes all data from a temporary table, whether global or local, when the current transaction ends.

For example:

=> CREATE TEMPORARY TABLE tempDelete (a int, b int);

CREATE TABLE

=> INSERT INTO tempDelete VALUES(1,2);

OUTPUT

--------

1

(1 row)

=> SELECT * FROM tempDelete;

a | b

---+---

1 | 2

(1 row)

=> COMMIT;

COMMIT

=> SELECT * FROM tempDelete;

a | b

---+---

(0 rows)

If desired, you can use DELETE within the same transaction multiple times, to refresh table data repeatedly.

ON COMMIT PRESERVE ROWS

You can specify that a temporary table retain data across transactions in the current session, by defining the table with the keywords ON COMMIT PRESERVE ROWS. Vertica automatically removes all data from the table only when the current session ends.

For example:

=> CREATE TEMPORARY TABLE tempPreserve (a int, b int) ON COMMIT PRESERVE ROWS;

CREATE TABLE

=> INSERT INTO tempPreserve VALUES (1,2);

OUTPUT

--------

1

(1 row)

=> COMMIT;

COMMIT

=> SELECT * FROM tempPreserve;

a | b

---+---

1 | 2

(1 row)

=> INSERT INTO tempPreserve VALUES (3,4);

OUTPUT

--------

1

(1 row)

=> COMMIT;

COMMIT

=> SELECT * FROM tempPreserve;

a | b

---+---

1 | 2

3 | 4

(2 rows)

Eon restrictions

The following Eon Mode restrictions apply to temporary tables:

3 - Creating a table from other tables

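You can create a table from other tables in two ways: replicate an existing table with CREATE TABLE...LIKE, or create a table from a query with CREATE TABLE...AS.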

Important

You can also copy one table to another with the Vertica function COPY_TABLE.
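A call to this function might look like the following sketch. It assumes the orders table from an earlier example lives in the public schema; the target name orders_copy is hypothetical.

```sql
-- Copy public.orders to a new table.
-- orders_copy is an illustrative target name and must not already exist.
SELECT COPY_TABLE('public.orders', 'public.orders_copy');
```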

3.1 - Replicating a table

You can create a table from an existing one using CREATE TABLE with the LIKE clause:

CREATE TABLE [schema.]table-name LIKE [schema.]existing-table

[ {INCLUDING | EXCLUDING} PROJECTIONS ]

[ {INCLUDE | EXCLUDE} [SCHEMA] PRIVILEGES ]

Creating a table with LIKE replicates the source table definition and any storage policy associated with it. It does not copy table data or expressions on columns.

Copying constraints

CREATE TABLE...LIKE copies all table constraints, with the following exceptions:

-

Foreign key constraints.

-

Any column that obtains its values from a sequence, including IDENTITY columns. Vertica copies the column into the new table, but removes the original constraint. For example, the following table definition sets an IDENTITY constraint on column ID:

CREATE TABLE public.Premium_Customer

(

ID IDENTITY ,

lname varchar(25),

fname varchar(25),

store_membership_card int

);

The following CREATE TABLE...LIKE statement replicates this table as All_Customers. Vertica removes the IDENTITY constraint from All_Customers.ID, changing it to an integer column with a NOT NULL constraint:

=> CREATE TABLE All_Customers like Premium_Customer;

CREATE TABLE

=> select export_tables('','All_Customers');

export_tables

---------------------------------------------------

CREATE TABLE public.All_Customers

(

ID int NOT NULL,

lname varchar(25),

fname varchar(25),

store_membership_card int

);

(1 row)

Including projections

You can qualify the LIKE clause with INCLUDING PROJECTIONS or EXCLUDING PROJECTIONS, which specify whether to copy projections from the source table:

-

EXCLUDING PROJECTIONS (default): Do not copy projections from the source table.

-

INCLUDING PROJECTIONS: Copy current projections from the source table. Vertica names the new projections according to Vertica naming conventions, to avoid name conflicts with existing objects.

Including schema privileges

You can specify default inheritance of schema privileges for the new table:

For more information see Setting privilege inheritance on tables and views.

Restrictions

The following restrictions apply to the source table:

Example

-

Create the table states:

=> CREATE TABLE states (

state char(2) NOT NULL, bird varchar(20), tree varchar (20), tax float, stateDate char (20))

PARTITION BY state;

-

Populate the table with data:

INSERT INTO states VALUES ('MA', 'chickadee', 'american_elm', 5.675, '07-04-1620');

INSERT INTO states VALUES ('VT', 'Hermit_Thrasher', 'Sugar_Maple', 6.0, '07-04-1610');

INSERT INTO states VALUES ('NH', 'Purple_Finch', 'White_Birch', 0, '07-04-1615');

INSERT INTO states VALUES ('ME', 'Black_Cap_Chickadee', 'Pine_Tree', 5, '07-04-1615');

INSERT INTO states VALUES ('CT', 'American_Robin', 'White_Oak', 6.35, '07-04-1618');

INSERT INTO states VALUES ('RI', 'Rhode_Island_Red', 'Red_Maple', 5, '07-04-1619');

-

View the table contents:

=> SELECT * FROM states;

state | bird | tree | tax | stateDate

-------+---------------------+--------------+-------+----------------------

VT | Hermit_Thrasher | Sugar_Maple | 6 | 07-04-1610

CT | American_Robin | White_Oak | 6.35 | 07-04-1618

RI | Rhode_Island_Red | Red_Maple | 5 | 07-04-1619

MA | chickadee | american_elm | 5.675 | 07-04-1620

NH | Purple_Finch | White_Birch | 0 | 07-04-1615

ME | Black_Cap_Chickadee | Pine_Tree | 5 | 07-04-1615

(6 rows)

-

Create a sample projection and refresh:

=> CREATE PROJECTION states_p AS SELECT state FROM states;

=> SELECT START_REFRESH();

-

Create a table like the states table and include its projections:

=> CREATE TABLE newstates LIKE states INCLUDING PROJECTIONS;

-

View projections for the two tables. Vertica has copied projections from states to newstates:

=> \dj

List of projections

Schema | Name | Owner | Node | Comment

-------------------------------+-------------------------------------------+---------+------------------+---------

public | newstates_b0 | dbadmin | |

public | newstates_b1 | dbadmin | |

public | newstates_p_b0 | dbadmin | |

public | newstates_p_b1 | dbadmin | |

public | states_b0 | dbadmin | |

public | states_b1 | dbadmin | |

public | states_p_b0 | dbadmin | |

public | states_p_b1 | dbadmin | |

-

View the table newstates, which shows columns copied from states:

=> SELECT * FROM newstates;

state | bird | tree | tax | stateDate

-------+------+------+-----+-----------

(0 rows)

When you use the CREATE TABLE...LIKE statement, storage policy objects associated with the table are also copied. Data added to the new table uses the same labeled storage location as the source table, unless you change the storage policy. For more information, see Working With Storage Locations.

3.2 - Creating a table from a query

CREATE TABLE can specify an AS clause to create a table from a query, as follows:

CREATE [TEMPORARY] TABLE [schema.]table-name

[ ( column-name-list ) ]

[ {INCLUDE | EXCLUDE} [SCHEMA] PRIVILEGES ]

AS [ /*+ LABEL */ ] [ AT epoch ] query [ ENCODED BY column-ref-list ]

Vertica creates a table from the query results and loads the result set into it. For example:

=> CREATE TABLE cust_basic_profile AS SELECT

customer_key, customer_gender, customer_age, marital_status, annual_income, occupation

FROM customer_dimension WHERE customer_age>18 AND customer_gender !='';

CREATE TABLE

=> SELECT customer_age, annual_income, occupation FROM cust_basic_profile

WHERE customer_age > 23 ORDER BY customer_age;

customer_age | annual_income | occupation

--------------+---------------+--------------------

24 | 469210 | Hairdresser

24 | 140833 | Butler

24 | 558867 | Lumberjack

24 | 529117 | Mechanic

24 | 322062 | Acrobat

24 | 213734 | Writer

...

AS clause options

You can qualify an AS clause with one or both of the following options: a LABEL hint and an AT epoch clause.

Labeling the AS clause

You can embed a LABEL hint in an AS clause in two places:

-

Immediately after the keyword AS:

CREATE TABLE myTable AS /*+LABEL myLabel*/...

-

In the SELECT statement:

CREATE TABLE myTable AS SELECT /*+LABEL myLabel*/

If the AS clause contains a LABEL hint in both places, the first label has precedence.

Note

Labels are invalid for external tables.

Loading historical data

You can qualify a CREATE TABLE AS query with an AT epoch clause, to specify that the query return historical data, where epoch is one of the following:

-

EPOCH LATEST: Return data up to but not including the current epoch. The result set includes data from the latest committed DML transaction.

-

EPOCH integer: Return data up to and including the integer-specified epoch.

-

TIME 'timestamp': Return data from the timestamp-specified epoch.

Note

These options are ignored if used to query temporary or external tables.

See Epochs for additional information about how Vertica uses epochs.

For details, see Historical queries.
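As a hedged sketch of the AT epoch clause, the following statements create snapshot tables from the customer_dimension table used earlier. The target table names and the timestamp are placeholders; a historical query can succeed only if the requested epoch is still retained by the database.

```sql
-- Snapshot of data up to, but not including, the current epoch.
CREATE TABLE cust_snapshot_latest AS
    AT EPOCH LATEST
    SELECT customer_key, customer_age, annual_income
    FROM customer_dimension;

-- Snapshot as of a point in time; the timestamp is a placeholder.
CREATE TABLE cust_snapshot_at_time AS
    AT TIME '2024-01-01 00:00:00'
    SELECT customer_key, customer_age, annual_income
    FROM customer_dimension;
```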

Zero-width column handling

If the query returns a column with zero width, Vertica automatically converts it to a VARCHAR(80) column. For example:

=> CREATE TABLE example AS SELECT '' AS X;

CREATE TABLE

=> SELECT EXPORT_TABLES ('', 'example');

EXPORT_TABLES

----------------------------------------------------------

CREATE TABLE public.example

(

X varchar(80)

);

Requirements and restrictions

-

If you create a temporary table from a query, you must specify ON COMMIT PRESERVE ROWS in order to load the result set into the table. Otherwise, Vertica creates an empty table.

-

If the query output has expressions other than simple columns, such as constants or functions, you must specify an alias for that expression, or list all columns in the column name list.

-

You cannot use CREATE TABLE AS SELECT with a SELECT that returns values of complex types. You can, however, use CREATE TABLE LIKE.
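The first two requirements can be sketched together as follows. The table name cust_income_summary and the column aliases are hypothetical; the query reads the customer_dimension table used in the earlier example.

```sql
-- ON COMMIT PRESERVE ROWS is required here; without it, Vertica would
-- create the temporary table but leave it empty.
CREATE TEMPORARY TABLE cust_income_summary
    ON COMMIT PRESERVE ROWS
AS SELECT
    customer_gender,
    COUNT(*)           AS customer_count,  -- expression, so it needs an alias
    AVG(annual_income) AS avg_income       -- expression, so it needs an alias
FROM customer_dimension
GROUP BY customer_gender;
```

Instead of aliasing each expression, you could supply a full column name list after the table name.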

4 - Immutable tables

Many secure systems contain records that must be provably immune to change. Protective strategies such as row and block checksums incur high overhead. Moreover, these approaches are not foolproof against unauthorized changes, whether deliberate or inadvertent, by database administrators or other users with sufficient privileges.

Immutable tables are insert-only tables in which existing data cannot be modified, regardless of user privileges. Updating row values and deleting rows are prohibited. Certain changes to table metadata—for example, renaming table columns—are also prohibited, in order to prevent attempts to circumvent these restrictions. Flattened or external tables, which obtain their data from outside sources, cannot be set to be immutable.

You define an existing table as immutable with ALTER TABLE:

ALTER TABLE table SET IMMUTABLE ROWS;

Once set, table immutability cannot be reverted, and is immediately applied to all existing table data, and all data that is loaded thereafter. In order to modify the data of an immutable table, you must copy the data to a new table—for example, with COPY, CREATE TABLE...AS, or COPY_TABLE.

When you execute ALTER TABLE...SET IMMUTABLE ROWS on a table, Vertica sets two columns for that table in the system table TABLES. Together, these columns show when the table was made immutable:

- immutable_rows_since_timestamp: Server system time when immutability was applied. This is valuable for long-term timestamp retrieval and efficient comparison.

- immutable_rows_since_epoch: The epoch that was current when immutability was applied. This setting can help protect the table from attempts to pre-insert records with a future timestamp, so that row's epoch is less than the table's immutability epoch.
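To check when a table became immutable, you could query these two columns directly. This is a minimal sketch; audit_log is a hypothetical table name.

```sql
-- Make a (hypothetical) table immutable. This cannot be reverted.
ALTER TABLE audit_log SET IMMUTABLE ROWS;

-- Inspect the immutability markers recorded in the TABLES system table.
SELECT table_schema,
       table_name,
       immutable_rows_since_timestamp,
       immutable_rows_since_epoch
FROM TABLES
WHERE table_name = 'audit_log';
```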

Enforcement

The following operations are prohibited on immutable tables:

The following partition management functions are disallowed when the target table is immutable:

Allowed operations

In general, you can execute any DML operation on an immutable table that does not affect existing row data—for example, add rows with COPY or INSERT. After you add data to an immutable table, it cannot be changed.

Tip

A table's immutability can render meaningless certain operations that are otherwise permitted on an immutable table. For example, you can add a column to an immutable table with ALTER TABLE...ADD COLUMN. However, all values in the new column are set to NULL (unless the column is defined with a DEFAULT value), and they cannot be updated.
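For example, supplying a default when you add the column avoids a column of permanent NULL values. In this sketch, the table name audit_log, the column name, and the default value are all hypothetical.

```sql
-- Without the DEFAULT, every existing row would hold NULL in the new column,
-- and those values could never be updated on an immutable table.
ALTER TABLE audit_log ADD COLUMN source VARCHAR(32) DEFAULT 'unknown';
```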

Other allowed operations fall generally into two categories:

-

Changes to a table's DDL that have no effect on its data:

-

Block operations on multiple table rows, or the entire table:

5 - Disk quotas

By default, schemas and tables are limited only by available disk space and license capacity. You can set disk quotas for schemas or individual tables, for example, to support multi-tenancy. Setting, modifying, or removing a disk quota requires superuser privileges.

Most user operations that increase storage size enforce disk quotas. A table can temporarily exceed its quota during some operations such as recovery. If you lower a quota below the current usage, no data is lost but you cannot add more. Treat quotas as advisory, not as hard limits.

A schema quota, if set, must be larger than the largest table quota within it.

A disk quota is a string composed of an integer and a unit of measure (K, M, G, or T), such as '15G' or '1T'. Do not use a space between the number and the unit. No other units of measure are supported.

To set a quota at creation time, use the DISK_QUOTA option for CREATE SCHEMA or CREATE TABLE:

=> CREATE SCHEMA internal DISK_QUOTA '10T';

CREATE SCHEMA

=> CREATE TABLE internal.sales (...) DISK_QUOTA '5T';

CREATE TABLE

=> CREATE TABLE internal.leads (...) DISK_QUOTA '12T';

ROLLBACK 0: Table can not have a greater disk quota than its Schema

To modify, add, or remove a quota on an existing schema or table, use ALTER SCHEMA or ALTER TABLE:

=> ALTER SCHEMA internal DISK_QUOTA '20T';

ALTER SCHEMA

=> ALTER TABLE internal.sales DISK_QUOTA SET NULL;

ALTER TABLE

You can set a quota that is lower than the current usage. The ALTER operation succeeds, the schema or table is temporarily over quota, and you cannot perform operations that increase data usage.

Data that is counted

In Eon Mode, disk usage is an aggregate of all space used by all shards for the schema or table. This value is computed for primary subscriptions only.

In Enterprise Mode, disk usage is the sum of the space used by all storage containers on all nodes for the schema or table. This sum excludes buddy projections but includes all other projections.

Disk usage is calculated based on compressed size.

When quotas are applied

Quotas, if present, affect most DML and ILM operations, including:

The following example shows a failure caused by exceeding a table's quota:

=> CREATE TABLE stats(score int) DISK_QUOTA '1k';

CREATE TABLE

=> COPY stats FROM STDIN;

1

2

3

4

5

\.

ERROR 0: Disk Quota Exceeded for the Table object public.stats

HINT: Delete data and PURGE or increase disk quota at the table level

DELETE does not free space, because deleted data is still preserved in the storage containers. The delete vector that is added by a delete operation does not count against a quota, so deleting is a quota-neutral operation. Disk space for deleted data is reclaimed when you purge it; see Removing table data.
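The sequence suggested by the hint can be sketched as follows, using the functions shown elsewhere in this section. It assumes the stats table holds rows that you no longer need; the WHERE condition is a placeholder.

```sql
-- Deleting rows alone does not reduce the space counted against the quota.
DELETE FROM stats WHERE score < 3;
COMMIT;

-- Advance the AHM so the deleted rows become eligible for purging,
-- then purge the table to reclaim the disk space.
SELECT MAKE_AHM_NOW();
SELECT PURGE_TABLE('public.stats');
```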

Some uncommon operations, such as ADD COLUMN, RESTORE, and SWAP PARTITION, can create new storage containers during the transaction. These operations clean up the extra locations upon completion, but while the operation is in progress, a table or schema could exceed its quota. If you get disk-quota errors during these operations, you can temporarily increase the quota, perform the operation, and then reset it.
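The temporary-increase approach might look like the following sketch. It assumes internal.sales currently has a '5T' quota; the larger value and the added column are hypothetical.

```sql
-- Temporarily raise the quota (illustrative values).
ALTER TABLE internal.sales DISK_QUOTA '6T';

-- Perform the operation that needs extra storage while it runs.
ALTER TABLE internal.sales ADD COLUMN region VARCHAR(20);

-- Restore the original quota once the operation completes.
ALTER TABLE internal.sales DISK_QUOTA '5T';
```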

Quotas do not affect recovery, rebalancing, or Tuple Mover operations.

Monitoring

The DISK_QUOTA_USAGES system table shows current disk usage for tables and schemas that have quotas. This table does not report on objects that do not have quotas.

You can use this table to monitor usage and make decisions about adjusting quotas:

=> SELECT * FROM DISK_QUOTA_USAGES;

object_oid | object_name | is_schema | total_disk_usage_in_bytes | disk_quota_in_bytes

-------------------+-------------+-----------+---------------------+---------------------

45035996273705100 | s | t | 307 | 10240

45035996273705104 | public.t | f | 614 | 1024

45035996273705108 | s.t | f | 307 | 2048

(3 rows)
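To focus on objects that are close to their limits, you could filter and rank the same table. This is a sketch only: the 80 percent threshold is an arbitrary assumption, and the column names are taken from the output above.

```sql
-- List objects using at least 80% of their quota, fullest first.
SELECT object_name,
       is_schema,
       total_disk_usage_in_bytes,
       disk_quota_in_bytes,
       ROUND(100.0 * total_disk_usage_in_bytes / disk_quota_in_bytes, 1) AS pct_used
FROM DISK_QUOTA_USAGES
WHERE total_disk_usage_in_bytes >= 0.8 * disk_quota_in_bytes
ORDER BY pct_used DESC;
```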

6 - Managing table columns

After you define a table, you can use ALTER TABLE to modify existing table columns. You can perform the following operations on a column:

6.1 - Renaming columns

You rename a column with ALTER TABLE as follows:

ALTER TABLE [schema.]table-name RENAME [ COLUMN ] column-name TO new-column-name

The following example renames a column in the Retail.Product_Dimension table from Product_description to Item_description:

=> ALTER TABLE Retail.Product_Dimension

RENAME COLUMN Product_description TO Item_description;

If you rename a column that is referenced by a view, the column does not appear in the result set of the view, even if the view uses the wildcard (*) to represent all columns in the table. Recreate the view to incorporate the column's new name.
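Recreating the view might look like the following sketch. The view name product_items and the column product_key are hypothetical; only Item_description comes from the example above.

```sql
-- Redefine the (hypothetical) view so that it references the new column name.
CREATE OR REPLACE VIEW Retail.product_items AS
    SELECT product_key, Item_description
    FROM Retail.Product_Dimension;
```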

6.2 - Changing scalar column data type

In general, you can change a column's data type with ALTER TABLE if doing so does not require storage reorganization. After you modify a column's data type, data that you load conforms to the new definition.

The sections that follow describe requirements and restrictions associated with changing a column with a scalar (primitive) data type. For information on modifying complex type columns, see Adding a new field to a complex type column.

Supported data type conversions

Vertica supports conversion for the following data types:

- Binary: Expansion and contraction.

- Character: All conversions between CHAR, VARCHAR, and LONG VARCHAR.

- Exact numeric: All conversions between the following numeric data types: integer data types (INTEGER, INT, BIGINT, TINYINT, INT8, SMALLINT) and NUMERIC values of precision <=18 and scale 0. You cannot modify the scale of NUMERIC data types; however, you can change precision in the ranges (0-18), (19-37), and so on.

- Collection: The following conversions are supported:

  - Collection of one element type to collection of another element type, if the source element type can be coerced to the target element type.

  - Between arrays and sets.

  - Collection type to the same type (array to array or set to set), to change bounds or binary size.

  For details, see Changing Collection Columns.

Unsupported data type conversions

Vertica does not allow data type conversion on types that require storage reorganization:

You also cannot change a column's data type if the column is one of the following:

You can work around some of these restrictions. For details, see Working with column data conversions.

6.2.1 - Changing column width

You can expand columns within the same class of data type. Doing so is useful for storing larger items in a column. Vertica validates the data before it performs the conversion.

In general, you can also reduce column widths within the data type class. This is useful to reclaim storage if the original declaration was longer than you need, particularly with strings. You can reduce column width only if the following conditions are true:

Otherwise, Vertica returns an error and the conversion fails. For example, if you try to convert a column from varchar(25) to varchar(10), Vertica allows the conversion as long as all column data is no more than 10 characters.

In the following example, columns y and z are initially defined as VARCHAR data types, and loaded with values 12345 and 654321, respectively. The attempt to reduce column z's width to 5 fails because it contains six-character data. The attempt to reduce column y's width to 5 succeeds because its content conforms with the new width:

=> CREATE TABLE t (x int, y VARCHAR, z VARCHAR);

CREATE TABLE

=> CREATE PROJECTION t_p1 AS SELECT * FROM t SEGMENTED BY hash(x) ALL NODES;

CREATE PROJECTION

=> INSERT INTO t values(1,'12345','654321');

OUTPUT

--------

1

(1 row)

=> SELECT * FROM t;

x | y | z

---+-------+--------

1 | 12345 | 654321

(1 row)

=> ALTER TABLE t ALTER COLUMN z SET DATA TYPE char(5);

ROLLBACK 2378: Cannot convert column "z" to type "char(5)"

HINT: Verify that the data in the column conforms to the new type

=> ALTER TABLE t ALTER COLUMN y SET DATA TYPE char(5);

ALTER TABLE
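Before attempting a reduction, you could check the longest value currently stored in each column. This sketch inspects only the current rows of table t and measures length in bytes with OCTET_LENGTH; it says nothing about historical data that Vertica might still retain.

```sql
-- Report the longest current value, in bytes, for columns y and z.
SELECT MAX(OCTET_LENGTH(y)) AS max_y_bytes,
       MAX(OCTET_LENGTH(z)) AS max_z_bytes
FROM t;
```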

Changing collection columns

If a column is a collection data type, you can use ALTER TABLE to change either its bounds or its maximum binary size. These properties are set at table creation time and can then be altered.

You can make a collection bounded, setting its maximum number of elements, as in the following example.

=> ALTER TABLE test.t1 ALTER COLUMN arr SET DATA TYPE array[int,10];

ALTER TABLE

=> \d test.t1

List of Fields by Tables

Schema | Table | Column | Type | Size | Default | Not Null | Primary Key | Foreign Key

--------+-------+--------+-----------------+------+---------+----------+-------------+-------------

test | t1 | arr | array[int8, 10] | 80 | | f | f |

(1 row)

Alternatively, you can set the binary size for the entire collection instead of setting bounds. Binary size is set either explicitly or from the DefaultArrayBinarySize configuration parameter. The following example creates an array column from the default, changes the default, and then uses ALTER TABLE to change it to the new default.

=> SELECT get_config_parameter('DefaultArrayBinarySize');

get_config_parameter

----------------------

100

(1 row)

=> CREATE TABLE test.t1 (arr array[int]);

CREATE TABLE

=> \d test.t1

List of Fields by Tables

Schema | Table | Column | Type | Size | Default | Not Null | Primary Key | Foreign Key

--------+-------+--------+-----------------+------+---------+----------+-------------+-------------

test | t1 | arr | array[int8](96) | 96 | | f | f |

(1 row)

=> ALTER DATABASE DEFAULT SET DefaultArrayBinarySize=200;

ALTER DATABASE

=> ALTER TABLE test.t1 ALTER COLUMN arr SET DATA TYPE array[int];

ALTER TABLE

=> \d test.t1

List of Fields by Tables

Schema | Table | Column | Type | Size | Default | Not Null | Primary Key | Foreign Key

--------+-------+--------+-----------------+------+---------+----------+-------------+-------------

test | t1 | arr | array[int8](200)| 200 | | f | f |

(1 row)

Alternatively, you can set the binary size explicitly instead of using the default value.

=> ALTER TABLE test.t1 ALTER COLUMN arr SET DATA TYPE array[int](300);

Purging historical data

You cannot reduce a column's width if Vertica retains any historical data that exceeds the new width. To reduce the column width, first remove that data from the table:

-

Advance the AHM to an epoch more recent than the historical data that needs to be removed from the table.

-

Purge the table of all historical data that precedes the AHM with the function PURGE_TABLE.

For example, given the previous example, you can update the data in column t.z as follows:

=> UPDATE t SET z = '54321';

OUTPUT

--------

1

(1 row)

=> SELECT * FROM t;

x | y | z

---+-------+-------

1 | 12345 | 54321

(1 row)

Although no data in column z now exceeds 5 characters, Vertica retains the history of its earlier data, so attempts to reduce the column width to 5 return an error:

=> ALTER TABLE t ALTER COLUMN z SET DATA TYPE char(5);

ROLLBACK 2378: Cannot convert column "z" to type "char(5)"

HINT: Verify that the data in the column conforms to the new type

You can reduce the column width by purging the table's historical data as follows:

=> SELECT MAKE_AHM_NOW();

MAKE_AHM_NOW

-------------------------------

AHM set (New AHM Epoch: 6350)

(1 row)

=> SELECT PURGE_TABLE('t');

PURGE_TABLE

----------------------------------------------------------------------------------------------------------------------

Task: purge operation

(Table: public.t) (Projection: public.t_p1_b0)

(Table: public.t) (Projection: public.t_p1_b1)

(1 row)

=> ALTER TABLE t ALTER COLUMN z SET DATA TYPE char(5);

ALTER TABLE

6.2.2 - Working with column data conversions

Vertica conforms to the SQL standard by disallowing certain data conversions for table columns. However, you sometimes need to work around this restriction when you convert data from a non-SQL database. The following examples describe one such workaround, using the following table:

=> CREATE TABLE sales(id INT, price VARCHAR) UNSEGMENTED ALL NODES;

CREATE TABLE

=> INSERT INTO sales VALUES (1, '$50.00');

OUTPUT

--------

1

(1 row)

=> INSERT INTO sales VALUES (2, '$100.00');

OUTPUT

--------

1

(1 row)

=> COMMIT;

COMMIT

=> SELECT * FROM SALES;

id | price

----+---------

1 | $50.00

2 | $100.00

(2 rows)

To convert the price column's existing data type from VARCHAR to NUMERIC, complete these steps:

-

Add a new column for temporary use. Assign the column a NUMERIC data type, and derive its default value from the existing price column.

-

Drop the original price column.

-

Rename the new column to the original column.

Add a new column for temporary use

-

Add a column temp_price to table sales. You can use the new column temporarily, setting its data type to what you want (NUMERIC), and deriving its default value from the price column. Cast the default value for the new column to a NUMERIC data type and query the table:

=> ALTER TABLE sales ADD COLUMN temp_price NUMERIC(10,2) DEFAULT

SUBSTR(sales.price, 2)::NUMERIC;

ALTER TABLE

=> SELECT * FROM SALES;

id | price | temp_price

----+---------+------------

1 | $50.00 | 50.00

2 | $100.00 | 100.00

(2 rows)

-

Use ALTER TABLE to drop the default expression from the new column temp_price. Vertica retains the values stored in this column:

=> ALTER TABLE sales ALTER COLUMN temp_price DROP DEFAULT;

ALTER TABLE

Drop the original price column

Drop the extraneous price column. Before doing so, you must first advance the AHM to purge historical data that would otherwise prevent the drop operation:

-

Advance the AHM:

=> SELECT MAKE_AHM_NOW();

MAKE_AHM_NOW

-------------------------------

AHM set (New AHM Epoch: 6354)

(1 row)

-

Drop the original price column:

=> ALTER TABLE sales DROP COLUMN price CASCADE;

ALTER TABLE

Rename the new column to the original column

You can now rename the temp_price column to price:

-

Use ALTER TABLE to rename the column:

=> ALTER TABLE sales RENAME COLUMN temp_price TO price;

-

Query the sales table again:

=> SELECT * FROM sales;

id | price

----+--------

1 | 50.00

2 | 100.00

(2 rows)
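Before you add the temporary column, it can help to confirm that every existing value has the shape that the cast expects. The following query is a minimal sketch. It assumes that valid prices consist of a dollar sign, one or more digits, and an optional two-digit fraction; that pattern is an assumption drawn from the two sample rows, not a rule that Vertica enforces. Any row that the query returns would need to be corrected before you derive temp_price from it:

```sql
-- Assumption: valid prices look like $50.00 or $100 (dollar sign, digits,
-- optional two-digit fraction). Adjust the pattern to match your own data.
SELECT id, price
FROM sales
WHERE NOT REGEXP_LIKE(price, '^[$][0-9]+([.][0-9]{2})?$');
```

The character classes `[$]` and `[.]` are used instead of backslash escapes so that the pattern does not depend on how string literals handle backslashes.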

6.3 - Adding a new field to a complex type column

You can add new fields to columns of complex types (any combination or nesting of arrays and structs) in native tables. To add a field to an existing table's column, use a single ALTER TABLE statement.

Requirements and restrictions

The following are requirements and restrictions associated with adding a new field to a complex type column:

- New fields can only be added to rows/structs.

- The new type definition must contain all of the existing fields in the complex type column. Dropping existing fields from the complex type is not allowed. All of the existing fields in the new type must exactly match their definitions in the old type. This requirement also means that existing fields cannot be renamed.

- New fields can only be added to columns of native (non-external) tables.

- New fields can be added at any level within a nested complex type. For example, if you have a column defined as ROW(id INT, name ROW(given_name VARCHAR(20), family_name VARCHAR(20))), you can add a middle_name field to the nested ROW.

- New fields can be of any type, either complex or primitive.

- Blank field names are not allowed when adding new fields. Note that blank field names in complex type columns are allowed when creating the table. Vertica automatically assigns a name to each unnamed field.

- If you change the ordering of existing fields using ALTER TABLE, the change affects existing data in addition to new data. This means it is possible to reorder existing fields.

- When you call ALTER COLUMN ... SET DATA TYPE to add a field to a complex type column, Vertica places an O lock on the table until the operation completes. The lock prevents DELETE, UPDATE, INSERT, and COPY statements from accessing the table, and blocks SELECT statements issued at SERIALIZABLE isolation level.

- Performance is slower when adding a field to an array element than when adding a field to an element not nested in an array.

Examples

Adding a field

Consider a company storing customer data:

=> CREATE TABLE customers(id INT, name VARCHAR, address ROW(street VARCHAR, city VARCHAR, zip INT));

CREATE TABLE

The company has just decided to expand internationally, so now needs to add a country field:

=> ALTER TABLE customers ALTER COLUMN address

SET DATA TYPE ROW(street VARCHAR, city VARCHAR, zip INT, country VARCHAR);

ALTER TABLE

You can view the table definition to confirm the change:

=> \d customers

List of Fields by Tables

Schema | Table | Column | Type | Size | Default | Not Null | Primary Key | Foreign Key

--------+-----------+---------+----------------------------------------------------------------------+------+---------+----------+-------------+-------------

public | customers | id | int | 8 | | f | f |

public | customers | name | varchar(80) | 80 | | f | f |

public | customers | address | ROW(street varchar(80),city varchar(80),zip int,country varchar(80)) | -1 | | f | f |

(3 rows)

You can also see that the country field remains null for existing customers:

=> SELECT * FROM customers;

id | name | address

----+------+--------------------------------------------------------------------------------

1 | mina | {"street":"1 allegheny square east","city":"hamden","zip":6518,"country":null}

(1 row)
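The same approach applies to nested types. The following sketch adds a middle_name field to a nested ROW, as described in the requirements above. The table contacts and its column person are hypothetical names used only for illustration. The new field is appended after the existing fields, and every existing field is restated with its original definition:

```sql
-- Hypothetical table with a nested ROW, used only for illustration.
CREATE TABLE contacts(
    id INT,
    person ROW(id INT, name ROW(given_name VARCHAR(20), family_name VARCHAR(20)))
);

-- Restate every existing field unchanged and append middle_name to the nested ROW.
ALTER TABLE contacts ALTER COLUMN person
    SET DATA TYPE ROW(id INT,
                      name ROW(given_name VARCHAR(20),
                               family_name VARCHAR(20),
                               middle_name VARCHAR(20)));
```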

Common error messages

While you can add one or more fields with a single ALTER TABLE statement, existing fields cannot be removed. The following example throws an error because the city field is missing:

=> ALTER TABLE customers ALTER COLUMN address SET DATA TYPE ROW(street VARCHAR, state VARCHAR, zip INT, country VARCHAR);

ROLLBACK 2377: Cannot convert column "address" from "ROW(varchar(80),varchar(80),int,varchar(80))" to type "ROW(varchar(80),varchar(80),int,varchar(80))"

Similarly, you cannot alter the type of an existing field. The following example will throw an error because the zip field's type cannot be altered:

=> ALTER TABLE customers ALTER COLUMN address SET DATA TYPE ROW(street VARCHAR, city VARCHAR, zip VARCHAR, country VARCHAR);

ROLLBACK 2377: Cannot convert column "address" from "ROW(varchar(80),varchar(80),int,varchar(80))" to type "ROW(varchar(80),varchar(80),varchar(80),varchar(80))"

Additional properties

A complex type column's field order follows the order specified in the ALTER command, allowing you to reorder a column's existing fields. The following example reorders the fields of the address column:

=> ALTER TABLE customers ALTER COLUMN address

SET DATA TYPE ROW(street VARCHAR, country VARCHAR, city VARCHAR, zip INT);

ALTER TABLE

The table definition shows the address column's fields have been reordered:

=> \d customers

List of Fields by Tables

Schema | Table | Column | Type | Size | Default | Not Null | Primary Key | Foreign Key

--------+-----------+---------+----------------------------------------------------------------------+------+---------+----------+-------------+-------------

public | customers | id | int | 8 | | f | f |

public | customers | name | varchar(80) | 80 | | f | f |

public | customers | address | ROW(street varchar(80),country varchar(80),city varchar(80),zip int) | -1 | | f | f |

(3 rows)

Note that you cannot add new fields with empty names. When creating a complex table, however, you can omit field names, and Vertica automatically assigns a name to each unnamed field:

=> CREATE TABLE products(name VARCHAR, description ROW(VARCHAR));

CREATE TABLE

Because the field created in the description column has not been named, Vertica assigns it a default name. This default name can be checked in the table definition:

=> \d products

List of Fields by Tables

Schema | Table | Column | Type | Size | Default | Not Null | Primary Key | Foreign Key

--------+----------+-------------+---------------------+------+---------+----------+-------------+-------------

public | products | name | varchar(80) | 80 | | f | f |

public | products | description | ROW(f0 varchar(80)) | -1 | | f | f |

(2 rows)

Above, we see that the VARCHAR field in the description column was automatically assigned the name f0. When adding new fields, you must specify the existing Vertica-assigned field name:

=> ALTER TABLE products ALTER COLUMN description

SET DATA TYPE ROW(f0 VARCHAR(80), expanded_description VARCHAR(200));

ALTER TABLE

6.4 - Defining column values

You can define a column so Vertica automatically sets its value from an expression through one of the following clauses:

- DEFAULT

- SET USING

- DEFAULT USING

DEFAULT

The DEFAULT option sets column values to a specified value. It has the following syntax:

DEFAULT default-expression

Default values are set when you:

- Load new rows into a table, for example, with INSERT or COPY. Vertica populates DEFAULT columns in new rows with their default values. Values in existing rows, including columns with DEFAULT expressions, remain unchanged.

- Execute UPDATE on a table and set the value of a DEFAULT column to DEFAULT:

=> UPDATE table-name SET column-name=DEFAULT;

- Add a column with a DEFAULT expression to an existing table. Vertica populates the new column with its default values when it is added to the table.

Note

Altering an existing table column to specify a DEFAULT expression has no effect on existing values in that column. Vertica applies the DEFAULT expression only on new rows when they are added to the table, through load operations such as INSERT and COPY. To refresh all values in a column with the column's DEFAULT expression, update the column as shown above.
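The following sketch illustrates the behavior that this note describes. The table shipments and its columns are hypothetical names chosen for the example:

```sql
-- Hypothetical table for illustration.
CREATE TABLE shipments (id INT, status VARCHAR(10));
INSERT INTO shipments VALUES (1, 'sent');
COMMIT;

-- Setting a DEFAULT on an existing column leaves the value in row 1 unchanged.
ALTER TABLE shipments ALTER COLUMN status SET DEFAULT 'new';

-- New rows that omit the column pick up the default.
INSERT INTO shipments (id) VALUES (2);
COMMIT;

-- Explicitly refresh every row from the column's DEFAULT expression.
UPDATE shipments SET status = DEFAULT;
COMMIT;
```

Until the final UPDATE runs, only rows loaded after the ALTER TABLE statement are expected to carry the default value.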

Restrictions

DEFAULT expressions cannot specify volatile functions with ALTER TABLE...ADD COLUMN. To specify volatile functions, use CREATE TABLE or ALTER TABLE...ALTER COLUMN statements.
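As a sketch of the two permitted routes, the following statements use the volatile function RANDOM(). The table samples and its columns are hypothetical names used only for illustration:

```sql
-- Hypothetical table. RANDOM() is a volatile function, so its DEFAULT is
-- declared in CREATE TABLE rather than in ALTER TABLE...ADD COLUMN.
CREATE TABLE samples (id INT, bucket FLOAT DEFAULT RANDOM());

-- To give a later column a volatile default, add the column without a
-- DEFAULT first, then set the DEFAULT with ALTER COLUMN.
ALTER TABLE samples ADD COLUMN bucket2 FLOAT;
ALTER TABLE samples ALTER COLUMN bucket2 SET DEFAULT RANDOM();
```

With the second route, rows that already exist keep a NULL value in bucket2 until they are updated, because altering a column's DEFAULT does not change existing values.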

SET USING

The SET USING option sets the column value to an expression when the function REFRESH_COLUMNS is invoked on that column. This option has the following syntax:

SET USING using-expression

This approach is useful for large denormalized (flattened) tables, where multiple columns get their values by querying other tables.

Restrictions

SET USING has the following restrictions:

DEFAULT USING

The DEFAULT USING option sets DEFAULT and SET USING constraints on a column, equivalent to using DEFAULT and SET USING separately with the same expression on the same column. It has the following syntax:

DEFAULT USING expression

For example, the following column definitions are effectively identical:

=> ALTER TABLE public.orderFact ADD COLUMN cust_name varchar(20)

DEFAULT USING (SELECT name FROM public.custDim WHERE (custDim.cid = orderFact.cid));

=> ALTER TABLE public.orderFact ADD COLUMN cust_name varchar(20)

DEFAULT (SELECT name FROM public.custDim WHERE (custDim.cid = orderFact.cid))

SET USING (SELECT name FROM public.custDim WHERE (custDim.cid = orderFact.cid));

DEFAULT USING supports the same expressions as SET USING and is subject to the same restrictions.

Supported expressions

DEFAULT and SET USING generally support the same expressions. These include:

- Queries

- Other columns in the same table

- Literals (constants)

- All operators supported by Vertica

- The following categories of functions:

Expression restrictions

The following restrictions apply to DEFAULT and SET USING expressions:

- The return value data type must match or be cast to the column data type.

- The expression must return a value that conforms to the column bounds. For example, a column that is defined as a VARCHAR(1) cannot be set to a default string of abc.

- In a temporary table, DEFAULT and SET USING do not support subqueries. If you try to create a temporary table where DEFAULT or SET USING use subquery expressions, Vertica returns an error.

- A column's SET USING expression cannot specify another column in the same table that also sets its value with SET USING. Similarly, a column's DEFAULT expression cannot specify another column in the same table that also sets its value with DEFAULT, or whose value is automatically set to a sequence. However, a column's SET USING expression can specify another column that sets its value with DEFAULT.

Note

You can set a column's DEFAULT expression from another column in the same table that sets its value with SET USING. However, the DEFAULT column is typically set to NULL, as it is only set on load operations that initially set the SET USING column to NULL.

- DEFAULT and SET USING expressions only support one SELECT statement; attempts to include multiple SELECT statements in the expression return an error. For example, given table t1:

=> SELECT * FROM t1;

a | b

---+---------

1 | hello

2 | world

(2 rows)

Attempting to create table t2 with the following DEFAULT expression returns with an error:

=> CREATE TABLE t2 (aa int, bb varchar(30) DEFAULT (SELECT 'I said ')||(SELECT b FROM t1 where t1.a = t2.aa));

ERROR 9745: Expressions with multiple SELECT statements cannot be used in 'set using' query definitions
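One possible way to stay within the single-SELECT limit is to fold the literal into the one subquery. The following is an untested sketch under that assumption, reusing tables t1 and t2 from the failing statement:

```sql
-- One possible rewrite: concatenate the literal inside the single subquery,
-- so the DEFAULT expression contains only one SELECT statement.
CREATE TABLE t2 (
    aa INT,
    bb VARCHAR(30) DEFAULT (SELECT 'I said ' || b FROM t1 WHERE t1.a = t2.aa)
);
```

The concatenated result must still fit within the VARCHAR(30) bound of column bb.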

Disambiguating predicate columns

If a SET USING or DEFAULT query expression joins two columns with the same name, the column names must include their table names. Otherwise, Vertica assumes that both columns reference the dimension table, and the predicate always evaluates to true.

For example, tables orderFact and custDim both include column cid. Flattened table orderFact defines column cust_name with a SET USING query expression. Because the query predicate references columns cid from both tables, the column names are fully qualified:

=> CREATE TABLE public.orderFact

(

...

cid int REFERENCES public.custDim(cid),

cust_name varchar(20) SET USING (

SELECT name FROM public.custDim WHERE (custDim.cid = orderFact.cid)),

...

)

Examples

Derive a column's default value from another column

-

Create table t with two columns, date and state, and insert a row of data:

=> CREATE TABLE t (date DATE, state VARCHAR(2));

CREATE TABLE

=> INSERT INTO t VALUES (CURRENT_DATE, 'MA');

OUTPUT

--------

1

(1 row)

=> COMMIT;

COMMIT

=> SELECT * FROM t;

date | state

------------+-------

2017-12-28 | MA

(1 row)

-

Use ALTER TABLE to add a third column that extracts the integer month value from column date:

=> ALTER TABLE t ADD COLUMN month INTEGER DEFAULT date_part('month', date);

ALTER TABLE

-

When you query table t, Vertica returns the number of the month in column date:

=> SELECT * FROM t;

date | state | month

------------+-------+-------

2017-12-28 | MA | 12

(1 row)

Update default column values

-

Update table t by subtracting 30 days from date:

=> UPDATE t SET date = date-30;

OUTPUT

--------

1

(1 row)

=> COMMIT;

COMMIT

=> SELECT * FROM t;

date | state | month

------------+-------+-------

2017-11-28 | MA | 12

(1 row)

The value in month remains unchanged.

-

Refresh the default value in month from column date:

=> UPDATE t SET month=DEFAULT;

OUTPUT

--------

1

(1 row)

=> COMMIT;

COMMIT

=> SELECT * FROM t;

date | state | month

------------+-------+-------

2017-11-28 | MA | 11

(1 row)

Derive a default column value from user-defined scalar function

This example shows a user-defined scalar function that adds two integer values. The function is called add2ints and takes two arguments.

-

Develop and deploy the function, as described in Scalar functions (UDSFs).

-

Create a sample table, t1, with two integer columns:

=> CREATE TABLE t1 ( x int, y int );

CREATE TABLE

-

Insert some values into t1:

=> insert into t1 values (1,2);

OUTPUT

--------

1

(1 row)

=> insert into t1 values (3,4);

OUTPUT

--------

1

(1 row)

-

Use ALTER TABLE to add a column to t1, with the default column value derived from the UDSF add2ints:

=> alter table t1 add column z int default add2ints(x,y);

ALTER TABLE

-

List the new column:

=> select z from t1;

z

----

3

7

(2 rows)

Table with a SET USING column that queries another table for its values

-

Define tables t1 and t2. Column t2.b is defined to get its data from column t1.b, through the query in its SET USING clause:

=> CREATE TABLE t1 (a INT PRIMARY KEY ENABLED, b INT);

CREATE TABLE

=> CREATE TABLE t2 (a INT, alpha VARCHAR(10),

b INT SET USING (SELECT t1.b FROM t1 WHERE t1.a=t2.a))

ORDER BY a SEGMENTED BY HASH(a) ALL NODES;

CREATE TABLE

Important

The definition for table t2 includes SEGMENTED BY and ORDER BY clauses that exclude SET USING column b. If these clauses are omitted, Vertica creates an auto-projection for this table that specifies column b in its SEGMENTED BY and ORDER BY clauses. Inclusion of a SET USING column in any projection's segmentation or sort order prevents function REFRESH_COLUMNS from populating this column. Instead, it returns with an error.

For details on this and other restrictions, see REFRESH_COLUMNS.

-

Populate the tables with data:

=> INSERT INTO t1 VALUES(1,11),(2,22),(3,33),(4,44);

=> INSERT INTO t2 VALUES (1,'aa'),(2,'bb');

=> COMMIT;

COMMIT

-

View the data in table t2. SET USING column b is empty, pending invocation of the Vertica function REFRESH_COLUMNS:

=> SELECT * FROM t2;

a | alpha | b

---+-------+---

1 | aa |

2 | bb |

(2 rows)

-

Refresh the column data in table t2 by calling function REFRESH_COLUMNS:

=> SELECT REFRESH_COLUMNS ('t2','b', 'REBUILD');

REFRESH_COLUMNS

---------------------------

refresh_columns completed

(1 row)

In this example, REFRESH_COLUMNS is called with the optional argument REBUILD. This argument specifies to replace all data in SET USING column b. It is generally good practice to call REFRESH_COLUMNS with REBUILD on any new SET USING column. For details, see REFRESH_COLUMNS.

-

View data in refreshed column b, whose data is obtained from table t1 as specified in the column's SET USING query:

=> SELECT * FROM t2 ORDER BY a;

a | alpha | b

---+-------+----

1 | aa | 11

2 | bb | 22

(2 rows)
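Rows that are loaded into t2 later are not populated in column b until the column is refreshed again. The following sketch continues the example and assumes that the UPDATE refresh mode of REFRESH_COLUMNS is sufficient to pick up the new rows; see REFRESH_COLUMNS for how the UPDATE and REBUILD modes differ:

```sql
-- Add rows to t2; SET USING column b is not populated on load.
INSERT INTO t2 VALUES (3,'cc'),(4,'dd');
COMMIT;

-- Assumption: UPDATE mode is enough here. REBUILD, as used above,
-- replaces all data in the column instead.
SELECT REFRESH_COLUMNS('t2', 'b', 'UPDATE');

-- Rows 3 and 4 should now show the values that t1 holds for those keys.
SELECT * FROM t2 ORDER BY a;
```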

DEFAULT and SET USING expressions support subqueries that can obtain values from other tables, and use those with values in the current table to compute column values. The following example adds a column gmt_delivery_time to fact table customer_orders. The column specifies a DEFAULT expression to set values in the new column as follows:

-

Calls meta-function NEW_TIME, which performs the following tasks:

-

Populates the gmt_delivery_time column with the converted values.

=> CREATE TABLE public.customers(

customer_key int,

customer_name varchar(64),

customer_address varchar(64),

customer_tz varchar(5),

...);

=> CREATE TABLE public.customer_orders(

customer_key int,

order_number int,

product_key int,

product_version int,

quantity_ordered int,

store_key int,

date_ordered date,

date_shipped date,

expected_delivery_date date,

local_delivery_time timestamptz,

...);

=> ALTER TABLE customer_orders ADD COLUMN gmt_delivery_time timestamp

DEFAULT NEW_TIME(customer_orders.local_delivery_time,

(SELECT c.customer_tz FROM customers c WHERE (c.customer_key = customer_orders.customer_key)),

'GMT');

7 - Altering table definitions

You can modify a table's definition with ALTER TABLE, in response to evolving database schema requirements. Changing a table definition is often more efficient than staging data in a temporary table, consuming fewer resources and less storage.

For information on making column-level changes, see Managing table columns. For details about changing and reorganizing table partitions, see Partitioning existing table data.

7.1 - Adding table columns

You add a column to a persistent table with ALTER TABLE...ADD COLUMN:

ALTER TABLE

...

ADD COLUMN [IF NOT EXISTS] column datatype

[column-constraint]

[ENCODING encoding-type]

[PROJECTIONS (projections-list) | ALL PROJECTIONS ]

Note

Before you add columns to a table, verify that all its superprojections are up to date.
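One way to perform this check is to query the PROJECTIONS system table. The following is a minimal sketch that uses the store_orders sample table from later in this section; substitute your own table name:

```sql
-- List the superprojections of the table and whether each is up to date.
SELECT projection_schema, projection_name, is_up_to_date
FROM projections
WHERE anchor_table_name = 'store_orders'
  AND is_super_projection;
```

If any row shows is_up_to_date as false, refresh the projection before you add the column.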

Table locking

When you use ADD COLUMN to alter a table, Vertica takes an O lock on the table until the operation completes. The lock prevents DELETE, UPDATE, INSERT, and COPY statements from accessing the table. The lock also blocks SELECT statements issued at SERIALIZABLE isolation level, until the operation completes.

Adding a column to a table does not affect K-safety of the physical schema design.

You can add columns when nodes are down.

Adding new columns to projections

When you add a column to a table, Vertica automatically adds the column to superprojections of that table. The ADD COLUMN clause can also add the column to one or more non-superprojections, with one of these options:

- PROJECTIONS (projections-list): Adds the new column to one or more projections of this table, specified as a comma-delimited list of projection base names. Vertica adds the column to all buddies of each projection. The projection list cannot include projections with pre-aggregated data such as live aggregate projections; otherwise, Vertica rolls back the ALTER TABLE statement.

- ALL PROJECTIONS: Adds the column to all projections of this table, excluding projections with pre-aggregated data.

For example, the store_orders table has two projections: superprojection store_orders_super and user-created projection store_orders_p. The following ALTER TABLE...ADD COLUMN statement adds column expected_ship_date to the store_orders table. Because the statement omits the PROJECTIONS option, Vertica adds the column only to the table's superprojection:

=> ALTER TABLE public.store_orders ADD COLUMN expected_ship_date date;

ALTER TABLE

=> SELECT projection_column_name, projection_name FROM projection_columns WHERE table_name ILIKE 'store_orders'

ORDER BY projection_name , projection_column_name;

projection_column_name | projection_name

------------------------+--------------------

order_date | store_orders_p_b0

order_no | store_orders_p_b0

ship_date | store_orders_p_b0

order_date | store_orders_p_b1

order_no | store_orders_p_b1

ship_date | store_orders_p_b1

expected_ship_date | store_orders_super

order_date | store_orders_super

order_no | store_orders_super

ship_date | store_orders_super

shipper | store_orders_super

(11 rows)

The following ALTER TABLE...ADD COLUMN statement includes the PROJECTIONS option. This specifies to include projection store_orders_p in the add operation. Vertica adds the new column to this projection and the table's superprojection:

=> ALTER TABLE public.store_orders ADD COLUMN delivery_date date PROJECTIONS (store_orders_p);

=> SELECT projection_column_name, projection_name FROM projection_columns WHERE table_name ILIKE 'store_orders'

ORDER BY projection_name, projection_column_name;

projection_column_name | projection_name

------------------------+--------------------

delivery_date | store_orders_p_b0

order_date | store_orders_p_b0

order_no | store_orders_p_b0

ship_date | store_orders_p_b0

delivery_date | store_orders_p_b1

order_date | store_orders_p_b1

order_no | store_orders_p_b1

ship_date | store_orders_p_b1

delivery_date | store_orders_super

expected_ship_date | store_orders_super

order_date | store_orders_super

order_no | store_orders_super

ship_date | store_orders_super

shipper | store_orders_super

(14 rows)
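The ALL PROJECTIONS option follows the same pattern. In the following sketch, carrier_code is a hypothetical column name used only for illustration, and IF NOT EXISTS makes the statement safe to rerun:

```sql
-- carrier_code is a hypothetical column name used for illustration.
ALTER TABLE public.store_orders
    ADD COLUMN IF NOT EXISTS carrier_code VARCHAR(8) ALL PROJECTIONS;

-- Same catalog query as in the previous examples: the new column should be
-- listed for store_orders_super and for both buddies of store_orders_p.
SELECT projection_column_name, projection_name FROM projection_columns
WHERE table_name ILIKE 'store_orders'
ORDER BY projection_name, projection_column_name;
```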

Updating associated table views

Adding new columns to a table that has an associated view does not update the view's result set, even if the view uses a wildcard (*) to represent all table columns. To incorporate new columns, you must recreate the view.
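The following sketch shows one way to pick up a new column in a wildcard view. The view store_orders_v and the column tracking_no are hypothetical names used only for illustration:

```sql
-- store_orders_v is a hypothetical view over the sample table.
CREATE VIEW store_orders_v AS SELECT * FROM public.store_orders;

-- The existing view does not return the new column.
ALTER TABLE public.store_orders ADD COLUMN tracking_no VARCHAR(32);

-- Recreate the view so that the wildcard is resolved against the current columns.
CREATE OR REPLACE VIEW store_orders_v AS SELECT * FROM public.store_orders;
```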

7.2 - Dropping table columns

ALTER TABLE...DROP COLUMN drops the specified table column and the ROS containers that correspond to the dropped column:

ALTER TABLE [schema.]table DROP [ COLUMN ] [IF EXISTS] column [CASCADE | RESTRICT]

After the drop operation completes, data backed up from the current epoch onward recovers without the column. Data recovered from a backup that precedes the current epoch re-adds the table column. Because drop operations physically purge object storage and catalog definitions (table history) from the table, AT EPOCH (historical) queries return nothing for the dropped column.

The altered table retains its object ID.

Note

Drop column operations can be fast because these catalog-level changes do not require data reorganization, so Vertica can quickly reclaim disk storage.

Restrictions

- You cannot drop or alter a primary key column or a column that participates in the table partitioning clause.

- You cannot drop the first column of any projection sort order, or columns that participate in a projection segmentation expression.

- In Enterprise Mode, all nodes must be up. This restriction does not apply to Eon Mode.

- You cannot drop a column associated with an access policy. Attempts to do so produce the following error:

ERROR 6482: Failed to parse Access Policies for table "t1"

Using CASCADE to force a drop

If the table column to drop has dependencies, you must qualify the DROP COLUMN clause with the CASCADE option. For example, the target column might be specified in a projection sort order. In this and other cases, DROP COLUMN...CASCADE handles the dependency by reorganizing catalog definitions or dropping a projection. In all cases, CASCADE performs the minimal reorganization required to drop the column.

Use CASCADE to drop a column with the following dependencies:

| Dropped column dependency | CASCADE behavior |
| --- | --- |
| Any constraint | Vertica drops the column when a FOREIGN KEY constraint depends on a UNIQUE or PRIMARY KEY constraint on the referenced columns. |
| Specified in projection sort order | Vertica truncates the projection sort order up to and including the column that is dropped, without impact on physical storage for other columns, and then drops the specified column. For example, if a projection's columns are in sort order (a,b,c), dropping column b causes the projection's sort order to be just (a), omitting column (c). |
| Specified in a projection segmentation expression | The column to drop is integral to the projection definition. If possible, Vertica drops the projection as long as doing so does not compromise K-safety; otherwise, the transaction rolls back. |
| Referenced as default value of another column | See Dropping a column referenced as default, below. |

Dropping a column referenced as default

You might want to drop a table column that is referenced by another column as its default value. For example, the following table is defined with two columns, a and b, where b gets its default value from column a:

=> CREATE TABLE x (a int) UNSEGMENTED ALL NODES;

CREATE TABLE

=> ALTER TABLE x ADD COLUMN b int DEFAULT a;

ALTER TABLE

In this case, dropping column a requires the following procedure:

-

Remove the default dependency through ALTER COLUMN...DROP DEFAULT:

=> ALTER TABLE x ALTER COLUMN b DROP DEFAULT;

-

Create a replacement superprojection for the target table if one or both of the following conditions is true:

-

The target column is the table's first sort order column. If the table has no explicit sort order, the default table sort order specifies the first table column as the first sort order column. In this case, the new superprojection must specify a sort order that excludes the target column.

-

If the table is segmented, the target column is specified in the segmentation expression. In this case, the new superprojection must specify a segmentation expression that excludes the target column.

Given the previous example, table x has a default sort order of (a,b). Because column a is the table's first sort order column, you must create a replacement superprojection that is sorted on column b:

=> CREATE PROJECTION x_p1 as select * FROM x ORDER BY b UNSEGMENTED ALL NODES;

-

Run START_REFRESH:

=> SELECT START_REFRESH();

START_REFRESH

----------------------------------------

Starting refresh background process.

(1 row)

-

Run MAKE_AHM_NOW:

=> SELECT MAKE_AHM_NOW();

MAKE_AHM_NOW

-------------------------------

AHM set (New AHM Epoch: 1231)

(1 row)

-

Drop the column:

=> ALTER TABLE x DROP COLUMN a CASCADE;

Vertica implements the CASCADE directive as follows:

Examples

The following series of commands successfully drops a BYTEA data type column:

=> CREATE TABLE t (x BYTEA(65000), y BYTEA, z BYTEA(1));

CREATE TABLE

=> ALTER TABLE t DROP COLUMN y;

ALTER TABLE

=> SELECT y FROM t;

ERROR 2624: Column "y" does not exist

=> ALTER TABLE t DROP COLUMN x RESTRICT;

ALTER TABLE

=> SELECT x FROM t;

ERROR 2624: Column "x" does not exist

=> SELECT * FROM t;

z

---

(0 rows)

=> DROP TABLE t CASCADE;

DROP TABLE

The following series of commands tries to drop a FLOAT(8) column and fails because there are not enough projections to maintain K-safety.

=> CREATE TABLE t (x FLOAT(8),y FLOAT(08));

CREATE TABLE

=> ALTER TABLE t DROP COLUMN y RESTRICT;

ALTER TABLE

=> SELECT y FROM t;

ERROR 2624: Column "y" does not exist

=> ALTER TABLE t DROP x CASCADE;

ROLLBACK 2409: Cannot drop any more columns in t

=> DROP TABLE t CASCADE;

7.3 - Altering constraint enforcement

ALTER TABLE...ALTER CONSTRAINT can enable or disable enforcement of primary key, unique, and check constraints. You must qualify this clause with the keyword ENABLED or DISABLED.

For example:

ALTER TABLE public.new_sales ALTER CONSTRAINT C_PRIMARY ENABLED;

For details, see Constraint enforcement.
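A common reason to toggle enforcement is a bulk load. The following sketch reuses the table and constraint names from the statement above and assumes that you want to check the loaded data with ANALYZE_CONSTRAINTS before you turn enforcement back on:

```sql
-- Turn off enforcement before a bulk load.
ALTER TABLE public.new_sales ALTER CONSTRAINT C_PRIMARY DISABLED;

-- ... load data here ...

-- Report rows that violate the table's constraints, if any.
SELECT ANALYZE_CONSTRAINTS('public.new_sales');

-- Re-enable enforcement after any violations are resolved.
ALTER TABLE public.new_sales ALTER CONSTRAINT C_PRIMARY ENABLED;
```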

7.4 - Renaming tables

ALTER TABLE...RENAME TO renames one or more tables. Renamed tables retain their original OIDs.

You rename multiple tables by supplying two comma-delimited lists. Vertica maps the names according to their order in the two lists. Only the first list can qualify table names with a schema. For example:

=> ALTER TABLE S1.T1, S1.T2 RENAME TO U1, U2;

The RENAME TO parameter is applied atomically: all tables are renamed, or none of them. For example, if the number of tables to rename does not match the number of new names, none of the tables is renamed.

Caution

If a table is referenced by a view, renaming it causes the view to fail, unless you create another table with the previous name to replace the renamed table.

Using rename to swap tables within a schema

You can use ALTER TABLE...RENAME TO to swap tables within the same schema, without actually moving data. You cannot swap tables across schemas.

The following example swaps the data in tables T1 and T2 through intermediary table temp:

- t1 to temp

- t2 to t1

- temp to t2

=> DROP TABLE IF EXISTS temp, t1, t2;

DROP TABLE

=> CREATE TABLE t1 (original_name varchar(24));

CREATE TABLE

=> CREATE TABLE t2 (original_name varchar(24));

CREATE TABLE

=> INSERT INTO t1 VALUES ('original name t1');

OUTPUT

--------

1

(1 row)

=> INSERT INTO t2 VALUES ('original name t2');

OUTPUT

--------

1

(1 row)

=> COMMIT;

COMMIT

=> ALTER TABLE t1, t2, temp RENAME TO temp, t1, t2;

ALTER TABLE

=> SELECT * FROM t1, t2;

original_name | original_name

------------------+------------------

original name t2 | original name t1

(1 row)

7.5 - Moving tables to another schema

ALTER TABLE...SET SCHEMA moves a table from one schema to another. Vertica automatically moves all projections that are anchored to the source table to the destination schema. It also moves all IDENTITY columns to the destination schema.

Moving a table across schemas requires that you have USAGE privileges on the current schema and CREATE privileges on the destination schema. You can move only one table between schemas at a time. You cannot move temporary tables across schemas.

Name conflicts

If a table of the same name or any of the projections that you want to move already exist in the new schema, the statement rolls back and does not move either the table or any projections. To work around name conflicts:

- Rename any conflicting table or projections that you want to move.

- Run ALTER TABLE...SET SCHEMA again.

Note

Vertica lets you move system tables to system schemas. Moving system tables could be necessary to support designs created through the Database Designer.

Example

The following example moves table T1 from schema S1 to schema S2. All projections that are anchored on table T1 automatically move to schema S2:

=> ALTER TABLE S1.T1 SET SCHEMA S2;
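If the move rolls back because of a name conflict, the workaround described under Name conflicts can be sketched as follows. The example assumes that schema S2 already contains a table named T1, and T1_from_s1 is a hypothetical new name:

```sql
-- Assumption: S2 already contains a table named T1, so the move would roll back.
-- Rename the table in its current schema first, then move it.
ALTER TABLE S1.T1 RENAME TO T1_from_s1;
ALTER TABLE S1.T1_from_s1 SET SCHEMA S2;
```

If a projection name conflicts instead, rename that projection with ALTER PROJECTION...RENAME TO before you run ALTER TABLE...SET SCHEMA again.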

7.6 - Changing table ownership

As a superuser or table owner, you can reassign table ownership with ALTER TABLE...OWNER TO, as follows:

ALTER TABLE [schema.]table-name OWNER TO owner-name

Changing table ownership is useful when moving a table from one schema to another. Ownership reassignment is also useful when a table owner leaves the company or changes job responsibilities. Because you can change the table owner, tables do not have to be completely rewritten, which avoids lost productivity.

Changing table ownership automatically causes the following changes:

- Grants on the table that were made by the original owner are dropped, and all existing privileges on the table are revoked from the previous owner. A change in table ownership has no effect on schema privileges.

- Ownership of dependent IDENTITY sequences is transferred with the table. However, ownership does not change for named sequences created with CREATE SEQUENCE. To transfer ownership of these sequences, use ALTER SEQUENCE.

- New table ownership is propagated to its projections.
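Because named sequences keep their original owner, a full handover can take two statements. The following is a minimal sketch that assumes a hypothetical named sequence, t33_seq, which supplies default values for a column of table t33:

```sql
-- Transfer the table. Its projections and any IDENTITY sequence follow
-- automatically.
ALTER TABLE t33 OWNER TO Alice;

-- A named sequence is an independent object, so transfer it separately.
-- t33_seq is a hypothetical name used only for illustration.
ALTER SEQUENCE t33_seq OWNER TO Alice;
```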

Example

In this example, user Bob connects to the database, looks up the tables, and transfers ownership of table t33 from himself to user Alice.

=> \c - Bob

You are now connected as user "Bob".

=> \d

Schema | Name | Kind | Owner | Comment

--------+--------+-------+---------+---------

public | applog | table | dbadmin |

public | t33 | table | Bob |

(2 rows)

=> ALTER TABLE t33 OWNER TO Alice;

ALTER TABLE

When Bob looks up database tables again, he no longer sees table t33:

=> \d

List of tables

Schema | Name | Kind | Owner | Comment

--------+--------+-------+---------+---------

public | applog | table | dbadmin |

(1 row)

When user Alice connects to the database and looks up tables, she sees she is the owner of table t33.

=> \c - Alice

You are now connected as user "Alice".

=> \d

List of tables

Schema | Name | Kind | Owner | Comment

--------+------+-------+-------+---------

public | t33 | table | Alice |

(1 row)

Alice or a superuser can transfer table ownership back to Bob. In the following case a superuser performs the transfer.

=> \c - dbadmin

You are now connected as user "dbadmin".

=> ALTER TABLE t33 OWNER TO Bob;

ALTER TABLE

=> \d

List of tables

Schema | Name | Kind | Owner | Comment

--------+----------+-------+---------+---------

public | applog | table | dbadmin |

public | comments | table | dbadmin |

public | t33 | table | Bob |

s1 | t1 | table | User1 |

(4 rows)

You can also query system table TABLES to view table and owner information. Note that a change in ownership does not change the table ID.

In the following series of commands, the superuser changes table ownership back to Alice and queries the TABLES system table.

=> ALTER TABLE t33 OWNER TO Alice;

ALTER TABLE

=> SELECT table_schema_id, table_schema, table_id, table_name, owner_id, owner_name FROM tables;

table_schema_id | table_schema | table_id | table_name | owner_id | owner_name

-------------------+--------------+-------------------+------------+-------------------+------------

45035996273704968 | public | 45035996273713634 | applog | 45035996273704962 | dbadmin

45035996273704968 | public | 45035996273724496 | comments | 45035996273704962 | dbadmin

45035996273730528 | s1 | 45035996273730548 | t1 | 45035996273730516 | User1

45035996273704968 | public | 45035996273793876 | foo | 45035996273724576 | Alice

45035996273704968 | public | 45035996273795846 | t33 | 45035996273724576 | Alice

(5 rows)

Now the superuser changes table ownership back to Bob and queries the TABLES table again. Only the owner values in the t33 row change: owner_name changes from Alice to Bob, and owner_id changes to match. The table ID stays the same.

=> ALTER TABLE t33 OWNER TO Bob;

ALTER TABLE

=> SELECT table_schema_id, table_schema, table_id, table_name, owner_id, owner_name FROM tables;

table_schema_id | table_schema | table_id | table_name | owner_id | owner_name

-------------------+--------------+-------------------+------------+-------------------+------------

45035996273704968 | public | 45035996273713634 | applog | 45035996273704962 | dbadmin

45035996273704968 | public | 45035996273724496 | comments | 45035996273704962 | dbadmin

45035996273730528 | s1 | 45035996273730548 | t1 | 45035996273730516 | User1

45035996273704968 | public | 45035996273793876 | foo | 45035996273724576 | Alice

45035996273704968 | public | 45035996273795846 | t33 | 45035996273714428 | Bob

(5 rows)

8 - Sequences

Sequences can be used to set the default values of columns to sequential integer values. Sequences guarantee uniqueness, and help avoid constraint enforcement problems and overhead. Sequences are especially useful for primary key columns.

While sequence object values are guaranteed to be unique, they are not guaranteed to be contiguous. For example, two nodes can increment a sequence at different rates. The node with the heavier processing load increments the sequence more often, so its values are not contiguous with those generated on a node with a lighter load. For details, see Distributing sequences.

Vertica supports the following sequence types:

- Named sequences are database objects that generate unique numbers in sequential ascending or descending order. Named sequences are defined independently through CREATE SEQUENCE statements, and are managed independently of the tables that reference them. A table can set the default values of one or more columns to named sequences.

- IDENTITY column sequences increment or decrement a column's value as new rows are added. Unlike named sequences, IDENTITY sequence types are defined in a table's DDL, so they do not persist independently of that table. A table can contain only one IDENTITY column.
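The two sequence types can be contrasted with a short sketch. All object names here (order_seq, orders_named, orders_identity) are hypothetical and chosen only for illustration:

```sql
-- Named sequence: created as an independent object, then referenced as
-- the default value of a column.
CREATE SEQUENCE order_seq;
CREATE TABLE orders_named (
    order_id INT DEFAULT NEXTVAL('order_seq'),
    note     VARCHAR(32)
);

-- IDENTITY sequence: declared in the table DDL and tied to that table.
-- The arguments are the start value and the increment.
CREATE TABLE orders_identity (
    order_id IDENTITY(1, 1),
    note     VARCHAR(32)
);

-- In both tables, omitting order_id lets the sequence supply the value.
INSERT INTO orders_named (note) VALUES ('first order');
INSERT INTO orders_identity (note) VALUES ('first order');
COMMIT;
```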

8.1 - Sequence types compared

The following table lists the differences between the two sequence types:

|

Supported Behavior |

Named Sequence |

IDENTITY |

|

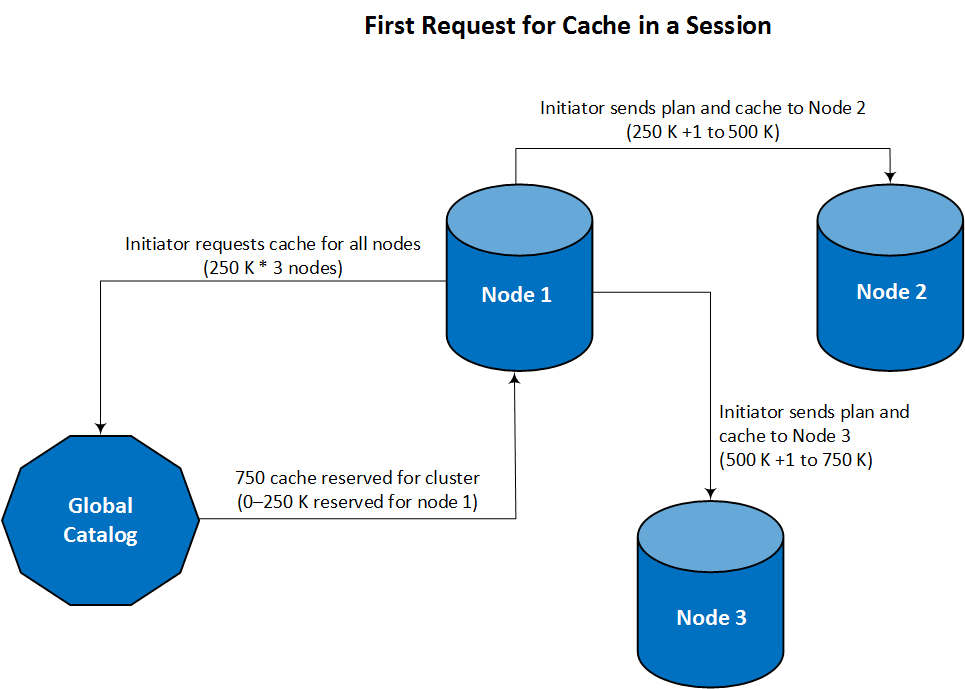

Default cache value 250K |

• |

• |

|

Set initial cache |

• |

• |

|

Define start value |

• |

• |

|

Specify increment unit |

• |

• |

|

Exists as an independent object |

• |

|

|

Exists only as part of table |

|

• |

|

Create as column constraint |

|

• |

|

Requires name |

• |

|

|

Use in expressions |

• |

|

|

Unique across tables |

• |

|

|

Change parameters |

• |

|

|

Move to different schema |

• |

|

|

Set to increment or decrement |

• |

|

|

Grant privileges to object |

• |

|

|

Specify minimum value |

• |

|

|

Specify maximum value |

• |

|

8.2 - Named sequences

Named sequences are sequences that are defined by CREATE SEQUENCE. Unlike IDENTITY sequences, which are defined in a table's DDL, you create a named sequence as an independent object, and then set it as the default value of a table column.

Named sequences are used most often when an application requires a unique identifier in a table or an expression. After a named sequence returns a value, it never returns the same value again in the same session.

8.2.1 - Creating and using named sequences

You create a named sequence with CREATE SEQUENCE. The statement requires only a sequence name; all other parameters are optional. To create a sequence, a user must have CREATE privileges on the schema that will contain the sequence.

The following example creates an ascending named sequence, my_seq, starting at the value 100:

=> CREATE SEQUENCE my_seq START 100;

CREATE SEQUENCE

Incrementing and decrementing a sequence

When you create a named sequence object, you can also specify its increment or decrement value by setting its INCREMENT parameter. If you omit this parameter, as in the previous example, the default is set to 1.

You increment or decrement a sequence by calling the function NEXTVAL on it, either directly on the sequence itself, or indirectly by adding new rows to a table that references the sequence. When called for the first time on a new sequence, NEXTVAL initializes the sequence to its start value. Vertica also creates a cache for the sequence. Subsequent NEXTVAL calls on the sequence increment its value.

The following call to NEXTVAL initializes the new my_seq sequence to 100:

=> SELECT NEXTVAL('my_seq');

nextval

---------

100

(1 row)
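To illustrate the INCREMENT parameter described above, the following sketch creates a second sequence that advances in steps of 10. The name seq_by_ten is hypothetical. The values noted in the comments are what the behavior described above implies when both calls run in a single session; they were not captured from a live system.

```sql
-- Hypothetical sequence that starts at 100 and advances by 10.
CREATE SEQUENCE seq_by_ten START 100 INCREMENT BY 10;

-- The first call initializes the sequence to its start value (100).
SELECT NEXTVAL('seq_by_ten');

-- A second call in the same session advances by the increment (110).
SELECT NEXTVAL('seq_by_ten');
```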

Getting a sequence's current value

You can obtain the current value of a sequence by calling CURRVAL on it. For example:

=> SELECT CURRVAL('my_seq');

CURRVAL

---------

100

(1 row)

Note

CURRVAL returns an error if you call it on a new sequence that has not yet been initialized by NEXTVAL, or an existing sequence that has not yet been accessed in a new session. For example:

=> CREATE SEQUENCE seq2;

CREATE SEQUENCE

=> SELECT currval('seq2');

ERROR 4700: Sequence seq2 has not been accessed in the session

Referencing sequences in tables