Before reading the topics in this section, you should be familiar with the material in Getting started and with creating and configuring a fully functioning example database.

Configuring the database

- 1: Configuration procedure

- 1.1: Prepare disk storage locations

- 1.1.1: Specifying disk storage location during database creation

- 1.1.2: Specifying disk storage location on MC

- 1.1.3: Configuring disk usage to optimize performance

- 1.1.4: Using shared storage with Vertica

- 1.1.5: Viewing database storage information

- 1.1.6: Anti-virus scanning exclusions

- 1.2: Disk space requirements for Vertica

- 1.3: Disk space requirements for Management Console

- 1.4: Prepare the logical schema script

- 1.5: Prepare data files

- 1.6: Prepare load scripts

- 1.7: Create an optional sample query script

- 1.8: Create an empty database

- 1.9: Create the logical schema

- 1.10: Perform a partial data load

- 1.11: Test the database

- 1.12: Optimize query performance

- 1.13: Complete the data load

- 1.14: Test the optimized database

- 1.15: Implement locales for international data sets

- 1.15.1: Specify the default locale for the database

- 1.15.2: Override the default locale for a session

- 1.15.3: Server versus client locale settings

- 1.16: Using time zones with Vertica

- 1.17: Change transaction isolation levels

- 2: Configuration parameter management

- 3: Designing a logical schema

- 3.1: Using multiple schemas

- 3.1.1: Multiple schema examples

- 3.1.2: Creating schemas

- 3.1.3: Specifying objects in multiple schemas

- 3.1.4: Setting search paths

- 3.1.5: Creating objects that span multiple schemas

- 3.2: Tables in schemas

- 4: Creating a database design

- 4.1: About Database Designer

- 4.2: How Database Designer creates a design

- 4.3: Database Designer access requirements

- 4.4: Logging projection data for Database Designer

- 4.4.1: Enabling logging for Database Designer

- 4.4.2: Viewing Database Designer logs

- 4.4.3: Database Designer logs: example data

- 4.5: General design settings

- 4.6: Building a design

- 4.7: Resetting a design

- 4.8: Deploying a design

- 4.9: How to create a design

- 4.10: Running Database Designer programmatically

- 4.10.1: Database Designer function categories

- 4.10.2: Workflow for running Database Designer programmatically

- 4.10.3: Privileges for running Database Designer functions

- 4.10.4: Resource pool for Database Designer users

- 4.11: Creating custom designs

- 4.11.1: Custom design process

- 4.11.2: Planning your design

- 4.11.2.1: Design requirements

- 4.11.2.2: Determining the number of projections to use

- 4.11.2.3: Designing for K-safety

- 4.11.2.3.1: Requirements for a K-safe physical schema design

- 4.11.2.3.2: Requirements for a physical schema design with no K-safety

- 4.11.2.3.3: Designing segmented projections for K-safety

- 4.11.2.3.4: Designing unsegmented projections for K-Safety

- 4.11.2.4: Designing for segmentation

- 4.11.3: Design fundamentals

- 4.11.3.1: Writing and deploying custom projections

- 4.11.3.2: Designing superprojections

- 4.11.3.3: Sort order benefits

- 4.11.3.4: Choosing sort order: best practices

- 4.11.3.5: Prioritizing column access speed

1 - Configuration procedure

This section describes the tasks required to set up a Vertica database. It assumes that you have a valid license key file, have installed the Vertica rpm package, and have run the installation script as described.

You complete the configuration procedure using:

- Administration tools

  If you are unfamiliar with Dialog-based user interfaces, read Using the administration tools interface before you begin. See also the Administration tools reference for details.

- vsql interactive interface

- Database Designer, described in Creating a database design

Note

You can also perform certain tasks using Management Console. Those tasks point to the appropriate topic.

Continuing configuring

Follow the configuration procedure sequentially as this section describes.

Vertica strongly recommends that you first experiment with creating and configuring a database.

You can use this generic configuration procedure several times during the development process, modifying it to fit your changing goals. You can omit steps such as preparing actual data files and sample queries, and run the Database Designer without optimizing for queries. For example, you can create, load, and query a database several times for development and testing purposes, then one final time to create and load the production database.

1.1 - Prepare disk storage locations

You must create and specify directories in which to store your catalog and data files (physical schema). You can specify these locations when you install or configure the database, or later during database operations. Both the catalog and data directories must be owned by the database superuser.

The directory you specify for database catalog files (the catalog path) is used across all nodes in the cluster. For example, if you specify /home/catalog as the catalog directory, Vertica uses that catalog path on all nodes. The catalog directory should always be separate from any data file directories.

Note

Do not use a shared directory for more than one node. Data and catalog directories must be distinct for each node. Multiple nodes must not be allowed to write to the same data or catalog directory.

The data path you designate is also used across all nodes in the cluster. If you specify that data should be stored in /home/data, Vertica uses this path on all database nodes.

Do not use a single directory to contain both catalog and data files. You can store the catalog and data directories on different drives, which can be either on drives local to the host (recommended for the catalog directory) or on a shared storage location, such as an external disk enclosure or a SAN.

Before you specify a catalog or data path, be sure the parent directory exists on all nodes of your database. Creating a database in admintools also creates the catalog and data directories, but the parent directory must exist on each node.

You do not need to specify a disk storage location during installation. However, you can do so by using the --data-dir parameter to the install_vertica script. See Specifying disk storage location during installation.

1.1.1 - Specifying disk storage location during database creation

When you invoke the Create Database command in the Administration tools, a dialog box allows you to specify the catalog and data locations. These locations must exist on each host in the cluster and must be owned by the database administrator.

When you click OK, Vertica automatically creates the following subdirectories:

catalog-pathname/database-name/node-name_catalog/

data-pathname/database-name/node-name_data/

For example, if you use the default value (the database administrator's home directory) of /home/dbadmin for the Stock Exchange example database, the catalog and data directories are created on each node in the cluster as follows:

/home/dbadmin/Stock_Schema/stock_schema_node1_host01_catalog

/home/dbadmin/Stock_Schema/stock_schema_node1_host01_data
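As a minimal sketch of the layout above, the following Python helper builds the two directory names from a catalog path, a data path, a database name, and a node name. The function name and its parameters are hypothetical and exist only for illustration; the path pattern itself is taken from the text.

```python
import posixpath


def storage_dirs(catalog_path, data_path, database, node):
    """Return (catalog_dir, data_dir) following the documented pattern.

    Hypothetical helper: it only formats names and does not create anything.
    """
    catalog_dir = posixpath.join(catalog_path, database, f"{node}_catalog")
    data_dir = posixpath.join(data_path, database, f"{node}_data")
    return catalog_dir, data_dir


# Values from the Stock Exchange example in the text.
catalog_dir, data_dir = storage_dirs(
    "/home/dbadmin", "/home/dbadmin", "Stock_Schema", "stock_schema_node1_host01"
)
print(catalog_dir)
print(data_dir)
```

Because both paths default to the same parent directory in this example, the `_catalog` and `_data` suffixes are what keep the two directories distinct.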

Notes

- Catalog and data path names must contain only alphanumeric characters and cannot have leading space characters. Failure to comply with these restrictions will result in database creation failure.

- Vertica refuses to overwrite a directory if it appears to be in use by another database. Therefore, if you created a database for evaluation purposes, dropped the database, and want to reuse the database name, make sure that the disk storage location previously used has been completely cleaned up. See Managing storage locations for details.

1.1.2 - Specifying disk storage location on MC

You can use the MC interface to specify where you want to store database metadata on the cluster in the following ways:

- When you configure MC the first time

- When you create new databases using MC


1.1.3 - Configuring disk usage to optimize performance

Once you have created your initial storage location, you can add additional storage locations to the database later. Not only does this provide additional space, it lets you control disk usage and increase I/O performance by isolating files that have different I/O or access patterns. For example, consider:

- Isolating execution engine temporary files from data files by creating a separate storage location for temp space.

- Creating labeled storage locations and storage policies, in which selected database objects are stored on different storage locations based on measured performance statistics or predicted access patterns.

See also

Managing storage locations

1.1.4 - Using shared storage with Vertica

If using shared SAN storage, ensure there is no contention among the nodes for disk space or bandwidth.

- Each host must have its own catalog and data locations. Hosts cannot share catalog or data locations.

- Configure the storage so that there is enough I/O bandwidth for each node to access the storage independently.

1.1.5 - Viewing database storage information

You can view node-specific information on your Vertica cluster through the Management Console. See Monitoring Vertica Using Management Console for details.

1.1.6 - Anti-virus scanning exclusions

You should exclude the Vertica catalog and data directories from anti-virus scanning. Certain anti-virus products have been identified as targeting Vertica directories, and sometimes lock or delete files in them. This can adversely affect Vertica performance and data integrity.

Identified anti-virus products include the following:

- ClamAV

- SentinelOne

- Sophos

- Symantec

- Twistlock

Important

This list is not comprehensive.

1.2 - Disk space requirements for Vertica

In addition to actual data stored in the database, Vertica requires disk space for several data reorganization operations, such as mergeout and managing nodes in the cluster. For best results, Vertica recommends that disk utilization per node be no more than sixty percent (60%) for a K-Safe=1 database to allow such operations to proceed.

In addition, disk space is temporarily required by certain query execution operators, such as hash joins and sorts, in the case when they cannot be completed in memory (RAM). Such operators might be encountered during queries, recovery, refreshing projections, and so on. The amount of disk space needed (known as temp space) depends on the nature of the queries, amount of data on the node and number of concurrent users on the system. By default, any unused disk space on the data disk can be used as temp space. However, Vertica recommends provisioning temp space separate from data disk space.
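The 60% recommendation can be checked with a few lines of Python. This is a sketch under stated assumptions, not a Vertica tool: the function names are hypothetical, and `shutil.disk_usage` reports usage for the whole file system that contains the given path, which only approximates the space used by Vertica itself.

```python
import shutil

# Recommended ceiling from the text for a K-Safe=1 database.
MAX_UTILIZATION = 0.60


def utilization(used_bytes, total_bytes):
    """Fraction of the disk that is in use."""
    if total_bytes <= 0:
        raise ValueError("total_bytes must be positive")
    return used_bytes / total_bytes


def within_recommendation(used_bytes, total_bytes, limit=MAX_UTILIZATION):
    """True if utilization does not exceed the recommended limit."""
    return utilization(used_bytes, total_bytes) <= limit


def check_path(path):
    """Check the file system that holds `path` (for example, a data directory)."""
    usage = shutil.disk_usage(path)
    return within_recommendation(usage.used, usage.total)
```

For example, `check_path("/home/dbadmin")` would report whether the file system holding that directory is at or below the recommended utilization; run it on each node, because the recommendation applies per node.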


1.3 - Disk space requirements for Management Console

You can install Management Console on any node in the cluster, so it has no special disk requirements other than disk space you allocate for your database cluster.

1.4 - Prepare the logical schema script

Designing a logical schema for a Vertica database is no different from designing one for any other SQL database. Details are described more fully in Designing a logical schema.

To create your logical schema, prepare a SQL script (plain text file, typically with an extension of .sql) that:

- Creates additional schemas (as necessary). See Using multiple schemas.

- Creates the tables and column constraints in your database using the CREATE TABLE command.

- Defines the necessary table constraints using the ALTER TABLE command.

- Defines any views on the table using the CREATE VIEW command.

You can generate a script file using:

- A schema designer application.

- A schema extracted from an existing database.

- A text editor.

- One of the example database example-name_define_schema.sql scripts as a template. (See the example database directories in /opt/vertica/examples.)

In your script file, make sure that:

- Each statement ends with a semicolon.

- You use data types supported by Vertica, as described in the SQL Reference Manual.

Once you have created a database, you can test your schema script by executing it as described in Create the logical schema. If you encounter errors, drop all tables, correct the errors, and run the script again.

1.5 - Prepare data files

Prepare two sets of data files:

- Test data files. Use test files to test the database after the partial data load. If possible, use part of the actual data files to prepare the test data files.

- Actual data files. Once the database has been tested and optimized, use the actual data files for your initial data load.

How to name data files

Name each data file to match the corresponding table in the logical schema. Case does not matter.

Use the extension .tbl or whatever you prefer. For example, if a table is named Stock_Dimension, name the corresponding data file stock_dimension.tbl. When using multiple data files, append _nnn (where nnn is a positive integer in the range 001 to 999) to the file name. For example, stock_dimension.tbl_001, stock_dimension.tbl_002, and so on.
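The naming convention can be sketched in Python as follows. The helper name is hypothetical, and lowercasing the table name is only one choice, since the text says case does not matter.

```python
def data_file_names(table_name, file_count=1, extension="tbl"):
    """Return data file names for a table, following the convention above.

    A single file is named <table>.<extension>; multiple files get a
    three-digit suffix from _001 up to _999.
    """
    if not 1 <= file_count <= 999:
        raise ValueError("file_count must be between 1 and 999")
    base = f"{table_name.lower()}.{extension}"
    if file_count == 1:
        return [base]
    return [f"{base}_{index:03d}" for index in range(1, file_count + 1)]


print(data_file_names("Stock_Dimension"))
print(data_file_names("Stock_Dimension", 3))
```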

1.6 - Prepare load scripts

Note

You can postpone this step if your goal is to test a logical schema design for validity.

Prepare SQL scripts to load data directly into physical storage using COPY on vsql, or through ODBC.

You need scripts that load:

- Large tables

- Small tables

Vertica recommends that you load large tables using multiple files. To test the load process, use files of 10GB to 50GB in size. This size provides several advantages:

- You can use one of the data files as a sample data file for the Database Designer.

- You can load just enough data to perform a partial data load before you load the remainder.

- If a single load fails and rolls back, you do not lose an excessive amount of time.

- Once the load process is tested, for multi-terabyte tables, break up the full load in file sizes of 250–500GB.
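One way to organize a multi-file load is to generate one COPY statement per data file and keep the first file aside for the partial load. The sketch below is illustrative only: the helper names, the /data directory, and the pipe delimiter are assumptions, so adjust them to match your files.

```python
def copy_statements(table, files, delimiter="|"):
    """Build one COPY statement per data file (assumed to be delimited text)."""
    return [
        f"COPY {table} FROM '{path}' DELIMITER '{delimiter}';"
        for path in files
    ]


def split_load(files, partial_count=1):
    """Split files into a partial-load set and the remainder."""
    return files[:partial_count], files[partial_count:]


# Hypothetical file locations, named after the table as described earlier.
files = [f"/data/stock_dimension.tbl_{i:03d}" for i in range(1, 4)]
partial, remainder = split_load(files)

partial_script = "\n".join(copy_statements("Stock_Dimension", partial))
full_script = "\n".join(copy_statements("Stock_Dimension", remainder))
print(partial_script)
print(full_script)
```

Keeping one statement per file means that a failed load rolls back only that file's data, which matches the motivation given in the list above.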


Tip

You can use the load scripts included in the example databases as templates.

1.7 - Create an optional sample query script

The purpose of a sample query script is to test your schema and load scripts for errors.

Include a sample of queries your users are likely to run against the database. If you don't have any real queries, just write simple SQL that collects counts on each of your tables. Alternatively, you can skip this step.
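If you have no real queries yet, a count-per-table script can be generated rather than written by hand. This is a minimal sketch; the helper name and the table names are hypothetical.

```python
def count_queries(tables):
    """Return one row-count query per table."""
    return [f"SELECT COUNT(*) FROM {table};" for table in tables]


# Hypothetical table names; replace them with the tables in your schema.
script = "\n".join(count_queries(["Stock_Dimension", "Trade_Fact"]))
print(script)
```

Save the output to a .sql file so that you can run it later with the vsql \i meta-command.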

1.8 - Create an empty database

Two options are available for creating an empty database:

- Using the Management Console. For details, see Creating a database using MC.

- Using Administration tools.

Although you can create more than one database (for example, one for production and one for testing), there can be only one active database for each installation of Vertica Analytic Database.

1.8.1 - Creating a database name and password

Database names

Database names must conform to the following rules:

- Be between 1 and 30 characters long

- Begin with a letter

- Follow with any combination of letters (upper and lowercase), numbers, and/or underscores

Database names are case sensitive; however, Vertica strongly recommends that you do not create databases with names that differ only in case. For example, do not create a database called mydatabase and another called MyDataBase.
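The naming rules translate directly into a regular expression. The following Python sketch assumes that "letters" means ASCII letters, which the text does not state explicitly; the function name is hypothetical.

```python
import re

# One leading letter, then up to 29 letters, digits, or underscores
# (1 to 30 characters in total). ASCII letters are an assumption.
_DATABASE_NAME = re.compile(r"[A-Za-z][A-Za-z0-9_]{0,29}")


def is_valid_database_name(name):
    """True if `name` satisfies the documented naming rules."""
    return _DATABASE_NAME.fullmatch(name) is not None


for candidate in ("mydatabase", "Stock_Schema", "1db", "my-db", ""):
    print(repr(candidate), is_valid_database_name(candidate))
```

The check does not cover the recommendation about names that differ only in case; comparing `name.lower()` against existing database names would be one way to enforce that as well.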

Database passwords

Database passwords can contain letters, digits, and special characters listed in the next table. Passwords cannot include non-ASCII Unicode characters.

The allowed password length is between 0 and 100 characters. The database superuser can change a Vertica user's maximum password length using ALTER PROFILE.

You use Profiles to specify and control password definitions. For instance, a profile can define the maximum length, reuse time, and the minimum number of required digits for a password, as well as other details.

The following special (ASCII) characters are valid in passwords. Special characters can appear anywhere in a password string. For example, mypas$word and $mypassword are both valid, while ±mypassword is not. Using special characters other than the ones listed below can cause database instability.

- #

- ?

- =

- _

- '

- )

- (

- @

- \

- /

- !

- ,

- ~

- :

- %

- ;

- `

- ^

- +

- .

- -

- space

- &

- <

- >

- [

- ]

- {

- }

- |

- *

- $

- "

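Taken together with ASCII letters and digits, the special characters listed above cover the printable ASCII range from space (0x20) to tilde (0x7E). Under that reading, a password check can be sketched in Python as shown below. The function name is hypothetical, and the default maximum of 100 is the documented default length limit, which a profile can change.

```python
def is_valid_password(password, max_length=100):
    """True if the password uses only printable ASCII and fits the length limit.

    Assumption: letters, digits, and the listed special characters together
    are exactly the characters from ' ' (0x20) through '~' (0x7E).
    """
    if len(password) > max_length:
        return False
    return all(" " <= character <= "~" for character in password)


for candidate in ("mypas$word", "$mypassword", "\u00b1mypassword"):
    print(repr(candidate), is_valid_password(candidate))
```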

1.8.2 - Create a database using administration tools

- Run the Administration tools from your Administration host as follows:

  $ /opt/vertica/bin/admintools

  If you are using a remote terminal application, such as PuTTY or a Cygwin bash shell, see Notes for remote terminal users.

- Accept the license agreement and specify the location of your license file. For more information, see Managing licenses.

  This step is necessary only if it is the first time you have run the Administration Tools.

- On the Main Menu, click Configuration Menu, and click OK.

- On the Configuration Menu, click Create Database, and click OK.

- Enter the name of the database and an optional comment, and click OK. See Creating a database name and password for naming guidelines and restrictions.

- Establish the superuser password for your database.

  - To provide a password, enter the password and click OK. Confirm the password by entering it again, and then click OK.

  - If you don't want to provide the password, leave it blank and click OK. If you don't set a password, Vertica prompts you to verify that you truly do not want to establish a superuser password for this database. Click Yes to create the database without a password or No to establish the password.

  Caution

  If you do not enter a password at this point, the superuser password is set to empty. Unless the database is for evaluation or academic purposes, Vertica strongly recommends that you enter a superuser password. See Creating a database name and password for guidelines.

- Select the hosts to include in the database from the list of hosts specified when Vertica was installed (install_vertica -s), and click OK.

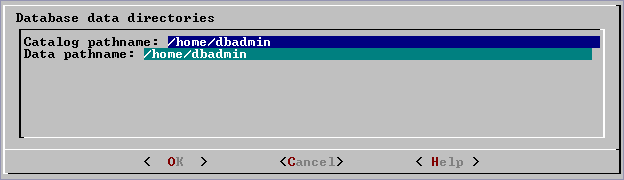

- Specify the directories in which to store the data and catalog files, and click OK.

  Note

  Do not use a shared directory for more than one node. Data and catalog directories must be distinct for each node. Multiple nodes must not be allowed to write to the same data or catalog directory.

- Catalog and data path names must contain only alphanumeric characters and cannot have leading spaces. Failure to comply with these restrictions results in database creation failure.

  For example:

  Catalog pathname: /home/dbadmin

  Data Pathname: /home/dbadmin

- Review the Current Database Definition screen to verify that it represents the database you want to create, and then click Yes to proceed or No to modify the database definition.

- If you click Yes, Vertica creates the database you defined and then displays a message to indicate that the database was successfully created.

  Note

  For databases created with 3 or more nodes, Vertica automatically sets K-safety to 1 to ensure that the database is fault tolerant in case a node fails. For more information, see Failure recovery in the Administrator's Guide and MARK_DESIGN_KSAFE.

- Click OK to acknowledge the message.

1.9 - Create the logical schema

- Connect to the database.

  In the Administration Tools Main Menu, click Connect to Database and click OK.

  See Connecting to the Database for details.

  The vsql welcome script appears:

  Welcome to vsql, the Vertica Analytic Database interactive terminal.

  Type:  \h or \? for help with vsql commands
         \g or terminate with semicolon to execute query
         \q to quit

  =>

- Run the logical schema script.

  Use the \i meta-command in vsql to run the SQL logical schema script that you prepared earlier.

- Disconnect from the database.

  Use the \q meta-command in vsql to return to the Administration Tools.

1.10 - Perform a partial data load

Vertica recommends that for large tables, you perform a partial data load and then test your database before completing a full data load. This load should load a representative amount of data.

- Load the small tables.

  Load the small table data files using the SQL load scripts and data files you prepared earlier.

- Partially load the large tables.

  Load 10GB to 50GB of table data for each table using the SQL load scripts and data files that you prepared earlier.

For more information about projections, see Projections.

1.11 - Test the database

Test the database to verify that it is running as expected.

Check queries for syntax errors and execution times.

- Use the vsql \timing meta-command to enable the display of query execution time in milliseconds.

- Execute the SQL sample query script that you prepared earlier.

- Execute several ad hoc queries.

1.12 - Optimize query performance

Optimizing the database consists of optimizing for compression and tuning for queries. (See Creating a database design.)

To optimize the database, use the Database Designer to create and deploy a design. See Using Database Designer to create a comprehensive design.

After you run the Database Designer, use the techniques described in Query optimization to improve the performance of certain types of queries.

Note

The database response time depends on factors such as type and size of the application query, database design, data size and data types stored, available computational power, and network bandwidth. Adding nodes to a database cluster does not necessarily improve the system response time for every query, especially if the response time is already short, e.g., less than 10 seconds, or the response time is not hardware bound.

1.13 - Complete the data load

To complete the load:

- Monitor system resource usage.

  Continue to run the top, free, and df utilities and watch them while your load scripts are running (as described in Monitoring Linux resource usage). You can do this on any or all nodes in the cluster. Make sure that the system is not swapping excessively (watch kswapd in top) or running out of swap space (watch for a large amount of used swap space in free).

  Note

  Vertica requires a dedicated server. If your loader or other processes take up significant amounts of RAM, it can result in swapping.

- Complete the large table loads.

  Run the remainder of the large table load scripts.
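If you prefer to script the swap check instead of watching free by hand, the same numbers are available on Linux in /proc/meminfo. The following Python sketch parses that format; the function name is hypothetical and the sample values are made up for illustration.

```python
def swap_usage(meminfo_text):
    """Return (used_kb, used_fraction) from /proc/meminfo-style text."""
    fields = {}
    for line in meminfo_text.splitlines():
        key, separator, rest = line.partition(":")
        parts = rest.split()
        if separator and parts:
            fields[key.strip()] = int(parts[0])  # values are reported in kB
    total = fields.get("SwapTotal", 0)
    free = fields.get("SwapFree", 0)
    used = total - free
    return used, (used / total if total else 0.0)


# Made-up sample in the /proc/meminfo format.
SAMPLE = """MemTotal:       65806552 kB
MemFree:         1203344 kB
SwapTotal:       8388604 kB
SwapFree:        6291453 kB
"""

used_kb, fraction = swap_usage(SAMPLE)
print(f"swap used: {used_kb} kB ({fraction:.0%})")
```

On a running node you could pass the contents of /proc/meminfo instead of the sample and repeat the check while the load scripts run. What counts as "a large amount" of used swap is left to your judgment, as in the text.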

1.14 - Test the optimized database

Check query execution times to test your optimized design:

- Use the vsql \timing meta-command to enable the display of query execution time in milliseconds.

  Execute a SQL sample query script to test your schema and load scripts for errors.

  Note

  Include a sample of queries your users are likely to run against the database. If you don't have any real queries, just write simple SQL that collects counts on each of your tables. Alternatively, you can skip this step.

- Execute several ad hoc queries:

  - Run Administration tools and select Connect to Database.

  - Use the \i meta-command to execute the query script; for example:

    vmartdb=> \i vmart_query_03.sql
      customer_name   | annual_income
    ------------------+---------------
     James M. McNulty |        999979
     Emily G. Vogel   |        999998
    (2 rows)

    Time: First fetch (2 rows): 58.411 ms. All rows formatted: 58.448 ms

    vmartdb=> \i vmart_query_06.sql
     store_key | order_number | date_ordered
    -----------+--------------+--------------
            45 |       202416 | 2004-01-04
           113 |        66017 | 2004-01-04
           121 |       251417 | 2004-01-04
            24 |       250295 | 2004-01-04
             9 |       188567 | 2004-01-04
           166 |        36008 | 2004-01-04
            27 |       150241 | 2004-01-04
           148 |       182207 | 2004-01-04
           198 |        75716 | 2004-01-04
    (9 rows)

    Time: First fetch (9 rows): 25.342 ms. All rows formatted: 25.383 ms

Once the database is optimized, it should run queries efficiently. If you discover queries that you want to optimize, you can modify and update the design incrementally.

1.15 - Implement locales for international data sets

Locale specifies the user's language, country, and any special variant preferences, such as collation. Vertica uses locale to determine the behavior of certain string functions. Locale also determines the collation for various SQL commands that require ordering and comparison, such as aggregate GROUP BY and ORDER BY clauses, joins, and the analytic ORDER BY clause.

The default locale for a Vertica database is en_US@collation=binary (English US). You can define a new default locale that is used for all sessions on the database. You can also override the locale for individual sessions. However, projections are always collated using the default en_US@collation=binary collation, regardless of the session collation. Any locale-specific collation is applied at query time.

If you set the locale to null, Vertica sets the locale to en_US_POSIX. You can set the locale back to the default locale and collation by issuing the vsql meta-command \locale. For example:

Note

=> set locale to '';

INFO 2567: Canonical locale: 'en_US_POSIX'

Standard collation: 'LEN'

English (United States, Computer)

SET

=> \locale en_US@collation=binary;

INFO 2567: Canonical locale: 'en_US'

Standard collation: 'LEN_KBINARY'

English (United States)

=> \locale

en_US@collation-binary;

You can set locale through ODBC, JDBC, and ADO.NET.

ICU locale support

Vertica uses the ICU library for locale support; you must specify locale using the ICU locale syntax. The locale used by the database session is not derived from the operating system (through the LANG variable), so Vertica recommends that you set the LANG for each node running vsql, as described in the next section.

While ICU library services can specify collation, currency, and calendar preferences, Vertica supports only the collation component. Any keywords not relating to collation are rejected. Projections are always collated using the en_US@collation=binary collation regardless of the session collation. Any locale-specific collation is applied at query time.

The SET DATESTYLE TO ... command provides some aspects of the calendar, but Vertica supports only dollars as currency.

Changing DB locale for a session

This example sets the session locale to Thai.

- At the operating-system level for each node running vsql, set the LANG variable to the locale language as follows:

  export LANG=th_TH.UTF-8

  Note

  If setting LANG= as shown does not work, the operating system support for locales may not be installed.

- For each Vertica session (from ODBC/JDBC or vsql) set the language locale.

  From vsql:

  \locale th_TH

  From ODBC/JDBC:

  "SET LOCALE TO th_TH;"

- In PuTTY (or ssh terminal), change the settings as follows:

  settings > window > translation > UTF-8

- Click Apply and then click Save.

All data loaded must be in UTF-8 format, not an ISO format, as described in Delimited data. Character sets like ISO 8859-1 (Latin1), which are incompatible with UTF-8, are not supported, so functions like SUBSTRING do not work correctly for multibyte characters in such data, and locale settings do not work correctly either. If the translation setting ISO-8859-11:2001 (Latin/Thai) appears to work, the data was loaded incorrectly. To convert data correctly, use a utility program such as Linux iconv.
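The conversion that iconv performs can also be sketched in Python, which ships a codec for ISO 8859-11. This is an illustration rather than a recommended loader; the function name is hypothetical, and the source encoding is an assumption that you must confirm for your own files.

```python
def to_utf8(raw_bytes, source_encoding="iso8859_11"):
    """Re-encode bytes from a legacy encoding to UTF-8."""
    return raw_bytes.decode(source_encoding).encode("utf-8")


# 0xA1 is the Thai letter KO KAI (U+0E01) in ISO 8859-11.
legacy = bytes([0xA1])
converted = to_utf8(legacy)
print(converted)
```

The single legacy byte becomes three bytes in UTF-8, which is why field sizes must also be reviewed after conversion.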

Note

The maximum length parameter for VARCHAR and CHAR data types refers to the number of octets (bytes) that can be stored in that field, not the number of characters. When using multi-byte UTF-8 characters, make sure to size fields to accommodate from 1 to 4 bytes per character, depending on the data.
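To see the difference between characters and octets, you can measure the UTF-8 length of sample values before you choose field sizes. A minimal Python sketch with hypothetical helper names:

```python
def utf8_octets(text):
    """Number of bytes the text occupies when encoded as UTF-8."""
    return len(text.encode("utf-8"))


def fits(text, max_octets):
    """True if the text fits a field declared with `max_octets` bytes."""
    return utf8_octets(text) <= max_octets


# A six-character Thai greeting, written with escapes to keep the source ASCII.
thai_greeting = "\u0e2a\u0e27\u0e31\u0e2a\u0e14\u0e35"
print(len(thai_greeting), utf8_octets(thai_greeting))
print(fits(thai_greeting, 6), fits(thai_greeting, 18))
```

Each of these Thai characters takes three bytes in UTF-8, so a value that looks six characters long needs a field of at least 18 octets.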

1.15.1 - Specify the default locale for the database

After you start the database, the default locale configuration parameter, DefaultSessionLocale, sets the initial locale. You can override this value for individual sessions.

To set the locale for the database, use the configuration parameter as follows:

=> ALTER DATABASE DEFAULT SET DefaultSessionLocale = 'ICU-locale-identifier';

For example:

=> ALTER DATABASE DEFAULT SET DefaultSessionLocale = 'en_GB';

1.15.2 - Override the default locale for a session

You can override the default locale for the current session in two ways:

- VSQL command \locale. For example:

  => \locale en_GB
  INFO 2567: Canonical locale: 'en_GB'
  Standard collation: 'LEN'
  English (United Kingdom)

- SQL statement SET LOCALE. For example:

  => SET LOCALE TO en_GB;
  INFO 2567: Canonical locale: 'en_GB'
  Standard collation: 'LEN'
  English (United Kingdom)

Both methods accept locale short and long forms. For example:

=> SET LOCALE TO LEN;

INFO 2567: Canonical locale: 'en'

Standard collation: 'LEN'

English

=> \locale LEN

INFO 2567: Canonical locale: 'en'

Standard collation: 'LEN'

English


1.15.3 - Server versus client locale settings

Vertica differentiates database server locale settings from client application locale settings:

- Server locale settings only impact collation behavior for server-side query processing.

- Client applications should verify that locale is set appropriately in order to display characters correctly.

The following sections describe best practices to ensure predictable results.

Server locale

The server session locale should be set as described in Specify the default locale for the database. If locales vary across different sessions, set the server locale at the start of each session from your client.

vsql client

- If the database does not have a default session locale, set the server locale for the session to the desired locale.

- The locale setting in the terminal emulator where the vsql client runs should be set to be equivalent to the session locale setting on the server side (ICU locale). By doing so, the data is collated correctly on the server and displayed correctly on the client.

- All input data for vsql should be in UTF-8, and all output data is encoded in UTF-8.

- Vertica does not support non-UTF-8 encodings and associated locale values.

- For instructions on setting locale and encoding, refer to your terminal emulator documentation.

ODBC clients

- ODBC applications can be either in ANSI or Unicode mode. If the user application is Unicode, the encoding used by ODBC is UCS-2. If the user application is ANSI, the data must be in single-byte ASCII, which is compatible with UTF-8 used on the database server. The ODBC driver converts UCS-2 to UTF-8 when passing to the Vertica server and converts data sent by the Vertica server from UTF-8 to UCS-2.

- If the user application is not already in UCS-2, the application must convert the input data to UCS-2, or unexpected results could occur. For example:

  - When non-UCS-2 data passed to ODBC APIs is interpreted as UCS-2, an invalid UCS-2 symbol could be passed to the APIs, resulting in errors.

  - The symbol provided in the alternate encoding could be a valid UCS-2 symbol. If this occurs, incorrect data is inserted into the database.

- If the database does not have a default session locale, ODBC applications should set the desired server session locale using SQLSetConnectAttr (if different from the database-wide setting). By doing so, you get the expected collation and string function behavior on the server.
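Both failure modes described above can be reproduced outside ODBC. The Python sketch below uses the UTF-16-LE codec as a stand-in for UCS-2, because Python has no separate UCS-2 codec and the two encodings agree for characters in the Basic Multilingual Plane. It illustrates the encoding mismatch only and does not call any ODBC API.

```python
# UTF-8 bytes for the single character e-acute (U+00E9).
utf8_bytes = "\u00e9".encode("utf-8")  # two bytes: 0xC3 0xA9

# Case 1: the bytes happen to form a valid 16-bit symbol, so the wrong
# character is accepted silently.
misread = utf8_bytes.decode("utf-16-le")

# Case 2: the bytes cannot be interpreted as 16-bit units at all.
try:
    b"abc".decode("utf-16-le")
    decode_error = None
except UnicodeDecodeError as error:
    decode_error = error.reason

# Correct handling: convert to the 16-bit encoding first, then to UTF-8.
round_trip = "\u00e9".encode("utf-16-le").decode("utf-16-le").encode("utf-8")

print(repr(misread), decode_error, round_trip == utf8_bytes)
```

The first case is the more dangerous one, because nothing fails and the incorrect character is stored.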

JDBC and ADO.NET clients

- JDBC and ADO.NET applications use a UTF-16 character set encoding and are responsible for converting any non-UTF-16 encoded data to UTF-16. The same cautions apply as for ODBC if this encoding is violated.

- The JDBC and ADO.NET drivers convert UTF-16 data to UTF-8 when passing to the Vertica server and convert data sent by Vertica server from UTF-8 to UTF-16.

- If there is no default session locale at the database level, JDBC and ADO.NET applications should set the correct server session locale by executing the SET LOCALE TO command in order to get the expected collation and string functions behavior on the server. For more information, see SET LOCALE.

1.16 - Using time zones with Vertica

Vertica uses the public-domain tz database (time zone database), which contains code and data that represent the history of local time for locations around the globe. This database organizes time zone and daylight saving time data by partitioning the world into timezones whose clocks all agree on timestamps that are later than the POSIX Epoch (1970-01-01 00:00:00 UTC). Each timezone has a unique identifier. Identifiers typically follow the convention area/location, where area is a continent or ocean, and location is a specific location within the area—for example, Africa/Cairo, America/New_York, and Pacific/Honolulu.

Important

IANA acknowledges that 1970 is an arbitrary cutoff. It notes the problems that face moving the cutoff earlier "due to the wide variety of local practices before computer timekeeping became prevalent." IANA's own description of the tz database suggests that users should regard historical dates and times, especially those that predate the POSIX epoch date, with a healthy measure of skepticism. For details, see Theory and pragmatics of the tz code and data.

Vertica uses the TZ environment variable (if set) on each node for the default current time zone. Otherwise, Vertica uses the operating system time zone.

The TZ variable can be set by the operating system during login (see /etc/profile, /etc/profile.d, or /etc/bashrc) or by the user in .profile, .bashrc, or .bash_profile. TZ must be set to the same value on each node when you start Vertica.

The following command returns the current time zone for your database:

=> SHOW TIMEZONE;

name | setting

----------+------------------

timezone | America/New_York

(1 row)

You can also set the time zone for a single session with SET TIME ZONE.

Conversion and storage of date/time data

There is no database default time zone. TIMESTAMPTZ (TIMESTAMP WITH TIMEZONE) data is converted from the current local time and stored as GMT/UTC (Greenwich Mean Time/Coordinated Universal Time).

When TIMESTAMPTZ data is used, data is converted back to the current local time zone, which might be different from the local time zone where the data was stored. This conversion takes into account daylight saving time (summer time), depending on the year and date to determine when daylight saving time begins and ends.

TIMESTAMP WITHOUT TIMEZONE data stores the timestamp as given, and retrieves it exactly as given. The current time zone is ignored. The same is true for TIME WITHOUT TIMEZONE. For TIME WITH TIMEZONE (TIMETZ), however, the current time zone setting is stored along with the given time, and that time zone is used on retrieval.

Note

Vertica recommends that you use TIMESTAMPTZ, not TIMETZ.

Querying date/time data

TIMESTAMPTZ uses the current time zone on both input and output, as in the following example:

=> CREATE TEMP TABLE s (tstz TIMESTAMPTZ);

=> SET TIMEZONE TO 'America/New_York';

=> INSERT INTO s VALUES ('2009-02-01 00:00:00');

=> INSERT INTO s VALUES ('2009-05-12 12:00:00');

=> SELECT tstz AS 'Local timezone', tstz AT TIMEZONE 'America/New_York' AS 'America/New_York',

tstz AT TIMEZONE 'GMT' AS 'GMT' FROM s;

Local timezone | America/New_York | GMT

------------------------+---------------------+---------------------

2009-02-01 00:00:00-05 | 2009-02-01 00:00:00 | 2009-02-01 05:00:00

2009-05-12 12:00:00-04 | 2009-05-12 12:00:00 | 2009-05-12 16:00:00

(2 rows)

The -05 in the Local time zone column shows that the data is displayed in EST, while -04 indicates EDT. The other two columns show the TIMESTAMP WITHOUT TIMEZONE at the specified time zone.

The next example shows what happens if the current time zone is changed to GMT:

=> SET TIMEZONE TO 'GMT';

=> SELECT tstz AS 'Local timezone', tstz AT TIMEZONE 'America/New_York' AS

'America/New_York', tstz AT TIMEZONE 'GMT' as 'GMT' FROM s;

Local timezone | America/New_York | GMT

------------------------+---------------------+---------------------

2009-02-01 05:00:00+00 | 2009-02-01 00:00:00 | 2009-02-01 05:00:00

2009-05-12 16:00:00+00 | 2009-05-12 12:00:00 | 2009-05-12 16:00:00

(2 rows)

The +00 in the Local time zone column indicates that TIMESTAMPTZ is displayed in GMT.

The approach of using TIMESTAMPTZ fields to record events captures the GMT of the event, as expressed in terms of the local time zone. Later, it allows for easy conversion to any other time zone, either by setting the local time zone or by specifying an explicit AT TIMEZONE clause.
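The store-as-UTC, display-in-session-zone behavior described above can be reproduced with Python's standard zoneinfo module, which reads the same tz database. This is a sketch of the conversion logic only, not of Vertica internals; the helper names store and display are hypothetical, and it assumes tz data is installed on the machine.

```python
from datetime import datetime, timezone
from zoneinfo import ZoneInfo


def store(local_text: str, session_zone: str) -> datetime:
    """Interpret the text in the session time zone and keep the instant as UTC."""
    naive = datetime.strptime(local_text, "%Y-%m-%d %H:%M:%S")
    return naive.replace(tzinfo=ZoneInfo(session_zone)).astimezone(timezone.utc)


def display(stored: datetime, session_zone: str) -> str:
    """Render a stored UTC instant in the session time zone."""
    return stored.astimezone(ZoneInfo(session_zone)).isoformat(sep=" ")


# Same two values as the INSERT statements, entered with the session in New York.
rows = [store(text, "America/New_York")
        for text in ("2009-02-01 00:00:00", "2009-05-12 12:00:00")]

for row in rows:
    print(display(row, "America/New_York"), "|", display(row, "GMT"))
```

The February row carries a -05:00 offset (EST) and the May row -04:00 (EDT), matching the -05 and -04 suffixes in the query output.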

The following example shows how TIMESTAMP WITHOUT TIMEZONE fields work in Vertica.

=> CREATE TEMP TABLE tnoz (ts TIMESTAMP);

=> INSERT INTO tnoz VALUES('2009-02-01 00:00:00');

=> INSERT INTO tnoz VALUES('2009-05-12 12:00:00');

=> SET TIMEZONE TO 'GMT';

=> SELECT ts AS 'No timezone', ts AT TIMEZONE 'America/New_York' AS

'America/New_York', ts AT TIMEZONE 'GMT' AS 'GMT' FROM tnoz;

No timezone | America/New_York | GMT

---------------------+------------------------+------------------------

2009-02-01 00:00:00 | 2009-02-01 05:00:00+00 | 2009-02-01 00:00:00+00

2009-05-12 12:00:00 | 2009-05-12 16:00:00+00 | 2009-05-12 12:00:00+00

(2 rows)

The +00 at the end of a timestamp indicates that the setting is TIMESTAMP WITH TIMEZONE in GMT (the current time zone). The America/New_York column shows what the GMT setting was when you recorded the time, assuming you read a normal clock in the America/New_York time zone. What this shows is that if it is midnight in the America/New_York time zone, then it is 5 am GMT.

Note

00:00:00 Sunday February 1, 2009 in America/New_York converts to 05:00:00 Sunday February 1, 2009 in GMT.

The GMT column displays the GMT time, assuming the input data was captured in GMT.

If you don't set the time zone to GMT, and you use another time zone, for example America/New_York, then the results display in America/New_York with a -05 and -04, showing the difference between that time zone and GMT.

=> SET TIMEZONE TO 'America/New_York';

=> SHOW TIMEZONE;

name | setting

----------+------------------

timezone | America/New_York

(1 row)

=> SELECT ts AS 'No timezone', ts AT TIMEZONE 'America/New_York' AS

'America/New_York', ts AT TIMEZONE 'GMT' AS 'GMT' FROM tnoz;

No timezone | America/New_York | GMT

---------------------+------------------------+------------------------

2009-02-01 00:00:00 | 2009-02-01 00:00:00-05 | 2009-01-31 19:00:00-05

2009-05-12 12:00:00 | 2009-05-12 12:00:00-04 | 2009-05-12 08:00:00-04

(2 rows)

In this case, the last column is interesting in that it returns the time in New York, given that the data was captured in GMT.
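The effect of AT TIMEZONE on a TIMESTAMP WITHOUT TIMEZONE value can be sketched in Python as a two-step conversion: treat the zone-less value as wall-clock time in the named zone, then render that instant in the session time zone. The function name at_time_zone is hypothetical, and the sketch assumes tz data is installed; it models the results shown above rather than Vertica's implementation.

```python
from datetime import datetime
from zoneinfo import ZoneInfo


def at_time_zone(naive_text: str, named_zone: str, session_zone: str) -> str:
    """Read a zone-less timestamp as wall-clock time in named_zone, then
    render the resulting instant in session_zone."""
    naive = datetime.strptime(naive_text, "%Y-%m-%d %H:%M:%S")
    instant = naive.replace(tzinfo=ZoneInfo(named_zone))
    return instant.astimezone(ZoneInfo(session_zone)).isoformat(sep=" ")


# Session time zone GMT, value read as New York time: midnight becomes 05:00 GMT.
print(at_time_zone("2009-02-01 00:00:00", "America/New_York", "GMT"))

# Session time zone New York, value read as GMT: midnight GMT is 19:00 the day before.
print(at_time_zone("2009-02-01 00:00:00", "GMT", "America/New_York"))
```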

1.17 - Change transaction isolation levels

By default, Vertica uses the READ COMMITTED isolation level for all sessions. You can change the default isolation level for the database or for a given session.

A transaction retains its isolation level until it completes, even if the session's isolation level changes during the transaction. Vertica internal processes (such as the Tuple Mover and refresh operations) and DDL operations always run at the SERIALIZABLE isolation level to ensure consistency.

Database isolation level

The configuration parameter TransactionIsolationLevel specifies the database isolation level, and is used as the default for all sessions. Use ALTER DATABASE to change the default isolation level. For example:

=> ALTER DATABASE DEFAULT SET TransactionIsolationLevel = 'SERIALIZABLE';

ALTER DATABASE

=> ALTER DATABASE DEFAULT SET TransactionIsolationLevel = 'READ COMMITTED';

ALTER DATABASE

Changes to the database isolation level only apply to future sessions. Existing sessions and their transactions continue to use their original isolation level.

Use SHOW CURRENT to view the database isolation level:

=> SHOW CURRENT TransactionIsolationLevel;

level | name | setting

----------+---------------------------+----------------

DATABASE | TransactionIsolationLevel | READ COMMITTED

(1 row)

Session isolation level

SET SESSION CHARACTERISTICS AS TRANSACTION changes the isolation level for a specific session. For example:

=> SET SESSION CHARACTERISTICS AS TRANSACTION ISOLATION LEVEL SERIALIZABLE;

SET

Use SHOW to view the current session's isolation level:

=> SHOW TRANSACTION_ISOLATION;
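The scoping rules in this section (a session takes the database default when it starts, and a transaction keeps the level it started with) can be modeled in a few lines of Python. The classes below are a hypothetical illustration of those rules, not a Vertica API.

```python
class Database:
    def __init__(self, isolation="READ COMMITTED"):
        self.isolation = isolation  # models TransactionIsolationLevel


class Transaction:
    def __init__(self, isolation):
        self.isolation = isolation  # fixed until the transaction completes


class Session:
    def __init__(self, database):
        # A session copies the database default once, when it starts.
        self.isolation = database.isolation

    def begin(self):
        # A transaction copies the session level once, when it begins.
        return Transaction(self.isolation)


db = Database()
old_session = Session(db)
db.isolation = "SERIALIZABLE"           # models ALTER DATABASE DEFAULT SET ...
new_session = Session(db)               # only future sessions see the change

txn = old_session.begin()
old_session.isolation = "SERIALIZABLE"  # models SET SESSION CHARACTERISTICS ...
later_txn = old_session.begin()         # only later transactions see the change

print(txn.isolation, later_txn.isolation, new_session.isolation)
```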

See also

Transactions

2 - Configuration parameter management

Vertica supports a wide variety of configuration parameters that affect many facets of database behavior. These parameters can be set with the appropriate ALTER statements at one or more levels, listed here in descending order of precedence:

-

User (ALTER USER)

-

Session (ALTER SESSION)

-

Node (ALTER NODE)

-

Database (ALTER DATABASE)
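The precedence list above amounts to a first-match lookup. The following Python sketch is a model only: the effective_value function and the nested dictionary are hypothetical, and the node-level value of 3 is an arbitrary example. The first level that has a value wins, and the default applies when no level sets the parameter.

```python
PRECEDENCE = ("user", "session", "node", "database")  # highest precedence first


def effective_value(parameter, settings, default):
    """Return (level, value) from the highest-precedence level that sets the parameter."""
    for level in PRECEDENCE:
        value = settings.get(level, {}).get(parameter)
        if value is not None:
            return level, value
    return "default", default


settings = {"database": {"ActivePartitionCount": 2}}
at_database = effective_value("ActivePartitionCount", settings, 1)

# A node-level setting overrides the database-level one.
settings["node"] = {"ActivePartitionCount": 3}
at_node = effective_value("ActivePartitionCount", settings, 1)

# Clearing the node-level setting exposes the database-level value again.
del settings["node"]["ActivePartitionCount"]
after_clear = effective_value("ActivePartitionCount", settings, 1)

print(at_database, at_node, after_clear)
```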

You can query system table CONFIGURATION_PARAMETERS to obtain the current settings for all user-accessible parameters. For example, the following query obtains settings for partitioning parameters: their current and default values, which levels they can be set at, and whether changes require a database restart to take effect:

=> SELECT parameter_name, current_value, default_value, allowed_levels, change_requires_restart

FROM configuration_parameters WHERE parameter_name ILIKE '%partitioncount%';

parameter_name | current_value | default_value | allowed_levels | change_requires_restart

----------------------+---------------+---------------+----------------+-------------------------

MaxPartitionCount | 1024 | 1024 | NODE, DATABASE | f

ActivePartitionCount | 1 | 1 | NODE, DATABASE | f

(2 rows)

For details about individual configuration parameters grouped by category, see Configuration parameters.

Setting and clearing configuration parameters

You change specific configuration parameters with the appropriate ALTER statements; the same statements also let you reset configuration parameters to their default values. For example, the following ALTER statements change ActivePartitionCount at the database level from 1 to 2, and DisableAutopartition at the session level from 0 to 1:

=> ALTER DATABASE DEFAULT SET ActivePartitionCount = 2;

ALTER DATABASE

=> ALTER SESSION SET DisableAutopartition = 1;

ALTER SESSION

=> SELECT parameter_name, current_value, default_value FROM configuration_parameters

WHERE parameter_name IN ('ActivePartitionCount', 'DisableAutopartition');

parameter_name | current_value | default_value

----------------------+---------------+---------------

ActivePartitionCount | 2 | 1

DisableAutopartition | 1 | 0

(2 rows)

You can later reset the same configuration parameters to their default values:

=> ALTER DATABASE DEFAULT CLEAR ActivePartitionCount;

ALTER DATABASE

=> ALTER SESSION CLEAR DisableAutopartition;

ALTER SESSION

=> SELECT parameter_name, current_value, default_value FROM configuration_parameters

WHERE parameter_name IN ('ActivePartitionCount', 'DisableAutopartition');

parameter_name | current_value | default_value

----------------------+---------------+---------------

DisableAutopartition | 0 | 0

ActivePartitionCount | 1 | 1

(2 rows)

Caution

Vertica is designed to operate with minimal configuration changes. Be careful to change configuration parameters according to documented guidelines.

2.1 - Viewing configuration parameter values

You can view active configuration parameter values in two ways:

SHOW statements

Use the following SHOW statements to view active configuration parameters:

-

SHOW CURRENT: Returns settings of active configuration parameter values. Vertica checks settings at all levels, in the following descending order of precedence:

-

session

-

node

-

database

If no values are set at any scope, SHOW CURRENT returns the parameter's default value.

-

-

SHOW DATABASE: Displays configuration parameter values set for the database.

-

SHOW USER: Displays configuration parameters set for the specified user, and for all users.

-

SHOW SESSION: Displays configuration parameter values set for the current session.

-

SHOW NODE: Displays configuration parameter values set for a node.

If a configuration parameter requires a restart to take effect, the values in a SHOW CURRENT statement might differ from values in other SHOW statements. To see which parameters require restart, query the CONFIGURATION_PARAMETERS system table.

System tables

You can query several system tables for configuration parameters:

-

SESSION_PARAMETERS returns session-scope parameters.

-

CONFIGURATION_PARAMETERS returns parameters for all scopes: database, node, and session.

-

USER_CONFIGURATION_PARAMETERS provides information about user-level configuration parameters that are in effect for database users.

3 - Designing a logical schema

Designing a logical schema for a Vertica database is the same as designing for any other SQL database. A logical schema consists of objects such as schemas, tables, views, and referential integrity constraints that are visible to SQL users. Vertica supports any relational schema design that you choose.

3.1 - Using multiple schemas

Using a single schema is effective if there is only one database user or if a few users cooperate in sharing the database. In many cases, however, it makes sense to use additional schemas to allow users and their applications to create and access tables in separate namespaces. For example, using additional schemas allows:

-

Many users to access the database without interfering with one another.

Individual schemas can be configured to grant specific users access to the schema and its tables while restricting others.

-

Third-party applications to create tables that have the same name in different schemas, preventing table collisions.

Unlike other RDBMS, a schema in a Vertica database is not a collection of objects bound to one user.

3.1.1 - Multiple schema examples

This section provides examples of when and how you might want to use multiple schemas to separate database users. These examples fall into two categories: using multiple private schemas and using a combination of private schemas (i.e. schemas limited to a single user) and shared schemas (i.e. schemas shared across multiple users).

Using multiple private schemas

Using multiple private schemas is an effective way of separating database users from one another when sensitive information is involved. Typically a user is granted access to only one schema and its contents, thus providing database security at the schema level. Database users can be running different applications, multiple copies of the same application, or even multiple instances of the same application. This enables you to consolidate applications on one database to reduce management overhead and use resources more effectively. The following examples highlight using multiple private schemas.

Using multiple schemas to separate users and their unique applications

In this example, both database users work for the same company. One user (HRUser) uses a Human Resource (HR) application with access to sensitive personal data, such as salaries, while another user (MedUser) accesses information regarding company healthcare costs through a healthcare management application. HRUser should not be able to access company healthcare cost information and MedUser should not be able to view personal employee data.

To grant these users access to data they need while restricting them from data they should not see, two schemas are created with appropriate user access, as follows:

-

HRSchema—A schema owned by HRUser that is accessed by the HR application.

-

HealthSchema—A schema owned by MedUser that is accessed by the healthcare management application.

Using multiple schemas to support multitenancy

This example is similar to the last example in that access to sensitive data is limited by separating users into different schemas. In this case, however, each user is using a virtual instance of the same application.

An example of this is a retail marketing analytics company that provides data and software as a service (SaaS) to large retailers to help them determine which promotional methods they use are most effective at driving customer sales.

In this example, each database user equates to a retailer, and each user only has access to its own schema. The retail marketing analytics company provides a virtual instance of the same application to each retail customer, and each instance points to the user’s specific schema in which to create and update tables. The tables in these schemas use the same names because they are created by instances of the same application, but they do not conflict because they are in separate schemas.

Examples of schemas in this database could be:

-

MartSchema—A schema owned by MartUser, a large department store chain.

-

PharmSchema—A schema owned by PharmUser, a large drug store chain.

Using multiple schemas to migrate to a newer version of an application

Using multiple schemas is an effective way of migrating to a new version of a software application. In this case, a new schema is created to support the new version of the software, and the old schema is kept as long as necessary to support the original version of the software. This is called a “rolling application upgrade.”

For example, a company might use an HR application to store employee data. The following schemas could be used for the original and updated versions of the software:

-

HRSchema—A schema owned by HRUser, the schema user for the original HR application.

-

V2HRSchema—A schema owned by V2HRUser, the schema user for the new version of the HR application.

Combining private and shared schemas

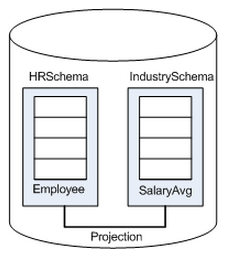

The previous examples illustrate cases in which all schemas in the database are private and no information is shared between users. However, users might want to share common data. In the retail case, for example, MartUser and PharmUser might want to compare their per store sales of a particular product against the industry per store sales average. Since this information is an industry average and is not specific to any retail chain, it can be placed in a schema on which both users are granted USAGE privileges.

Examples of schemas in this database might be:

-

MartSchema—A schema owned by MartUser, a large department store chain.

-

PharmSchema—A schema owned by PharmUser, a large drug store chain.

-

IndustrySchema—A schema owned by DBUser (from the retail marketing analytics company) on which both MartUser and PharmUser have USAGE privileges. It is unlikely that retailers would be given any privileges beyond USAGE on the schema and SELECT on one or more of its tables.

3.1.2 - Creating schemas

You can create as many schemas as necessary for your database. For example, you could create a schema for each database user. However, schemas and users are not synonymous as they are in Oracle.

By default, only a superuser can create a schema or give a user the right to create a schema. (See GRANT (database) in the SQL Reference Manual.)

To create a schema use the CREATE SCHEMA statement, as described in the SQL Reference Manual.

3.1.3 - Specifying objects in multiple schemas

Once you create two or more schemas, each SQL statement or function must identify the schema associated with the object you are referencing. You can specify an object within multiple schemas by:

-

Qualifying the object name by using the schema name and object name separated by a dot. For example, to specify MyTable, located in Schema1, qualify the name as Schema1.MyTable.

-

Using a search path that includes the desired schemas when a referenced object is unqualified. By setting search paths, you let Vertica automatically search the specified schemas to find the object.

3.1.4 - Setting search paths

Each user session has a search path of schemas. Vertica uses this search path to find tables and user-defined functions (UDFs) that are unqualified by their schema name. A session search path is initially set from the user's profile. You can change the session's search path at any time by calling SET SEARCH_PATH. This search path remains in effect until the next SET SEARCH_PATH statement, or the session ends.

Viewing the current search path

SHOW SEARCH_PATH returns the session's current search path. For example:

=> SHOW SEARCH_PATH;

name | setting

-------------+---------------------------------------------------

search_path | "$user", public, v_catalog, v_monitor, v_internal

Schemas are listed in descending order of precedence. The first schema has the highest precedence in the search order. If this schema exists, it is also defined as the current schema, which is used for tables that are created with unqualified names. You can identify the current schema by calling the function CURRENT_SCHEMA:

=> SELECT CURRENT_SCHEMA;

current_schema

----------------

public

(1 row)

Setting the user search path

A session search path is initially set from the user's profile. If the search path in a user profile is not set by CREATE USER or ALTER USER, it is set to the database default:

=> CREATE USER agent007;

CREATE USER

=> \c - agent007

You are now connected as user "agent007".

=> SHOW SEARCH_PATH;

name | setting

-------------+---------------------------------------------------

search_path | "$user", public, v_catalog, v_monitor, v_internal

$user resolves to the session user name (in this case, agent007) and has the highest precedence. If a schema named agent007 exists, Vertica begins searches for unqualified tables in that schema. Also, calls to CURRENT_SCHEMA return this schema. Otherwise, Vertica uses public as the current schema and begins searches in it.

Use ALTER USER to modify an existing user's search path. These changes overwrite all non-system schemas in the search path, including $USER. System schemas are untouched. Changes to a user's search path take effect only when the user starts a new session; current sessions are unaffected.

Important

After modifying the user's search path, verify that the user has access privileges to all schemas that are on the updated search path.

For example, the following statements modify agent007's search path, and grant access privileges to schemas and tables that are on the new search path:

=> ALTER USER agent007 SEARCH_PATH store, public;

ALTER USER

=> GRANT ALL ON SCHEMA store, public TO agent007;

GRANT PRIVILEGE

=> GRANT SELECT ON ALL TABLES IN SCHEMA store, public TO agent007;

GRANT PRIVILEGE

=> \c - agent007

You are now connected as user "agent007".

=> SHOW SEARCH_PATH;

name | setting

-------------+-------------------------------------------------

search_path | store, public, v_catalog, v_monitor, v_internal

(1 row)

To verify a user's search path, query the system table USERS:

=> SELECT search_path FROM USERS WHERE user_name='agent007';

search_path

-------------------------------------------------

store, public, v_catalog, v_monitor, v_internal

(1 row)

To revert a user's search path to the database default settings, call ALTER USER and set the search path to DEFAULT. For example:

=> ALTER USER agent007 SEARCH_PATH DEFAULT;

ALTER USER

=> SELECT search_path FROM USERS WHERE user_name='agent007';

search_path

---------------------------------------------------

"$user", public, v_catalog, v_monitor, v_internal

(1 row)

Ignored search path schemas

Vertica only searches among existing schemas to which the current user has access privileges. If a schema in the search path does not exist or the user lacks access privileges to it, Vertica silently excludes it from the search. For example, if agent007 lacks SELECT privileges to schema public, Vertica silently skips this schema. Vertica returns with an error only if it cannot find the table anywhere on the search path.
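The lookup rules described in this topic can be sketched as follows. This Python model is illustrative only: resolve_table, the catalog dictionary, and the table names are hypothetical. It substitutes the session user for $user, silently skips schemas that do not exist or that the user cannot access, and raises an error only when the table is found nowhere on the path.

```python
def resolve_table(table, search_path, session_user, catalog, accessible):
    """Return the schema-qualified name of the first match on the search path.

    catalog maps each existing schema to its set of table names;
    accessible is the set of schemas the session user has privileges on.
    """
    for entry in search_path:
        schema = session_user if entry == "$user" else entry
        if schema not in catalog or schema not in accessible:
            continue  # nonexistent or inaccessible schemas are skipped silently
        if table in catalog[schema]:
            return f"{schema}.{table}"
    raise LookupError(f"table {table!r} not found on the search path")


catalog = {"public": {"orders"}, "store": {"orders", "store_sales"}}
default_path = ["$user", "public", "v_catalog", "v_monitor", "v_internal"]

# No schema named agent007 exists, so the search falls through to public.
found = resolve_table("orders", default_path, "agent007", catalog, {"public", "store"})

# Without privileges on public, that schema is skipped and store is used.
skipped = resolve_table("orders", ["public", "store"], "agent007", catalog, {"store"})

print(found, skipped)
```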

Setting session search path

Vertica initially sets a session's search path from the user's profile. You can change the current session's search path with SET SEARCH_PATH. You can use SET SEARCH_PATH in two ways:

-

Explicitly set the session search path to one or more schemas. For example:

=> \c - agent007

You are now connected as user "agent007".

dbadmin=> SHOW SEARCH_PATH;

name | setting

-------------+---------------------------------------------------

search_path | "$user", public, v_catalog, v_monitor, v_internal

(1 row)

=> SET SEARCH_PATH TO store, public;

SET

=> SHOW SEARCH_PATH;

name | setting

-------------+-------------------------------------------------

search_path | store, public, v_catalog, v_monitor, v_internal

(1 row)

-

Set the session search path to the database default:

=> SET SEARCH_PATH TO DEFAULT;

SET

=> SHOW SEARCH_PATH;

name | setting

-------------+---------------------------------------------------

search_path | "$user", public, v_catalog, v_monitor, v_internal

(1 row)

SET SEARCH_PATH overwrites all non-system schemas in the search path, including $USER. System schemas are untouched.
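The overwrite rule can be expressed as a small function. This is a hypothetical Python model of the behavior shown in the examples above, with the system schema names taken from the SHOW SEARCH_PATH output: every non-system entry is replaced, and the system schemas already on the path are carried over unchanged.

```python
SYSTEM_SCHEMAS = ("v_catalog", "v_monitor", "v_internal")
DATABASE_DEFAULT = ['"$user"', "public"]


def set_search_path(current_path, new_schemas=None):
    """Model SET SEARCH_PATH; new_schemas=None models SET SEARCH_PATH TO DEFAULT."""
    replacement = DATABASE_DEFAULT if new_schemas is None else list(new_schemas)
    untouched = [schema for schema in current_path if schema in SYSTEM_SCHEMAS]
    return replacement + untouched


path = ['"$user"', "public", "v_catalog", "v_monitor", "v_internal"]
path = set_search_path(path, ["store", "public"])
print(", ".join(path))

restored = set_search_path(path)
print(", ".join(restored))
```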

3.1.5 - Creating objects that span multiple schemas

Vertica supports views that reference tables across multiple schemas. For example, a user might need to compare employee salaries to industry averages. In this case, the application queries two schemas:

-

Shared schema IndustrySchema for salary averages

-

Private schema HRSchema for company-specific salary information

Best Practice: When creating objects that span schemas, use qualified table names. This naming convention avoids confusion if the query path or table structure within the schemas changes at a later date.
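As a runnable stand-in for this pattern, the following Python sketch uses SQLite rather than Vertica. Attached in-memory databases play the role of the two schemas, and a temporary view spans them. The table and column names are hypothetical, and Vertica's CREATE SCHEMA and CREATE VIEW syntax differs from what is shown here. The point is only that every table reference in the view is schema-qualified.

```python
import sqlite3

conn = sqlite3.connect(":memory:")

# SQLite has no CREATE SCHEMA; attached databases stand in for the two schemas.
conn.execute("ATTACH DATABASE ':memory:' AS HRSchema")
conn.execute("ATTACH DATABASE ':memory:' AS IndustrySchema")

conn.execute("CREATE TABLE HRSchema.salaries (title TEXT, salary INTEGER)")
conn.execute("CREATE TABLE IndustrySchema.salary_averages (title TEXT, avg_salary INTEGER)")
conn.execute("INSERT INTO HRSchema.salaries VALUES ('analyst', 70000)")
conn.execute("INSERT INTO IndustrySchema.salary_averages VALUES ('analyst', 65000)")

# Qualified names keep the view unambiguous regardless of any search path.
conn.execute("""
    CREATE TEMP VIEW salary_comparison AS
    SELECT h.title, h.salary, i.avg_salary
    FROM HRSchema.salaries AS h
    JOIN IndustrySchema.salary_averages AS i ON i.title = h.title
""")

rows = conn.execute("SELECT * FROM salary_comparison").fetchall()
print(rows)
```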

3.2 - Tables in schemas

In Vertica you can create persistent and temporary tables, through CREATE TABLE and CREATE TEMPORARY TABLE, respectively.

For detailed information on both types, see Creating Tables and Creating temporary tables.

Persistent tables

CREATE TABLE creates a table in the Vertica logical schema. For example:

CREATE TABLE vendor_dimension (

vendor_key INTEGER NOT NULL PRIMARY KEY,

vendor_name VARCHAR(64),

vendor_address VARCHAR(64),

vendor_city VARCHAR(64),

vendor_state CHAR(2),

vendor_region VARCHAR(32),

deal_size INTEGER,

last_deal_update DATE

);

For detailed information, see Creating Tables.

Temporary tables

CREATE TEMPORARY TABLE creates a table whose data persists only during the current session. Temporary table data is never visible to other sessions.

Temporary tables can be used to divide complex query processing into multiple steps. Typically, a reporting tool holds intermediate results while reports are generated—for example, the tool first gets a result set, then queries the result set, and so on.

CREATE TEMPORARY TABLE can create tables at two scopes, global and local, through the keywords GLOBAL and LOCAL, respectively:

-

GLOBAL (default): The table definition is visible to all sessions. However, table data is session-scoped.

-

LOCAL: The table definition is visible only to the session in which it is created. When the session ends, Vertica automatically drops the table.

For detailed information, see Creating temporary tables.

4 - Creating a database design

A design is a physical storage plan that optimizes query performance. Data in Vertica is physically stored in projections. When you initially load data into a table using INSERT, COPY (or COPY LOCAL), Vertica creates a default superprojection for the table. This superprojection ensures that all of the data is available for queries. However, these superprojections might not optimize database performance, resulting in slow query performance and low data compression.

To improve performance, create a design for your Vertica database that optimizes query performance and data compression. You can create a design in several ways:

-

Use Database Designer, a tool that recommends a design for optimal performance.

-

Use Database Designer to create an initial design and then manually modify it.

Database Designer can help you minimize how much time you spend on manual database tuning. You can also use Database Designer to redesign the database incrementally as requirements such as workloads change over time.

Database Designer runs as a background process. This is useful if you have a large design that you want to run overnight. An active SSH session is not required, so design and deploy operations continue to run uninterrupted if the session ends.

Tip

Vertica recommends that you first globally optimize your database using the Comprehensive setting in Database Designer. If the performance of the comprehensive design is not adequate, you can design custom projections, either with an incremental design or manually, as described in Creating custom designs.

4.1 - About Database Designer

Vertica Database Designer uses sophisticated strategies to create a design that provides excellent performance for ad-hoc queries and specific queries while using disk space efficiently.

During the design process, Database Designer analyzes the logical schema definition, sample data, and sample queries, and creates a physical schema (projections) in the form of a SQL script that you deploy automatically or manually. This script creates a minimal set of superprojections to ensure K-safety.

In most cases, the projections that Database Designer creates provide excellent query performance within physical constraints while using disk space efficiently.

General design options

When you run Database Designer, several general options are available:

-

Create a comprehensive or incremental design.

-

Optimize for query execution, load, or a balance of both.

-

Require K-safety.

-

Recommend unsegmented projections when feasible.

-

Analyze statistics before creating the design.

Design input

Database Designer bases its design on the following information that you provide:

-

Design queries that you typically run during normal database operations.

-

Design tables that contain sample data.

Output

Database Designer yields the following output:

-

Design script that creates the projections for the design in a way that meets the optimization objectives and distributes data uniformly across the cluster.

-

Deployment script that creates and refreshes the projections for your design. For comprehensive designs, the deployment script contains commands that remove non-optimized projections. The deployment script includes the full design script.

-

Backup script that contains SQL statements to deploy the design that existed on the system before deployment. This file is useful in case you need to revert to the pre-deployment design.

Design restrictions

Database Designer-generated designs:

-

Exclude live aggregate or Top-K projections. You must create these manually. See CREATE PROJECTION.

-

Do not sort, segment, or partition projections on LONG VARBINARY and LONG VARCHAR columns.

-

Do not support operations on complex types.

Post-design options

While running Database Designer, you can choose to deploy your design automatically after the deployment script is created, or to deploy it manually, after you have reviewed and tested the design. Vertica recommends that you test the design on a non-production server before deploying the design to your production server.

4.2 - How Database Designer creates a design

Design recommendations

Database Designer-generated designs can include the following recommendations:

-

Sort buddy projections in the same order, which can significantly improve load, recovery, and site node performance. All buddy projections have the same base name so that they can be identified as a group.

Note

If you manually create projections, Database Designer recommends a buddy with the same sort order, if one does not already exist. By default, Database Designer recommends both super and non-super segmented projections with a buddy of the same sort order and segmentation.

-

Accepts unlimited queries for a comprehensive design.

-

Identifies similar design queries and assigns them a signature.

For queries with the same signature, Database Designer weights the queries, depending on how many queries have that signature. It then considers the weighted query when creating a design.

-

Recommends and creates projections in a way that minimizes data skew by distributing data uniformly across the cluster.

-

Produces higher quality designs by considering UPDATE, DELETE, and SELECT statements.
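The idea of grouping similar design queries under one signature and weighting each group by its size can be sketched as below. The source does not say how Database Designer computes signatures, so the normalization here (replace literals with a placeholder, collapse whitespace, lowercase) is an assumption chosen for illustration, and the function names and sample queries are hypothetical.

```python
import re
from collections import Counter


def signature(query: str) -> str:
    """Toy signature: queries that differ only in literal values match."""
    text = re.sub(r"'[^']*'", "?", query)   # string literals
    text = re.sub(r"\b\d+\b", "?", text)    # numeric literals
    return re.sub(r"\s+", " ", text).strip().lower()


def weight_queries(queries):
    """Map each signature to the number of design queries that share it."""
    return Counter(signature(query) for query in queries)


queries = [
    "SELECT * FROM sales WHERE store_key = 10",
    "SELECT * FROM sales WHERE store_key = 42",
    "SELECT name FROM vendor WHERE state = 'MA'",
]
weights = weight_queries(queries)
print(weights)
```

In this toy model, the two sales queries collapse to one signature with weight 2, so that query shape would count twice as much as the vendor query.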

4.3 - Database Designer access requirements

By default, only users with the DBADMIN role can run Database Designer. Non-DBADMIN users can run Database Designer only if they are granted the necessary privileges and DBDUSER role, as described below. You can also enable users to run Database Designer on the Management Console (see Enabling Users to run Database Designer on Management Console).

-

Add a temporary folder to all cluster nodes with CREATE LOCATION:

=> CREATE LOCATION '/tmp/dbd' ALL NODES;

-

Grant the desired user CREATE privileges to create schemas on the current (DEFAULT) database, with GRANT DATABASE:

=> GRANT CREATE ON DATABASE DEFAULT TO dbd-user;

-

Grant the DBDUSER role to dbd-user with GRANT ROLE:

=> GRANT DBDUSER TO dbd-user;

-

On all nodes in the cluster, grant dbd-user access to the temporary folder with GRANT LOCATION:

=> GRANT ALL ON LOCATION '/tmp/dbd' TO dbd-user;

-

Grant dbd-user privileges on one or more database schemas and their tables, with GRANT SCHEMA and GRANT TABLE, respectively:

=> GRANT ALL ON SCHEMA this-schema[,...] TO dbd-user;

=> GRANT ALL ON ALL TABLES IN SCHEMA this-schema[,...] TO dbd-user;

-

Enable the DBDUSER role on dbd-user in one of the following ways:

-

As dbd-user, enable the DBDUSER role with SET ROLE:

=> SET ROLE DBDUSER;

-

As DBADMIN, automatically enable the DBDUSER role for dbd-user on each login, with ALTER USER:

=> ALTER USER dbd-user DEFAULT ROLE DBDUSER;
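To confirm that the grants in the steps above took effect, one option is to query the GRANTS system table. This is a sketch that assumes a hypothetical user named `dbd_user`; substitute the actual user name. Note that a user name containing a hyphen must be double-quoted in SQL statements, although it is matched here as an ordinary string.

```sql
-- Sketch only: 'dbd_user' is a hypothetical user name.
-- Lists the privileges and roles granted to the user.
SELECT grantee,
       privileges_description,
       object_type,
       object_schema,
       object_name
FROM v_catalog.grants
WHERE grantee = 'dbd_user'
ORDER BY object_type, object_name;
```

The result should include rows for the database, the storage location, the DBDUSER role, and each schema and table that you granted.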

Important

When you grant the DBDUSER role, be sure to associate a resource pool with that user to manage resources while Database Designer runs.

Multiple users can run Database Designer concurrently without interfering with each other or exhausting cluster resources. When a user runs Database Designer, either with Management Console or programmatically, execution is generally contained by the user's resource pool, but might spill over into system resource pools for less-intensive tasks.
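The following sketch shows one way to associate a resource pool with a user who has the DBDUSER role. The pool name `dbd_pool`, the memory limits, the concurrency value, and the user name `dbd_user` are all hypothetical; choose values that suit your cluster.

```sql
-- Sketch only: pool name, sizes, and user name are hypothetical.
CREATE RESOURCE POOL dbd_pool
    MEMORYSIZE '1G'
    MAXMEMORYSIZE '4G'
    PLANNEDCONCURRENCY 2;

-- The user needs USAGE on the pool before it can be assigned.
GRANT USAGE ON RESOURCE POOL dbd_pool TO dbd_user;

-- Make the pool the user's default resource pool.
ALTER USER dbd_user RESOURCE POOL dbd_pool;
```

Capping the pool with MAXMEMORYSIZE keeps a long Database Designer run from borrowing unlimited memory from the general pool.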

Enabling users to run Database Designer on Management Console

Users who are already granted the DBDUSER role and required privileges, as described above, can also be enabled to run Database Designer on Management Console:

-

Log in as a superuser to Management Console.

-

Click MC Settings.

-

Click User Management.

-

Specify an MC user:

-

To create an MC user, click Add.

-

To use an existing MC user, select the user and click Edit.

-

Next to the DB access level window, click Add.

-

In the Add Permissions window:

-

From the Choose a database drop-down list, select the database on which to create a design.

-

In the Database username field, enter the dbd-user user name that you created earlier.

-

In the Database password field, enter the database password.

-

In the Restrict access drop-down list, select the level of MC user for this user.

-

Click OK to save your changes.

-

Log out of the MC Super user account.

The MC user is now mapped to dbd-user. Log in as the MC user and use Database Designer to create an optimized design for your database.

DBDUSER capabilities and limitations

The following constraints apply to users with the DBDUSER role:

-

Designs must set K-safety to be equal to system K-safety. If a design violates K-safety by lacking enough buddy projections for tables, the design does not complete.

-

You cannot explicitly advance the ancient history mark (AHM)—for example, call MAKE_AHM_NOW—until after deploying the design.

When you create a design, you automatically have privileges to manipulate that design. Other tasks might require additional privileges:

| Task | Required privileges |
|---|---|
| Submit design tables | |
| Submit a single design query | |
| Submit a file of design queries | |
| Submit design queries from results of a user query | |
| Create design and deployment scripts | |

4.4 - Logging projection data for Database Designer

When you run Database Designer, the Optimizer proposes a set of ideal projections based on the options that you specify. When you deploy the design, Database Designer creates the design based on these projections. However, space or budget constraints may prevent Database Designer from creating all the proposed projections. In addition, Database Designer may not be able to implement the projections using ideal criteria.

To get information about the projections, first enable the Database Designer logging capability. When enabled, Database Designer stores information about the proposed projections in two Data Collector tables. After Database Designer deploys the design, these logs contain information about which proposed projections were actually created. After deployment, the logs contain information about:

-

Projections that the Optimizer proposed

-

Projections that Database Designer actually created when the design was deployed

-

Projections that Database Designer created, but not with the ideal criteria that the Optimizer identified

-

The DDL used to create all the projections

-

Column optimizations

If you do not deploy the design immediately, review the log to determine if you want to make any changes. If the design has been deployed, you can still manually create some of the projections that Database Designer did not create.

To enable the Database Designer logging capability, see Enabling logging for Database Designer.

To view the logged information, see Viewing Database Designer logs.

4.4.1 - Enabling logging for Database Designer

By default, Database Designer does not log information about the projections that the Optimizer proposes and Database Designer deploys.

To enable Database Designer logging, enter the following command:

=> ALTER DATABASE DEFAULT SET DBDLogInternalDesignProcess = 1;

To disable Database Designer logging, enter the following command:

=> ALTER DATABASE DEFAULT SET DBDLogInternalDesignProcess = 0;
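To check whether logging is currently enabled, you can look up the parameter in the CONFIGURATION_PARAMETERS system table. This is a minimal sketch; ILIKE is used only so that the match does not depend on how the parameter name is capitalized in the table.

```sql
-- Shows the current and default values of the logging parameter.
SELECT parameter_name,
       current_value,
       default_value
FROM v_monitor.configuration_parameters
WHERE parameter_name ILIKE 'DBDLogInternalDesignProcess';
```

A current value of 1 indicates that logging is enabled, and 0 indicates that it is disabled.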

4.4.2 - Viewing Database Designer logs

You can find data about the projections that Database Designer considered and deployed in two Data Collector tables:

-

DC_DESIGN_PROJECTION_CANDIDATES

-

DC_DESIGN_QUERY_PROJECTION_CANDIDATES

DC_DESIGN_PROJECTION_CANDIDATES

The DC_DESIGN_PROJECTION_CANDIDATES table contains information about all the projections that the Optimizer proposed. This table also includes the DDL that creates them. The is_a_winner field indicates if that projection was part of the actual deployed design. To view the DC_DESIGN_PROJECTION_CANDIDATES table, enter:

=> SELECT * FROM DC_DESIGN_PROJECTION_CANDIDATES;
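To narrow the output to projections that the Optimizer proposed but Database Designer did not create, you can filter on is_a_winner. This sketch assumes that is_a_winner is a Boolean column, as the t and f values in the sample output suggest; the other column names are taken from that same output.

```sql
-- Proposed projections that were not part of the deployed design,
-- with the DDL needed to create them manually.
SELECT design_id,
       iteration_number,
       projection_name,
       projection_statement
FROM dc_design_projection_candidates
WHERE NOT is_a_winner
ORDER BY design_id, iteration_number;
```

The projection_statement column holds the CREATE PROJECTION statement for each candidate, which you can reuse if you decide to add a projection by hand.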

DC_DESIGN_QUERY_PROJECTION_CANDIDATES

The DC_DESIGN_QUERY_PROJECTION_CANDIDATES table lists plan features for all design queries.

Possible features are:

-

FULLY DISTRIBUTED JOIN

-

MERGE JOIN

-

GROUPBY PIPE

-

FULLY DISTRIBUTED GROUPBY

-

RLE PREDICATE

-

VALUE INDEX PREDICATE

-

LATE MATERIALIZATION

For all design queries, the DC_DESIGN_QUERY_PROJECTION_CANDIDATES table includes the following plan feature information:

-

Optimizer path cost.

-

Database Designer benefits.

-

Ideal plan feature and its description, which identifies how the referenced projection should be optimized.

-

Actual plan feature and its description, if the design was deployed. This information identifies how the referenced projection was actually optimized.

Because most projections have multiple optimizations, each projection usually has multiple rows.

To view the DC_DESIGN_QUERY_PROJECTION_CANDIDATES table, enter:

=> SELECT * FROM DC_DESIGN_QUERY_PROJECTION_CANDIDATES;

To see example data from these tables, see Database Designer logs: example data.

4.4.3 - Database Designer logs: example data

In the following example, Database Designer created the logs after creating a comprehensive design for the VMart sample database. The output shows two records from the DC_DESIGN_PROJECTION_CANDIDATES table.

The first record contains information about the customer_dimension_dbd_1_sort_$customer_gender$__$annual_income$ projection. The record includes the CREATE PROJECTION statement that Database Designer used to create the projection. The is_a_winner column is t, indicating that Database Designer created this projection when it deployed the design.

The second record contains information about the product_dimension_dbd_2_sort_$product_version$__$product_key$ projection. For this projection, the is_a_winner column is f. The Optimizer recommended that Database Designer create this projection as part of the design. However, Database Designer did not create the projection when it deployed the design. The log includes the DDL for the CREATE PROJECTION statement. If you want to add the projection manually, you can use that DDL. For more information, see Creating a design manually.

=> SELECT * FROM dc_design_projection_candidates;

-[ RECORD 1 ]--------+---------------------------------------------------------------

time | 2014-04-11 06:30:17.918764-07

node_name | v_vmart_node0001

session_id | localhost.localdoma-931:0x1b7

user_id | 45035996273704962

user_name | dbadmin

design_id | 45035996273705182

design_table_id | 45035996273720620

projection_id | 45035996273726626

iteration_number | 1

projection_name | customer_dimension_dbd_1_sort_$customer_gender$__$annual_income$

projection_statement | CREATE PROJECTION v_dbd_sarahtest_sarahtest."customer_dimension_dbd_1_

sort_$customer_gender$__$annual_income$"

(

customer_key ENCODING AUTO,

customer_type ENCODING AUTO,

customer_name ENCODING AUTO,

customer_gender ENCODING RLE,

title ENCODING AUTO,

household_id ENCODING AUTO,

customer_address ENCODING AUTO,

customer_city ENCODING AUTO,

customer_state ENCODING AUTO,

customer_region ENCODING AUTO,

marital_status ENCODING AUTO,

customer_age ENCODING AUTO,

number_of_children ENCODING AUTO,

annual_income ENCODING AUTO,

occupation ENCODING AUTO,

largest_bill_amount ENCODING AUTO,

store_membership_card ENCODING AUTO,

customer_since ENCODING AUTO,

deal_stage ENCODING AUTO,

deal_size ENCODING AUTO,

last_deal_update ENCODING AUTO

)

AS

SELECT customer_key,

customer_type,

customer_name,

customer_gender,

title,

household_id,

customer_address,

customer_city,

customer_state,

customer_region,

marital_status,

customer_age,

number_of_children,

annual_income,

occupation,

largest_bill_amount,

store_membership_card,

customer_since,

deal_stage,

deal_size,

last_deal_update

FROM public.customer_dimension

ORDER BY customer_gender,

annual_income

UNSEGMENTED ALL NODES;

is_a_winner | t

-[ RECORD 2 ]--------+-------------------------------------------------------------

time | 2014-04-11 06:30:17.961324-07