This is the multi-page printable view of this section.

Click here to print.

Return to the regular view of this page.

Data types

The following table summarizes the internal data types that Vertica supports.

The following table summarizes the internal data types that Vertica supports. It also shows the default placement of null values in projections. The Size column lists uncompressed bytes.

|

Data Type |

Size / bytes |

Description |

NULL Sorting |

|

Binary |

|

BINARY |

1 to 65,000 |

Fixed-length binary string |

NULLS LAST |

|

VARBINARY (synonyms: BYTEA, RAW) |

1 to 65,000 |

Variable-length binary string |

NULLS LAST |

|

LONG VARBINARY |

1 to 32,000,000 |

Long variable-length binary string |

NULLS LAST |

|

Boolean |

|

BOOLEAN |

1 |

True or False or NULL |

NULLS LAST |

|

Character / Long |

|

CHAR |

1 to 65,000 |

Fixed-length character string |

NULLS LAST |

|

VARCHAR |

1 to 65,000 |

Variable-length character string |

NULLS LAST |

|

LONG VARCHAR |

1 to 32,000,000 |

Long variable-length character string |

NULLS LAST |

|

Date/Time |

|

DATE |

8 |

A month, day, and year |

NULLS FIRST |

|

TIME |

8 |

A time of day without timezone |

NULLS FIRST |

|

TIME WITH TIMEZONE |

8 |

A time of day with timezone |

NULLS FIRST |

|

TIMESTAMP (synonyms: DATETIME, SMALLDATETIME) |

8 |

A date and time without timezone |

NULLS FIRST |

|

TIMESTAMP WITH TIMEZONE |

8 |

A date and time with timezone |

NULLS FIRST |

|

INTERVAL |

8 |

The difference between two points in time |

NULLS FIRST |

|

INTERVAL DAY TO SECOND |

8 |

An interval measured in days and seconds |

NULLS FIRST |

|

INTERVAL YEAR TO MONTH |

8 |

An interval measured in years and months |

NULLS FIRST |

|

Approximate Numeric |

|

DOUBLE PRECISION |

8 |

Signed 64-bit IEEE floating point number, requiring 8 bytes of storage |

NULLS LAST |

|

FLOAT |

8 |

Signed 64-bit IEEE floating point number, requiring 8 bytes of storage |

NULLS LAST |

|

FLOAT(n) |

8 |

Signed 64-bit IEEE floating point number, requiring 8 bytes of storage |

NULLS LAST |

|

FLOAT8 |

8 |

Signed 64-bit IEEE floating point number, requiring 8 bytes of storage |

NULLS LAST |

|

REAL |

8 |

Signed 64-bit IEEE floating point number, requiring 8 bytes of storage |

NULLS LAST |

|

Exact Numeric |

|

INTEGER |

8 |

Signed 64-bit integer, requiring 8 bytes of storage |

NULLS FIRST |

|

INT |

8 |

Signed 64-bit integer, requiring 8 bytes of storage |

NULLS FIRST |

|

BIGINT |

8 |

Signed 64-bit integer, requiring 8 bytes of storage |

NULLS FIRST |

|

INT8 |

8 |

Signed 64-bit integer, requiring 8 bytes of storage |

NULLS FIRST |

|

SMALLINT |

8 |

Signed 64-bit integer, requiring 8 bytes of storage |

NULLS FIRST |

|

TINYINT |

8 |

Signed 64-bit integer, requiring 8 bytes of storage |

NULLS FIRST |

|

DECIMAL |

8+ |

8 bytes for the first 18 digits of precision, plus 8 bytes for each additional 19 digits |

NULLS FIRST |

|

NUMERIC |

8+ |

8 bytes for the first 18 digits of precision, plus 8 bytes for each additional 19 digits |

NULLS FIRST |

|

NUMBER |

8+ |

8 bytes for the first 18 digits of precision, plus 8 bytes for each additional 19 digits |

NULLS FIRST |

|

MONEY |

8+ |

8 bytes for the first 18 digits of precision, plus 8 bytes for each additional 19 digits |

NULLS FIRST |

|

Spatial |

|

GEOMETRY |

1 to 10,000,000 |

Coordinates expressed as (x,y) pairs, defined in the Cartesian plane. |

NULLS LAST |

|

GEOGRAPHY |

1 to 10,000,000 |

Coordinates expressed in longitude/latitude angular values, measured in degrees |

NULLS LAST |

|

UUID |

|

UUID |

16 |

Stores universally unique identifiers (UUIDs). |

NULLS FIRST |

|

Complex |

|

ARRAY |

1 to 32,000,000 |

Collection of values of a primitive or complex type. |

Native array: same as the element type

Non-native array: cannot be used to order projections

|

|

ROW |

1 to 32,000,000 |

Structure of property-value pairs. |

Cannot be used to order projections |

|

SET |

1 to 32,000,000 |

Collection of unique values of a primitive type. |

Same as the primitive type |

1 - Binary data types (BINARY and VARBINARY)

Store raw-byte data, such as IP addresses, up to bytes.

Store raw-byte data, such as IP addresses, up to 65000 bytes. The BINARY and BINARY VARYING (VARBINARY) data types are collectively referred to as binary string types and the values of binary string types are referred to as binary strings. A binary string is a sequence of octets or bytes.

BYTEA and RAW are synonyms for VARBINARY.

Syntax

BINARY ( length )

{ VARBINARY | BINARY VARYING | BYTEA | RAW } ( max-length )

Arguments

length, max-length- The length of the string or column width, in bytes (octets).

BINARY and VARBINARY data types

BINARY and VARBINARY data types have the following attributes:

-

BINARY: A fixed-width string of length bytes, where the number of bytes is declared as an optional specifier to the type. If length is omitted, the default is 1. Where necessary, values are right-extended to the full width of the column with the zero byte. For example:

=> SELECT TO_HEX('ab'::BINARY(4));

to_hex

----------

61620000

-

VARBINARY: A variable-width string up to a length of max-length bytes, where the maximum number of bytes is declared as an optional specifier to the type. The default is the default attribute size, which is 80, and the maximum length is 65000 bytes. VARBINARY values are not extended to the full width of the column. For example:

=> SELECT TO_HEX('ab'::VARBINARY(4));

to_hex

--------

6162

You can use several formats when working with binary values. The hexadecimal format is generally the most straightforward and is emphasized in Vertica documentation.

Binary values can also be represented in octal format by prefixing the value with a backslash '\'.

Note

If you use vsql, you must use the escape character (\) when you insert another backslash on input; for example, input '\141' as '\\141'.

You can also input values represented by printable characters. For example, the hexadecimal value '0x61' can also be represented by the symbol a.

See Data load.

On input, strings are translated from:

Both functions take a VARCHAR argument and return a VARBINARY value.

Like the input format, the output format is a hybrid of octal codes and printable ASCII characters. A byte in the range of printable ASCII characters (the range [0x20, 0x7e]) is represented by the corresponding ASCII character, with the exception of the backslash ('\'), which is escaped as '\\'. All other byte values are represented by their corresponding octal values. For example, the bytes {97,92,98,99}, which in ASCII are {a,\,b,c}, are translated to text as 'a\\bc'.

Binary operators and functions

The binary operators &, ~, |, and # have special behavior for binary data types, as described in Bitwise operators.

The following aggregate functions are supported for binary data types:

BIT_AND, BIT_OR, and BIT_XOR are bit-wise operations that are applied to each non-null value in a group, while MAX and MIN are byte-wise comparisons of binary values.

Like their binary operator counterparts, if the values in a group vary in length, the aggregate functions treat the values as though they are all equal in length by extending shorter values with zero bytes to the full width of the column. For example, given a group containing the values 'ff', null, and 'f', a binary aggregate ignores the null value and treats the value 'f' as 'f0'. Also, like their binary operator counterparts, these aggregate functions operate on VARBINARY types explicitly and operate on BINARY types implicitly through casts. See Data type coercion operators (CAST).

Binary versus character data types

The BINARY and VARBINARY binary types are similar to the CHAR and VARCHAR character data types, respectively. They differ as follows:

-

Binary data types contain byte strings (a sequence of octets or bytes).

-

Character data types contain character strings (text).

-

The lengths of binary data types are measured in bytes, while character data types are measured in characters.

Examples

The following example shows HEX_TO_BINARY and TO_HEX usage.

Table t and its projection are created with binary columns:

=> CREATE TABLE t (c BINARY(1));

=> CREATE PROJECTION t_p (c) AS SELECT c FROM t;

Insert minimum byte and maximum byte values:

=> INSERT INTO t values(HEX_TO_BINARY('0x00'));

=> INSERT INTO t values(HEX_TO_BINARY('0xFF'));

Binary values can then be formatted in hex on output using the TO_HEX function:

=> SELECT TO_HEX(c) FROM t;

to_hex

--------

00

ff

(2 rows)

The BIT_AND, BIT_OR, and BIT_XOR functions are interesting when operating on a group of values. For example, create a sample table and projections with binary columns:

The example that follows uses table t with a single column of VARBINARY data type:

=> CREATE TABLE t ( c VARBINARY(2) );

=> INSERT INTO t values(HEX_TO_BINARY('0xFF00'));

=> INSERT INTO t values(HEX_TO_BINARY('0xFFFF'));

=> INSERT INTO t values(HEX_TO_BINARY('0xF00F'));

Query table t to see column c output:

=> SELECT TO_HEX(c) FROM t;

TO_HEX

--------

ff00

ffff

f00f

(3 rows)

Now issue the bitwise AND operation. Because these are aggregate functions, an implicit GROUP BY operation is performed on results using (ff00&(ffff)&f00f):

=> SELECT TO_HEX(BIT_AND(c)) FROM t;

TO_HEX

--------

f000

(1 row)

Issue the bitwise OR operation on (ff00|(ffff)|f00f):

=> SELECT TO_HEX(BIT_OR(c)) FROM t;

TO_HEX

--------

ffff

(1 row)

Issue the bitwise XOR operation on (ff00#(ffff)#f00f):

=> SELECT TO_HEX(BIT_XOR(c)) FROM t;

TO_HEX

--------

f0f0

(1 row)

2 - Boolean data type

Vertica provides the standard SQL type BOOLEAN, which has two states: true and false.

Vertica provides the standard SQL type BOOLEAN, which has two states: true and false. The third state in SQL boolean logic is unknown, which is represented by the NULL value.

Syntax

BOOLEAN

Parameters

Valid literal data values for input are:

TRUE |

't' |

'true' |

'y' |

'yes' |

'1' |

1 |

FALSE |

'f' |

'false' |

'n' |

'no' |

'0' |

0 |

Notes

-

Do not confuse the BOOLEAN data type with Logical operators or the Boolean.

-

The keywords TRUE and FALSE are preferred and are SQL-compliant.

-

A Boolean value of NULL appears last (largest) in ascending order.

-

All other values must be enclosed in single quotes.

-

Boolean values are output using the letters t and f.

See also

3 - Character data types (CHAR and VARCHAR)

Stores strings of letters, numbers, and symbols.

Stores strings of letters, numbers, and symbols. The CHARACTER (CHAR) and CHARACTER VARYING (VARCHAR) data types are collectively referred to as character string types, and the values of character string types are known as character strings.

Character data can be stored as fixed-length or variable-length strings. Fixed-length strings are right-extended with spaces on output; variable-length strings are not extended.

String literals in SQL statements must be enclosed in single quotes.

Syntax

{ CHAR | CHARACTER } [ (octet-length) ]

{ VARCHAR | CHARACTER VARYING ] } [ (octet-length) ]

Arguments

octet-length- Length of the string or column width, declared in bytes (octets).

This argument is optional.

CHAR versus VARCHAR data types

The following differences apply to CHAR and VARCHAR data:

-

CHAR is conceptually a fixed-length, blank-padded string. Trailing blanks (spaces) are removed on input and are restored on output. The default length is 1, and the maximum length is 65000 octets (bytes).

-

VARCHAR is a variable-length character data type. The default length is 80, and the maximum length is 65000 octets. For string values longer than 65000, use Long data types. Values can include trailing spaces.

Normally, you use VARCHAR for all of string data. Use CHAR when you need fixed-width string output. For example, you can use CHAR columns for data to be transferred to a legacy system that requires fixed-width strings.

Setting maximum length

When you define character columns, specify the maximum size of any string to be stored in a column. For example, to store strings up to 24 octets in length, use one of the following definitions:

CHAR(24) --- fixed-length

VARCHAR(24) --- variable-length

The maximum length parameter for VARCHAR and CHAR data types refers to the number of octets that can be stored in that field, not the number of characters (Unicode code points). When using multibyte UTF-8 characters, the fields must be sized to accommodate from 1 to 4 octets per character, depending on the data. If the data loaded into a VARCHAR or CHAR column exceeds the specified maximum size for that column, data is truncated on UTF-8 character boundaries to fit within the specified size. See COPY.

Note

Remember to include the extra octets required for multibyte characters in the column-width declaration, keeping in mind the 65000 octet column-width limit.

Due to compression in Vertica, the cost of overestimating the length of these fields is incurred primarily at load time and during sorts.

NULL versus NUL

NULL and NUL differ as follows:

-

NUL represents a character whose ASCII/Unicode code is 0, sometimes qualified "ASCII NUL".

-

NULL means no value, and is true of a field (column) or constant, not of a character.

CHAR, LONG VARCHAR, and VARCHAR string data types accept ASCII NUL values.

In ascending sorts, NULL appears last (largest).

For additional information about NULL ordering, see NULL sort order.

The following example casts the input string containing NUL values to VARCHAR:

=> SELECT 'vert\0ica'::CHARACTER VARYING AS VARCHAR;

VARCHAR

---------

vert\0ica

(1 row)

The result contains 9 characters:

=> SELECT LENGTH('vert\0ica'::CHARACTER VARYING);

length

--------

9

(1 row)

If you use an extended string literal, the length is 8 characters:

=> SELECT E'vert\0ica'::CHARACTER VARYING AS VARCHAR;

VARCHAR

---------

vertica

(1 row)

=> SELECT LENGTH(E'vert\0ica'::CHARACTER VARYING);

LENGTH

--------

8

(1 row)

4 - Date/time data types

Vertica supports the full set of SQL date and time data types.

Vertica supports the full set of SQL date and time data types.

The following rules apply to all date/time data types:

-

All have a size of 8 bytes.

-

A date/time value of NULL is smallest relative to all other date/time values,.

-

Vertica uses Julian dates for all date/time calculations, which can correctly predict and calculate any date more recent than 4713 BC to far into the future, based on the assumption that the average length of the year is 365.2425 days.

-

All the date/time data types accept the special literal value NOW to specify the current date and time. For example:

=> SELECT TIMESTAMP 'NOW';

?column?

---------------------------

2020-09-23 08:23:50.42325

(1 row)

-

By default, Vertica rounds with a maximum precision of six decimal places. You can substitute an integer between 0 and 6 for p to specify your preferred level of precision.

The following table lists specific attributes of date/time data types:

|

Name |

Description |

Low Value |

High Value |

Resolution |

DATE |

Dates only (no time of day) |

~ 25e+15 BC |

~ 25e+15 AD |

1 day |

TIME [(p)] |

Time of day only (no date) |

00:00:00.00 |

23:59:60.999999 |

1 μs |

TIMETZ [(p)] |

Time of day only, with time zone |

00:00:00.00+14 |

23:59:59.999999-14 |

1 μs |

TIMESTAMP [(p)] |

Both date and time, without time zone |

290279-12-22 19:59:05.224194 BC |

294277-01-09 04:00:54.775806 AD |

1 μs |

TIMESTAMPTZ [(p)]* |

Both date and time, with time zone |

290279-12-22 19:59:05.224194 BC UTC |

294277-01-09 04:00:54.775806 AD UTC |

1 μs |

INTERVAL DAY TO SECOND [(p)] |

Time intervals |

-106751991 days 04:00:54.775807 |

+-106751991 days 04:00:54.775807 |

1 μs |

INTERVAL YEAR TO MONTH |

Time intervals |

~ -768e15 yrs |

~ 768e15 yrs |

1 month |

Vertica recognizes the files in

/opt/vertica/share/timezonesets as date/time input values and defines the default list of strings accepted in the AT TIME ZONE zone parameter. The names are not necessarily used for date/time output—output is driven by the official time zone abbreviations associated with the currently selected time zone parameter setting.

4.1 - DATE

Consists of a month, day, and year.

Consists of a month, day, and year. The following limits apply:

See SET DATESTYLE for information about ordering.

Note

'0000-00-00' is not valid. If you try to insert that value into a DATE or TIMESTAMP field, an error occurs. If you copy '0000-00-00' into a DATE or TIMESTAMP field, Vertica converts the value to 0001-01-01 00:00:00 BC.

Syntax

DATE

Examples

|

Example |

Description |

January 8, 1999 |

Unambiguous in any datestyle input mode |

1999-01-08 |

ISO 8601; January 8 in any mode (recommended format) |

1/8/1999 |

January 8 in MDY mode; August 1 in DMY mode |

1/18/1999 |

January 18 in MDY mode; rejected in other modes |

01/02/03 |

January 2, 2003 in MDY mode

February 1, 2003 in DMY mode

February 3, 2001 in YMD mode |

1999-Jan-08 |

January 8 in any mode |

Jan-08-1999 |

January 8 in any mode |

08-Jan-1999 |

January 8 in any mode |

99-Jan-08 |

January 8 in YMD mode, else error |

08-Jan-99 |

January 8, except error in YMD mode |

Jan-08-99 |

January 8, except error in YMD mode |

19990108 |

ISO 8601; January 8, 1999 in any mode |

990108 |

ISO 8601; January 8, 1999 in any mode |

1999.008 |

Year and day of year |

J2451187 |

Julian day |

January 8, 99 BC |

Year 99 before the Common Era |

4.2 - DATETIME

DATETIME is an alias for TIMESTAMP.

DATETIME is an alias for TIMESTAMP/TIMESTAMPTZ.

4.3 - INTERVAL

Measures the difference between two points in time.

Measures the difference between two points in time. Intervals can be positive or negative. The INTERVAL data type is SQL:2008 compliant, and supports interval qualifiers that are divided into two major subtypes:

-

Year-month: Span of years and months

-

Day-time: Span of days, hours, minutes, seconds, and fractional seconds

Intervals are represented internally as some number of microseconds and printed as up to 60 seconds, 60 minutes, 24 hours, 30 days, 12 months, and as many years as necessary. You can control the output format of interval units with SET INTERVALSTYLE and SET DATESTYLE.

Syntax

INTERVAL 'interval-literal' [ interval-qualifier ] [ (p) ]

Parameters

-

interval-literal

- A character string that expresses an interval, conforming to this format:

[-] { quantity subtype-unit }[...] [ AGO ]

For details, see Interval literal.

-

interval-qualifier

- Optionally specifies how to interpret and format an interval literal for output, and, optionally, sets precision. If omitted, the default is

DAY TO SECOND(6). For details, see Interval qualifier.

p- Specifies precision of the seconds field, where

p is an integer between 0 - 6. For details, see Specifying interval precision.

Default: 6

Limits

|

Name |

Low Value |

High Value |

Resolution |

INTERVAL DAY TO SECOND [(p)] |

-106751991 days 04:00:54.775807 |

+/-106751991 days 04:00:54.775807 |

1 microsecond |

INTERVAL YEAR TO MONTH |

~/ -768e15 yrs |

~ 768e15 yrs |

1 month |

4.3.1 - Setting interval unit display

SET INTERVALSTYLE and SET DATESTYLE control the output format of interval units.

SET INTERVALSTYLE and SET DATESTYLE control the output format of interval units.

Important

DATESTYLE settings supersede INTERVALSTYLE. If DATESTYLE is set to SQL, interval unit display always conforms to the SQL:2008 standard, which omits interval unit display. If DATESTYLE is set to ISO, you can use

SET INTERVALSTYLE to omit or display interval unit display, as described below.

Omitting interval units

To omit interval units from the output, set INTERVALSTYLE to PLAIN. This is the default setting, which conforms with the SQL:2008 standard:

=> SET INTERVALSTYLE TO PLAIN;

SET

=> SELECT INTERVAL '3 2';

?column?

----------

3 02:00

When INTERVALSTYLE is set to PLAIN, units are omitted from the output, even if the query specifies input units:

=> SELECT INTERVAL '3 days 2 hours';

?column?

----------

3 02:00

If DATESTYLE is set to SQL, Vertica conforms with SQL:2008 standard and always omits interval units from output:

=> SET DATESTYLE TO SQL;

SET

=> SET INTERVALSTYLE TO UNITS;

SET

=> SELECT INTERVAL '3 2';

?column?

----------

3 02:00

Displaying interval units

To enable display of interval units, DATESTYLE must be set to ISO. You can then display interval units by setting INTERVALSTYLE to UNITS:

=> SET DATESTYLE TO ISO;

SET

=> SET INTERVALSTYLE TO UNITS;

SET

=> SELECT INTERVAL '3 2';

?column?

----------------

3 days 2 hours

Checking INTERVALSTYLE and DATESTYLE settings

Use

SHOW statements to check INTERVALSTYLE and DATESTYLE settings:

=> SHOW INTERVALSTYLE;

name | setting

---------------+---------

intervalstyle | units

=> SHOW DATESTYLE;

name | setting

-----------+----------

datestyle | ISO, MDY

4.3.2 - Specifying interval input

Interval values are expressed through interval literals.

Interval values are expressed through interval literals. An interval literal is composed of one or more interval fields, where each field represents a span of days and time, or years and months, as follows:

[-] { quantity subtype-unit }[...] [AGO]

Using subtype units

Subtype units are optional for day-time intervals; they must be specified for year-month intervals.

For example, the first statement below implicitly specifies days and time; the second statement explicitly identifies day and time units. Both statements return the same result:

=> SET INTERVALSTYLE TO UNITS;

=> SELECT INTERVAL '1 12:59:10:05';

?column?

--------------------

1 day 12:59:10.005

(1 row)

=> SELECT INTERVAL '1 day 12 hours 59 min 10 sec 5 milliseconds';

?column?

--------------------

1 day 12:59:10.005

(1 row)

The following two statements add 28 days and 4 weeks to the current date, respectively. The intervals in both cases are equal and the statements return the same result. However, in the first statement, the interval literal omits the subtype (implicitly days); in the second statement, the interval literal must include the subtype unit weeks:

=> SELECT CURRENT_DATE;

?column?

------------

2016-08-15

(1 row)

=> SELECT CURRENT_DATE + INTERVAL '28';

?column?

---------------------

2016-09-12 00:00:00

(1 row)

dbadmin=> SELECT CURRENT_DATE + INTERVAL '4 weeks';

?column?

---------------------

2016-09-12 00:00:00

(1 row)

An interval literal can include day-time and year-month fields. For example, the following statement adds an interval of 4 years, 4 weeks, 4 days and 14 hours to the current date. The years and weeks fields must include subtype units; the days and hours fields omit them:

> SELECT CURRENT_DATE + INTERVAL '4 years 4 weeks 4 14';

?column?

---------------------

2020-09-15 14:00:00

(1 row)

Omitting subtype units

You can specify quantities of days, hours, minutes, and seconds without specifying units. Vertica recognizes colons in interval literals as part of the timestamp:

=> SELECT INTERVAL '1 4 5 6';

?column?

------------

1 day 04:05:06

=> SELECT INTERVAL '1 4:5:6';

?column?

------------

1 day 04:05:06

=> SELECT INTERVAL '1 day 4 hour 5 min 6 sec';

?column?

------------

1 day 04:05:06

If Vertica cannot determine the units, it applies the quantity to any missing units based on the interval qualifier. In the next two examples, Vertica uses the default interval qualifier (DAY TO SECOND(6)) and assigns the trailing 1 to days, since it has already processed hours, minutes, and seconds in the output:

=> SELECT INTERVAL '4:5:6 1';

?column?

------------

1 day 04:05:06

=> SELECT INTERVAL '1 4:5:6';

?column?

------------

1 day 04:05:06

In the next two examples, Vertica recognizes 4:5 as hours:minutes. The remaining values in the interval literal are assigned to the missing units: 1 is assigned to days and 2 is assigned to seconds.

SELECT INTERVAL '4:5 1 2';

?column?

------------

1 day 04:05:02

=> SELECT INTERVAL '1 4:5 2';

?column?

------------

1 day 04:05:02

Specifying the interval qualifier can change how Vertica interprets 4:5:

=> SELECT INTERVAL '4:5' MINUTE TO SECOND;

?column?

------------

00:04:05

4.3.3 - Controlling interval format

Interval qualifiers specify a range of options that Vertica uses to interpret and format an interval literal.

Interval qualifiers specify a range of options that Vertica uses to interpret and format an interval literal. The interval qualifier can also specify precision. Each interval qualifier is composed of one or two units:

unit[p] [ TO unit[p] ]

where:

-

unit specifies a day-time or year-month subtype.

-

p specifies precision, an integer between 0 and 6. In general, precision only applies to SECOND units. The default precision for SECOND is 6. For details, see Specifying interval precision.

If an interval omits an interval qualifier, Vertica uses the default DAY TO SECOND(6).

Interval qualifier categories

Interval qualifiers belong to one of the following categories:

-

Year-month: Span of years and months

-

Day-time: Span of days, hours, minutes, seconds, and fractional seconds

Note

All examples below assume that

INTERVALSTYLE is set to plain.

Year-Month

Vertica supports two year-month subtypes: YEAR and MONTH.

In the following example, YEAR TO MONTH qualifies the interval literal 1 2 to indicate a span of 1 year and two months:

=> SELECT interval '1 2' YEAR TO MONTH;

?column?

----------

1-2

(1 row)

If you omit the qualifier, Vertica uses the default interval qualifier DAY TO SECOND and returns a different result:

=> SELECT interval '1 2';

?column?

----------

1 02:00

(1 row)

The following example uses the interval qualifier YEAR. In this case, Vertica extracts only the year from the interval literal 1y 10m :

=> SELECT INTERVAL '1y 10m' YEAR;

?column?

----------

1

(1 row)

In the next example, the interval qualifier MONTH converts the same interval literal to months:

=> SELECT INTERVAL '1y 10m' MONTH;

?column?

----------

22

(1 row)

Day-time

Vertica supports four day-time subtypes: DAY, HOUR, MINUTE, and SECOND.

In the following example, the interval qualifier DAY TO SECOND(4) qualifies the interval literal 1h 3m 6s 5msecs 57us. The qualifier also sets precision on seconds to 4:

=> SELECT INTERVAL '1h 3m 6s 5msecs 57us' DAY TO SECOND(4);

?column?

---------------

01:03:06.0051

(1 row)

If no interval qualifier is specified, Vertica uses the default subtype DAY TO SECOND(6), regardless of how you specify the interval literal. For example, as an extension to SQL:2008, both of the following commands return 910 days:

=> SELECT INTERVAL '2-6';

?column?

-----------------

910

=> SELECT INTERVAL '2 years 6 months';

?column?

-----------------

910

An interval qualifier can extract other values from the input parameters. For example, the following command extracts the HOUR value from the interval literal 3 days 2 hours:

=> SELECT INTERVAL '3 days 2 hours' HOUR;

?column?

----------

74

The primary day/time (DAY TO SECOND) and year/month (YEAR TO MONTH) subtype ranges can be restricted to more specific range of types by an interval qualifier. For example, HOUR TO MINUTE is a limited form of day/time interval, which can be used to express time zone offsets.

=> SELECT INTERVAL '1 3' HOUR to MINUTE;

?column?

---------------

01:03

hh:mm:ss and hh:mm formats are used only when at least two of the fields specified in the interval qualifier are non-zero and there are no more than 23 hours or 59 minutes:

=> SELECT INTERVAL '2 days 12 hours 15 mins' DAY TO MINUTE;

?column?

--------------

2 12:15

=> SELECT INTERVAL '15 mins 20 sec' MINUTE TO SECOND;

?column?

----------

15:20

=> SELECT INTERVAL '1 hour 15 mins 20 sec' MINUTE TO SECOND;

?column?

-----------------

75:20

4.3.4 - Specifying interval precision

In general, interval precision only applies to seconds.

In general, interval precision only applies to seconds. If no precision is explicitly specified, Vertica rounds precision to a maximum of six decimal places. For example:

=> SELECT INTERVAL '2 hours 4 minutes 3.709384766 seconds' DAY TO SECOND;

?column?

-----------------

02:04:03.709385

(1 row)

Vertica lets you specify interval precision in two ways:

For example, the following statements use both methods to set precision, and return identical results:

=> SELECT INTERVAL(4) '2 hours 4 minutes 3.709384766 seconds' DAY TO SECOND;

?column?

---------------

02:04:03.7094

(1 row)

=> SELECT INTERVAL '2 hours 4 minutes 3.709384766 seconds' DAY TO SECOND(4);

?column?

---------------

02:04:03.7094

(1 row)

If the same statement specifies precision more than once, Vertica uses the lesser precision. For example, the following statement specifies precision twice: the INTERVAL keyword specifies precision of 1, while the interval qualifier SECOND specifies precision of 2. Vertica uses the lesser precision of 1:

=> SELECT INTERVAL(1) '1.2467' SECOND(2);

?column?

----------

1.2 secs

Setting precision on interval table columns

If you create a table with an interval column, the following restrictions apply to the column definition:

-

You can set precision on the INTERVAL keyword only if you omit specifying an interval qualifier. If you try to set precision on the INTERVAL keyword and include an interval qualifier, Vertica returns an error.

-

You can set precision only on the last unit of an interval qualifier. For example:

CREATE TABLE public.testint2

(

i INTERVAL HOUR TO SECOND(3)

);

If you specify precision on another unit, Vertica discards it when it saves the table definition.

4.3.5 - Fractional seconds in interval units

Vertica supports intervals in milliseconds (hh:mm:ss:ms), where 01:02:03:25 represents 1 hour, 2 minutes, 3 seconds, and 025 milliseconds.

Vertica supports intervals in milliseconds (hh:mm:ss:ms), where 01:02:03:25 represents 1 hour, 2 minutes, 3 seconds, and 025 milliseconds. Milliseconds are converted to fractional seconds as in the following example, which returns 1 day, 2 hours, 3 minutes, 4 seconds, and 25.5 milliseconds:

=> SELECT INTERVAL '1 02:03:04:25.5';

?column?

------------

1 day 02:03:04.0255

Vertica allows fractional minutes. The fractional minutes are rounded into seconds:

=> SELECT INTERVAL '10.5 minutes';

?column?

------------

00:10:30

=> select interval '10.659 minutes';

?column?

-------------

00:10:39.54

=> select interval '10.3333333333333 minutes';

?column?

----------

00:10:20

Considerations

-

An INTERVAL can include only the subset of units that you need; however, year/month intervals represent calendar years and months with no fixed number of days, so year/month interval values cannot include days, hours, minutes. When year/month values are specified for day/time intervals, the intervals extension assumes 30 days per month and 365 days per year. Since the length of a given month or year varies, day/time intervals are never output as months or years, only as days, hours, minutes, and so on.

-

Day/time and year/month intervals are logically independent and cannot be combined with or compared to each other. In the following example, an interval-literal that contains DAYS cannot be combined with the YEAR TO MONTH type:

=> SELECT INTERVAL '1 2 3' YEAR TO MONTH;

ERROR 3679: Invalid input syntax for interval year to month: "1 2 3"

-

Vertica accepts intervals up to 2^63 – 1 microseconds or months (about 18 digits).

-

INTERVAL YEAR TO MONTH can be used in an analytic RANGE window when the ORDER BY column type is TIMESTAMP/TIMESTAMP WITH TIMEZONE, or DATE. Using TIME/TIME WITH TIMEZONE are not supported.

-

You can use INTERVAL DAY TO SECOND when the ORDER BY column type is TIMESTAMP/TIMESTAMP WITH TIMEZONE, DATE, and TIME/TIME WITH TIMEZONE.

Examples

Examples in this section assume that INTERVALSTYLE is set to PLAIN, so results omit subtype units. Interval values that omit an interval qualifier use the default to DAY TO SECOND(6).

=> SELECT INTERVAL '00:2500:00';

?column?

----------

1 17:40

(1 row)

=> SELECT INTERVAL '2500' MINUTE TO SECOND;

?column?

----------

2500

(1 row)

=> SELECT INTERVAL '2500' MINUTE;

?column?

----------

2500

(1 row)

=> SELECT INTERVAL '28 days 3 hours' HOUR TO SECOND;

?column?

----------

675:00

(1 row)

=> SELECT INTERVAL(3) '28 days 3 hours';

?column?

----------

28 03:00

(1 row)

=> SELECT INTERVAL(3) '28 days 3 hours 1.234567';

?column?

-----------------

28 03:01:14.074

(1 row)

=> SELECT INTERVAL(3) '28 days 3 hours 1.234567 sec';

?column?

-----------------

28 03:00:01.235

(1 row)

=> SELECT INTERVAL(3) '28 days 3.3 hours' HOUR TO SECOND;

?column?

----------

675:18

(1 row)

=> SELECT INTERVAL(3) '28 days 3.35 hours' HOUR TO SECOND;

?column?

----------

675:21

(1 row)

=> SELECT INTERVAL(3) '28 days 3.37 hours' HOUR TO SECOND;

?column?

-----------

675:22:12

(1 row)

=> SELECT INTERVAL '1.234567 days' HOUR TO SECOND;

?column?

---------------

29:37:46.5888

(1 row)

=> SELECT INTERVAL '1.23456789 days' HOUR TO SECOND;

?column?

-----------------

29:37:46.665696

(1 row)

=> SELECT INTERVAL(3) '1.23456789 days' HOUR TO SECOND;

?column?

--------------

29:37:46.666

(1 row)

=> SELECT INTERVAL(3) '1.23456789 days' HOUR TO SECOND(2);

?column?

-------------

29:37:46.67

(1 row)

=> SELECT INTERVAL(3) '01:00:01.234567' as "one hour+";

one hour+

--------------

01:00:01.235

(1 row)

=> SELECT INTERVAL(3) '01:00:01.234567' = INTERVAL(3) '01:00:01.234567';

?column?

----------

t

(1 row)

=> SELECT INTERVAL(3) '01:00:01.234567' = INTERVAL '01:00:01.234567';

?column?

----------

f

(1 row)

=> SELECT INTERVAL(3) '01:00:01.234567' = INTERVAL '01:00:01.234567'

HOUR TO SECOND(3);

?column?

----------

t

(1 row)

=> SELECT INTERVAL(3) '01:00:01.234567' = INTERVAL '01:00:01.234567'

MINUTE TO SECOND(3);

?column?

----------

t

(1 row)

=> SELECT INTERVAL '255 1.1111' MINUTE TO SECOND(3);

?column?

------------

255:01.111

(1 row)

=> SELECT INTERVAL '@ - 5 ago';

?column?

----------

5

(1 row)

=> SELECT INTERVAL '@ - 5 minutes ago';

?column?

----------

00:05

(1 row)

=> SELECT INTERVAL '@ 5 minutes ago';

?column?

----------

-00:05

(1 row)

=> SELECT INTERVAL '@ ago -5 minutes';

?column?

----------

00:05

(1 row)

=> SELECT DATE_PART('month', INTERVAL '2-3' YEAR TO MONTH);

DATE_PART

-----------

3

(1 row)

=> SELECT FLOOR((TIMESTAMP '2005-01-17 10:00'

- TIMESTAMP '2005-01-01')

/ INTERVAL '7');

FLOOR

-------

2

(1 row)

4.3.6 - Processing signed intervals

In the SQL:2008 standard, a minus sign before an interval-literal or as the first character of the interval-literal negates the entire literal, not just the first component.

In the SQL:2008 standard, a minus sign before an interval-literal or as the first character of the interval-literal negates the entire literal, not just the first component. In Vertica, a leading minus sign negates the entire interval, not just the first component. The following commands both return the same value:

=> SELECT INTERVAL '-1 month - 1 second';

?column?

----------

-29 days 23:59:59

=> SELECT INTERVAL -'1 month - 1 second';

?column?

----------

-29 days 23:59:59

Use one of the following commands instead to return the intended result:

=> SELECT INTERVAL -'1 month 1 second';

?column?

----------

-30 days 1 sec

=> SELECT INTERVAL -'30 00:00:01';

?column?

----------

-30 days 1 sec

Two negatives together return a positive:

=> SELECT INTERVAL -'-1 month - 1 second';

?column?

----------

29 days 23:59:59

=> SELECT INTERVAL -'-1 month 1 second';

?column?

----------

30 days 1 sec

You can use the year-month syntax with no spaces. Vertica allows the input of negative months but requires two negatives when paired with years.

=> SELECT INTERVAL '3-3' YEAR TO MONTH;

?column?

----------

3 years 3 months

=> SELECT INTERVAL '3--3' YEAR TO MONTH;

?column?

----------

2 years 9 months

When the interval-literal looks like a year/month type, but the type is day/second, or vice versa, Vertica reads the interval-literal from left to right, where number-number is years-months, and number <space> <signed number> is whatever the units specify. Vertica processes the following command as (–) 1 year 1 month = (–) 365 + 30 = –395 days:

=> SELECT INTERVAL '-1-1' DAY TO HOUR;

?column?

----------

-395 days

If you insert a space in the interval-literal, Vertica processes it based on the subtype DAY TO HOUR: (–) 1 day – 1 hour = (–) 24 – 1 = –23 hours:

=> SELECT INTERVAL '-1 -1' DAY TO HOUR;

?column?

----------

-23 hours

Two negatives together returns a positive, so Vertica processes the following command as (–) 1 year – 1 month = (–) 365 – 30 = –335 days:

=> SELECT INTERVAL '-1--1' DAY TO HOUR;

?column?

----------

-335 days

If you omit the value after the hyphen, Vertica assumes 0 months and processes the following command as 1 year 0 month –1 day = 365 + 0 – 1 = –364 days:

=> SELECT INTERVAL '1- -1' DAY TO HOUR;

?column?

----------

364 days

4.3.7 - Casting with intervals

You can use CAST to convert strings to intervals, and vice versa.

You can use CAST to convert strings to intervals, and vice versa.

String to interval

You cast a string to an interval as follows:

CAST( [ INTERVAL[(p)] ] [-] ] interval-literal AS INTERVAL[(p)] interval-qualifier )

For example:

=> SELECT CAST('3700 sec' AS INTERVAL);

?column?

----------

01:01:40

You can cast intervals within day-time or the year-month subtypes but not between them:

=> SELECT CAST(INTERVAL '4440' MINUTE as INTERVAL);

?column?

----------

3 days 2 hours

=> SELECT CAST(INTERVAL -'01:15' as INTERVAL MINUTE);

?column?

----------

-75 mins

Interval to string

You cast an interval to a string as follows:

CAST( (SELECT interval ) AS VARCHAR[(n)] )

For example:

=> SELECT CONCAT(

'Tomorrow at this time: ',

CAST((SELECT INTERVAL '24 hours') + CURRENT_TIMESTAMP(0) AS VARCHAR));

CONCAT

-----------------------------------------------

Tomorrow at this time: 2016-08-17 08:41:23-04

(1 row)

4.3.8 - Operations with intervals

If you divide an interval by an interval, you get a FLOAT:.

If you divide an interval by an interval, you get a FLOAT:

=> SELECT INTERVAL '28 days 3 hours' HOUR(4) / INTERVAL '27 days 3 hours' HOUR(4);

?column?

------------

1.036866359447

An INTERVAL divided by FLOAT returns an INTERVAL:

=> SELECT INTERVAL '3' MINUTE / 1.5;

?column?

------------

2 mins

INTERVAL MODULO (remainder) INTERVAL returns an INTERVAL:

=> SELECT INTERVAL '28 days 3 hours' HOUR % INTERVAL '27 days 3 hours' HOUR;

?column?

------------

24 hours

If you add INTERVAL and TIME, the result is TIME, modulo 24 hours:

=> SELECT INTERVAL '1' HOUR + TIME '1:30';

?column?

------------

02:30:00

4.4 - SMALLDATETIME

SMALLDATETIME is an alias for TIMESTAMP.

SMALLDATETIME is an alias for TIMESTAMP/TIMESTAMPTZ.

4.5 - TIME/TIMETZ

Stores the specified time of day.

Stores the specified time of day. TIMETZ is the same as TIME WITH TIME ZONE: both data types store the UTC offset of the specified time.

Syntax

TIME [ (p) ] [ { WITHOUT | WITH } TIME ZONE ] 'input-string' [ AT TIME ZONE zone ]

Parameters

p- Optional precision value that specifies the number of fractional digits retained in the seconds field, an integer value between 0 and 6. If you omit specifying precision, Vertica returns up to 6 fractional digits.

WITHOUT TIME ZONE- Ignore any time zone in the input string and use a value without a time zone (default).

WITH TIME ZONE- Convert the time to UTC. If the input string includes a time zone, use its UTC offset for the conversion. If the input string omits a time zone, Vertica uses the UTC offset of the time zone that is configured for your system.

input-string- See Input String below.

-

AT TIME ZONE zone

- See TIME AT TIME ZONE and TIMESTAMP AT TIME ZONE.

TIMETZ vs. TIMESTAMPTZ

TIMETZ and

TIMESTAMPTZ are not parallel SQL constructs. TIMESTAMPTZ records a time and date in GMT, converting from the specified TIME ZONE.TIMETZ records the specified time and the specified time zone, in minutes, from GMT.

Limits

|

Name |

Low Value |

High Value |

Resolution |

TIME [p] |

00:00:00.00 |

23:59:60.999999 |

1 µs |

TIME [p] WITH TIME ZONE |

00:00:00.00+14 |

23:59:59.999999-14 |

1 µs |

A TIME input string can be set to any of the formats shown below:

|

Example |

Description |

04:05:06.789 |

ISO 8601 |

04:05:06 |

ISO 8601 |

04:05 |

ISO 8601 |

040506 |

ISO 8601 |

04:05 AM |

Same as 04:05; AM does not affect value |

04:05 PM |

Same as 16:05 |

04:05:06.789-8 |

ISO 8601 |

04:05:06-08:00 |

ISO 8601 |

04:05-08:00 |

ISO 8601 |

040506-08 |

ISO 8601 |

04:05:06 PST |

Time zone specified by name |

Data type coercion

You can cast a TIME or TIMETZ interval to a TIMESTAMP. This returns the local date and time as follows:

=> SELECT (TIME '3:01am')::TIMESTAMP;

?column?

---------------------

2012-08-30 03:01:00

(1 row)

=> SELECT (TIMETZ '3:01am')::TIMESTAMP;

?column?

---------------------

2012-08-22 03:01:00

(1 row)

Casting the same TIME or TIMETZ interval to a TIMESTAMPTZ returns the local date and time, appended with the UTC offset—in this example, -05:

=> SELECT (TIME '3:01am')::TIMESTAMPTZ;

?column?

------------------------

2016-12-08 03:01:00-05

(1 row)

4.6 - TIME AT TIME ZONE

Converts the specified TIME to the time in another time zone.

Converts the specified TIME to the time in another time zone.

Syntax

TIME [WITH TIME ZONE] 'input-string' AT TIME ZONE 'zone'

Parameters

WITH TIME ZONE- Converts the input string to UTC, using the UTC offset for the specified time zone. If the input string omits a time zone, Vertica uses the UTC offset of the time zone that is configured for your system, and converts the input string accordingly

zone- Specifies the time zone to use in the conversion, either as a literal or interval that specifies UTC offset:

For details, see Specifying Time Zones below.

Note

Vertica treats literals TIME ZONE and TIMEZONE as synonyms.

Specifying time zones

You can specify time zones in two ways:

-

A string literal such as America/Chicago or PST

-

An interval that specifies a UTC offset—for example, INTERVAL '-08:00'

It is generally good practice to specify time zones with literals that indicate a geographic location. Vertica makes the necessary seasonal adjustments, and thereby avoids inconsistent results. For example, the following two queries are issued when daylight time is in effect. Because the local UTC offset during daylight time is -04, both queries return the same results:

=> SELECT CURRENT_TIME(0) "EDT";

EDT

-------------

12:34:35-04

(1 row)

=> SELECT CURRENT_TIME(0) AT TIME ZONE 'America/Denver' "Mountain Time";

Mountain Time

---------------

10:34:35-06

(1 row)

=> SELECT CURRENT_TIME(0) AT TIME ZONE INTERVAL '-06:00' "Mountain Time";

Mountain Time

---------------

10:34:35-06

(1 row)

If you issue a use the UTC offset in a similar query when standard time is in effect, you must adjust the UTC offset accordingly—for Denver time, to -07—otherwise, Vertica returns a different (and erroneous) result:

=> SELECT CURRENT_TIME(0) "EST";

EST

-------------

14:18:22-05

(1 row)

=> SELECT CURRENT_TIME(0) AT TIME ZONE INTERVAL '-06:00' "Mountain Time";

Mountain Time

---------------

13:18:22-06

(1 row)

You can show and set the session's time zone with

SHOW TIMEZONE and

SET TIME ZONE, respectively:

=> SHOW TIMEZONE;

name | setting

----------+------------------

timezone | America/New_York

(1 row)

=> SELECT CURRENT_TIME(0) "Eastern Daylight Time";

Eastern Daylight Time

-----------------------

12:18:24-04

(1 row)

=> SET TIMEZONE 'America/Los_Angeles';

SET

=> SELECT CURRENT_TIME(0) "Pacific Daylight Time";

Pacific Daylight Time

-----------------------

09:18:24-07

(1 row)

Time zone literals

To view the default list of valid literals, see the files in the following directory:

opt/vertica/share/timezonesets

For example:

$ cat Antarctica.txt

...

# src/timezone/tznames/Antarctica.txt

#

AWST 28800 # Australian Western Standard Time

# (Antarctica/Casey)

# (Australia/Perth)

...

NZST 43200 # New Zealand Standard Time

# (Antarctica/McMurdo)

# (Pacific/Auckland)

ROTT -10800 # Rothera Time

# (Antarctica/Rothera)

SYOT 10800 # Syowa Time

# (Antarctica/Syowa)

VOST 21600 # Vostok time

# (Antarctica/Vostok)

Examples

The following example assumes that local time is EST (Eastern Standard Time). The query converts the specified time to MST (mountain standard time):

=> SELECT CURRENT_TIME(0);

timezone

-------------

10:10:56-05

(1 row)

=> SELECT TIME '10:10:56' AT TIME ZONE 'America/Denver' "Denver Time";

Denver Time

-------------

08:10:56-07

(1 row)

The next example adds a time zone literal to the input string—in this case, Europe/Vilnius—and converts the time to MST:

=> SELECT TIME '09:56:13 Europe/Vilnius' AT TIME ZONE 'America/Denver';

Denver Time

-------------

00:56:13-07

(1 row)

See also

4.7 - TIMESTAMP/TIMESTAMPTZ

Stores the specified date and time.

Stores the specified date and time. TIMESTAMPTZ is the same as TIMESTAMP WITH TIME ZONE: both data types store the UTC offset of the specified time.

TIMESTAMP is an alias for DATETIME and SMALLDATETIME.

Syntax

TIMESTAMP [ (p) ] [ { WITHOUT | WITH } TIME ZONE ] 'input-string' [AT TIME ZONE zone ]

TIMESTAMPTZ [ (p) ] 'input-string' [ AT TIME ZONE zone ]

Parameters

p- Optional precision value that specifies the number of fractional digits retained in the seconds field, an integer value between 0 and 6. If you omit specifying precision, Vertica returns up to 6 fractional digits.

WITHOUT TIME ZONE

WITH TIME ZONESpecifies whether to include a time zone with the stored value:

-

WITHOUT TIME ZONE (default): Specifiesthat input-string does not include a time zone. If the input string contains a time zone, Vertica ignores this qualifier. Instead, it conforms to WITH TIME ZONE behavior.

-

WITH TIME ZONE: Specifies to convert input-string to UTC, using the UTC offset for the specified time zone. If the input string omits a time zone, Vertica uses the UTC offset of the time zone that is configured for your system.

input-string- See Input String below.

-

AT TIME ZONE zone

- See TIMESTAMP AT TIME ZONE.

Limits

In the following table, values are rounded. See Date/time data types for more detail.

|

Name |

Low Value |

High Value |

Resolution |

TIMESTAMP [ (p) ] [ WITHOUT TIME ZONE ] |

290279 BC |

294277 AD |

1 µs |

TIMESTAMP [ (p) ] WITH TIME ZONE |

290279 BC |

294277 AD |

1 µs |

The date/time input string concatenates a date and a time. The input string can include a time zone, specified as a literal such as America/Chicago, or as a UTC offset.

The following list represents typical date/time input variations:

Note

0000-00-00 is invalid input. If you try to insert that value into a DATE or TIMESTAMP field, an error occurs. If you copy 0000-00-00 into a DATE or TIMESTAMP field, Vertica converts the value to 0001-01-01 00:00:00 BC.

The input string can also specify the calendar era, either AD (default) or BC. If you omit the calendar era, Vertica assumes the current calendar era (AD). The calendar era typically follows the time zone; however, the input string can include it in various locations. For example, the following queries return the same results:

=> SELECT TIMESTAMP WITH TIME ZONE 'March 1, 44 12:00 CET BC ' "Caesar's Time of Death EST";

Caesar's Time of Death EST

----------------------------

0044-03-01 06:00:00-05 BC

(1 row)

=> SELECT TIMESTAMP WITH TIME ZONE 'March 1, 44 12:00 BC CET' "Caesar's Time of Death EST";

Caesar's Time of Death EST

----------------------------

0044-03-01 06:00:00-05 BC

(1 row)

Examples

=> SELECT (TIMESTAMP '2014-01-17 10:00' - TIMESTAMP '2014-01-01');

?column?

----------

16 10:00

(1 row)

=> SELECT (TIMESTAMP '2014-01-17 10:00' - TIMESTAMP '2014-01-01') / 7;

?column?

-------------------

2 08:17:08.571429

(1 row)

=> SELECT TIMESTAMP '2009-05-29 15:21:00.456789'-TIMESTAMP '2009-05-28';

?column?

-------------------

1 15:21:00.456789

(1 row)

=> SELECT (TIMESTAMP '2009-05-29 15:21:00.456789'-TIMESTAMP '2009-05-28')(3);

?column?

----------------

1 15:21:00.457

(1 row)

=> SELECT '2017-03-18 07:00'::TIMESTAMPTZ(0) + INTERVAL '1.5 day';

?column?

------------------------

2017-03-19 19:00:00-04

(1 row)

=> SELECT (TIMESTAMP '2014-01-17 10:00' - TIMESTAMP '2014-01-01') day;

?column?

----------

16

(1 row)

=> SELECT cast((TIMESTAMP '2014-01-17 10:00' - TIMESTAMP '2014-01-01')

day as integer) / 7;

?column?

----------------------

2.285714285714285714

(1 row)

=> SELECT floor((TIMESTAMP '2014-01-17 10:00' - TIMESTAMP '2014-01-01')

/ interval '7');

floor

-------

2

(1 row)

=> SELECT (TIMESTAMP '2009-05-29 15:21:00.456789'-TIMESTAMP '2009-05-28') second;

?column?

---------------

141660.456789

(1 row)

=> SELECT (TIMESTAMP '2012-05-29 15:21:00.456789'-TIMESTAMP '2009-01-01') year;

?column?

----------

3

(1 row)

=> SELECT (TIMESTAMP '2012-05-29 15:21:00.456789'-TIMESTAMP '2009-01-01') month;

?column?

----------

40

(1 row)

=> SELECT (TIMESTAMP '2012-05-29 15:21:00.456789'-TIMESTAMP '2009-01-01')

year to month;

?column?

----------

3-4

(1 row)

=> SELECT (TIMESTAMP '2012-05-29 15:21:00.456789'-TIMESTAMP '2009-01-01')

second(3);

?column?

---------------

107536860.457

(1 row)

=> SELECT (TIMESTAMP '2012-05-29 15:21:00.456789'-TIMESTAMP '2009-01-01') minute;

?column?

----------

1792281

(1 row)

=> SELECT (TIMESTAMP '2012-05-29 15:21:00.456789'-TIMESTAMP '2009-01-01')

minute to second(3);

?column?

----------------

1792281:00.457

(1 row)

=> SELECT TIMESTAMP 'infinity';

?column?

----------

infinity

(1 row)

4.8 - TIMESTAMP AT TIME ZONE

Converts the specified TIMESTAMP or TIMESTAMPTZ (TIMESTAMP WITH TIMEZONE) to another time zone.

Converts the specified TIMESTAMP or TIMESTAMPTZ (TIMESTAMP WITH TIMEZONE) to another time zone. Vertica executes AT TIME ZONE differently, depending on whether the date input is a TIMESTAMP or TIMESTAMPTZ. See TIMESTAMP versus TIMESTAMPTZ Behavior below.

Syntax

timestamp-clause AT TIME ZONE 'zone'

Parameters

- [timestamp-clause](/en/sql-reference/data-types/datetime-data-types/timestamptimestamptz/)

- Specifies the timestamp to convert, either

TIMESTAMP or TIMESTAMPTZ.

For details, see

TIMESTAMP/TIMESTAMPTZ.

AT TIME ZONE zone- Specifies the time zone to use in the timestamp conversion, where

zone is a literal or interval that specifies a UTC offset:

For details, see Specifying Time Zones below.

Note

Vertica treats literals TIME ZONE and TIMEZONE as synonyms.

TIMESTAMP versus TIMESTAMPTZ behavior

How Vertica interprets AT TIME ZONE depends on whether the date input is a TIMESTAMP or TIMESTAMPTZ:

|

Date input |

Action |

TIMESTAMP |

If the input string specifies no time zone, Vertica performs two actions:

-

Converts the input string to the time zone of the AT TIME ZONE argument.

-

Returns the time for the current session's time zone.

If the input string includes a time zone, Vertica implicitly casts it to a TIMESTAMPTZ and converts it accordingly (see TIMESTAMPTZ below).

For example, the following statement specifies a TIMESTAMP with no time zone. Vertica executes the statement as follows:

-

Converts the input string to PDT (Pacific Daylight Time).

-

Returns that time in the local time zone, which is three hours later:

=> SHOW TIMEZONE;

name | setting

----------+------------------

timezone | America/New_York

(1 row)

SELECT TIMESTAMP '2017-3-14 5:30' AT TIME ZONE 'PDT';

timezone

------------------------

2017-03-14 08:30:00-04

(1 row)

|

TIMESTAMPTZ |

Vertica converts the input string to the time zone of the AT TIME ZONE argument and returns that time.

For example, the following statement specifies a TIMESTAMPTZ data type. The input string omits any time zone expression, so Vertica assumes the input string to be in local time zone (America/New_York) and returns the time of the AT TIME ZONE argument:

=> SHOW TIMEZONE;

name | setting

----------+------------------

timezone | America/New_York

(1 row)

=> SELECT TIMESTAMP WITH TIME ZONE '2001-02-16 20:38:40' AT TIME ZONE 'America/Denver';

timezone

---------------------

2001-02-16 18:38:40

(1 row)

The input string in the next statement explicitly specifies a time zone, so Vertica coerces the TIMESTAMP to a TIMESTAMPTZ and returns the time of the AT TIME ZONE argument:

=> SELECT TIMESTAMP '2001-02-16 20:38:40 America/Mexico_City' AT TIME ZONE 'Asia/Tokyo';

timezone

---------------------

2001-02-17 11:38:40

(1 row)

|

Specifying time zones

You can specify time zones in two ways:

-

A string literal such as America/Chicago or PST

-

An interval that specifies a UTC offset—for example, INTERVAL '-08:00'

It is generally good practice to specify time zones with literals that indicate a geographic location. Vertica makes the necessary seasonal adjustments, and thereby avoids inconsistent results. For example, the following two queries are issued when daylight time is in effect. Because the local UTC offset during daylight time is -04, both queries return the same results:

=> SELECT TIMESTAMPTZ '2017-03-16 09:56:13' AT TIME ZONE 'America/Denver' "Denver Time";

Denver Time

---------------------

2017-03-16 07:56:13

(1 row)

=> SELECT TIMESTAMPTZ '2017-03-16 09:56:13' AT TIME ZONE INTERVAL '-06:00' "Denver Time";

Denver Time

---------------------

2017-03-16 07:56:13

(1 row)

If you issue a use the UTC offset in a similar query when standard time is in effect, you must adjust the UTC offset accordingly—for Denver time, to -07—otherwise, Vertica returns a different (and erroneous) result:

=> SELECT TIMESTAMPTZ '2017-01-16 09:56:13' AT TIME ZONE 'America/Denver' "Denver Time";

Denver Time

---------------------

2017-0-16 07:56:13

(1 row)

=> SELECT TIMESTAMPTZ '2017-01-16 09:56:13' AT TIME ZONE INTERVAL '-06:00' "Denver Time";

Denver Time

---------------------

2017-01-16 08:56:13

(1 row)

You can show and set the session's time zone with

SHOW TIMEZONE and

SET TIME ZONE, respectively:

=> SHOW TIMEZONE;

name | setting

----------+------------------

timezone | America/New_York

(1 row)

=> SELECT CURRENT_TIMESTAMP(0) "Eastern Daylight Time";

Eastern Daylight Time

------------------------

2017-03-20 12:18:24-04

(1 row)

=> SET TIMEZONE 'America/Los_Angeles';

SET

=> SELECT CURRENT_TIMESTAMP(0) "Pacific Daylight Time";

Pacific Daylight Time

------------------------

2017-03-20 09:18:24-07

(1 row)

Time zone literals

To view the default list of valid literals, see the files in the following directory:

opt/vertica/share/timezonesets

For example:

$ cat Antarctica.txt

...

# src/timezone/tznames/Antarctica.txt

#

AWST 28800 # Australian Western Standard Time

# (Antarctica/Casey)

# (Australia/Perth)

...

NZST 43200 # New Zealand Standard Time

# (Antarctica/McMurdo)

# (Pacific/Auckland)

ROTT -10800 # Rothera Time

# (Antarctica/Rothera)

SYOT 10800 # Syowa Time

# (Antarctica/Syowa)

VOST 21600 # Vostok time

# (Antarctica/Vostok)

See also

5 - Long data types

Store data up to 32000000 octets.

Store data up to 32000000 octets. Vertica supports two long data types:

-

LONG VARBINARY: Variable-length raw-byte data, such as spatial data. LONG VARBINARY values are not extended to the full width of the column.

-

LONG VARCHAR: Variable-length strings, such as log files and unstructured data. LONG VARCHAR values are not extended to the full width of the column.

Use LONG data types only when you need to store data greater than the maximum size of VARBINARY and VARCHAR data types (65 KB). Long data can include unstructured data, online comments or posts, or small log files.

Flex tables have a default LONG VARBINARY __raw__ column, with a NOT NULL constraint. For more information, see Flex tables.

Syntax

LONG VARBINARY [(max-length)]

LONG VARCHAR [(octet-length)]

Parameters

max-length- Length of the byte string or column width, declared in bytes (octets), up to 32000000.

Default: 1 MB

octet-length- Length of the string or column width, declared in bytes (octets), up to 32000000.

Default: 1 MB

For optimal performance of LONG data types, Vertica recommends that you:

-

Use the LONG data types as storage only containers; Vertica supports operations on the content of LONG data types, but does not support all the operations that VARCHAR and VARBINARY take.

-

Use VARBINARY and VARCHAR data types, instead of their LONG counterparts, whenever possible. VARBINARY and VARCHAR data types are more flexible and have a wider range of operations.

-

Do not sort, segment, or partition projections on LONG data type columns.

-

Do not add constraints, such as a primary key, to any LONG VARBINARY or LONG VARCHAR columns.

-

Do not join or aggregate any LONG data type columns.

Examples

The following example creates a table user_comments with a LONG VARCHAR column and inserts data into it:

=> CREATE TABLE user_comments

(id INTEGER,

username VARCHAR(200),

time_posted TIMESTAMP,

comment_text LONG VARCHAR(200000));

=> INSERT INTO user_comments VALUES

(1,

'User1',

TIMESTAMP '2013-06-25 12:47:32.62',

'The weather tomorrow will be cold and rainy and then

on the day after, the sun will come and the temperature

will rise dramatically.');

6 - Numeric data types

Numeric data types are numbers stored in database columns.

Numeric data types are numbers stored in database columns. These data types are typically grouped by:

-

Exact numeric types, values where the precision and scale need to be preserved. The exact numeric types are INTEGER, BIGINT, DECIMAL, NUMERIC, NUMBER, and MONEY.

-

Approximate numeric types, values where the precision needs to be preserved and the scale can be floating. The approximate numeric types are DOUBLE PRECISION, FLOAT, and REAL.

Implicit casts from INTEGER, FLOAT, and NUMERIC to VARCHAR are not supported. If you need that functionality, write an explicit cast using one of the following forms:

CAST(numeric-expression AS data-type)

numeric-expression::data-type

For example, you can cast a float to an integer as follows:

=> SELECT(FLOAT '123.5')::INT;

?column?

----------

124

(1 row)

String-to-numeric data type conversions accept formats of quoted constants for scientific notation, binary scaling, hexadecimal, and combinations of numeric-type literals:

-

Scientific notation:

=> SELECT FLOAT '1e10';

?column?

-------------

10000000000

(1 row)

-

BINARY scaling:

=> SELECT NUMERIC '1p10';

?column?

----------

1024

(1 row)

-

hexadecimal:

=> SELECT NUMERIC '0x0abc';

?column?

----------

2748

(1 row)

6.1 - DOUBLE PRECISION (FLOAT)

Vertica supports the numeric data type DOUBLE PRECISION, which is the IEEE-754 8-byte floating point type, along with most of the usual floating point operations.

Vertica supports the numeric data type DOUBLE PRECISION, which is the IEEE-754 8-byte floating point type, along with most of the usual floating point operations.

Syntax

[ DOUBLE PRECISION | FLOAT | FLOAT(n) | FLOAT8 | REAL ]

Parameters

Note

On a machine whose floating-point arithmetic does not follow IEEE-754, these values probably do not work as expected.

Double precision is an inexact, variable-precision numeric type. In other words, some values cannot be represented exactly and are stored as approximations. Thus, input and output operations involving double precision might show slight discrepancies.

-

All of the DOUBLE PRECISION data types are synonyms for 64-bit IEEE FLOAT.

-

The n in FLOAT(n) must be between 1 and 53, inclusive, but a 53-bit fraction is always used. See the IEEE-754 standard for details.

-

For exact numeric storage and calculations (money for example), use NUMERIC.

-

Floating point calculations depend on the behavior of the underlying processor, operating system, and compiler.

-

Comparing two floating-point values for equality might not work as expected.

-

While Vertica treats decimal values as FLOAT internally, if a column is defined as FLOAT then you cannot read decimal values from ORC and Parquet files. In those formats, FLOAT and DECIMAL are different types.

Values

COPY accepts floating-point data in the following format:

-

Optional leading white space

-

An optional plus ("+") or minus sign ("-")

-

A decimal number, a hexadecimal number, an infinity, a NAN, or a null value

Decimal Number

A decimal number consists of a non-empty sequence of decimal digits possibly containing a radix character (decimal point "."), optionally followed by a decimal exponent. A decimal exponent consists of an "E" or "e", followed by an optional plus or minus sign, followed by a non-empty sequence of decimal digits, and indicates multiplication by a power of 10.

Hexadecimal Number

A hexadecimal number consists of a "0x" or "0X" followed by a non-empty sequence of hexadecimal digits possibly containing a radix character, optionally followed by a binary exponent. A binary exponent consists of a "P" or "p", followed by an optional plus or minus sign, followed by a non-empty sequence of decimal digits, and indicates multiplication by a power of 2. At least one of radix character and binary exponent must be present.

Infinity

An infinity is either INF or INFINITY, disregarding case.

NaN (Not A Number)

A NaN is NAN (disregarding case) optionally followed by a sequence of characters enclosed in parentheses. The character string specifies the value of NAN in an implementation-dependent manner. (The Vertica internal representation of NAN is 0xfff8000000000000LL on x86 machines.)

When writing infinity or NAN values as constants in a SQL statement, enclose them in single quotes. For example:

=> UPDATE table SET x = 'Infinity'

Note

Vertica follows the IEEE definition of NaNs (IEEE 754). The SQL standards do not specify how floating point works in detail.

IEEE defines NaNs as a set of floating point values where each one is not equal to anything, even to itself. A NaN is not greater than and at the same time not less than anything, even itself. In other words, comparisons always return false whenever a NaN is involved.

However, for the purpose of sorting data, NaN values must be placed somewhere in the result. The value generated 'NaN' appears in the context of a floating point number matches the NaN value generated by the hardware. For example, Intel hardware generates (0xfff8000000000000LL), which is technically a Negative, Quiet, Non-signaling NaN.

Vertica uses a different NaN value to represent floating point NULL (0x7ffffffffffffffeLL). This is a Positive, Quiet, Non-signaling NaN and is reserved by Vertica

A NaN example follows.

=> SELECT CBRT('Nan'); -- cube root

CBRT

------

NaN

(1 row)

=> SELECT 'Nan' > 1.0;

?column?

----------

f

(1 row)

Null Value

The load file format of a null value is user defined, as described in the COPY command. The Vertica internal representation of a null value is 0x7fffffffffffffffLL. The interactive format is controlled by the vsql printing option null. For example:

\pset null '(null)'

The default option is not to print anything.

Rules

To search for NaN column values, use the following predicate:

... WHERE column != column

This is necessary because WHERE column = 'Nan' cannot be true by definition.

Sort order (ascending)

-

NaN

-

-Inf

-

numbers

-

+Inf

-

NULL

Notes

-

NULL appears last (largest) in ascending order.

-

All overflows in floats generate +/-infinity or NaN, per the IEEE floating point standard.

6.2 - INTEGER

A signed 8-byte (64-bit) data type.

A signed 8-byte (64-bit) data type.

Syntax

[ INTEGER | INT | BIGINT | INT8 | SMALLINT | TINYINT ]

Parameters

INT, INTEGER, INT8, SMALLINT, TINYINT, and BIGINT are all synonyms for the same signed 64-bit integer data type. Automatic compression techniques are used to conserve disk space in cases where the full 64 bits are not required.

Notes

-

The range of values is –2^63+1 to 2^63-1.

-

2^63 = 9,223,372,036,854,775,808 (19 digits).

-

The value –2^63 is reserved to represent NULL.

-

NULL appears first (smallest) in ascending order.

-

Vertica does not have an explicit 4-byte (32-bit integer) or smaller types. Vertica's encoding and compression automatically eliminate the storage overhead of values that fit in less than 64 bits.

Restrictions

-

The JDBC type INTEGER is 4 bytes and is not supported by Vertica. Use BIGINT instead.

-

Vertica does not support the SQL/JDBC types NUMERIC, SMALLINT, or TINYINT.

-

Vertica does not check for overflow (positive or negative) except in the aggregate function SUM(). If you encounter overflow when using SUM, use SUM_FLOAT(), which converts to floating point.

See also

Data Type Coercion Chart

6.3 - NUMERIC

Numeric data types store fixed-point numeric data.

Numeric data types store fixed-point numeric data. For example, a value of $123.45 can be stored in a NUMERIC(5,2) field. Note that the first number, the precision, specifies the total number of digits.

Syntax

numeric-type [ ( precision[, scale] ) ]

Parameters

numeric-type- One of the following:

-

NUMERIC

-

DECIMAL

-

NUMBER

-

MONEY

precision- An unsigned integer that specifies the total number of significant digits that the data type stores, where

precision is ≤ 1024. If omitted, the default precision depends on numeric type that you specify. If you assign a value that exceeds precision, Vertica returns an error.

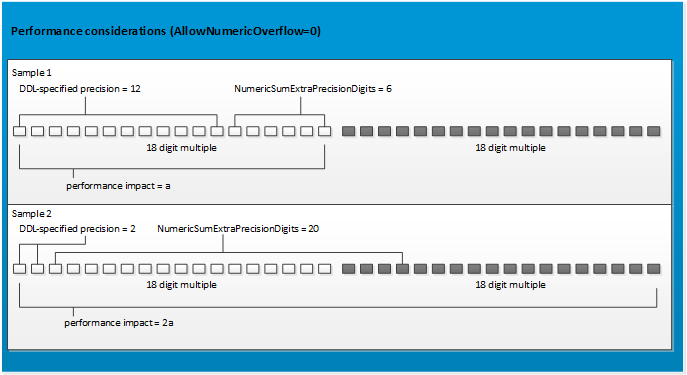

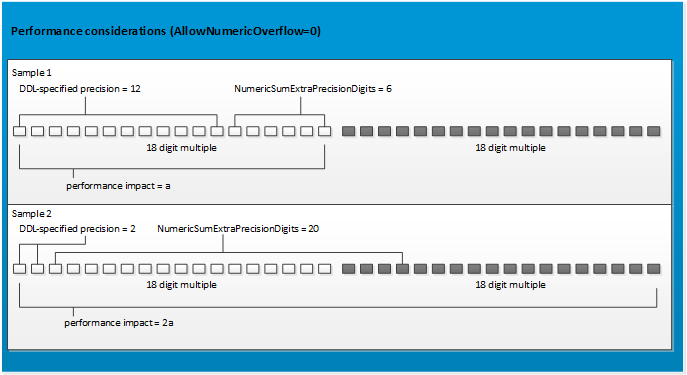

If a data type's precision is ≤ 18, performance is equivalent to an INTEGER data type, regardless of scale. When possible, Vertica recommends using a precision ≤ 18.

scale- An unsigned integer that specifies the maximum number of digits to the right of the decimal point to store.

scale must be ≤ precision. If omitted, the default scale depends on numeric type that you specify. If you assign a value with more decimal digits than scale, the scale is rounded to scale digits.

When using ALTER to modify the data type of a numeric column, scale cannot be changed.

Default precision and scale

NUMERIC, DECIMAL, NUMBER, and MONEY differ in their default precision and scale values:

|

Type |

Precision |

Scale |

|

NUMERIC |

37 |

15 |

|

DECIMAL |

37 |

15 |

|

NUMBER |

38 |

0 |

|

MONEY |

18 |

4 |

Supported encoding

Vertica supports the following encoding for numeric data types:

-

Precision ≤ 18: AUTO, BLOCK_DICT, BLOCKDICT_COMP, COMMONDELTA_COMP, DELTAVAL, GCDDELTA, and RLE

-

Precision > 18: AUTO, BLOCK_DICT, BLOCKDICT_COMP, RLE

For details, see Encoding types.

Numeric versus integer and floating data types

Numeric data types are exact data types that store values of a specified precision and scale, expressed with a number of digits before and after a decimal point. This contrasts with the Vertica integer and floating data types:

-

DOUBLE PRECISION (FLOAT) supports ~15 digits, variable exponent, and represents numeric values approximately. It can be less precise than NUMERIC data types.

-

INTEGER supports ~18 digits, whole numbers only.

The NUMERIC data type is preferred for non-integer constants, because it is always exact. For example:

=> SELECT 1.1 + 2.2 = 3.3;

?column?

----------

t

(1 row)

=> SELECT 1.1::float + 2.2::float = 3.3::float;

?column?

----------

f

(1 row)

Numeric operations

Supported numeric operations include the following:

-

Basic math: +, -, *, /

-

Aggregation: SUM, MIN, MAX, COUNT

-

Comparison: <, <=, =, <=>, <>, >, >=

NUMERIC divide operates directly on numeric values, without converting to floating point. The result has at least 18 decimal places and is rounded.

NUMERIC mod (including %) operates directly on numeric values, without converting to floating point. The result has the same scale as the numerator and never needs rounding.

Some complex operations used with numeric data types result in an implicit cast to FLOAT. When using SQRT, STDDEV, transcendental functions such as LOG, and TO_CHAR/TO_NUMBER formatting, the result is always FLOAT.

Examples

The following series of commands creates a table that contains a numeric data type and then performs some mathematical operations on the data:

=> CREATE TABLE num1 (id INTEGER, amount NUMERIC(8,2));

Insert some values into the table:

=> INSERT INTO num1 VALUES (1, 123456.78);

Query the table:

=> SELECT * FROM num1;

id | amount

------+-----------

1 | 123456.78

(1 row)

The following example returns the NUMERIC column, amount, from table num1:

=> SELECT amount FROM num1;

amount

-----------

123456.78

(1 row)

The following syntax adds one (1) to the amount:

=> SELECT amount+1 AS 'amount' FROM num1;

amount

-----------

123457.78

(1 row)

The following syntax multiplies the amount column by 2:

=> SELECT amount*2 AS 'amount' FROM num1;

amount

-----------

246913.56

(1 row)

The following syntax returns a negative number for the amount column:

=> SELECT -amount FROM num1;

?column?

------------

-123456.78

(1 row)

The following syntax returns the absolute value of the amount argument:

=> SELECT ABS(amount) FROM num1;

ABS

-----------

123456.78

(1 row)

The following syntax casts the NUMERIC amount as a FLOAT data type:

=> SELECT amount::float FROM num1;

amount

-----------

123456.78

(1 row)

See also

Mathematical functions

6.4 - Numeric data type overflow

Vertica does not check for overflow (positive or negative) except in the aggregate function SUM().

Vertica does not check for overflow (positive or negative) except in the aggregate function SUM(). If you encounter overflow when using SUM, use SUM_FLOAT() which converts to floating point.