This is the multi-page printable view of this section.

Click here to print.

Return to the regular view of this page.

Cluster layout

Vertica supports two cluster architectures for Hadoop integration.

Vertica supports two cluster architectures for Hadoop integration. Which architecture you use affects the decisions you make about integration with HDFS. These options might also be limited by license terms.

-

You can co-locate Vertica on some or all of your Hadoop nodes. Vertica can then take advantage of data locality.

-

You can build a Vertica cluster that is separate from your Hadoop cluster. In this configuration, Vertica can fully use each of its nodes; it does not share resources with Hadoop.

With either architecture, if you are using the hdfs scheme to read ORC or Parquet files, you must do some additional configuration. See Configuring HDFS access.

1 - Co-located clusters

With co-located clusters, Vertica is installed on some or all of your Hadoop nodes.

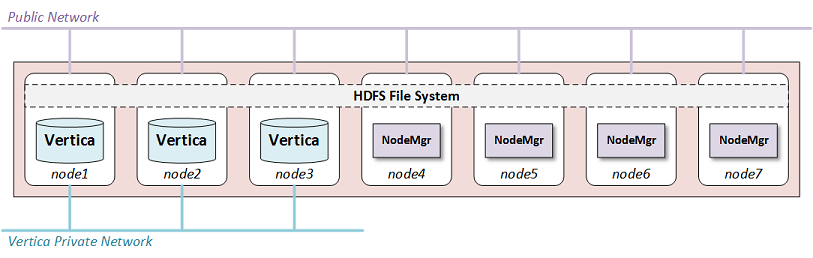

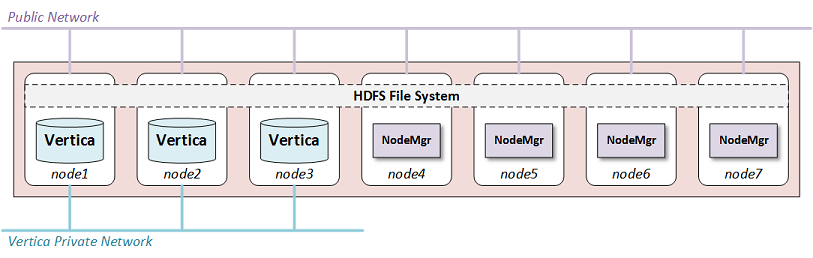

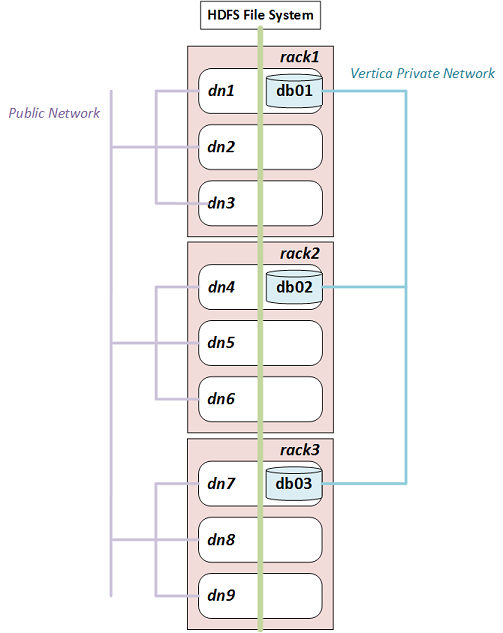

With co-located clusters, Vertica is installed on some or all of your Hadoop nodes. The Vertica nodes use a private network in addition to the public network used by all Hadoop nodes, as the following figure shows:

You might choose to place Vertica on all of your Hadoop nodes or only on some of them. If you are using HDFS Storage Locations you should use at least three Vertica nodes, the minimum number for K-safety in an Enterprise Mode database.

Using more Vertica nodes can improve performance because the HDFS data needed by a query is more likely to be local to the node.

You can place Hadoop and Vertica clusters within a single rack, or you can span across many racks and nodes. If you do not co-locate Vertica on every node, you can improve performance by co-locating it on at least one node in each rack. See Configuring rack locality.

Normally, both Hadoop and Vertica use the entire node. Because this configuration uses shared nodes, you must address potential resource contention in your configuration on those nodes. See Configuring Hadoop for co-located clusters for more information. No changes are needed on Hadoop-only nodes.

Hardware recommendations

Hadoop clusters frequently do not have identical provisioning requirements or hardware configurations. However, Vertica nodes should be equivalent in size and capability, per the best-practice standards recommended in Platform and hardware requirements and recommendations.

Because Hadoop cluster specifications do not always meet these standards, Vertica recommends the following specifications for Vertica nodes in your Hadoop cluster.

|

Specifications for... |

Recommendation |

|

Processor |

For best performance, run:

-

Two-socket servers with 8–14 core CPUs, clocked at or above 2.6 GHz for clusters over 10 TB

-

Single-socket servers with 8–12 cores clocked at or above 2.6 GHz for clusters under 10 TB

|

|

Memory |

Distribute the memory appropriately across all memory channels in the server:

-

Minimum—8 GB of memory per physical CPU core in the server

-

High-performance applications—12–16 GB of memory per physical core

-

Type—at least DDR3-1600, preferably DDR3-1866

|

|

Storage |

Read/write:

Storage post RAID: Each node should have 1–9 TB. For a production setting, Vertica recommends RAID 10. In some cases, RAID 50 is acceptable.

Because Vertica performs heavy compression and encoding, SSDs are not required. In most cases, a RAID of more, less-expensive HDDs performs just as well as a RAID of fewer SSDs.

If you intend to use RAID 50 for your data partition, you should keep a spare node in every rack, allowing for manual failover of a Vertica node in the case of a drive failure. A Vertica node recovery is faster than a RAID 50 rebuild. Also, be sure to never put more than 10 TB compressed on any node, to keep node recovery times at an acceptable rate.

|

|

Network |

10 GB networking in almost every case. With the introduction of 10 GB over cat6a (Ethernet), the cost difference is minimal. |

2 - Configuring Hadoop for co-located clusters

If you are co-locating Vertica on any HDFS nodes, there are some additional configuration requirements.

If you are co-locating Vertica on any HDFS nodes, there are some additional configuration requirements.

Hadoop configuration parameters

For best performance, set the following parameters with the specified minimum values:

|

Parameter |

Minimum Value |

|

HDFS block size |

512MB |

|

Namenode Java Heap |

1GB |

|

Datanode Java Heap |

2GB |

WebHDFS

Hadoop has two services that can provide web access to HDFS:

For Vertica, you must use the WebHDFS service.

YARN

The YARN service is available in newer releases of Hadoop. It performs resource management for Hadoop clusters. When co-locating Vertica on YARN-managed Hadoop nodes you must make some changes in YARN.

Vertica recommends reserving at least 16GB of memory for Vertica on shared nodes. Reserving more will improve performance. How you do this depends on your Hadoop distribution:

-

If you are using Hortonworks, create a "Vertica" node label and assign this to the nodes that are running Vertica.

-

If you are using Cloudera, enable and configure static service pools.

Consult the documentation for your Hadoop distribution for details. Alternatively, you can disable YARN on the shared nodes.

Hadoop balancer

The Hadoop Balancer can redistribute data blocks across HDFS. For many Hadoop services, this feature is useful. However, for Vertica this can reduce performance under some conditions.

If you are using HDFS storage locations, the Hadoop load balancer can move data away from the Vertica nodes that are operating on it, degrading performance. This behavior can also occur when reading ORC or Parquet files if Vertica is not running on all Hadoop nodes. (If you are using separate Vertica and Hadoop clusters, all Hadoop access is over the network, and the performance cost is less noticeable.)

To prevent the undesired movement of data blocks across the HDFS cluster, consider excluding Vertica nodes from rebalancing. See the Hadoop documentation to learn how to do this.

Replication factor

By default, HDFS stores three copies of each data block. Vertica is generally set up to store two copies of each data item through K-Safety. Thus, lowering the replication factor to 2 can save space and still provide data protection.

To lower the number of copies HDFS stores, set HadoopFSReplication, as explained in Troubleshooting HDFS storage locations.

Disk space for Non-HDFS use

You also need to reserve some disk space for non-HDFS use. To reserve disk space using Ambari, set dfs.datanode.du.reserved to a value in the hdfs-site.xml configuration file.

Setting this parameter preserves space for non-HDFS files that Vertica requires.

3 - Configuring rack locality

This feature is supported only for reading ORC and Parquet data on co-located clusters.

Note

This feature is supported only for reading ORC and Parquet data on co-located clusters. It is only meaningful on Hadoop clusters that span multiple racks.

When possible, when planning a query Vertica automatically uses database nodes that are co-located with the HDFS nodes that contain the data. Moving query execution closer to the data reduces network latency and can improve performance. This behavior, called node locality, requires no additional configuration.

When Vertica is co-located on only a subset of HDFS nodes, sometimes there is no database node that is co-located with the data. However, performance is usually better if a query uses a database node in the same rack. If configured with information about Hadoop rack structure, Vertica attempts to use a database node in the same rack as the data to be queried.

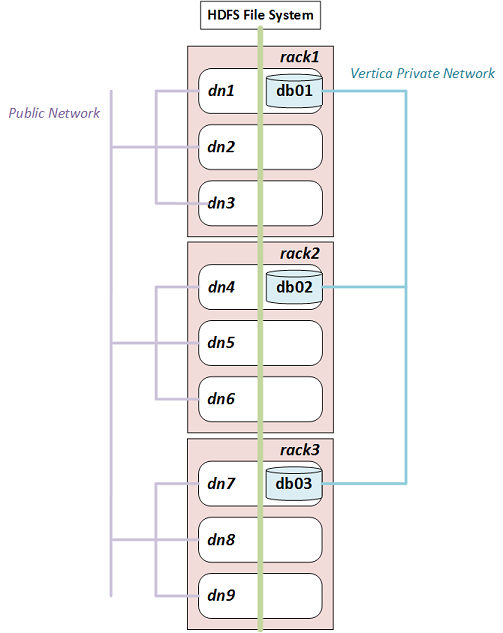

For example, the following diagram illustrates a Hadoop cluster with three racks each containing three data nodes. (Typical production systems have more data nodes per rack.) In each rack, Vertica is co-located on one node.

If you configure rack locality, Vertica uses db01 to query data on dn1, dn2, or dn3, and uses db02 and db03 for data on rack2 and rack3 respectively. Because HDFS replicates data, any given data block can exist in more than one rack. If a data block is replicated on dn2, dn3, and dn6, for example, Vertica uses either db01 or db02 to query it.

Hadoop components are rack-aware, so configuration files describing rack structure already exist in the Hadoop cluster. To use this information in Vertica, configure fault groups that describe this rack structure. Vertica uses fault groups in query planning.

Configuring fault groups

Vertica uses Fault groups to describe physical cluster layout. Because your database nodes are co-located on HDFS nodes, Vertica can use the information about the physical layout of the HDFS cluster.

Tip

For best results, ensure that each Hadoop rack contains at least one co-located Vertica node.

Hadoop stores its cluster-layout data in a topology mapping file in HADOOP_CONF_DIR. On HortonWorks the file is typically named topology_mappings.data. On Cloudera it is typically named topology.map. Use the data in this file to create an input file for the fault-group script. For more information about the format of this file, see Creating a fault group input file.

Following is an example topology mapping file for the cluster illustrated previously:

[network_topology]

dn1.example.com=/rack1

10.20.41.51=/rack1

dn2.example.com=/rack1

10.20.41.52=/rack1

dn3.example.com=/rack1

10.20.41.53=/rack1

dn4.example.com=/rack2

10.20.41.71=/rack2

dn5.example.com=/rack2

10.20.41.72=/rack2

dn6.example.com=/rack2

10.20.41.73=/rack2

dn7.example.com=/rack3

10.20.41.91=/rack3

dn8.example.com=/rack3

10.20.41.92=/rack3

dn9.example.com=/rack3

10.20.41.93=/rack3

From this data, you can create the following input file describing the Vertica subset of this cluster:

/rack1 /rack2 /rack3

/rack1 = db01

/rack2 = db02

/rack3 = db03

This input file tells Vertica that the database node "db01" is on rack1, "db02" is on rack2, and "db03" is on rack3. In creating this file, ignore Hadoop data nodes that are not also Vertica nodes.

After you create the input file, run the fault-group tool:

$ python /opt/vertica/scripts/fault_group_ddl_generator.py dbName input_file > fault_group_ddl.sql

The output of this script is a SQL file that creates the fault groups. Execute it following the instructions in Creating fault groups.

You can review the new fault groups with the following statement:

=> SELECT member_name,node_address,parent_name FROM fault_groups

INNER JOIN nodes ON member_name=node_name ORDER BY parent_name;

member_name | node_address | parent_name

-------------------------+--------------+-------------

db01 | 10.20.41.51 | /rack1

db02 | 10.20.41.71 | /rack2

db03 | 10.20.41.91 | /rack3

(3 rows)

Working with multi-level racks

A Hadoop cluster can use multi-level racks. For example, /west/rack-w1, /west/rack-2, and /west/rack-w3 might be served from one data center, while /east/rack-e1, /east/rack-e2, and /east/rack-e3 are served from another. Use the following format for entries in the input file for the fault-group script:

/west /east

/west = /rack-w1 /rack-w2 /rack-w3

/east = /rack-e1 /rack-e2 /rack-e3

/rack-w1 = db01

/rack-w2 = db02

/rack-w3 = db03

/rack-e1 = db04

/rack-e2 = db05

/rack-e3 = db06

Do not create entries using the full rack path, such as /west/rack-w1.

Auditing results

To see how much data can be loaded with rack locality, use EXPLAIN with the query and look for statements like the following in the output:

100% of ORC data including co-located data can be loaded with rack locality.

4 - Separate clusters

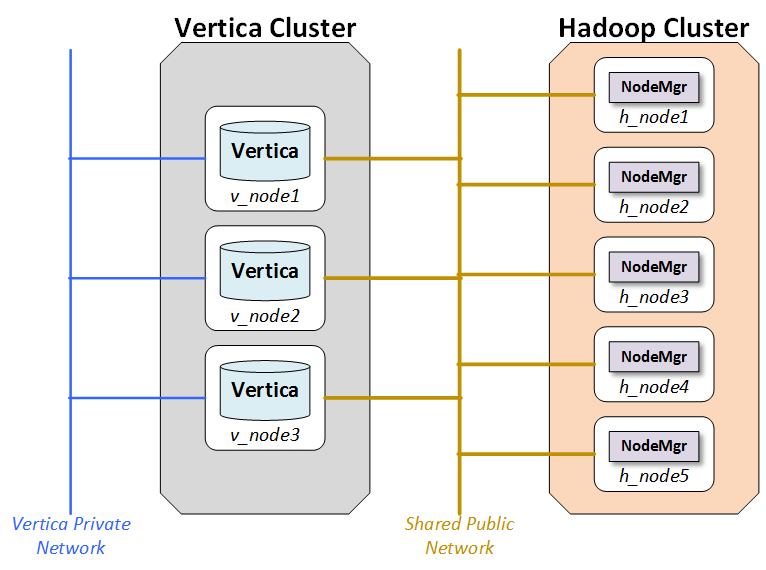

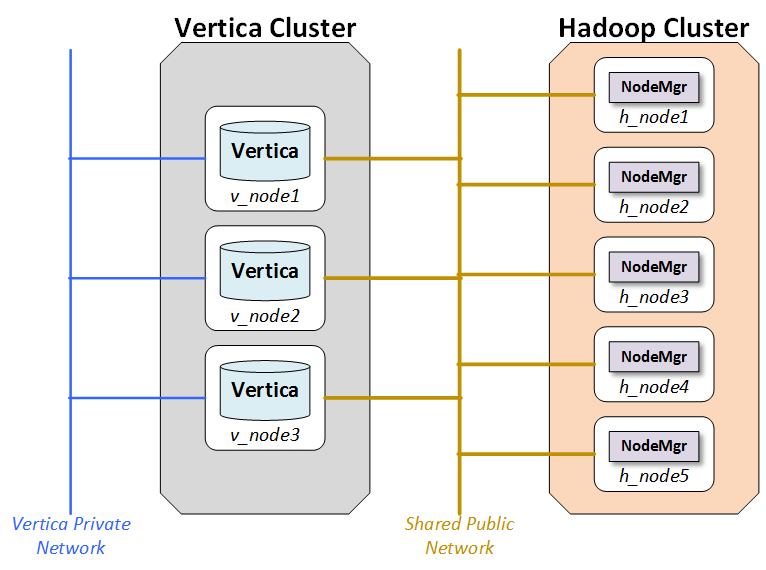

With separate clusters, a Vertica cluster and a Hadoop cluster share no nodes.

With separate clusters, a Vertica cluster and a Hadoop cluster share no nodes. You should use a high-bandwidth network connection between the two clusters.

The following figure illustrates the configuration for separate clusters::

Network

The network is a key performance component of any well-configured cluster. When Vertica stores data to HDFS it writes and reads data across the network.

The layout shown in the figure calls for two networks, and there are benefits to adding a third:

-

Database Private Network: Vertica uses a private network for command and control and moving data between nodes in support of its database functions. In some networks, the command and control and passing of data are split across two networks.

-

Database/Hadoop Shared Network: Each Vertica node must be able to connect to each Hadoop data node and the NameNode. Hadoop best practices generally require a dedicated network for the Hadoop cluster. This is not a technical requirement, but a dedicated network improves Hadoop performance. Vertica and Hadoop should share the dedicated Hadoop network.

-

Optional Client Network: Outside clients may access the clustered networks through a client network. This is not an absolute requirement, but the use of a third network that supports client connections to either Vertica or Hadoop can improve performance. If the configuration does not support a client network, than client connections should use the shared network.

Hadoop configuration parameters

For best performance, set the following parameters with the specified minimum values:

|

Parameter |

Minimum Value |

|

HDFS block size |

512MB |

|

Namenode Java Heap |

1GB |

|

Datanode Java Heap |

2GB |