Apache Hadoop integration

Apache™ Hadoop™, like Vertica, uses a cluster of nodes for distributed processing. The primary component of interest is HDFS, the Hadoop Distributed File System.

You can use Vertica with HDFS in several ways:

- You can import HDFS data into locally-stored ROS files.

- You can access HDFS data in place using external tables. You can define the tables yourself or get schema information from Hive, a Hadoop component.

- You can use HDFS as a storage location for ROS files.

- You can export data from Vertica to share with other Hadoop components using a Hadoop columnar format. See File export for more information.

Hadoop file paths are expressed as URLs in the webhdfs or hdfs URL scheme. For more about using these schemes, see HDFS file system.

Hadoop distributions

Vertica can be used with Hadoop distributions from Hortonworks, Cloudera, and MapR. See Hadoop integrations for the specific versions that are supported.

If you are using Cloudera, you can manage your Vertica cluster using Cloudera Manager. See Integrating with Cloudera Manager.

If you are using MapR, see Integrating Vertica with the MapR distribution of Hadoop.

WebHDFS requirement

By default, if you use a URL in the hdfs scheme, Vertica treats it as webhdfs. If you instead use the (deprecated) LibHDFS++ library, you must still have a WebHDFS service available. LibHDFS++ does not support some WebHDFS features, such as encryption zones, wire encryption, or writes, and falls back to WebHDFS when needed.

For some uses, such as Eon Mode communal storage, you must use WebHDFS directly with the webhdfs scheme.

Deprecated

Support for LibHDFS++ is deprecated. You can set the HDFSUseWebHDFS configuration parameter to 0 (disabled) to use LibHDFS++ until it is removed.

1 - Cluster layout

Vertica supports two cluster architectures for Hadoop integration. Which architecture you use affects the decisions you make about integration with HDFS. These options might also be limited by license terms.

- You can co-locate Vertica on some or all of your Hadoop nodes. Vertica can then take advantage of data locality.

- You can build a Vertica cluster that is separate from your Hadoop cluster. In this configuration, Vertica can fully use each of its nodes; it does not share resources with Hadoop.

With either architecture, if you are using the hdfs scheme to read ORC or Parquet files, you must do some additional configuration. See Configuring HDFS access.

1.1 - Co-located clusters

With co-located clusters, Vertica is installed on some or all of your Hadoop nodes.

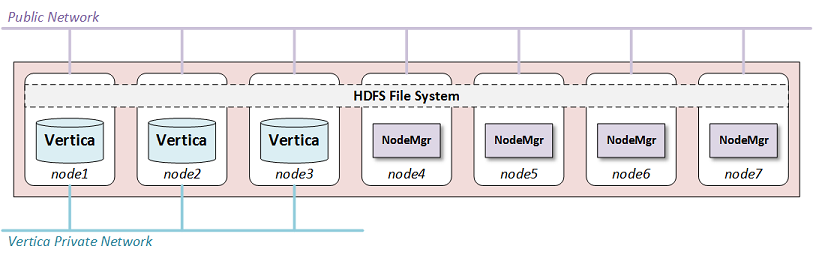

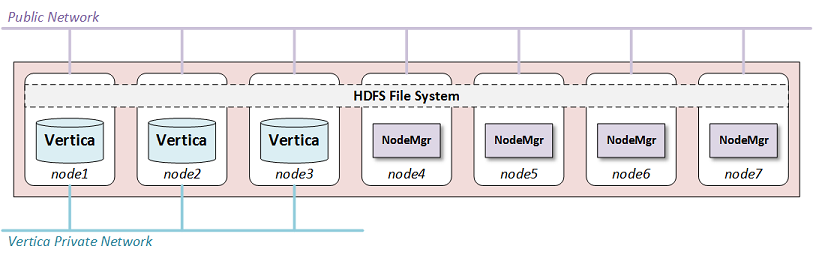

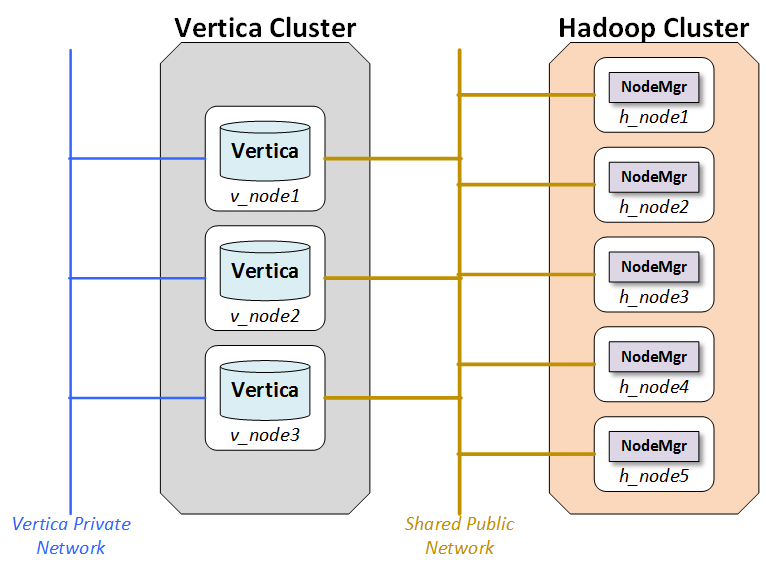

With co-located clusters, Vertica is installed on some or all of your Hadoop nodes. The Vertica nodes use a private network in addition to the public network used by all Hadoop nodes, as the following figure shows:

You might choose to place Vertica on all of your Hadoop nodes or only on some of them. If you are using HDFS storage locations, use at least three Vertica nodes, the minimum number for K-safety in an Enterprise Mode database.

Using more Vertica nodes can improve performance because the HDFS data needed by a query is more likely to be local to the node.

You can place Hadoop and Vertica clusters within a single rack, or you can span across many racks and nodes. If you do not co-locate Vertica on every node, you can improve performance by co-locating it on at least one node in each rack. See Configuring rack locality.

Normally, both Hadoop and Vertica use the entire node. Because this configuration uses shared nodes, you must address potential resource contention in your configuration on those nodes. See Configuring Hadoop for co-located clusters for more information. No changes are needed on Hadoop-only nodes.

Hardware recommendations

Hadoop clusters frequently do not have identical provisioning requirements or hardware configurations. However, Vertica nodes should be equivalent in size and capability, per the best-practice standards recommended in Platform and hardware requirements and recommendations.

Because Hadoop cluster specifications do not always meet these standards, Vertica recommends the following specifications for Vertica nodes in your Hadoop cluster.

| Specifications for... | Recommendation |
| --- | --- |
| Processor | For best performance, run two-socket servers with 8–14 core CPUs, clocked at or above 2.6 GHz, for clusters over 10 TB, or single-socket servers with 8–12 cores, clocked at or above 2.6 GHz, for clusters under 10 TB. |
| Memory | Distribute the memory appropriately across all memory channels in the server. Minimum: 8 GB of memory per physical CPU core in the server. High-performance applications: 12–16 GB of memory per physical core. Type: at least DDR3-1600, preferably DDR3-1866. |
| Storage | Read/write. Storage post RAID: each node should have 1–9 TB. For a production setting, Vertica recommends RAID 10. In some cases, RAID 50 is acceptable. Because Vertica performs heavy compression and encoding, SSDs are not required. In most cases, a RAID of more, less-expensive HDDs performs just as well as a RAID of fewer SSDs. If you intend to use RAID 50 for your data partition, keep a spare node in every rack, allowing for manual failover of a Vertica node in the case of a drive failure. A Vertica node recovery is faster than a RAID 50 rebuild. Also, never put more than 10 TB compressed on any node, to keep node recovery times at an acceptable rate. |
| Network | 10 Gb networking in almost every case. With the introduction of 10 Gb over Cat 6a (Ethernet), the cost difference is minimal. |

1.2 - Configuring Hadoop for co-located clusters

If you are co-locating Vertica on any HDFS nodes, there are some additional configuration requirements.

Hadoop configuration parameters

For best performance, set the following parameters with the specified minimum values:

| Parameter | Minimum Value |
| --- | --- |
| HDFS block size | 512MB |
| Namenode Java Heap | 1GB |
| Datanode Java Heap | 2GB |

WebHDFS

Hadoop has two services that can provide web access to HDFS: WebHDFS and HttpFS.

For Vertica, you must use the WebHDFS service.

YARN

The YARN service is available in newer releases of Hadoop. It performs resource management for Hadoop clusters. When co-locating Vertica on YARN-managed Hadoop nodes, you must make some changes in YARN.

Vertica recommends reserving at least 16GB of memory for Vertica on shared nodes. Reserving more will improve performance. How you do this depends on your Hadoop distribution:

- If you are using Hortonworks, create a "Vertica" node label and assign this to the nodes that are running Vertica.

- If you are using Cloudera, enable and configure static service pools.

Consult the documentation for your Hadoop distribution for details. Alternatively, you can disable YARN on the shared nodes.

Hadoop balancer

The Hadoop Balancer can redistribute data blocks across HDFS. For many Hadoop services, this feature is useful. However, for Vertica this can reduce performance under some conditions.

If you are using HDFS storage locations, the Hadoop Balancer can move data away from the Vertica nodes that are operating on it, degrading performance. This behavior can also occur when reading ORC or Parquet files if Vertica is not running on all Hadoop nodes. (If you are using separate Vertica and Hadoop clusters, all Hadoop access is over the network, and the performance cost is less noticeable.)

To prevent the undesired movement of data blocks across the HDFS cluster, consider excluding Vertica nodes from rebalancing. See the Hadoop documentation to learn how to do this.

Replication factor

By default, HDFS stores three copies of each data block. Vertica is generally set up to store two copies of each data item through K-Safety. Thus, lowering the replication factor to 2 can save space and still provide data protection.

To lower the number of copies HDFS stores, set HadoopFSReplication, as explained in Troubleshooting HDFS storage locations.

Disk space for non-HDFS use

You also need to reserve some disk space for non-HDFS use. To reserve disk space using Ambari, set dfs.datanode.du.reserved to a value in the hdfs-site.xml configuration file.

Setting this parameter preserves space for non-HDFS files that Vertica requires.
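Hadoop defines dfs.datanode.du.reserved as a number of bytes reserved per volume, so a size in gibibytes has to be converted before you enter it. The following is a minimal sketch of that arithmetic; the helper name and the 100 GiB figure are illustrative assumptions, not recommended values.

```python
GIB = 1024 ** 3  # bytes in one gibibyte


def reserved_bytes(gib_per_volume):
    """Convert a per-volume reservation in GiB to the byte count HDFS expects."""
    return gib_per_volume * GIB


# Hypothetical reservation of 100 GiB on each data volume.
print(reserved_bytes(100))  # 107374182400
```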

1.3 - Configuring rack locality

Note

This feature is supported only for reading ORC and Parquet data on co-located clusters. It is only meaningful on Hadoop clusters that span multiple racks.

When planning a query, Vertica automatically uses database nodes that are co-located with the HDFS nodes that contain the data, whenever possible. Moving query execution closer to the data reduces network latency and can improve performance. This behavior, called node locality, requires no additional configuration.

When Vertica is co-located on only a subset of HDFS nodes, sometimes there is no database node that is co-located with the data. However, performance is usually better if a query uses a database node in the same rack. If configured with information about Hadoop rack structure, Vertica attempts to use a database node in the same rack as the data to be queried.

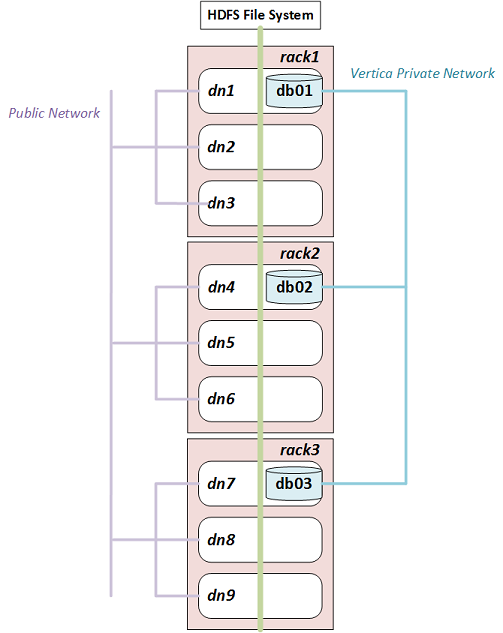

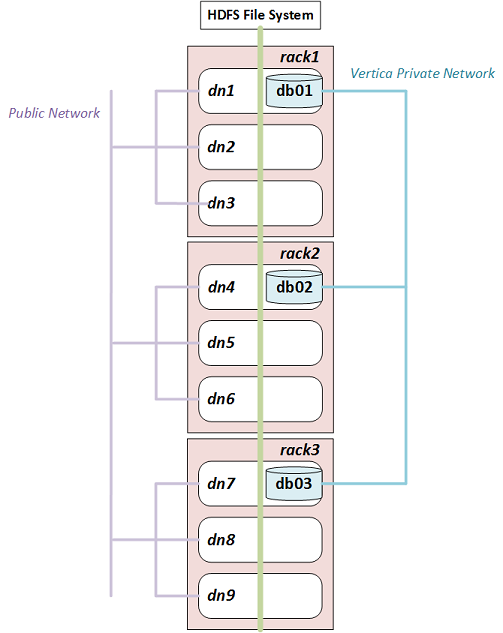

For example, the following diagram illustrates a Hadoop cluster with three racks each containing three data nodes. (Typical production systems have more data nodes per rack.) In each rack, Vertica is co-located on one node.

If you configure rack locality, Vertica uses db01 to query data on dn1, dn2, or dn3, and uses db02 and db03 for data on rack2 and rack3 respectively. Because HDFS replicates data, any given data block can exist in more than one rack. If a data block is replicated on dn2, dn3, and dn6, for example, Vertica uses either db01 or db02 to query it.
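The selection described above can be modeled in a few lines. This is only an illustrative sketch of the idea, not Vertica's query planner: the dictionaries restate the example cluster, and the function name is hypothetical.

```python
# Model of the example cluster: three racks with three data nodes each,
# and one co-located Vertica node per rack.
RACK_OF_DATA_NODE = {
    "dn1": "/rack1", "dn2": "/rack1", "dn3": "/rack1",
    "dn4": "/rack2", "dn5": "/rack2", "dn6": "/rack2",
    "dn7": "/rack3", "dn8": "/rack3", "dn9": "/rack3",
}
DB_NODE_OF_RACK = {"/rack1": "db01", "/rack2": "db02", "/rack3": "db03"}


def candidate_db_nodes(replica_hosts):
    """Return the database nodes that share a rack with at least one replica."""
    racks = {RACK_OF_DATA_NODE[host] for host in replica_hosts}
    return sorted(DB_NODE_OF_RACK[rack] for rack in racks if rack in DB_NODE_OF_RACK)


# A block replicated on dn2, dn3, and dn6 lives in rack1 and rack2.
print(candidate_db_nodes(["dn2", "dn3", "dn6"]))  # ['db01', 'db02']
```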

Hadoop components are rack-aware, so configuration files describing rack structure already exist in the Hadoop cluster. To use this information in Vertica, configure fault groups that describe this rack structure. Vertica uses fault groups in query planning.

Configuring fault groups

Vertica uses fault groups to describe physical cluster layout. Because your database nodes are co-located on HDFS nodes, Vertica can use the information about the physical layout of the HDFS cluster.

Tip

For best results, ensure that each Hadoop rack contains at least one co-located Vertica node.

Hadoop stores its cluster-layout data in a topology mapping file in HADOOP_CONF_DIR. On Hortonworks the file is typically named topology_mappings.data. On Cloudera it is typically named topology.map. Use the data in this file to create an input file for the fault-group script. For more information about the format of this file, see Creating a fault group input file.

Following is an example topology mapping file for the cluster illustrated previously:

[network_topology]

dn1.example.com=/rack1

10.20.41.51=/rack1

dn2.example.com=/rack1

10.20.41.52=/rack1

dn3.example.com=/rack1

10.20.41.53=/rack1

dn4.example.com=/rack2

10.20.41.71=/rack2

dn5.example.com=/rack2

10.20.41.72=/rack2

dn6.example.com=/rack2

10.20.41.73=/rack2

dn7.example.com=/rack3

10.20.41.91=/rack3

dn8.example.com=/rack3

10.20.41.92=/rack3

dn9.example.com=/rack3

10.20.41.93=/rack3

From this data, you can create the following input file describing the Vertica subset of this cluster:

/rack1 /rack2 /rack3

/rack1 = db01

/rack2 = db02

/rack3 = db03

This input file tells Vertica that the database node "db01" is on rack1, "db02" is on rack2, and "db03" is on rack3. In creating this file, ignore Hadoop data nodes that are not also Vertica nodes.
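If the cluster is large, you might prefer to derive the input file from the topology mapping rather than write it by hand. The following sketch assumes the mapping uses the host=/rack format shown above; the function name and the db_nodes argument (Vertica node name to host name or IP address) are hypothetical.

```python
def fault_group_input(topology_text, db_nodes):
    """Build fault-group input text from a Hadoop topology mapping.

    topology_text: contents of the topology mapping file (host=/rack lines).
    db_nodes: dict mapping a Vertica node name to its host name or IP address.
    """
    rack_of_host = {}
    for line in topology_text.splitlines():
        line = line.strip()
        # Skip blank lines and section headers such as [network_topology].
        if not line or line.startswith("[") or "=" not in line:
            continue
        host, rack = line.split("=", 1)
        rack_of_host[host.strip()] = rack.strip()

    # Group Vertica nodes by rack; Hadoop-only hosts are never looked up.
    nodes_by_rack = {}
    for node, host in db_nodes.items():
        nodes_by_rack.setdefault(rack_of_host[host], []).append(node)

    racks = sorted(nodes_by_rack)
    lines = [" ".join(racks)]
    lines += [f"{rack} = {' '.join(sorted(nodes_by_rack[rack]))}" for rack in racks]
    return "\n".join(lines)


topology = """[network_topology]
dn1.example.com=/rack1
10.20.41.51=/rack1
dn4.example.com=/rack2
10.20.41.71=/rack2
dn7.example.com=/rack3
10.20.41.91=/rack3
"""
db_nodes = {"db01": "10.20.41.51", "db02": "10.20.41.71", "db03": "10.20.41.91"}
print(fault_group_input(topology, db_nodes))
```

For the addresses in the example, the printed text matches the input file shown above.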

After you create the input file, run the fault-group tool:

$ python /opt/vertica/scripts/fault_group_ddl_generator.py dbName input_file > fault_group_ddl.sql

The output of this script is a SQL file that creates the fault groups. Execute it following the instructions in Creating fault groups.

You can review the new fault groups with the following statement:

=> SELECT member_name,node_address,parent_name FROM fault_groups

INNER JOIN nodes ON member_name=node_name ORDER BY parent_name;

member_name | node_address | parent_name

-------------------------+--------------+-------------

db01 | 10.20.41.51 | /rack1

db02 | 10.20.41.71 | /rack2

db03 | 10.20.41.91 | /rack3

(3 rows)

Working with multi-level racks

A Hadoop cluster can use multi-level racks. For example, /west/rack-w1, /west/rack-w2, and /west/rack-w3 might be served from one data center, while /east/rack-e1, /east/rack-e2, and /east/rack-e3 are served from another. Use the following format for entries in the input file for the fault-group script:

/west /east

/west = /rack-w1 /rack-w2 /rack-w3

/east = /rack-e1 /rack-e2 /rack-e3

/rack-w1 = db01

/rack-w2 = db02

/rack-w3 = db03

/rack-e1 = db04

/rack-e2 = db05

/rack-e3 = db06

Do not create entries using the full rack path, such as /west/rack-w1.
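Because Hadoop reports full rack paths, each path must be split into its levels before it goes into the input file. The following sketch handles the two-level case only; the function name and the node-to-path dictionary are hypothetical.

```python
def multi_level_input(rack_path_of_node):
    """Build two-level fault-group input from full rack paths such as /west/rack-w1."""
    racks_by_parent = {}
    nodes_by_rack = {}
    for node, path in sorted(rack_path_of_node.items()):
        # "/west/rack-w1" -> parent "west", rack "rack-w1"
        parent, rack = path.strip("/").split("/")
        racks = racks_by_parent.setdefault("/" + parent, [])
        if "/" + rack not in racks:
            racks.append("/" + rack)
        nodes_by_rack.setdefault("/" + rack, []).append(node)

    lines = [" ".join(racks_by_parent)]
    lines += [f"{parent} = {' '.join(racks)}" for parent, racks in racks_by_parent.items()]
    lines += [f"{rack} = {' '.join(nodes)}" for rack, nodes in nodes_by_rack.items()]
    return "\n".join(lines)


paths = {
    "db01": "/west/rack-w1", "db02": "/west/rack-w2", "db03": "/west/rack-w3",
    "db04": "/east/rack-e1", "db05": "/east/rack-e2", "db06": "/east/rack-e3",
}
print(multi_level_input(paths))
```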

Auditing results

To see how much data can be loaded with rack locality, use EXPLAIN with the query and look for statements like the following in the output:

100% of ORC data including co-located data can be loaded with rack locality.

1.4 - Separate clusters

With separate clusters, a Vertica cluster and a Hadoop cluster share no nodes.

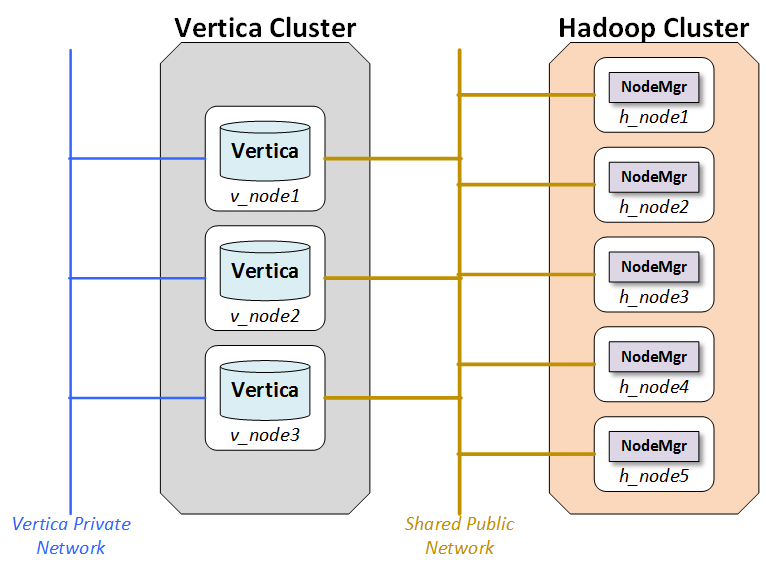

With separate clusters, a Vertica cluster and a Hadoop cluster share no nodes. You should use a high-bandwidth network connection between the two clusters.

The following figure illustrates the configuration for separate clusters::

Network

The network is a key performance component of any well-configured cluster. When Vertica stores data to HDFS it writes and reads data across the network.

The layout shown in the figure calls for two networks, and there are benefits to adding a third:

- Database Private Network: Vertica uses a private network for command and control and moving data between nodes in support of its database functions. In some networks, the command and control and passing of data are split across two networks.

- Database/Hadoop Shared Network: Each Vertica node must be able to connect to each Hadoop data node and the NameNode. Hadoop best practices generally require a dedicated network for the Hadoop cluster. This is not a technical requirement, but a dedicated network improves Hadoop performance. Vertica and Hadoop should share the dedicated Hadoop network.

- Optional Client Network: Outside clients may access the clustered networks through a client network. This is not an absolute requirement, but the use of a third network that supports client connections to either Vertica or Hadoop can improve performance. If the configuration does not support a client network, then client connections should use the shared network.

Hadoop configuration parameters

For best performance, set the following parameters with the specified minimum values:

| Parameter | Minimum Value |
| --- | --- |
| HDFS block size | 512MB |
| Namenode Java Heap | 1GB |
| Datanode Java Heap | 2GB |

2 - Configuring HDFS access

Vertica uses information from the Hadoop cluster configuration to support reading data (COPY or external tables). In Eon Mode, it also uses this information to access communal storage on HDFS. Vertica nodes therefore must have access to certain Hadoop configuration files.

For both co-located and separate clusters that use Kerberos authentication, configure Vertica for Kerberos as explained in Configure Vertica for Kerberos Authentication.

Vertica requires access to the WebHDFS service and ports on all name nodes and data nodes. For more information about WebHDFS ports, see HDFS Ports in the Cloudera documentation.

Accessing Hadoop configuration files

Your Vertica nodes need access to certain Hadoop configuration files:

- If Vertica is co-located on HDFS nodes, then those configuration files are already present.

- If Vertica is running on a separate cluster, you must copy the required files to all database nodes. A simple way to do so is to configure your Vertica nodes as Hadoop edge nodes. Client applications run on edge nodes; from Hadoop's perspective, Vertica is a client application. You can use Ambari or Cloudera Manager to configure edge nodes. For more information, see the documentation from your Hadoop vendor.

Verify that the value of the HadoopConfDir configuration parameter (see Hadoop parameters) includes a directory containing the core-site.xml and hdfs-site.xml files. If you do not set a value, Vertica looks for the files in /etc/hadoop/conf. For all Vertica users, the directory is accessed by the Linux user under which the Vertica server process runs.

Vertica uses several properties defined in these configuration files. These properties are listed in HDFS file system.

Using a cluster with high availability NameNodes

If your Hadoop cluster uses High Availability (HA) Name Nodes, verify that the dfs.nameservices parameter and the individual name nodes are defined in hdfs-site.xml.

Using more than one Hadoop cluster

In some cases, a Vertica cluster requires access to more than one HDFS cluster. For example, your business might use separate HDFS clusters for separate regions, or you might need data from both test and deployment clusters.

To support multiple clusters, perform the following steps:

- Copy the configuration files from all HDFS clusters to your database nodes. You can place the copied files in any location readable by Vertica. However, as a best practice, you should place them all in the same directory tree, with one subdirectory per HDFS cluster. The locations must be the same on all database nodes.

- Set the HadoopConfDir configuration parameter. The value is a colon-separated path containing the directories for all of your HDFS clusters.

- Use an explicit name node or name service in the URL when creating an external table or copying data. Do not use hdfs:/// because it could be ambiguous. For more information about URLs, see HDFS file system.

Vertica connects directly to a name node or name service; it does not otherwise distinguish among HDFS clusters. Therefore, names of HDFS name nodes and name services must be globally unique.
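Before setting HadoopConfDir for several clusters, it can help to confirm that each directory holds the expected files and then join the directories with colons. The following is a sketch under the assumption that every per-cluster directory should contain both core-site.xml and hdfs-site.xml; the function name and the cluster directory names are hypothetical, and the temporary directories only stand in for real configuration directories.

```python
import os
import tempfile

REQUIRED_FILES = ("core-site.xml", "hdfs-site.xml")


def hadoop_conf_dir_value(directories):
    """Join per-cluster directories into a colon-separated path.

    Raises ValueError if a directory lacks one of the required files.
    """
    for directory in directories:
        missing = [name for name in REQUIRED_FILES
                   if not os.path.isfile(os.path.join(directory, name))]
        if missing:
            raise ValueError(f"{directory} is missing {', '.join(missing)}")
    return ":".join(directories)


# Demonstration with throwaway directories standing in for two HDFS clusters.
with tempfile.TemporaryDirectory() as root:
    cluster_dirs = []
    for cluster in ("east", "west"):  # hypothetical cluster names
        path = os.path.join(root, cluster)
        os.makedirs(path)
        for name in REQUIRED_FILES:
            open(os.path.join(path, name), "w").close()
        cluster_dirs.append(path)
    conf_value = hadoop_conf_dir_value(cluster_dirs)
    print(conf_value)
```

The check only confirms that the files exist. The VERIFY_HADOOP_CONF_DIR function remains the way to confirm that Vertica itself can find them.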

Verifying the configuration

Use the VERIFY_HADOOP_CONF_DIR function to verify that Vertica can find configuration files in HadoopConfDir.

Use the HDFS_CLUSTER_CONFIG_CHECK function to test access through the hdfs scheme.

For more information about testing your configuration, see Verifying HDFS configuration.

Updating configuration files

If you update the configuration files after starting Vertica, use the following statement to refresh them:

=> SELECT CLEAR_HDFS_CACHES();

The CLEAR_HDFS_CACHES function also flushes information about which name node is active in a High Availability (HA) Hadoop cluster. Therefore, the first request after calling this function is slow, because the initial connection to the name node can take more than 15 seconds.

2.1 - Verifying HDFS configuration

Use the EXTERNAL_CONFIG_CHECK function to test access to HDFS. This function calls several others. If you prefer to test individual components, or if some tests do not apply to your configuration, you can instead call the functions individually. For example, if you are not using the HCatalog Connector then you do not need to call that function. The functions are:

To run all tests, call EXTERNAL_CONFIG_CHECK with no arguments:

=> SELECT EXTERNAL_CONFIG_CHECK();

To test only some authorities, nameservices, or Hive schemas, pass a single string argument. The format is a comma-separated list of "key=value" pairs, where keys are "authority", "nameservice", and "schema". The value is passed to all of the sub-functions; see those reference pages for details on how values are interpreted.

The following example tests the configuration of only the nameservice named "ns1":

=> SELECT EXTERNAL_CONFIG_CHECK('nameservice=ns1');
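If you generate these statements from a script, the argument string can be assembled from the three documented keys. The following is a minimal sketch; the helper name is hypothetical, and it only formats text without connecting to a database.

```python
ALLOWED_KEYS = ("authority", "nameservice", "schema")


def config_check_statement(**filters):
    """Return an EXTERNAL_CONFIG_CHECK statement restricted by the given filters."""
    for key in filters:
        if key not in ALLOWED_KEYS:
            raise ValueError(f"unsupported key: {key}")
    if not filters:
        return "SELECT EXTERNAL_CONFIG_CHECK();"
    argument = ",".join(f"{key}={value}" for key, value in filters.items())
    # Double any single quotes so the value is a valid SQL string literal.
    argument = argument.replace("'", "''")
    return f"SELECT EXTERNAL_CONFIG_CHECK('{argument}');"


print(config_check_statement(nameservice="ns1"))
# SELECT EXTERNAL_CONFIG_CHECK('nameservice=ns1');
```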

2.2 - Troubleshooting reads from HDFS

You might encounter the following issues when accessing data in HDFS.

Queries using [web]hdfs:/// show unexpected results

If you are using the /// shorthand to query external tables and see unexpected results, such as production data in your test cluster, verify that HadoopConfDir is set to the value you expect. The HadoopConfDir configuration parameter defines a path to search for the Hadoop configuration files that Vertica needs to resolve file locations. The HadoopConfDir parameter can be set at the session level, overriding the permanent value set in the database.

To debug problems with /// URLs, try replacing the URLs with ones that use an explicit nameservice or name node. If the explicit URL works, then the problem is with the resolution of the shorthand. If the explicit URL also does not work as expected, then the problem is elsewhere (such as your nameservice).
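To find which URLs in a script or table definition rely on the shorthand, you can check whether the URL has an empty authority component. The following sketch uses Python's standard URL parser; the function name and the sample paths are hypothetical.

```python
from urllib.parse import urlparse


def uses_shorthand(url):
    """Return True if an hdfs or webhdfs URL omits the name node or name service."""
    parsed = urlparse(url)
    return parsed.scheme in ("hdfs", "webhdfs") and parsed.netloc == ""


print(uses_shorthand("hdfs:///data/sales.parquet"))        # True
print(uses_shorthand("webhdfs://ns1/data/sales.parquet"))  # False
```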

Queries take a long time to run when using HA

The High Availability Name Node feature in HDFS allows a name node to fail over to a standby name node. The dfs.client.failover.max.attempts configuration parameter (in hdfs-site.xml) specifies how many attempts to make when failing over. Vertica uses a default value of 4 if this parameter is not set. After reaching the maximum number of failover attempts, Vertica concludes that the HDFS cluster is unavailable and aborts the operation. Vertica uses the dfs.client.failover.sleep.base.millis and dfs.client.failover.sleep.max.millis parameters to decide how long to wait between retries. Typical ranges are 500 milliseconds to 15 seconds, with longer waits for successive retries.

A second parameter, ipc.client.connect.retry.interval, specifies the time to wait between attempts, with typical values being 10 to 20 seconds.

Cloudera and Hortonworks both provide tools to automatically generate configuration files. These tools can set the maximum number of failover attempts to a much higher number (50 or 100). If the HDFS cluster is unavailable (all name nodes are unreachable), Vertica can appear to hang for an extended period (minutes to hours) while trying to connect.
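A rough upper bound shows why a high attempt count matters. The sketch below assumes that each wait between attempts is capped at dfs.client.failover.sleep.max.millis and ignores the time spent on the connection attempts themselves, so it is an estimate rather than a description of Vertica's exact retry schedule. The function name is hypothetical.

```python
def worst_case_failover_wait_seconds(max_attempts, sleep_max_millis):
    """Upper bound on time spent sleeping between failover attempts."""
    return max_attempts * sleep_max_millis / 1000


# The 15-second maximum sleep is the upper end of the typical range given above.
print(worst_case_failover_wait_seconds(4, 15000))    # 60.0
print(worst_case_failover_wait_seconds(100, 15000))  # 1500.0
```

With the default of 4 attempts the bound is about one minute; with 100 attempts it grows to 25 minutes before any connection time is counted.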

Failover attempts are logged in the QUERY_EVENTS system table. The following example shows how to query this table to find these events:

=> SELECT event_category, event_type, event_description, operator_name,

event_details, count(event_type) AS count

FROM query_events

WHERE event_type ilike 'WEBHDFS FAILOVER RETRY'

GROUP BY event_category, event_type, event_description, operator_name, event_details;

-[ RECORD 1 ]-----+---------------------------------------

event_category | EXECUTION

event_type | WEBHDFS FAILOVER RETRY

event_description | WebHDFS Namenode failover and retry.

operator_name | WebHDFS FileSystem

event_details | WebHDFS request failed on ns

count | 4

You can either wait for Vertica to complete or abort the connection on its own, or you can set the dfs.client.failover.max.attempts parameter to a lower value.

WebHDFS error when using LibHDFS++

When creating an external table or loading data and using the hdfs scheme, you might see errors from WebHDFS failures. Such errors indicate that Vertica was not able to use the hdfs scheme and fell back to webhdfs, but that the WebHDFS configuration is incorrect.

First verify the value of the HadoopConfDir configuration parameter, which can be set at the session level. Then verify that the HDFS configuration files found there have the correct WebHDFS configuration for your Hadoop cluster. See Configuring HDFS access for information about use of these files. See your Hadoop documentation for information about WebHDFS configuration.

Vertica places too much load on the name node (LibHDFS++)

Large HDFS clusters can sometimes experience heavy load on the name node when clients, including Vertica, need to locate data. If your name node is sensitive to this load and if you are using LibHDFS++, you can instruct Vertica to distribute metadata about block locations to its nodes so that they do not have to contact the name node as often. Distributing this metadata can degrade database performance somewhat in deployments where the name node isn't contended. This performance effect is because the data must be serialized and distributed.

If protecting your name node from load is more important than query performance, set the EnableHDFSBlockInfoCache configuration parameter to 1 (true). Usually this applies to large HDFS clusters where name node contention is already an issue.

This setting applies to access through LibHDFS++ (hdfs scheme). Sometimes LibHDFS++ falls back to WebHDFS, which does not use this setting. If you have enabled this setting and you are still seeing high traffic on your name node from Vertica, check the QUERY_EVENTS system table for LibHDFS++ UNSUPPORTED OPERATION events.

Kerberos authentication errors

Kerberos authentication can fail even though a ticket is valid if Hadoop expires tickets frequently. It can also fail due to clock skew between Hadoop and Vertica nodes. For details, see Troubleshooting Kerberos authentication.

3 - Accessing kerberized HDFS data

If your Hortonworks or Cloudera Hadoop cluster uses Kerberos authentication to restrict access to HDFS, Vertica must be granted access. If you use Kerberos authentication for your Vertica database, and database users have HDFS access, then you can configure Vertica to use the same principals to authenticate. However, not all Hadoop administrators want to grant access to all database users, preferring to manage access in other ways. In addition, not all Vertica databases use Kerberos.

Vertica provides the following options for accessing Kerberized Hadoop clusters:

- Using Vertica Kerberos principals.

- Using Hadoop proxy users combined with Vertica users.

- Using a user-specified delegation token which grants access to HDFS. Delegation tokens are issued by the Hadoop cluster.

Proxy users and delegation tokens both use a session parameter to manage identities. Vertica need not be Kerberized when using either of these methods.

Vertica does not support Kerberos for MapR.

To test your Kerberos configuration, see Verifying HDFS configuration.

3.1 - Using Kerberos with Vertica

If you use Kerberos for your Vertica cluster and your principals have access to HDFS, then you can configure Vertica to use the same credentials for HDFS.

Vertica authenticates with Hadoop in two ways that require different configurations:

- User Authentication: On behalf of the user, by passing along the user's existing Kerberos credentials. This method is also called user impersonation. Actions performed on behalf of particular users, like executing queries, generally use user authentication.

- Vertica Authentication: On behalf of system processes that access ROS data or the catalog, by using a special Kerberos credential stored in a keytab file.

Note

Vertica and Hadoop must use the same Kerberos server or servers (KDCs).

Vertica can interact with more than one Kerberos realm. To configure multiple realms, see Multi-realm Support.

Vertica attempts to automatically refresh Hadoop tokens before they expire. See Token expiration.

User authentication

To use Vertica with Kerberos and Hadoop, the client user first authenticates with one of the Kerberos servers (Key Distribution Center, or KDC) being used by the Hadoop cluster. A user might run kinit or sign in to Active Directory, for example.

A user who authenticates to a Kerberos server receives a Kerberos ticket. At the beginning of a client session, Vertica automatically retrieves this ticket. Vertica then uses this ticket to get a Hadoop token, which Hadoop uses to grant access. Vertica uses this token to access HDFS, such as when executing a query on behalf of the user. When the token expires, Vertica automatically renews it, also renewing the Kerberos ticket if necessary.

The user must have been granted permission to access the relevant files in HDFS. This permission is checked the first time Vertica reads HDFS data.

Vertica can use multiple KDCs serving multiple Kerberos realms, if proper cross-realm trust has been set up between realms.

Vertica authentication

Automatic processes, such as the Tuple Mover or the processes that access Eon Mode communal storage, do not log in the way users do. Instead, Vertica uses a special identity (principal) stored in a keytab file on every database node. (This approach is also used for Vertica clusters that use Kerberos but do not use Hadoop.) After you configure the keytab file, Vertica uses the principal residing there to automatically obtain and maintain a Kerberos ticket, much as in the client scenario. In this case, the client does not interact with Kerberos.

Each Vertica node uses its own principal; it is common to incorporate the name of the node into the principal name. You can either create one keytab per node, containing only that node's principal, or you can create a single keytab containing all the principals and distribute the file to all nodes. Either way, the node uses its principal to get a Kerberos ticket and then uses that ticket to get a Hadoop token.

When creating HDFS storage locations Vertica uses the principal in the keytab file, not the principal of the user issuing the CREATE LOCATION statement. The HCatalog Connector sometimes uses the principal in the keytab file, depending on how Hive authenticates users.

Configuring users and the keytab file

If you have not already configured Kerberos authentication for Vertica, follow the instructions in Configure Vertica for Kerberos authentication. Of particular importance for Hadoop integration:

- Create one Kerberos principal per node.

- Place the keytab files in the same location on each database node and set the KerberosKeytabFile configuration parameter to that location.

- Set KerberosServiceName to the name of the principal. (See Inform Vertica about the Kerberos principal.)

If you are using the HCatalog Connector, follow the additional steps in Configuring security in the HCatalog Connector documentation.

If you are using HDFS storage locations, give all node principals read and write permission to the HDFS directory you will use as a storage location.

3.2 - Proxy users and delegation tokens

An alternative to granting HDFS access to individual Vertica users is to use delegation tokens, either directly or with a proxy user. In this configuration, Vertica accesses HDFS on behalf of some other (Hadoop) user. The Hadoop users need not be Vertica users at all.

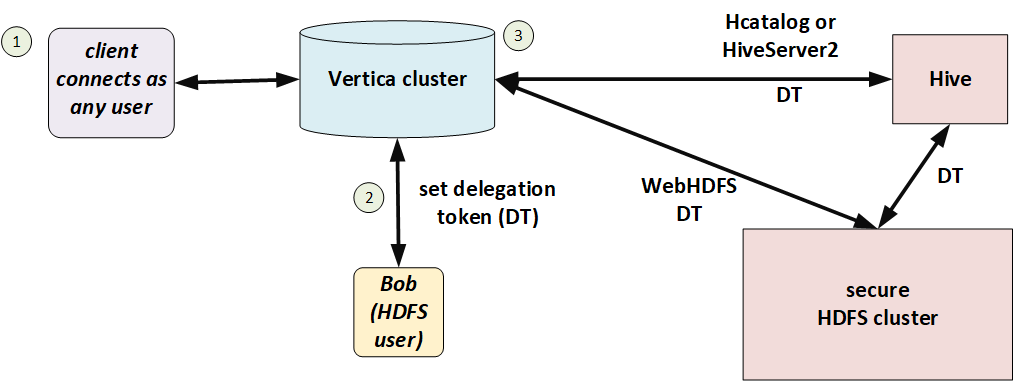

In Vertica, you can either specify the name of the Hadoop user to act on behalf of (doAs), or you can directly use a Kerberos delegation token that you obtain from HDFS (Bring Your Own Delegation Token). In the doAs case, Vertica obtains a delegation token for that user, so both approaches ultimately use delegation tokens to access files in HDFS.

Use the HadoopImpersonationConfig session parameter to specify a user or delegation token to use for HDFS access. Each session can use a different user and can use either doAs or a delegation token. The value of HadoopImpersonationConfig is a set of JSON objects.

To use delegation tokens of either type (more specifically, when HadoopImpersonationConfig is set), you must access HDFS through WebHDFS.

3.2.1 - User impersonation (doAs)

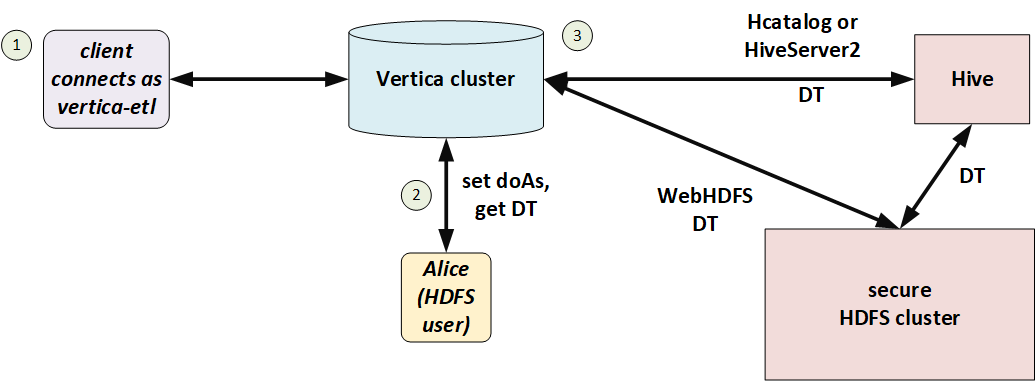

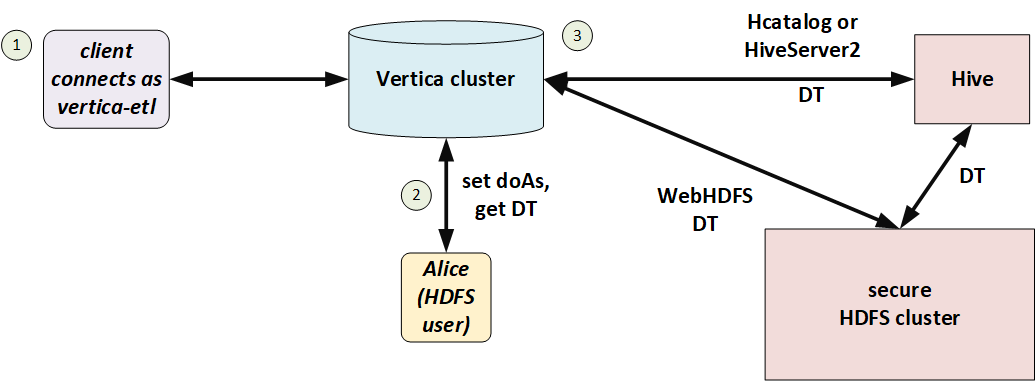

You can use user impersonation to access data in an HDFS cluster from Vertica. This approach is called "doAs" (for "do as") because Vertica uses a single proxy user on behalf of another (Hadoop) user. The impersonated Hadoop user does not need to also be a Vertica user.

In the following illustration, Alice is a Hadoop user but not a Vertica user. She connects to Vertica as the proxy user, vertica-etl. In her session, Vertica obtains a delegation token (DT) on behalf of the doAs user (Alice), and uses that delegation token to access HDFS.

You can use doAs with or without Kerberos, so long as HDFS and Vertica match. If HDFS uses Kerberos then Vertica must too.

User configuration

The Hadoop administrator must create a proxy user and allow it to access HDFS on behalf of other users. Set values in core-site.xml as in the following example:

<name>hadoop.proxyuser.vertica-etl.users</name>

<value>*</value>

<name>hadoop.proxyuser.vertica-etl.hosts</name>

<value>*</value>

In Vertica, create a corresponding user.
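Hadoop configuration files wrap each name/value pair in a `<property>` element. The following sketch prints the two proxy-user entries in that form for a given user name; the wildcard values mirror the example above and are an assumption you would normally narrow for a production cluster.

```shell
# Print core-site.xml <property> entries that let a proxy user act for other users.
# The "*" values copy the example above; restrict users and hosts as your policy requires.
proxyuser_properties() (
    user=$1
    for suffix in users hosts; do
        cat <<EOF
<property>
  <name>hadoop.proxyuser.${user}.${suffix}</name>
  <value>*</value>
</property>
EOF
    done
)

proxyuser_properties vertica-etl
```

The output is meant to be pasted inside the `<configuration>` element of core-site.xml by the Hadoop administrator.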

Session configuration

To make requests on behalf of a Hadoop user, first set the HadoopImpersonationConfig session parameter to specify the user and HDFS cluster. Vertica will access HDFS as that user until the session ends or you change the parameter.

The value of this session parameter is a collection of JSON objects. Each object specifies an HDFS cluster and a Hadoop user. For the cluster, you can specify either a name service or an individual name node. If you are using HA name node, then you must either use a name service or specify all name nodes. HadoopImpersonationConfig format describes the full JSON syntax.

The following example shows access on behalf of two different users. The users "stephanie" and "bob" are Hadoop users, not Vertica users. "vertica-etl" is a Vertica user.

$ vsql -U vertica-etl

=> ALTER SESSION SET

HadoopImpersonationConfig = '[{"nameservice":"hadoopNS", "doAs":"stephanie"}]';

=> COPY nation FROM 'webhdfs:///user/stephanie/nation.dat';

=> ALTER SESSION SET

HadoopImpersonationConfig = '[{"nameservice":"hadoopNS", "doAs":"bob"}, {"authority":"hadoop2:50070", "doAs":"rob"}]';

=> COPY nation FROM 'webhdfs:///user/bob/nation.dat';

Vertica uses Hadoop delegation tokens, obtained from the name node, to impersonate Hadoop users. In a long-running session, a token could expire. Vertica attempts to renew tokens automatically; see Token expiration.
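If you generate these statements from a script, a small helper can keep the JSON quoting consistent. This is a minimal sketch, assuming the name service and user names contain no characters that need JSON escaping; the function names are illustrative.

```shell
# Build a HadoopImpersonationConfig value with one doAs object for a name service.
# Assumes neither argument contains quotes or backslashes.
doas_config() {
    printf '[{"nameservice":"%s", "doAs":"%s"}]' "$1" "$2"
}

# Wrap the value in the ALTER SESSION statement used in the example above.
doas_statement() {
    printf "ALTER SESSION SET HadoopImpersonationConfig = '%s';\n" "$(doas_config "$1" "$2")"
}

doas_statement hadoopNS stephanie
```

Because the parameter is set per session, the generated statement has to run in the same vsql session as the COPY or query that depends on it.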

Testing the configuration

You can use the HADOOP_IMPERSONATION_CONFIG_CHECK function to test your HDFS delegation tokens and HCATALOGCONNECTOR_CONFIG_CHECK to test your HCatalog Connector delegation token.

3.2.2 - Bring your own delegation token

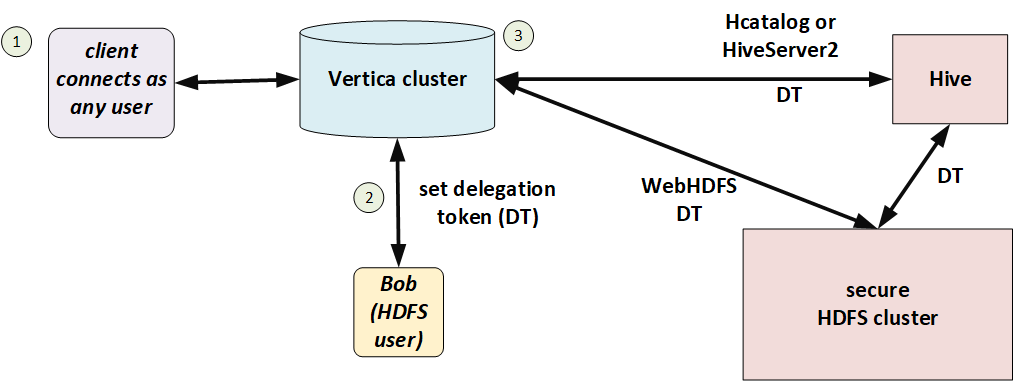

Instead of creating a proxy user and giving it access to HDFS for use with doAs, you can give Vertica a Hadoop delegation token to use. You must obtain this delegation token from the Hadoop name node. In this model, security is handled entirely on the Hadoop side, with Vertica just passing along a token. Vertica may or may not be Kerberized.

A typical workflow is:

-

In an ETL front end, a user submits a query.

-

The ETL system uses authentication and authorization services to verify that the user has sufficient permission to run the query.

-

The ETL system requests a delegation token for the user from the name node.

-

The ETL system makes a client connection to Vertica, sets the delegation token for the session, and runs the query.

When using a delegation token, clients can connect as any Vertica user. No proxy user is required.

In the following illustration, Bob has a Hadoop-issued delegation token. He connects to Vertica and Vertica uses that delegation token to access files in HDFS.

Session configuration

Set the HadoopImpersonationConfig session parameter to specify the delegation token and HDFS cluster. Vertica will access HDFS using that delegation token until the session ends, the token expires, or you change the parameter.

The value of this session parameter is a collection of JSON objects. Each object specifies a delegation token ("token") in WebHDFS format and an HDFS name service or name node. HadoopImpersonationConfig format describes the full JSON syntax.

The following example shows access on behalf of two different users. The users "stephanie" and "bob" are Hadoop users, not Vertica users. "dbuser1" is a Vertica user with no special privileges.

$ vsql -U dbuser1

=> ALTER SESSION SET

HadoopImpersonationConfig ='[{"authority":"hadoop1:50070","token":"JAAGZGJldGwxBmRiZXRsMQCKAWDXJgB9igFg-zKEfY4gao4BmhSJYtXiWqrhBHbbUn4VScNg58HWQxJXRUJIREZTIGRlbGVnYXRpb24RMTAuMjAuMTAwLjU0OjgwMjA"}]';

=> COPY nation FROM 'webhdfs:///user/stephanie/nation.dat';

=> ALTER SESSION SET

HadoopImpersonationConfig ='[{"authority":"hadoop1:50070","token":"HgADdG9tA3RvbQCKAWDXJgAoigFg-zKEKI4gaI4BmhRoOUpq_jPxrVhZ1NSMnodAQnhUthJXRUJIREZTIGRlbGVnYXRpb24RMTAuMjAuMTAwLjU0OjgwMjA"}]';

=> COPY nation FROM 'webhdfs:///user/bob/nation.dat';

You can use the WebHDFS REST API to get delegation tokens:

$ curl -s --noproxy "*" --negotiate -u: -X GET "http://hadoop1:50070/webhdfs/v1/?op=GETDELEGATIONTOKEN"

Vertica does not, and cannot, renew delegation tokens when they expire. You must either keep sessions shorter than token lifetime or implement a renewal scheme.
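The WebHDFS call returns the token inside a small JSON document. The following sketch extracts the token string and builds the session parameter value from it. It assumes the response has the shape `{"Token":{"urlString":"..."}}` with the key and value on one line; the sample response and its token are placeholders.

```shell
# Pull the token string out of a GETDELEGATIONTOKEN response read from stdin.
# Assumes the response shape {"Token":{"urlString":"..."}}.
extract_token() {
    sed -n 's/.*"urlString"[[:space:]]*:[[:space:]]*"\([^"]*\)".*/\1/p'
}

# Build the session parameter value for one name node authority.
token_config() {
    printf '[{"authority":"%s","token":"%s"}]\n' "$1" "$2"
}

# Placeholder response; in practice, pipe the output of the curl command shown above.
response='{"Token":{"urlString":"EXAMPLE_TOKEN"}}'
token=$(printf '%s\n' "$response" | extract_token)
token_config hadoop1:50070 "$token"
```

A real JSON parser is a safer choice if one is available on the ETL host; the `sed` expression is only meant to keep the sketch dependency-free.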

Delegation tokens and the HCatalog Connector

HiveServer2 uses a different format for delegation tokens. To use the HCatalog Connector, therefore, you must set two delegation tokens, one as usual (authority) and one for HiveServer2 (schema). The HCatalog Connector uses the schema token to access metadata and the authority token to access data. The schema name is the same Hive schema you specified in CREATE HCATALOG SCHEMA. The following example shows how to use these two delegation tokens.

$ vsql -U dbuser1

-- set delegation token for user and HiveServer2

=> ALTER SESSION SET

HadoopImpersonationConfig='[

{"nameservice":"hadoopNS","token":"JQAHcmVsZWFzZQdyZWxlYXNlAIoBYVJKrYSKAWF2VzGEjgmzj_IUCIrI9b8Dqu6awFTHk5nC-fHB8xsSV0VCSERGUyBkZWxlZ2F0aW9uETEwLjIwLjQyLjEwOTo4MDIw"},

{"schema":"access","token":"UwAHcmVsZWFzZQdyZWxlYXNlL2hpdmUvZW5nLWc5LTEwMC52ZXJ0aWNhY29ycC5jb21AVkVSVElDQUNPUlAuQ09NigFhUkmyTooBYXZWNk4BjgETFKN2xPURn19Yq9tf-0nekoD51TZvFUhJVkVfREVMRUdBVElPTl9UT0tFThZoaXZlc2VydmVyMkNsaWVudFRva2Vu"}]';

-- uses HiveServer2 token to get metadata

=> CREATE HCATALOG SCHEMA access WITH hcatalog_schema 'access';

-- uses both tokens

=> SELECT * FROM access.t1;

--uses only HiveServer2 token

=> SELECT * FROM hcatalog_tables;

HiveServer2 does not provide a REST API for delegation tokens like WebHDFS does. See Getting a HiveServer2 delegation token for some tips.

Testing the configuration

You can use the HADOOP_IMPERSONATION_CONFIG_CHECK function to test your HDFS delegation tokens and HCATALOGCONNECTOR_CONFIG_CHECK to test your HCatalog Connector delegation token.

3.2.3 - Getting a HiveServer2 delegation token

To access Hive metadata using HiveServer2, you need a special delegation token. (See Bring your own delegation token.) HiveServer2 does not provide an easy way to get this token, unlike the REST API that grants HDFS (data) delegation tokens.

The following utility code shows a way to get this token. You will need to modify this code for your own cluster; in particular, change the value of the connectURL static.

import java.io.FileWriter;

import java.io.PrintStream;

import java.io.PrintWriter;

import java.io.StringWriter;

import java.io.Writer;

import java.security.PrivilegedExceptionAction;

import java.sql.Connection;

import java.sql.DriverManager;

import java.sql.ResultSet;

import java.sql.Statement;

import org.apache.hadoop.conf.Configuration;

import org.apache.hadoop.hive.shims.Utils;

import org.apache.hadoop.security.UserGroupInformation;

import org.apache.hive.jdbc.HiveConnection;

import org.json.simple.JSONArray;

import org.json.simple.JSONObject;

public class JDBCTest {

public static final String driverName = "org.apache.hive.jdbc.HiveDriver";

public static String connectURL = "jdbc:hive2://node2.cluster0.example.com:2181,node1.cluster0.example.com:2181,node3.cluster0.example.com:2181/;serviceDiscoveryMode=zooKeeper;zooKeeperNamespace=hiveserver2";

public static String schemaName = "hcat";

public static String verticaUser = "condor";

public static String proxyUser = "condor-2";

public static String krb5conf = "/home/server/kerberos/krb5.conf";

public static String realm = "EXAMPLE.COM";

public static String keytab = "/home/server/kerberos/kt.keytab";

public static void main(String[] args) {

if (args.length < 7) {

System.out.println(

"Usage: JDBCTest <jdbc_url> <hive_schema> <kerberized_user> <proxy_user> <krb5_conf> <krb_realm> <krb_keytab>");

System.exit(1);

}

connectURL = args[0];

schemaName = args[1];

verticaUser = args[2];

proxyUser = args[3];

krb5conf = args[4];

realm = args[5];

keytab = args[6];

System.out.println("connectURL: " + connectURL);

System.out.println("schemaName: " + schemaName);

System.out.println("verticaUser: " + verticaUser);

System.out.println("proxyUser: " + proxyUser);

System.out.println("krb5conf: " + krb5conf);

System.out.println("realm: " + realm);

System.out.println("keytab: " + keytab);

try {

Class.forName("org.apache.hive.jdbc.HiveDriver");

System.out.println("Found HiveServer2 JDBC driver");

} catch (ClassNotFoundException e) {

System.out.println("Couldn't find HiveServer2 JDBC driver");

}

try {

Configuration conf = new Configuration();

System.setProperty("java.security.krb5.conf", krb5conf);

conf.set("hadoop.security.authentication", "kerberos");

UserGroupInformation.setConfiguration(conf);

dtTest();

} catch (Throwable e) {

Writer stackString = new StringWriter();

e.printStackTrace(new PrintWriter(stackString));

System.out.println(e);

System.out.printf("Error occurred when connecting to HiveServer2 with [%s]: %s\n%s\n",

new Object[] { connectURL, e.getMessage(), stackString.toString() });

}

}

private static void dtTest() throws Exception {

UserGroupInformation user = UserGroupInformation.loginUserFromKeytabAndReturnUGI(verticaUser + "@" + realm, keytab);

user.doAs(new PrivilegedExceptionAction<Void>() {

public Void run() throws Exception {

System.out.println("In doas: " + UserGroupInformation.getLoginUser());

Connection con = DriverManager.getConnection(JDBCTest.connectURL);

System.out.println("Connected to HiveServer2");

JDBCTest.showUser(con);

System.out.println("Getting delegation token for user");

String token = ((HiveConnection) con).getDelegationToken(JDBCTest.proxyUser, "hive/_HOST@" + JDBCTest.realm);

System.out.println("Got token: " + token);

System.out.println("Closing original connection");

con.close();

System.out.println("Setting delegation token in UGI");

Utils.setTokenStr(Utils.getUGI(), token, "hiveserver2ClientToken");

con = DriverManager.getConnection(JDBCTest.connectURL + ";auth=delegationToken");

System.out.println("Connected to HiveServer2 with delegation token");

JDBCTest.showUser(con);

con.close();

JDBCTest.writeDTJSON(token);

return null;

}

});

}

private static void showUser(Connection con) throws Exception {

String sql = "select current_user()";

Statement stmt = con.createStatement();

ResultSet res = stmt.executeQuery(sql);

StringBuilder result = new StringBuilder();

while (res.next()) {

result.append(res.getString(1));

}

System.out.println("\tcurrent_user: " + result.toString());

}

private static void writeDTJSON(String token) {

JSONArray arr = new JSONArray();

JSONObject obj = new JSONObject();

obj.put("schema", schemaName);

obj.put("token", token);

arr.add(obj);

try (FileWriter fileWriter = new FileWriter("hcat_delegation.json")) {

// try-with-resources closes the file, which also flushes it

fileWriter.write(arr.toJSONString());

} catch (Exception e) {

e.printStackTrace();

}

}

}

Following is an example call and its output:

$ java -cp hs2token.jar JDBCTest 'jdbc:hive2://test.example.com:10000/default;principal=hive/_HOST@EXAMPLE.COM' "default" "testuser" "test" "/etc/krb5.conf" "EXAMPLE.COM" "/test/testuser.keytab"

connectURL: jdbc:hive2://test.example.com:10000/default;principal=hive/_HOST@EXAMPLE.COM

schemaName: default

verticaUser: testuser

proxyUser: test

krb5conf: /etc/krb5.conf

realm: EXAMPLE.COM

keytab: /test/testuser.keytab

Found HiveServer2 JDBC driver

log4j:WARN No appenders could be found for logger (org.apache.hadoop.metrics2.lib.MutableMetricsFactory).

log4j:WARN Please initialize the log4j system properly.

log4j:WARN See http://logging.apache.org/log4j/1.2/faq.html#noconfig for more info.

In doas: testuser@EXAMPLE.COM (auth:KERBEROS)

Connected to HiveServer2

current_user: testuser

Getting delegation token for user

Got token: JQAEdGVzdARoaXZlB3JlbGVhc2WKAWgvBOwzigFoUxFwMwKOAgMUHfqJ5ma7_27LiePN8C7MxJ682bsVSElWRV9ERUxFR0FUSU9OX1RPS0VOFmhpdmVzZXJ2ZXIyQ2xpZW50VG9rZW4

Closing original connection

Setting delegation token in UGI

Connected to HiveServer2 with delegation token

current_user: testuser
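The utility writes the HiveServer2 token to hcat_delegation.json as a one-element JSON array. To use the HCatalog Connector you also need an HDFS token object in the same array. The following is a minimal sketch of merging the two, assuming the file holds a single-line array; the name service and token values are placeholders.

```shell
# Combine an HDFS token object with the array written by the utility.
# Assumes the file contains a single-line JSON array such as [{"schema":...,"token":...}].
combine_tokens() (
    nameservice=$1; hdfs_token=$2; hcat_file=$3
    content=$(cat "$hcat_file")
    # Strip the outer brackets so the schema object can join a larger array.
    content=${content#\[}
    content=${content%\]}
    printf '[{"nameservice":"%s","token":"%s"},%s]\n' "$nameservice" "$hdfs_token" "$content"
)

# Placeholder file standing in for the utility's output.
sample=$(mktemp)
printf '[{"schema":"default","token":"HS2_TOKEN"}]' > "$sample"
combine_tokens hadoopNS HDFS_TOKEN "$sample"
rm -f "$sample"
```

The resulting array is the value to assign to HadoopImpersonationConfig.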

3.2.4 - HadoopImpersonationConfig format

The value of the HadoopImpersonationConfig session parameter is a set of one or more JSON objects. Each object describes one doAs user or delegation token for one Hadoop destination. You must use WebHDFS, not LibHDFS++, to use impersonation.

Syntax

[ { ("doAs" | "token"): value,

("nameservice" | "authority" | "schema"): value} [,...]

]

Properties

| Property | Description |
| --- | --- |
| doAs | The name of a Hadoop user to impersonate. |
| token | A delegation token to use for HDFS access. |
| nameservice | A Hadoop name service. All access to this name service uses the doAs user or delegation token. |
| authority | A name node authority. All access to this authority uses the doAs user or delegation token. If the name node fails over to another name node, the doAs user or delegation token does not automatically apply to the failover name node. If you are using HA name node, use nameservice instead of authority or include objects for every name node. |
| schema | A Hive schema, for use with the HCatalog Connector. Vertica uses this object's doAs user or token to access Hive metadata only. For data access you must also specify a name service or authority object, just like for all other data access. |
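The syntax and properties above can be sketched as a small builder that rejects keys outside the documented set. The function name and the name node addresses are illustrative assumptions, and the sketch does no JSON escaping of values.

```shell
# Build one HadoopImpersonationConfig object, rejecting keys outside the syntax above.
# Usage: impersonation_object <doAs|token> <value> <nameservice|authority|schema> <target>
impersonation_object() {
    case $1 in
        doAs|token) ;;
        *) echo "unknown credential key: $1" >&2; return 1 ;;
    esac
    case $3 in
        nameservice|authority|schema) ;;
        *) echo "unknown destination key: $3" >&2; return 1 ;;
    esac
    printf '{"%s":"%s","%s":"%s"}' "$3" "$4" "$1" "$2"
}

# With HA name nodes addressed by authority, include one object per name node.
printf '[%s,%s]\n' \
    "$(impersonation_object doAs bob authority nn1.example.com:50070)" \
    "$(impersonation_object doAs bob authority nn2.example.com:50070)"
```

Using a single nameservice object instead of the two authority objects avoids having to list every name node.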

Examples

In the following example of doAs, Bob is a Hadoop user and vertica-etl is a Kerberized proxy user.

$ kinit vertica-etl -kt /home/dbadmin/vertica-etl.keytab

$ vsql -U vertica-etl

=> ALTER SESSION SET

HadoopImpersonationConfig = '[{"nameservice":"hadoopNS", "doAs":"Bob"}]';

=> COPY nation FROM 'webhdfs:///user/bob/nation.dat';

In the following example, the current Vertica user (it doesn't matter who that is) uses a Hadoop delegation token. This token belongs to Alice, but you never specify the user name here. Instead, you use it to get the delegation token from Hadoop.

$ vsql -U dbuser1

=> ALTER SESSION SET

HadoopImpersonationConfig ='[{"nameservice":"hadoopNS","token":"JAAGZGJldGwxBmRiZXRsMQCKAWDXJgB9igFg-zKEfY4gao4BmhSJYtXiWqrhBHbbUn4VScNg58HWQxJXRUJIREZTIGRlbGVnYXRpb24RMTAuMjAuMTAwLjU0OjgwMjA"}]';

=> COPY nation FROM 'webhdfs:///user/alice/nation.dat';

In the following example, "authority" specifies the (single) name node on a Hadoop cluster that does not use high availability.

$ vsql -U dbuser1

=> ALTER SESSION SET

HadoopImpersonationConfig ='[{"authority":"hadoop1:50070", "doAs":"Stephanie"}]';

=> COPY nation FROM 'webhdfs://hadoop1:50070/user/stephanie/nation.dat';

To access data in Hive you need to specify two delegation tokens. The first, for a name service or authority, is for data access as usual. The second is for the HiveServer2 metadata for the schema. HiveServer2 requires a delegation token in WebHDFS format. The schema name is the Hive schema you specify with CREATE HCATALOG SCHEMA.

$ vsql -U dbuser1

-- set delegation token for user and HiveServer2

=> ALTER SESSION SET

HadoopImpersonationConfig='[

{"nameservice":"hadoopNS","token":"JQAHcmVsZWFzZQdyZWxlYXNlAIoBYVJKrYSKAWF2VzGEjgmzj_IUCIrI9b8Dqu6awFTHk5nC-fHB8xsSV0VCSERGUyBkZWxlZ2F0aW9uETEwLjIwLjQyLjEwOTo4MDIw"},

{"schema":"access","token":"UwAHcmVsZWFzZQdyZWxlYXNlL2hpdmUvZW5nLWc5LTEwMC52ZXJ0aWNhY29ycC5jb21AVkVSVElDQUNPUlAuQ09NigFhUkmyTooBYXZWNk4BjgETFKN2xPURn19Yq9tf-0nekoD51TZvFUhJVkVfREVMRUdBVElPTl9UT0tFThZoaXZlc2VydmVyMkNsaWVudFRva2Vu"}]';

-- uses HiveServer2 token to get metadata

=> CREATE HCATALOG SCHEMA access WITH hcatalog_schema 'access';

-- uses both tokens

=> SELECT * FROM access.t1;

--uses only HiveServer2 token

=> SELECT * FROM hcatalog_tables;

Each object in the HadoopImpersonationConfig collection specifies one connection to one Hadoop cluster. You can add as many connections as you like, including to more than one Hadoop cluster. The following example shows delegation tokens for two different Hadoop clusters. Vertica uses the correct token for each cluster when connecting.

$ vsql -U dbuser1

=> ALTER SESSION SET

HadoopImpersonationConfig ='[

{"nameservice":"productionNS","token":"JAAGZGJldGwxBmRiZXRsMQCKAWDXJgB9igFg-zKEfY4gao4BmhSJYtXiWqrhBHbbUn4VScNg58HWQxJXRUJIREZTIGRlbGVnYXRpb24RMTAuMjAuMTAwLjU0OjgwMjA"},

{"nameservice":"testNS", "token":"HQAHcmVsZWFzZQdyZWxlYXNlAIoBYVJKrYSKAWF2VzGEjgmzj_IUCIrI9b8Dqu6awFTHk5nC-fHB8xsSV0VCSERGUyBkZWxlZ2F0aW9uETEwLjIwLjQyLjEwOTo4MDIw"}]';

=> COPY clicks FROM 'webhdfs://productionNS/data/clickstream.dat';

=> COPY testclicks FROM 'webhdfs://testNS/data/clickstream.dat';

3.3 - Token expiration

Vertica uses Hadoop tokens when using Kerberos tickets (Using Kerberos with Vertica) or doAs (User impersonation (doAs)). Vertica attempts to automatically refresh Hadoop tokens before they expire, but you can also set a minimum refresh frequency if you prefer. Use the HadoopFSTokenRefreshFrequency configuration parameter to specify the frequency in seconds:

=> ALTER DATABASE exampledb SET HadoopFSTokenRefreshFrequency = '86400';

If the current age of the token is greater than the value specified in this parameter, Vertica refreshes the token before accessing data stored in HDFS.

Vertica does not refresh delegation tokens (Bring your own delegation token).
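The refresh rule for HadoopFSTokenRefreshFrequency can be stated as a one-line comparison. The sketch below only illustrates that rule as described in this section; it is not Vertica's internal logic, and the function name is hypothetical.

```shell
# Succeed (exit status 0) when a token is older than the configured refresh frequency.
# Both arguments are in seconds.
needs_refresh() {
    token_age=$1; refresh_frequency=$2
    [ "$token_age" -gt "$refresh_frequency" ]
}

if needs_refresh 90000 86400; then echo "refresh before access"; fi
if ! needs_refresh 3600 86400; then echo "token still fresh"; fi
```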

4 - Using HDFS storage locations

Vertica stores data in its native format, ROS, in storage locations. You can place storage locations on the local Linux file system or in HDFS. If you place storage locations on HDFS, you must perform additional configuration in HDFS to be able to manage them. These requirements are in addition to the requirements in Vertica for managing storage locations and backup/restore.

If you use HDFS storage locations, the HDFS data must be available when you start Vertica. Your HDFS cluster must be operational, and the ROS files must be present. If you moved data files, or they are corrupted, or your HDFS cluster is not responsive, Vertica cannot start.
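Because startup depends on HDFS, it can help to confirm that the storage location directories are reachable first. The following is a minimal sketch using `hdfs dfs -test -d`; the function name is hypothetical, and the check covers only directory existence, not the presence or integrity of the ROS files.

```shell
# Check that each HDFS storage location directory exists and is reachable.
# Prints unreachable paths and returns non-zero if any check fails.
check_hdfs_locations() {
    status=0
    for dir in "$@"; do
        if ! hdfs dfs -test -d "$dir"; then
            echo "unavailable: $dir"
            status=1
        fi
    done
    return $status
}
```

Call it with your own storage location paths, for example `check_hdfs_locations /user/dbadmin/v_vmart_node0001`, before starting the database.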

4.1 - Hadoop configuration for backup and restore

If your Vertica cluster uses storage locations on HDFS, and you want to be able to back up and restore those storage locations using vbr, you must enable snapshotting in HDFS.

The Vertica backup script uses HDFS's snapshotting feature to create a backup of HDFS storage locations. A directory must allow snapshotting before HDFS can take a snapshot. Only a Hadoop superuser can enable snapshotting on a directory. Vertica can enable snapshotting automatically if the database administrator is also a Hadoop superuser.

If HDFS is unsecured, the following instructions apply to the database administrator account, usually dbadmin. If HDFS uses Kerberos security, the following instructions apply to the principal stored in the Vertica keytab file, usually vertica. The instructions below use the term "database account" to refer to this user.

We recommend that you make the database administrator or principal a Hadoop superuser. If you are not able to do so, you must enable snapshotting on the directory before configuring it for use by Vertica.

The steps you need to take to make the Vertica database administrator account a superuser depend on the distribution of Hadoop you are using. Consult your Hadoop distribution's documentation for details.

Manually enabling snapshotting for a directory

If you cannot grant superuser status to the database account, you can instead enable snapshotting of each directory manually. Use the following command:

$ hdfs dfsadmin -allowSnapshot path

Issue this command for each directory on each node. Remember to do this each time you add a new node to your HDFS cluster.

Nested snapshottable directories are not allowed, so you cannot enable snapshotting for a parent directory to automatically enable it for child directories. You must enable it for each individual directory.
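When there are several directories, generating the commands from a list reduces the chance of missing one. This sketch only prints the commands so that a Hadoop superuser can review them first; the directory names are examples.

```shell
# Print one allowSnapshot command per storage location directory.
# List each directory itself, not a shared parent, because nested
# snapshottable directories are not allowed.
allow_snapshot_commands() {
    for dir in "$@"; do
        printf 'hdfs dfsadmin -allowSnapshot %s\n' "$dir"
    done
}

allow_snapshot_commands /user/dbadmin/v_vmart_node0001 /user/dbadmin/v_vmart_node0002
```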

Additional requirements for Kerberos

If HDFS uses Kerberos, then in addition to granting the keytab principal access, you must give Vertica access to certain Hadoop configuration files. See Configuring Kerberos.

Testing the database account's ability to make HDFS directories snapshottable

After making the database account a Hadoop superuser, verify that the account can make directories snapshottable:

-

Log into the Hadoop cluster as the database account (dbadmin by default).

-

Determine a location in HDFS where the database administrator can create a directory. The /tmp directory is usually available. Create a test HDFS directory using the command:

$ hdfs dfs -mkdir /path/testdir

-

Make the test directory snapshottable using the command:

$ hdfs dfsadmin -allowSnapshot /path/testdir

The following example demonstrates creating an HDFS directory and making it snapshottable:

$ hdfs dfs -mkdir /tmp/snaptest

$ hdfs dfsadmin -allowSnapshot /tmp/snaptest

Allowing snaphot on /tmp/snaptest succeeded

4.2 - Removing HDFS storage locations

The steps to remove an HDFS storage location are similar to standard storage locations:

-

Remove any existing data from the HDFS storage location by using SET_OBJECT_STORAGE_POLICY to change each object's storage location. Alternatively, you can use CLEAR_OBJECT_STORAGE_POLICY. Because the Tuple Mover runs infrequently, set the enforce-storage-move parameter to true to make the change immediately.

-

Retire the location on each host that has the storage location defined using RETIRE_LOCATION. Set enforce-storage-move to true.

-

Drop the location on each node using DROP_LOCATION.

-

Optionally remove the snapshots and files from the HDFS directory for the storage location.

-

Perform a full database backup.

For more information about changing storage policies, changing usage, retiring locations, and dropping locations, see Managing storage locations.

Important

If you have backed up the data in the HDFS storage location you are removing, you must perform a full database backup after you remove the location. If you do not and restore the database to a backup made before you removed the location, the location's data is restored.

Removing storage location files from HDFS

Dropping an HDFS storage location does not automatically clean the HDFS directory that stored the location's files. Any snapshots of the data files created when backing up the location are also not deleted. These files consume disk space on HDFS and also prevent the directory from being reused as an HDFS storage location. Vertica cannot create a storage location in a directory that contains existing files or subdirectories.

You must log into the Hadoop cluster to delete the files from HDFS. An alternative is to use some other HDFS file management tool.

Removing backup snapshots

HDFS returns an error if you attempt to remove a directory that has snapshots:

$ hdfs dfs -rm -r -f -skipTrash /user/dbadmin/v_vmart_node0001

rm: The directory /user/dbadmin/v_vmart_node0001 cannot be deleted since

/user/dbadmin/v_vmart_node0001 is snapshottable and already has snapshots

The Vertica backup script creates snapshots of HDFS storage locations as part of the backup process. If you made backups of your HDFS storage location, you must delete the snapshots before removing the directories.

HDFS stores snapshots in a subdirectory named .snapshot. You can list the snapshots in the directory using the standard HDFS ls command:

$ hdfs dfs -ls /user/dbadmin/v_vmart_node0001/.snapshot

Found 1 items

drwxrwx--- - dbadmin supergroup 0 2014-09-02 10:13 /user/dbadmin/v_vmart_node0001/.snapshot/s20140902-101358.629

To remove snapshots, use the command:

$ hdfs dfs -deleteSnapshot directory snapshotname

The following example demonstrates the command to delete the snapshot shown in the previous example:

$ hdfs dfs -deleteSnapshot /user/dbadmin/v_vmart_node0001 s20140902-101358.629

You must delete each snapshot from the directory for each host in the cluster. After you have deleted the snapshots, you can delete the directories in the storage location.

Important

Each snapshot's name is based on a timestamp down to the millisecond. Nodes independently create their own snapshots. They do not synchronize snapshot creation, so their snapshot names differ. You must list each node's snapshot directory to learn the names of the snapshots it contains.

See Apache's HDFS Snapshot documentation for more information about managing and removing snapshots.
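Because snapshot names differ from node to node, it can be convenient to derive the delete commands from the listing itself. The following sketch reads `hdfs dfs -ls` output for a .snapshot directory and prints one deleteSnapshot command per snapshot. It assumes the listing format shown above and paths without spaces; the commands are printed, not run.

```shell
# Turn `hdfs dfs -ls <dir>/.snapshot` output (read from stdin) into deleteSnapshot commands.
# Assumes the path is the last field of each listing line and contains no spaces.
snapshot_delete_commands() {
    awk -v dir="$1" '
        # Skip lines such as "Found 1 items" whose last field is not a snapshot path.
        index($NF, dir "/.snapshot/") == 1 {
            name = substr($NF, length(dir "/.snapshot/") + 1)
            print "hdfs dfs -deleteSnapshot " dir " " name
        }'
}

printf '%s\n' \
    'Found 1 items' \
    'drwxrwx--- - dbadmin supergroup 0 2014-09-02 10:13 /user/dbadmin/v_vmart_node0001/.snapshot/s20140902-101358.629' |
    snapshot_delete_commands /user/dbadmin/v_vmart_node0001
```

Repeat the listing and the generated commands for each node's directory.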

Removing the storage location directories

You can remove the directories that held the storage location's data by either of the following methods:

-

Use an HDFS file manager to delete directories. See your Hadoop distribution's documentation to determine if it provides a file manager.

-

Log into the Hadoop Name Node using the database administrator’s account and use HDFS's rmr command to delete the directories. See Apache's File System Shell Guide for more information.

The following example uses the HDFS rmr command from the Linux command line to delete the directories left behind in the HDFS storage location directory /user/dbadmin. It uses the -skipTrash flag to force the immediate deletion of the files:

$ hdfs dfs -ls /user/dbadmin

Found 3 items

drwxrwx--- - dbadmin supergroup 0 2014-08-29 15:11 /user/dbadmin/v_vmart_node0001

drwxrwx--- - dbadmin supergroup 0 2014-08-29 15:11 /user/dbadmin/v_vmart_node0002

drwxrwx--- - dbadmin supergroup 0 2014-08-29 15:11 /user/dbadmin/v_vmart_node0003

$ hdfs dfs -rmr -skipTrash /user/dbadmin/*

Deleted /user/dbadmin/v_vmart_node0001

Deleted /user/dbadmin/v_vmart_node0002

Deleted /user/dbadmin/v_vmart_node0003

5 - Using the HCatalog Connector

The Vertica HCatalog Connector lets you access data stored in Apache's Hive data warehouse software the same way you access it within a native Vertica table.

If your files are in the Optimized Row Columnar (ORC) or Parquet format and do not use complex types, the HCatalog Connector creates an external table and uses the ORC or Parquet reader instead of using the Java SerDe. See ORC and PARQUET for more information about these readers.

The HCatalog Connector performs predicate pushdown to improve query performance. Instead of reading all data across the network to evaluate a query, the HCatalog Connector moves the evaluation of predicates closer to the data. Predicate pushdown applies to Hive partition pruning, ORC stripe pruning, and Parquet row-group pruning. The HCatalog Connector supports predicate pushdown for the following predicates: >, >=, =, <>, <=, <.
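To illustrate the idea behind partition pruning, the following conceptual sketch filters a list of Hive-style partition directories (named `key=value`) by a predicate, so that only matching partitions would be read. It is not the connector's implementation; the function name, the paths, and the restriction to integer values are assumptions made for the example.

```shell
# Conceptual partition pruning: keep only paths whose partition key satisfies the predicate.
# Usage: prune_partitions <key> <op> <integer> < list-of-paths
prune_partitions() {
    awk -F/ -v key="$1" -v op="$2" -v val="$3" '
        {
            keep = 0
            for (i = 1; i <= NF; i++) {
                # Each path component may be a key=value partition directory.
                split($i, kv, "=")
                if (kv[1] != key) continue
                v = kv[2] + 0; t = val + 0
                if ((op == ">"  && v >  t) || (op == ">=" && v >= t) ||
                    (op == "="  && v == t) || (op == "<>" && v != t) ||
                    (op == "<=" && v <= t) || (op == "<"  && v <  t)) keep = 1
            }
            if (keep) print
        }'
}

printf '%s\n' /warehouse/sales/year=2013 /warehouse/sales/year=2014 /warehouse/sales/year=2015 |
    prune_partitions year '>=' 2014
```

The six operators handled here are the ones listed above as supported for pushdown.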

5.1 - Overview

There are several Hadoop components that you need to understand to use the HCatalog connector:

-

Apache Hive lets you query data stored in a Hadoop Distributed File System (HDFS) the same way you query data stored in a relational database. Behind the scenes, Hive uses a set of serializer and deserializer (SerDe) classes to extract data from files stored in HDFS and break it into columns and rows. Each SerDe handles data files in a specific format. For example, one SerDe extracts data from comma-separated data files while another interprets data stored in JSON format.

-

Apache HCatalog is a component of the Hadoop ecosystem that makes Hive's metadata available to other Hadoop components (such as Pig).

-

HiveServer2 makes HCatalog and Hive data available via JDBC. Through it, a client can make requests to retrieve data stored in Hive, as well as information about the Hive schema. HiveServer2 can use authorization services (Sentry or Ranger). HiveServer2 can use Hive LLAP (Live Long And Process).

The Vertica HCatalog Connector lets you transparently access data that is available through HiveServer2. You use the connector to define a schema in Vertica that corresponds to a Hive database or schema. When you query data within this schema, the HCatalog Connector transparently extracts and formats the data from Hadoop into tabular data. Vertica supports authorization services and Hive LLAP.

Note

You can use the WebHCat service instead of HiveServer2, but performance is usually better with HiveServer2. Support for WebHCat is deprecated. To use WebHCat, set the HCatalogConnectorUseHiveServer2 configuration parameter to 0. See Hadoop parameters. WebHCat does not support authorization services.

HCatalog connection features

The HCatalog Connector lets you query data stored in Hive using the Vertica native SQL syntax. Some of its main features are:

-

The HCatalog Connector always reflects the current state of data stored in Hive.

-

The HCatalog Connector uses the parallel nature of both Vertica and Hadoop to process Hive data. The result is that querying data through the HCatalog Connector is often faster than querying the data directly through Hive.

-

Because Vertica performs the extraction and parsing of data, the HCatalog Connector does not significantly increase the load on your Hadoop cluster.

-

The data you query through the HCatalog Connector can be used as if it were native Vertica data. For example, you can execute a query that joins data from a table in an HCatalog schema with a native table.

HCatalog Connector considerations

There are a few things to keep in mind when using the HCatalog Connector:

-

Hive's data is stored in flat files in a distributed file system, requiring it to be read and deserialized each time it is queried. This deserialization causes Hive performance to be much slower than that of Vertica. The HCatalog Connector has to perform the same process as Hive to read the data. Therefore, querying data stored in Hive using the HCatalog Connector is much slower than querying a native Vertica table. If you need to perform extensive analysis on data stored in Hive, you should consider loading it into Vertica. Even so, Vertica optimization often makes querying data through the HCatalog Connector faster than querying it directly through Hive.

-

If Hive uses Kerberos security, the HCatalog Connector uses the querying user's credentials in queries by default. If Hive uses Sentry or Ranger to enforce security, then you must either disable this behavior in Vertica by setting EnableHCatImpersonation to 0 or grant users access to the underlying data in HDFS. (Sentry supports ACL synchronization to automatically grant access.) Alternatively, you can specify delegation tokens for data and metadata access. See Configuring security.

-

Hive supports complex data types such as lists, maps, and structs that Vertica does not support. Columns containing these data types are converted to a JSON representation of the data type and stored as a VARCHAR. See Data type conversions from Hive to Vertica.

Note

The HCatalog Connector is read-only. It cannot insert data into Hive.

5.2 - How the HCatalog Connector works

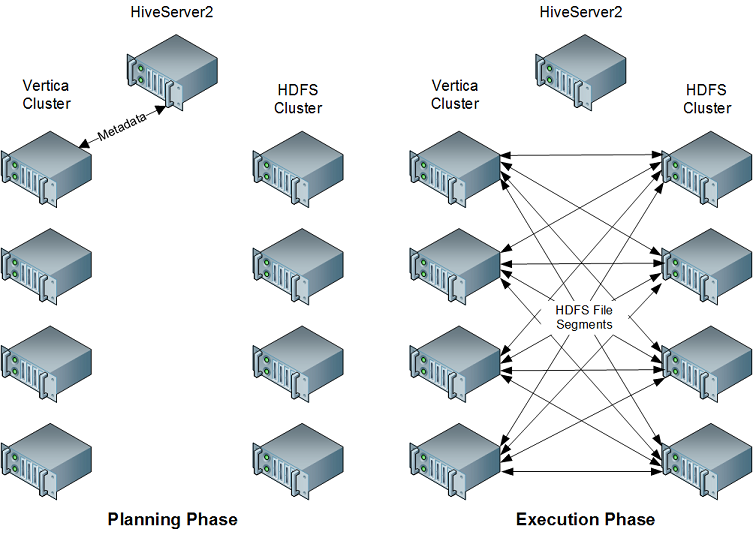

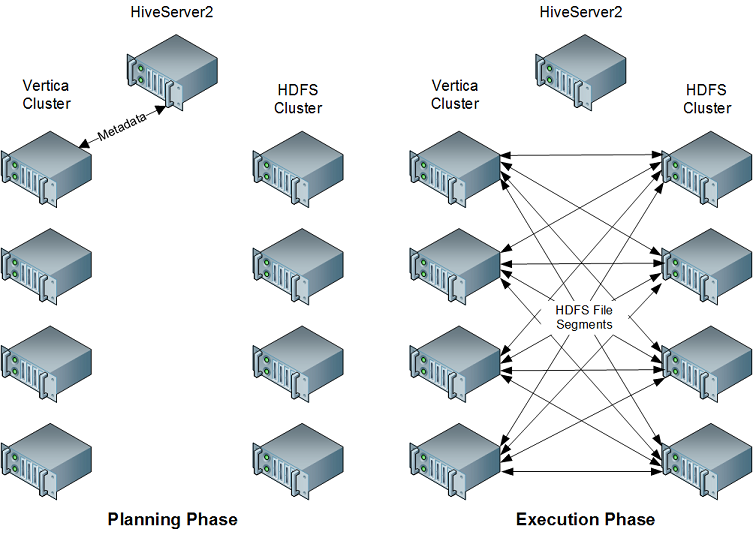

When planning a query that accesses data from a Hive table, the Vertica HCatalog Connector on the initiator node contacts HiveServer2 (or WebHCat) in your Hadoop cluster to determine if the table exists. If it does, the connector retrieves the table's metadata from the metastore database so the query planning can continue. When the query executes, all nodes in the Vertica cluster directly retrieve the data necessary for completing the query from HDFS. They then use the Hive SerDe classes to extract the data so the query can execute. When accessing data in ORC or Parquet format, the HCatalog Connector uses Vertica's internal readers for these formats instead of the Hive SerDe classes.

This approach takes advantage of the parallel nature of both Vertica and Hadoop. In addition, by performing the retrieval and extraction of data directly, the HCatalog Connector reduces the impact of the query on the Hadoop cluster.

For files in the Optimized Row Columnar (ORC) or Parquet format that do not use complex types, the HCatalog Connector creates an external table and uses the ORC or Parquet reader instead of the Java SerDe. You can direct these readers to access custom Hive partition locations if Hive used them when writing the data. By default, these extra checks for custom partition locations are turned off to improve performance.

5.3 - HCatalog Connector requirements

Before you can use the HCatalog Connector, both your Vertica and Hadoop installations must meet the following requirements.

Vertica requirements

All of the nodes in your cluster must have a Java Virtual Machine (JVM) installed. You must use the same Java version that the Hadoop cluster uses. See Installing the Java Runtime on Your Vertica Cluster.

You must also add certain libraries distributed with Hadoop and Hive to your Vertica installation directory. See Configuring Vertica for HCatalog.

Hadoop requirements

Your Hadoop cluster must meet several requirements to operate correctly with the Vertica Connector for HCatalog:

-

It must have Hive, HiveServer2, and HCatalog installed and running. See Apache's HCatalog page for more information.

-

The HiveServer2 server and all of the HDFS nodes that store HCatalog data must be directly accessible from all of the hosts in your Vertica database. Verify that any firewall separating the Hadoop cluster and the Vertica cluster will pass HiveServer2, metastore database, and HDFS traffic.

-

The data that you want to query must be in an internal or external Hive table.

-

If a table you want to query uses a non-standard SerDe, you must install the SerDe's classes on your Vertica cluster before you can query the data. See Using nonstandard SerDes.

5.4 - Installing the Java runtime on your Vertica cluster

The HCatalog Connector requires a 64-bit Java Virtual Machine (JVM). The JVM must support Java 6 or later, and must be the same version as the one installed on your Hadoop nodes.

Note

If your Vertica cluster is configured to execute User Defined Extensions (UDxs) written in Java, it already has a correctly-configured JVM installed. See Developing user-defined extensions (UDxs) for more information.

Installing Java on your Vertica cluster is a two-step process:

-

Install a Java runtime on all of the hosts in your cluster.

-

Set the JavaBinaryForUDx configuration parameter to tell Vertica the location of the Java executable.

Installing a Java runtime

For Java-based features, Vertica requires a 64-bit Java 6 (Java version 1.6) or later Java runtime. Vertica supports runtimes from either Oracle or OpenJDK. You can choose to install either the Java Runtime Environment (JRE) or Java Development Kit (JDK), since the JDK also includes the JRE.

Many Linux distributions include a package for the OpenJDK runtime. See your Linux distribution's documentation for information about installing and configuring OpenJDK.

To install the Oracle Java runtime, see the Java Standard Edition (SE) Download Page. You usually need to run the installation package as root. See the download page for instructions.

Once you have installed a JVM on each host, ensure that the java command is in the search path and calls the correct JVM by running the command:

$ java -version

This command should print something similar to:

java version "1.8.0_102"

Java(TM) SE Runtime Environment (build 1.8.0_102-b14)

Java HotSpot(TM) 64-Bit Server VM (build 25.102-b14, mixed mode)
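
Because the JVM must support Java 6 or later and match the version on your Hadoop nodes, it can help to compare major versions across hosts. The following shell sketch converts a version string like the one shown above into a major version number. The function name java_major_version is illustrative and is not part of Vertica.

```shell
# Convert a Java version string into its major version number.
# Legacy strings look like "1.8.0_102" (major 8); newer ones look like "11.0.2" (major 11).
java_major_version() {
    version=$1
    case $version in
        1.*) version=${version#1.} ;;   # drop the legacy "1." prefix
    esac
    major=${version%%[!0-9]*}           # keep only the leading digits
    printf '%s\n' "$major"
}

# Usage on a host with Java installed (java -version writes to stderr):
#   reported=$(java -version 2>&1 | awk -F'"' 'NR == 1 { print $2 }')
#   java_major_version "$reported"
```

Running the same check on a Hadoop node and on a Vertica node gives two numbers that you can compare directly.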

Note

Any previously installed Java VM on your hosts may interfere with a newly installed Java runtime. See your Linux distribution's documentation for instructions on configuring which JVM is the default. Unless absolutely required, you should uninstall any incompatible version of Java before installing the new Java runtime.

Setting the JavaBinaryForUDx configuration parameter

The JavaBinaryForUDx configuration parameter tells Vertica where to look for the JRE to execute Java UDxs. After you have installed the JRE on all of the nodes in your cluster, set this parameter to the absolute path of the Java executable. You can use the symbolic link that some Java installers create (for example /usr/bin/java). If the Java executable is in your shell search path, you can get the path of the Java executable by running the following command from the Linux command line shell:

$ which java

/usr/bin/java

If the java command is not in the shell search path, use the path to the Java executable in the directory where you installed the JRE. Suppose you installed the JRE in /usr/java/default (which is where the installation package supplied by Oracle installs the Java 1.6 JRE). In this case the Java executable is /usr/java/default/bin/java.
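
The two cases described above (java on the search path, or a known JRE directory) can be sketched as one shell function. This is a minimal sketch, not a Vertica tool: the function name find_java_binary is an assumption, and the fallback directory defaults to the /usr/java/default example used above.

```shell
# Print the Java executable path to use as the JavaBinaryForUDx value.
# $1 is an optional fallback JRE directory (defaults to /usr/java/default).
find_java_binary() {
    jre_dir=${1:-/usr/java/default}
    if found=$(command -v java 2>/dev/null) && [ -n "$found" ]; then
        printf '%s\n' "$found"              # java is on the search path
    elif [ -x "$jre_dir/bin/java" ]; then
        printf '%s\n' "$jre_dir/bin/java"   # fall back to the JRE directory
    else
        echo "no Java executable found" >&2
        return 1
    fi
}

# Usage:
#   find_java_binary                 # search path first, then /usr/java/default
#   find_java_binary /opt/my-jre     # hypothetical custom JRE directory
```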

You set the configuration parameter by executing the following statement as a database superuser:

=> ALTER DATABASE DEFAULT SET PARAMETER JavaBinaryForUDx = '/usr/bin/java';

See ALTER DATABASE for more information on setting configuration parameters.

To view the current setting of the configuration parameter, query the CONFIGURATION_PARAMETERS system table:

=> \x

Expanded display is on.

=> SELECT * FROM CONFIGURATION_PARAMETERS WHERE parameter_name = 'JavaBinaryForUDx';

-[ RECORD 1 ]-----------------+----------------------------------------------------------

node_name | ALL

parameter_name | JavaBinaryForUDx

current_value | /usr/bin/java

default_value |

change_under_support_guidance | f

change_requires_restart | f

description | Path to the java binary for executing UDx written in Java

Once you have set the configuration parameter, Vertica can find the Java executable on each node in your cluster.

Note

Since the location of the Java executable is set by a single configuration parameter for the entire cluster, you must ensure that the Java executable is installed in the same path on all of the hosts in the cluster.
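
One way to confirm that every host uses the same path is to collect the path from each host and compare the results. The following sketch assumes that you can gather one "host path" pair per line, for example over SSH. The function name java_paths_match and the host names in the usage comment are hypothetical.

```shell
# Read "host path" pairs on stdin; succeed only if every host reports
# the same, non-empty Java path.
java_paths_match() {
    awk '
        NR == 1     { first = $2 }
        $2 == ""    { print "no java path reported by " $1; bad = 1; next }
        $2 != first { print "mismatch on " $1 ": " $2; bad = 1 }
        END         { exit bad + 0 }
    '
}

# Usage, assuming passwordless SSH to hypothetical hosts:
#   for h in node01 node02 node03; do
#       printf '%s %s\n' "$h" "$(ssh "$h" 'command -v java')"
#   done | java_paths_match
```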

5.5 - Configuring Vertica for HCatalog

Before you can use the HCatalog Connector, you must add certain Hadoop and Hive libraries to your Vertica installation. You must also copy the Hadoop configuration files that specify various connection properties. Vertica uses the values in those configuration files to make its own connections to Hadoop.

You need only make these changes on one node in your cluster. After you do this you can install the HCatalog connector.

Copy Hadoop libraries and configuration files

Vertica provides a tool, hcatUtil, to collect the required files from Hadoop. This tool copies selected libraries and XML configuration files from your Hadoop cluster to your Vertica cluster. This tool might also need access to additional libraries:

-

If you plan to use Hive to query files that use Snappy compression, you need access to the Snappy native libraries, libhadoop*.so and libsnappy*.so.

-

If you plan to use Hive to query files that use LZO compression, you need access to the hadoop-lzo-*.jar and libgplcompression.so* libraries. In core-site.xml you must also edit the io.compression.codecs property to include com.hadoop.compression.lzo.LzopCodec.

-

If you plan to use a JSON SerDe with a Hive table, you need access to its library. This is the same library that you used to configure Hive; for example:

hive> add jar /home/release/json-serde-1.3-jar-with-dependencies.jar;

hive> create external table nationjson (id int,name string,rank int,text string)

ROW FORMAT SERDE 'org.openx.data.jsonserde.JsonSerDe'

LOCATION '/user/release/vt/nationjson';

-

If you are using any other libraries that are not standard across all supported Hadoop versions, you need access to those libraries.

If any of these cases applies to you, do one of the following:

-

Include the path(s) in the path you specify as the value of --hcatLibPath, or

-

Copy the file(s) to a directory already on that path.
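
For the LZO case in particular, a quick check of core-site.xml before you run hcatUtil can catch a missing codec entry. The sketch below only searches the file for the codec class name; it does not parse the XML or confirm that the name appears inside the io.compression.codecs property. The function name has_lzop_codec is illustrative.

```shell
# Succeed if the given core-site.xml mentions the LZO codec class.
# This is a plain text search, not an XML-aware check.
has_lzop_codec() {
    grep -q 'com\.hadoop\.compression\.lzo\.LzopCodec' "$1"
}

# Usage, assuming HADOOP_CONF_DIR points at your Hadoop configuration:
#   has_lzop_codec "$HADOOP_CONF_DIR/core-site.xml" || echo "LzopCodec not configured"
```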

If Vertica is not co-located on a Hadoop node, you should do the following:

-

Copy /opt/vertica/packages/hcat/tools/hcatUtil to a Hadoop node and run it there, specifying a temporary output directory. Your Hadoop, Hive, and HCatalog lib paths might be different. In newer versions of Hadoop, the HCatalog directory is usually a subdirectory under the Hive directory, and Cloudera creates a new directory for each revision of the configuration files. Use the values from your environment in the following command:

hcatUtil --copyJars --hadoopHiveHome="$HADOOP_HOME/lib;$HIVE_HOME/lib;/hcatalog/dist/share" --hadoopHiveConfPath="$HADOOP_CONF_DIR;$HIVE_CONF_DIR;$WEBHCAT_CONF_DIR" --hcatLibPath="/tmp/hadoop-files"

If you are using Hive LLAP, specify the hive2 directories.

-

Verify that all necessary files were copied:

hcatUtil --verifyJars --hcatLibPath=/tmp/hadoop-files

-

Copy that output directory (/tmp/hadoop-files, in this example) to /opt/vertica/packages/hcat/lib on the Vertica node you will connect to when installing the HCatalog connector. If you are updating a Vertica cluster to use a new Hadoop cluster (or a new version of Hadoop), first remove all JAR files in /opt/vertica/packages/hcat/lib except vertica-hcatalogudl.jar.

-

Verify that all necessary files were copied:

hcatUtil --verifyJars --hcatLibPath=/opt/vertica/packages/hcat
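
When you update a Vertica cluster to use a new Hadoop cluster, the cleanup described in the copy step (remove every JAR file except vertica-hcatalogudl.jar) can be sketched as a small shell function. The function name remove_old_hadoop_jars is an assumption. The function removes only *.jar files directly inside the directory and leaves other files, such as XML configuration files, in place.

```shell
# Remove all JAR files in the given directory except vertica-hcatalogudl.jar.
remove_old_hadoop_jars() {
    lib_dir=$1
    for jar in "$lib_dir"/*.jar; do
        # Skip the unexpanded pattern when the directory has no JAR files.
        if [ ! -e "$jar" ]; then
            continue
        fi
        # Keep the connector's own library.
        if [ "$(basename "$jar")" = "vertica-hcatalogudl.jar" ]; then
            continue
        fi
        rm -f -- "$jar"
    done
}

# Usage on the Vertica node, before copying the new files:
#   remove_old_hadoop_jars /opt/vertica/packages/hcat/lib
```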

If Vertica is co-located on some or all Hadoop nodes, you can do this in one step on a shared node. Your Hadoop, Hive, and HCatalog lib paths might be different; use the values from your environment in the following command:

hcatUtil --copyJars

--hadoopHiveHome="$HADOOP_HOME/lib;$HIVE_HOME/lib;/hcatalog/dist/share"