Vertica provides the ability to add, remove, and replace nodes on a live cluster that is actively processing queries. This ability lets you scale the database without interrupting users.

This is the multi-page printable view of this section. Click here to print.

Managing nodes

- 1: Stop Vertica on a node

- 2: Restart Vertica on a node

- 3: Setting node type

- 4: Active standby nodes

- 4.1: Creating an active standby node

- 4.2: Replace a node with an active standby node

- 4.3: Revert active standby nodes

- 5: Large cluster

- 5.1: Planning a large cluster

- 5.2: Enabling large cluster

- 5.3: Changing the number of control nodes and realigning

- 5.4: Monitoring large clusters

- 6: Multiple databases on a cluster

- 7: Fault groups

- 7.1: About the fault group script

- 7.2: Creating a fault group input file

- 7.3: Creating fault groups

- 7.4: Monitoring fault groups

- 7.5: Dropping fault groups

- 8: Terrace routing

- 9: Elastic cluster

- 9.1: Scaling factor

- 9.2: Viewing scaling factor settings

- 9.3: Setting the scaling factor

- 9.4: Local data segmentation

- 9.5: Elastic cluster best practices

- 10: Adding nodes

- 10.1: Adding hosts to a cluster

- 10.2: Adding nodes to a database

- 10.3: Add nodes to a cluster in AWS

- 11: Removing nodes

- 11.1: Automatic eviction of unhealthy nodes

- 11.2: Lowering K-Safety to enable node removal

- 11.3: Removing nodes from a database

- 11.4: Removing hosts from a cluster

- 11.5: Remove nodes from an AWS cluster

- 12: Replacing nodes

- 12.1: Replacing a host using the same name and IP address

- 12.2: Replacing a failed node using a node with a different IP address

- 12.3: Replacing a functioning node using a different name and IP address

- 12.4: Using the administration tools to replace nodes

- 13: Rebalancing data across nodes

- 14: Redistributing configuration files to nodes

- 15: Stopping and starting nodes on MC

- 16: Upgrading your operating system on nodes in your Vertica cluster

- 17: Reconfiguring node messaging

- 17.1: re_ip command

- 17.2: Restarting a node with new host IPs

- 18: Adjusting Spread Daemon timeouts for virtual environments

1 - Stop Vertica on a node

In some cases, you need to take down a node to perform maintenance tasks, or upgrade hardware. You can do this with one of the following:

-

Command line admintools stop_node

Important

Before removing a node from a cluster, check that the cluster has the minimum number of nodes required to comply with K-safety. If necessary, temporarily lower the database K-safety level.Administration tools

-

Run Administration Tools, select Advanced Menu, and click OK.

-

Select Stop Vertica on Host and click OK.

-

Choose the host that you want to stop and click OK.

-

Return to the Main Menu, select View Database Cluster State, and click OK. The host you previously stopped should appear DOWN.

-

You can now perform maintenance.

See Restart Vertica on a Node for details about restarting Vertica on a node.

Command line

You can use the command line tool stop_node to stop Vertica on one or more nodes. stop_node takes one or more node IP addresses as arguments. For example, the following command stops Vertica on two nodes:

$ admintools -t stop_node -s 192.0.2.1,192.0.2.2

2 - Restart Vertica on a node

After stopping a node to perform maintenance tasks such as upgrading hardware, you need to restart the node so it can reconnect with the database cluster.

-

Run Administration Tools. From the Main Menu select Restart Vertica on Host and click OK.

-

Select the database and click OK.

-

Select the host that you want to restart and click OK.

Note

This process may take a few moments. -

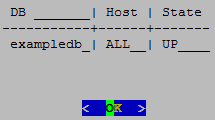

Return to the Main Menu, select View Database Cluster State, and click OK. The host you restarted now appears as UP, as shown.

3 - Setting node type

When you create a node, Vertica automatically sets its type to PERMANENT. This enables Vertica to use this node to store data. You can change a node's type with

ALTER NODE, to one of the following:

-

PERMANENT: (default): A node that stores data.

-

EPHEMERAL: A node that is in transition from one type to another—typically, from PERMANENT to either STANDBY or EXECUTE.

-

STANDBY: A node that is reserved to replace any node when it goes down. A standby node stores no segments or data until it is called to replace a down node. When used as a replacement node, Vertica changes its type to PERMANENT. For more information, see Active standby nodes.

-

EXECUTE: A node that is reserved for computation purposes only. An execute node contains no segments or data.

Note

STANDBY and EXECUTE node types are supported only in Enterprise Mode.4 - Active standby nodes

An active standby node exists is a node in an Enterprise Mode database that is available to replace any failed node. Unlike permanent Vertica nodes, an standby node does not perform computations or contain data. If a permanent node fails, an active standby node can replace the failed node, after the failed node exceeds the failover time limit. After replacing the failed node, the active standby node contains the projections and performs all calculations of the node it replaced.

In this section

4.1 - Creating an active standby node

You can create active standby nodes in an Enterprise Mode database at the same time that you create the database, or later.

Note

When you create an active standby node, be sure to add any necessary storage locations. For more information, refer to Adding Storage Locations.Creating an active standby node in a new database

-

Create a database, including the nodes that you intend to use as active standby nodes.

-

Using vsql, connect to a node other than the node that you want to use as an active standby node.

-

Use ALTER NODE to convert the node from a permanent node to an active standby node. For example:

=> ALTER NODE v_mart_node5 STANDBY;After you issue the ALTER NODE statement, the affected node goes down and restarts as an active standby node.

Creating an active standby node in an existing database

When you create a node to be used as an active standby node, change the new node to ephemeral status as quickly as possible to prevent the cluster from moving data to it.

-

Important

Do not rebalance the database at this stage. -

Using vsql, connect to any other node.

-

Use ALTER NODE to convert the new node from a permanent node to an ephemeral node. For example:

=> ALTER NODE v_mart_node5 EPHEMERAL; -

Rebalance the cluster to remove all data from the ephemeral node.

-

Use ALTER NODE on the ephemeral node to convert it to an active standby node. For example:

=> ALTER NODE v_mart_node5 STANDBY;

4.2 - Replace a node with an active standby node

A failed node on an Enterprise Mode database can be replaced with an active standby node automatically, or manually.

Important

A node must be down before it can be replaced with an active standby node. Attempts to replace a node that is up return with an error.Automatic replacement

You can configure automatic replacement of failed nodes with parameter FailoverToStandbyAfter. If enabled, this parameter specifies the length of time that an active standby node waits before taking the place of a failed node. If possible, Vertica selects a standby node from the same fault group as the failed node. Otherwise, Vertica randomly selects an available active standby node.

Manual replacement

As an administrator, you can manually replace a failed node with ALTER NODE:

-

Connect to the database with Administration Tools or vsql.

-

Replace the node with ALTER NODE...REPLACE. The REPLACE option can specify a standby node. If REPLACE is unqualified, then Vertica selects a standby node from the same fault group as the failed node, if one is available; otherwise, it randomly selects an available active standby node.

4.3 - Revert active standby nodes

When a down node in an Enterprise Mode database is ready for reactivation, you can restore it by reverting its replacement to standby status. You can perform this operation on individual nodes or the entire database, with ALTER NODE and ALTER DATABASE, respectively:

-

Connect to the database with Administration Tools or via vsql.

-

Revert the standby nodes.

-

Individually with ALTER NODE:

ALTER NODE node-name RESET; -

Collectively across the database cluster with ALTER DATABASE:

ALTER DATABASE DEFAULT RESET STANDBY;

-

If a down node cannot resume operation, Vertica ignores the reset request and leaves the standby node in place.

5 - Large cluster

Vertica uses the Spread service to broadcast control messages between database nodes. This service can limit the growth of a Vertica database cluster. As you increase the number of cluster nodes, the load on the Spread service also increases as more participants exchange messages. This increased load can slow overall cluster performance. Also, network addressing limits the maximum number of participants in the Spread service to 120 (and often far less). In this case, you can use large cluster to overcome these Spread limitations.

When large cluster is enabled, a subset of cluster nodes, called control nodes, exchange messages using the Spread service. Other nodes in the cluster are assigned to one of these control nodes, and depend on them for cluster-wide communication. Each control node passes messages from the Spread service to its dependent nodes. When a dependent node needs to broadcast a message to other nodes in the cluster, it passes the message to its control node, which in turn sends the message out to its other dependent nodes and the Spread service.

By setting up dependencies between control nodes and other nodes, you can grow the total number of database nodes, and remain in compliance with the Spread limit of 120 nodes.

Note

Technically, when large cluster is disabled, all of the nodes in the cluster are control nodes. In this case, all nodes connect to Spread. When large cluster is enabled, some nodes become dependent on control nodes.A downside of the large cluster feature is that if a control node fails, its dependent nodes are cut off from the rest of the database cluster. These nodes cannot participate in database activities, and Vertica considers them to be down as well. When the control node recovers, it re-establishes communication between its dependent nodes and the database, so all of the nodes rejoin the cluster.

Note

The Spread service demon runs as an independent process on the control node host. It is not part of the Vertica process. If the Vertica process goes down on the node—for example, you use admintools to stop the Vertica process on the host—Spread continues to run. As long as the Spread demon runs on the control node, the node's dependents can communicate with the database cluster and participate in database activity. Normally, the control node only goes down if the node's host has an issue—or example, you shut it down, it becomes disconnected from the network, or a hardware failure occurs.Large cluster and database growth

When your database has large cluster enabled, Vertica decides whether to make a newly added node into a control or a dependent node as follows:

-

In Enterprise Mode, if the number of control nodes configured for the database cluster is greater than the current number of nodes it contains, Vertica makes the new node a control node. In Eon Mode, the number of control nodes is set at the subcluster level. If the number of control nodes set for the subcluster containing the new node is less than this setting, Vertica makes the new node a control node.

-

If the Enterprise Mode cluster or Eon Mode subcluster has reached its limit on control nodes, a new node becomes a dependent of an existing control node.

When a newly-added node is a dependent node, Vertica automatically assigns it to a control node. Which control node it chooses is guided by the database mode:

-

Enterprise Mode database: Vertica assigns the new node to the control node with the least number of dependents. If you created fault groups in your database, Vertica chooses a control node in the same fault group as the new node. This feature lets you use fault groups to organize control nodes and their dependents to reflect the physical layout of the underlying host hardware. For example, you might want dependent nodes to be in the same rack as their control nodes. Otherwise, a failure that affects the entire rack (such as a power supply failure) will not only cause nodes in the rack to go down, but also nodes in other racks whose control node is in the affected rack. See Fault groups for more information.

-

Eon Mode database: Vertica always adds new nodes to a subcluster. Vertica assigns the new node to the control node with the fewest dependent nodes in that subcluster. Every subcluster in an Eon Mode database with large cluster enabled has at least one control node. Keeping dependent nodes in the same subcluster as their control node maintains subcluster isolation.

Important

In versions of Vertica prior to 10.0.1, nodes in an Eon Mode database with large cluster enabled were not necessarily assigned a control node in their subcluster. If you have upgraded your Eon Mode database from a version of Vertica earlier than 10.0.1 and have large cluster enabled, realign the control nodes in your database. This process reassigns dependent nodes and fixes any cross-subcluster control node dependencies. See Realigning Control Nodes and Reloading Spread for more information.Spread's upper limit of 120 participants can cause errors when adding a subcluster to an Eon Mode database. If your database cluster has 120 control nodes, attempting to create a subcluster fails with an error. Every subcluster must have at least one control node. When your cluster has 120 control nodes , Vertica cannot create a control node for the new subcluster. If this error occurs, you must reduce the number of control nodes in your database cluster before adding a subcluster.

When to enable large cluster

Vertica automatically enables large cluster in two cases:

-

The database cluster contains 120 or more nodes. This is true for both Enterprise Mode and Eon Mode.

-

You create an Eon Mode subcluster (either a primary subcluster or a secondary subcluster) with an initial node count of 16 or more.

Vertica does not automatically enable large cluster if you expand an existing subcluster to 16 or more nodes by adding nodes to it.

Note

You can prevent Vertica from automatically enabling large cluster when you create a subcluster with 16 or more nodes by setting the control-set-size parameter to -1. See Creating subclusters for details.

You can choose to manually enable large cluster mode before Vertica automatically enables it. Your best practice is to enable large cluster when your database cluster size reaches a threshold:

-

For cloud-based databases, enable large cluster when the cluster contains 16 or more nodes. In a cloud environment, your database uses point-to-point network communications. Spread scales poorly in point-to-point communications mode. Enabling large cluster when the database cluster reaches 16 nodes helps limit the impact caused by Spread being in point-to-point mode.

-

For on-premises databases, enable large cluster when the cluster reaches 50 to 80 nodes. Spread scales better in an on-premises environment. However, by the time the cluster size reaches 50 to 80 nodes, Spread may begin exhibiting performance issues.

In either cloud or on-premises environments, enable large cluster if you begin to notice Spread-related performance issues. Symptoms of Spread performance issues include:

-

The load on the spread service begins to cause performance issues. Because Vertica uses Spread for cluster-wide control messages, Spread performance issues can adversely affect database performance. This is particularly true for cloud-based databases, where Spread performance problems becomes a bottleneck sooner, due to the nature of network broadcasting in the cloud infrastructure. In on-premises databases, broadcast messages are usually less of a concern because messages usually remain within the local subnet. Even so, eventually, Spread usually becomes a bottleneck before Vertica automatically enables large cluster automatically when the cluster reaches 120 nodes.

-

The compressed list of addresses in your cluster is too large to fit in a maximum transmission unit (MTU) packet (1478 bytes). The MTU packet has to contain all of the addresses for the nodes participating in the Spread service. Under ideal circumstances (when your nodes have the IP addresses 1.1.1.1, 1.1.1.2 and so on) 120 addresses can fit in this packet. This is why Vertica automatically enables large cluster if your database cluster reaches 120 nodes. In practice, the compressed list of IP addresses will reach the MTU packet size limit at 50 to 80 nodes.

5.1 - Planning a large cluster

There are two factors you should consider when planning to expand your database cluster to the point that it needs to use large cluster:

-

How many control nodes should your database cluster have?

-

How should those control nodes be distributed?

Determining the number of control nodes

When you manually enable large cluster or add enough nodes to trigger Vertica to enable it automatically, a subset of the cluster nodes become control nodes. In subclusters with fewer than 16 nodes, all nodes are control nodes. In many cases, you can set the number of control nodes to the square root of the total number of nodes in the entire Enterprise Mode cluster, or in Eon Mode subclusters with more than 16 nodes. However, this formula for calculating the number of control is not guaranteed to always meet your requirements.

When choosing the number of control nodes in a database cluster, you must balance two competing considerations:

-

If a control node fails or is shut down, all nodes that depend on it are cut off from the database. They are also down until the control node rejoins the database. You can reduce the impact of a control node failure by increasing the number of control nodes in your cluster.

-

The more control nodes in your cluster, the greater the load on the spread service. In cloud environments, increased complexity of the network environment broadcast can contribute to high latency. This latency can cause messages sent over the spread service to take longer to reach all of the nodes in the cluster.

In a cloud environment, experience has shown that 16 control nodes balances the needs of reliability and performance. In an Eon Mode database, you must have at least one control node per subcluster. Therefore, if you have more than 16 subclusters, you must have more than 16 control nodes.

In an Eon Mode database, whether on-premises or in the cloud, consider adding more control nodes to your primary subclusters than to secondary subclusters. Only nodes in primary subclusters are responsible for maintaining K-safety in an Eon Mode database. Therefore, a control node failure in a primary subcluster can have greater impact on your database than a control node failure in a secondary subcluster.

In an on-premises Enterprise Mode database, consider the physical layout of the hosts running your database when choosing the number of control nodes. If your hosts are spread across multiple server racks, you want to have enough control nodes to distribute them across the racks. Distributing the control nodes helps ensure reliability in the case of a failure that involves the entire rack (such as a power supply or network switch failure). You can configure your database so no node depends on a control node that is in a separate rack. Limiting dependency to within a rack prevents a failure that affects an entire rack from causing additional node loss outside the rack due to control node loss.

Selecting the number of control nodes based on the physical layout also lets you reduce network traffic across switches. By having dependent nodes on the same racks as their control nodes, the communications between them remain in the rack, rather that traversing a network switch.

You might need to increase the number of control nodes to evenly distribute them across your racks. For example, on-premises Enterprise Mode database has 64 total nodes, spread across three racks. The square root of the number of nodes yields 8 control nodes for this cluster. However, you cannot evenly distribute eight control nodes among the three racks. Instead, you can have 9 control nodes and evenly distribute three control nodes per rack.

Influencing control node placement

After you determine the number of nodes for your cluster, you need to determine how to distribute them among the cluster nodes. Vertica chooses which nodes become control nodes. You can influence how Vertica chooses the control nodes and which nodes become their dependents. The exact process you use depends on your database's mode:

-

Enterprise Mode on-premises database: Define fault groups to influence control node placement. Dependent nodes are always in the same fault group as their control node. You usually define fault groups that reflect the physical layout of the hosts running your database. For example, you usually define one or more fault groups for the nodes in a single rack of servers. When the fault groups reflect your physical layout, Vertica places control nodes and dependents in a way that can limit the impact of rack failures. See Fault groups for more information.

-

Eon Mode database: Use subclusters to control the placement of control nodes. Each subcluster must have at least one control node. Dependent nodes are always in the same subcluster as their control nodes. You can set the number of control nodes for each subcluster. Doing so lets you assign more control nodes to primary subclusters, where it's important to minimize the impact of a control node failure.

How Vertica chooses a default number of control nodes

Vertica can automatically choose the number of control nodes in the entire cluster (when in Enterprise Mode) or for a subcluster (when in Eon Mode). It sets a default value in these circumstances:

-

When you pass the

defaultkeyword to the--large-clusteroption of theinstall_verticascript (see Enable Large Cluster When Installing Vertica). -

Vertica automatically enables large cluster when your database cluster grows to 120 or more nodes.

-

Vertica automatically enables large cluster for an Eon Mode subcluster if you create it with more than 16 nodes. Note that Vertica does not enable large cluster on a subcluster you expand past the 16 node limit. It only enables large clusters that start out larger than 16 nodes.

The number of control nodes Vertica chooses depends on what triggered Vertica to set the value.

If you pass the --large-cluster default option to the

install_vertica script, Vertica sets the number of control nodes to the square root of the number of nodes in the initial cluster.

If your database cluster reaches 120 nodes, Vertica enables large cluster by making any newly-added nodes into dependents. The default value for the limit on the number of control nodes is 120. When you reach this limit, any newly-added nodes are added as dependents. For example, suppose you have a 115 node Enterprise Mode database cluster where you have not manually enabled large cluster. If you add 10 nodes to this cluster, Vertica adds 5 of the nodes as control nodes (bringing you up to the 120-node limit) and the other 5 nodes as dependents.

Important

You should manually enable large cluster before your database reaches 120 nodes.In an Eon Mode database, each subcluster has its own setting for the number of control nodes. Vertica only automatically sets the number of control nodes when you create a subcluster with more than 16 nodes initially. When this occurs, Vertica sets the number of control nodes for the subcluster to the square root of the number of nodes in the subcluster.

For example, suppose you add a new subcluster with 25 nodes in it. This subcluster starts with more than the 16 node limit, so Vertica sets the number of control nodes for subcluster to 5 (which is the square root of 25). Five of the nodes are added as control nodes, and the remaining 20 are added as dependents of those five nodes.

Even though each subcluster has its own setting for the number of control nodes, an Eon Mode database cluster still has the 120 node limit on the total number of control nodes that it can have.

5.2 - Enabling large cluster

Vertica enables the large cluster feature automatically when:

-

The total number of nodes in the database cluster exceeds 120.

-

You create an Eon Mode subcluster with more than 16 nodes.

In most cases, you should consider manually enabling large cluster before your cluster size reaches either of these thresholds. See Planning a large cluster for guidance on when to enable large cluster.

You can enable large cluster on a new Vertica database, or on an existing database.

Enable large cluster when installing Vertica

You can enable large cluster when installing Vertica onto a new database cluster. This option is useful if you know from the beginning that your database will benefit from large cluster.

The install_vertica script's

--large-cluster argument enables large cluster during installation. It takes a single integer value between 1 and 120 that specifies the number of control nodes to create in the new database cluster. Alternatively, this option can take the literal argument default. In this case, Vertica enables large cluster mode and sets the number of control nodes to the square root of the number nodes you provide in the

--hosts argument. For example, if --hosts specifies 25 hosts and --large-cluster is set to default, the install script creates a database cluster with 5 control nodes.

The --large-cluster argument has a slightly different effect depending on the database mode you choose when creating your database:

-

Enterprise Mode:

--large-clustersets the total number of control nodes for the entire database cluster. -

Eon Mode :

--large-clustersets the number of control nodes in the initial default subcluster. This setting has no effect on subclusters that you create later.

Note

You cannot use --large-cluster to set the number of control nodes in your initial database to be higher than the number of you pass in the --hosts argument. The installer sets the number of control nodes to whichever is the lower value: the value you pass to the --large-cluster option or the number of hosts in the --hosts option.

You can set the number of control nodes to be higher than the number of nodes currently in an existing database, with the meta-function SET_CONTROL_SET_SIZE function. You choose to set a higher number to preallocate control nodes when planning for future expansion. For details, see Changing the number of control nodes and realigning.

After the installation process completes, use the Administration tools or the [%=Vertica.MC%] to create a database. See Create an empty database for details.

If your database is on-premises and running in Enterprise Mode, you usually want to define fault groups that reflect the physical layout of your hosts. They let you define which hosts are in the same server racks, and are dependent on the same infrastructure (such power supplies and network switches). With this knowledge, Vertica can realign the control nodes to make your database better able to cope with hardware failures. See Fault groups for more information.

After creating a database, any nodes that you add are, by default, dependent nodes. You can change the number of control nodes in the database with the meta-function SET_CONTROL_SET_SIZE.

Enable large cluster in an existing database

You can manually enable large cluster in an existing database. You usually choose to enable large cluster manually before your database reaches the point where Vertica automatically enables it. See When To Enable Large Cluster for an explanation of when you should consider enabling large cluster.

Use the meta-function SET_CONTROL_SET_SIZE to enable large cluster and set the number of control nodes. You pass this function an integer value that sets the number of control nodes in the entire Enterprise Mode cluster, or in an Eon Mode subcluster.

5.3 - Changing the number of control nodes and realigning

You can change the number of control nodes in the entire database cluster in Enterprise Mode, or the number of control nodes in a subcluster in Eon Mode. You may choose to change the number of control nodes in a cluster or subcluster to reduce the impact of control node loss on your database. See Planning a large cluster to learn more about when you should change the number of control nodes in your database.

You change the number of control nodes by calling the meta-function SET_CONTROL_SET_SIZE. If large cluster was not enabled before the call to SET_CONTROL_SET_SIZE, the function enables large cluster in your database. See Enabling large cluster for more information.

When you call SET_CONTROL_SET_SIZE in an Enterprise Mode database, it sets the number of control nodes in the entire database cluster. In an Eon Mode database, you must supply SET_CONTROL_SET_SIZE with the name of a subcluster in addition to the number of control nodes. The function sets the number of control nodes for that subcluster. Other subclusters in the database cluster are unaffected by this call.

Before changing the number of control nodes in an Eon Mode subcluster, verify that the subcluster is running. Changing the number of control nodes of a subcluster while it is down can cause configuration issues that prevent nodes in the subcluster from starting.

Note

You can set the number of control nodes to a value that is higher than the number of nodes currently in the cluster or subcluster. When the number of control nodes is higher than the current node count, newly-added nodes become control nodes until the number of nodes in the cluster or subcluster reaches the number control nodes you set.

You may choose to set the number of control nodes higher than the current node count to plan for future expansion. For example, suppose you have a 4-node subcluster in an Eon Mode database that you plan to expand in the future. You determine that you want limit the number of control nodes in this cluster to 8, even if you expand it beyond that size. In this case, you can choose to set the control node size for the subcluster to 8 now. As you add new nodes to the subcluster, they become control nodes until the size of the subcluster reaches 8. After that point, Vertica assigns newly-added nodes as a dependent of an existing control node in the subcluster.

Realigning control nodes and reloading spread

After you call the SET_CONTROL_SET_SIZE function, there are several additional steps you must take before the new setting takes effect.

Important

Follow these steps if you have upgraded your large-cluster enabled Eon Mode database from a version prior to 10.0.1. Earlier versions of Vertica did not restrict control node assignments to be within the same subcluster. When you realign the control nodes after an upgrade, Vertica configures each subcluster to have at least one control node, and assigns nodes to a control node in their own subcluster.-

Call the REALIGN_CONTROL_NODES function. This function tells Vertica to re-evaluate the assignment of control nodes and their dependents in your cluster or subcluster. When calling this function in an Eon Mode database, you must supply the name of the subcluster where you changed the control node settings.

-

Call the RELOAD_SPREAD function. This function updates the control node assignment information in configuration files and triggers Spread to reload.

-

Restart the nodes affected by the change in control nodes. In an Enterprise Mode database, you must restart the entire database to ensure all nodes have updated configuration information. In Eon Mode, restart the subcluster or subclusters affected by your changes. You must restart the entire Eon Mode database if you changed a critical subcluster (such as the only primary subcluster).

Note

You do not need to restart nodes if the earlier steps didn't change control node assignments. This case usually only happens when you set the number of control nodes in an Eon Mode subcluster to higher than the subcluster's current node count, and all nodes in the subcluster are already control nodes. In this case, no control nodes are added or removed, so node dependencies do not change. Because the dependencies did not change, the nodes do not need to reload the Spread configuration. -

In an Enterprise Mode database, call START_REBALANCE_CLUSTER to rebalance the cluster. This process improves your database's fault tolerance by shifting buddy projection assignments to limit the impact of a control node failure. You do not need to take this step in an Eon Mode database.

Enterprise Mode example

The following example makes 4 out of the 8 nodes in an Enterprise Mode database into control nodes. It queries the LARGE_CLUSTER_CONFIGURATION_STATUS system table which shows control node assignments for each node in the database. At the start, all nodes are their own control nodes. See Monitoring large clusters for more information the system tables associated with large cluster.

=> SELECT * FROM V_CATALOG.LARGE_CLUSTER_CONFIGURATION_STATUS;

node_name | spread_host_name | control_node_name

------------------+------------------+-------------------

v_vmart_node0001 | v_vmart_node0001 | v_vmart_node0001

v_vmart_node0002 | v_vmart_node0002 | v_vmart_node0002

v_vmart_node0003 | v_vmart_node0003 | v_vmart_node0003

v_vmart_node0004 | v_vmart_node0004 | v_vmart_node0004

v_vmart_node0005 | v_vmart_node0005 | v_vmart_node0005

v_vmart_node0006 | v_vmart_node0006 | v_vmart_node0006

v_vmart_node0007 | v_vmart_node0007 | v_vmart_node0007

v_vmart_node0008 | v_vmart_node0008 | v_vmart_node0008

(8 rows)

=> SELECT SET_CONTROL_SET_SIZE(4);

SET_CONTROL_SET_SIZE

----------------------

Control size set

(1 row)

=> SELECT REALIGN_CONTROL_NODES();

REALIGN_CONTROL_NODES

---------------------------------------------------------------

The new control node assignments can be viewed in vs_nodes.

Check vs_cluster_layout to see the proposed new layout. Reboot

all the nodes and call rebalance_cluster now

(1 row)

=> SELECT RELOAD_SPREAD(true);

RELOAD_SPREAD

---------------

Reloaded

(1 row)

=> SELECT SHUTDOWN();

After restarting the database, the final step is to rebalance the cluster and query the LARGE_CLUSTER_CONFIGURATION_STATUS table to see the current control node assignments:

=> SELECT START_REBALANCE_CLUSTER();

START_REBALANCE_CLUSTER

-------------------------

REBALANCING

(1 row)

=> SELECT * FROM V_CATALOG.LARGE_CLUSTER_CONFIGURATION_STATUS;

node_name | spread_host_name | control_node_name

------------------+------------------+-------------------

v_vmart_node0001 | v_vmart_node0001 | v_vmart_node0001

v_vmart_node0002 | v_vmart_node0002 | v_vmart_node0002

v_vmart_node0003 | v_vmart_node0003 | v_vmart_node0003

v_vmart_node0004 | v_vmart_node0004 | v_vmart_node0004

v_vmart_node0005 | v_vmart_node0001 | v_vmart_node0001

v_vmart_node0006 | v_vmart_node0002 | v_vmart_node0002

v_vmart_node0007 | v_vmart_node0003 | v_vmart_node0003

v_vmart_node0008 | v_vmart_node0004 | v_vmart_node0004

(8 rows)

Eon Mode example

The following example configures 4 control nodes in an 8-node secondary subcluster named analytics. The primary subcluster is not changed. The primary differences between this example and the previous Enterprise Mode example is the need to specify a subcluster when calling SET_CONTROL_SET_SIZE, not having to restart the entire database, and not having to call START_REBALANCE_CLUSTER.

=> SELECT * FROM V_CATALOG.LARGE_CLUSTER_CONFIGURATION_STATUS;

node_name | spread_host_name | control_node_name

----------------------+----------------------+----------------------

v_verticadb_node0001 | v_verticadb_node0001 | v_verticadb_node0001

v_verticadb_node0002 | v_verticadb_node0002 | v_verticadb_node0002

v_verticadb_node0003 | v_verticadb_node0003 | v_verticadb_node0003

v_verticadb_node0004 | v_verticadb_node0004 | v_verticadb_node0004

v_verticadb_node0005 | v_verticadb_node0005 | v_verticadb_node0005

v_verticadb_node0006 | v_verticadb_node0006 | v_verticadb_node0006

v_verticadb_node0007 | v_verticadb_node0007 | v_verticadb_node0007

v_verticadb_node0008 | v_verticadb_node0008 | v_verticadb_node0008

v_verticadb_node0009 | v_verticadb_node0009 | v_verticadb_node0009

v_verticadb_node0010 | v_verticadb_node0010 | v_verticadb_node0010

v_verticadb_node0011 | v_verticadb_node0011 | v_verticadb_node0011

(11 rows)

=> SELECT subcluster_name,node_name,is_primary,control_set_size FROM

V_CATALOG.SUBCLUSTERS;

subcluster_name | node_name | is_primary | control_set_size

--------------------+----------------------+------------+------------------

default_subcluster | v_verticadb_node0001 | t | -1

default_subcluster | v_verticadb_node0002 | t | -1

default_subcluster | v_verticadb_node0003 | t | -1

analytics | v_verticadb_node0004 | f | -1

analytics | v_verticadb_node0005 | f | -1

analytics | v_verticadb_node0006 | f | -1

analytics | v_verticadb_node0007 | f | -1

analytics | v_verticadb_node0008 | f | -1

analytics | v_verticadb_node0009 | f | -1

analytics | v_verticadb_node0010 | f | -1

analytics | v_verticadb_node0011 | f | -1

(11 rows)

=> SELECT SET_CONTROL_SET_SIZE('analytics',4);

SET_CONTROL_SET_SIZE

----------------------

Control size set

(1 row)

=> SELECT REALIGN_CONTROL_NODES('analytics');

REALIGN_CONTROL_NODES

-----------------------------------------------------------------------------

The new control node assignments can be viewed in vs_nodes. Call

reload_spread(true). If the subcluster is critical, restart the database.

Otherwise, restart the subcluster

(1 row)

=> SELECT RELOAD_SPREAD(true);

RELOAD_SPREAD

---------------

Reloaded

(1 row)

At this point, the analytics subcluster needs to restart. You have several options to restart it. See Starting and stopping subclusters for details. This example uses the admintools command line to stop and start the subcluster.

$ admintools -t stop_subcluster -d verticadb -c analytics -p password

*** Forcing subcluster shutdown ***

Verifying subcluster 'analytics'

Node 'v_verticadb_node0004' will shutdown

Node 'v_verticadb_node0005' will shutdown

Node 'v_verticadb_node0006' will shutdown

Node 'v_verticadb_node0007' will shutdown

Node 'v_verticadb_node0008' will shutdown

Node 'v_verticadb_node0009' will shutdown

Node 'v_verticadb_node0010' will shutdown

Node 'v_verticadb_node0011' will shutdown

Shutdown subcluster command successfully sent to the database

$ admintools -t restart_subcluster -d verticadb -c analytics -p password

*** Restarting subcluster for database verticadb ***

Restarting host [10.11.12.19] with catalog [v_verticadb_node0004_catalog]

Restarting host [10.11.12.196] with catalog [v_verticadb_node0005_catalog]

Restarting host [10.11.12.51] with catalog [v_verticadb_node0006_catalog]

Restarting host [10.11.12.236] with catalog [v_verticadb_node0007_catalog]

Restarting host [10.11.12.103] with catalog [v_verticadb_node0008_catalog]

Restarting host [10.11.12.185] with catalog [v_verticadb_node0009_catalog]

Restarting host [10.11.12.80] with catalog [v_verticadb_node0010_catalog]

Restarting host [10.11.12.47] with catalog [v_verticadb_node0011_catalog]

Issuing multi-node restart

Starting nodes:

v_verticadb_node0004 (10.11.12.19) [CONTROL]

v_verticadb_node0005 (10.11.12.196) [CONTROL]

v_verticadb_node0006 (10.11.12.51) [CONTROL]

v_verticadb_node0007 (10.11.12.236) [CONTROL]

v_verticadb_node0008 (10.11.12.103)

v_verticadb_node0009 (10.11.12.185)

v_verticadb_node0010 (10.11.12.80)

v_verticadb_node0011 (10.11.12.47)

Starting Vertica on all nodes. Please wait, databases with a large catalog may take a while to initialize.

Node Status: v_verticadb_node0004: (DOWN) v_verticadb_node0005: (DOWN) v_verticadb_node0006: (DOWN)

v_verticadb_node0007: (DOWN) v_verticadb_node0008: (DOWN) v_verticadb_node0009: (DOWN)

v_verticadb_node0010: (DOWN) v_verticadb_node0011: (DOWN)

Node Status: v_verticadb_node0004: (DOWN) v_verticadb_node0005: (DOWN) v_verticadb_node0006: (DOWN)

v_verticadb_node0007: (DOWN) v_verticadb_node0008: (DOWN) v_verticadb_node0009: (DOWN)

v_verticadb_node0010: (DOWN) v_verticadb_node0011: (DOWN)

Node Status: v_verticadb_node0004: (INITIALIZING) v_verticadb_node0005: (INITIALIZING) v_verticadb_node0006:

(INITIALIZING) v_verticadb_node0007: (INITIALIZING) v_verticadb_node0008: (INITIALIZING)

v_verticadb_node0009: (INITIALIZING) v_verticadb_node0010: (INITIALIZING) v_verticadb_node0011: (INITIALIZING)

Node Status: v_verticadb_node0004: (UP) v_verticadb_node0005: (UP) v_verticadb_node0006: (UP)

v_verticadb_node0007: (UP) v_verticadb_node0008: (UP) v_verticadb_node0009: (UP)

v_verticadb_node0010: (UP) v_verticadb_node0011: (UP)

Syncing catalog on verticadb with 2000 attempts.

Once the subcluster restarts, you can query the system tables to see the control node configuration:

=> SELECT * FROM V_CATALOG.LARGE_CLUSTER_CONFIGURATION_STATUS;

node_name | spread_host_name | control_node_name

----------------------+----------------------+----------------------

v_verticadb_node0001 | v_verticadb_node0001 | v_verticadb_node0001

v_verticadb_node0002 | v_verticadb_node0002 | v_verticadb_node0002

v_verticadb_node0003 | v_verticadb_node0003 | v_verticadb_node0003

v_verticadb_node0004 | v_verticadb_node0004 | v_verticadb_node0004

v_verticadb_node0005 | v_verticadb_node0005 | v_verticadb_node0005

v_verticadb_node0006 | v_verticadb_node0006 | v_verticadb_node0006

v_verticadb_node0007 | v_verticadb_node0007 | v_verticadb_node0007

v_verticadb_node0008 | v_verticadb_node0004 | v_verticadb_node0004

v_verticadb_node0009 | v_verticadb_node0005 | v_verticadb_node0005

v_verticadb_node0010 | v_verticadb_node0006 | v_verticadb_node0006

v_verticadb_node0011 | v_verticadb_node0007 | v_verticadb_node0007

(11 rows)

=> SELECT subcluster_name,node_name,is_primary,control_set_size FROM subclusters;

subcluster_name | node_name | is_primary | control_set_size

--------------------+----------------------+------------+------------------

default_subcluster | v_verticadb_node0001 | t | -1

default_subcluster | v_verticadb_node0002 | t | -1

default_subcluster | v_verticadb_node0003 | t | -1

analytics | v_verticadb_node0004 | f | 4

analytics | v_verticadb_node0005 | f | 4

analytics | v_verticadb_node0006 | f | 4

analytics | v_verticadb_node0007 | f | 4

analytics | v_verticadb_node0008 | f | 4

analytics | v_verticadb_node0009 | f | 4

analytics | v_verticadb_node0010 | f | 4

analytics | v_verticadb_node0011 | f | 4

(11 rows)

Disabling large cluster

To disable large cluster, call SET_CONTROL_SET_SIZE with a value of -1. This value is the default for non-large cluster databases. It tells Vertica to make all nodes into control nodes.

In an Eon Mode database, to fully disable large cluster you must to set the number of control nodes to -1 in every subcluster that has a set number of control nodes. You can see which subclusters have a set number of control nodes by querying the CONTROL_SET_SIZE column of the V_CATALOG.SUBCLUSTERS system table.

The following example resets the number of control nodes set in the previous Eon Mode example.

=> SELECT subcluster_name,node_name,is_primary,control_set_size FROM subclusters;

subcluster_name | node_name | is_primary | control_set_size

--------------------+----------------------+------------+------------------

default_subcluster | v_verticadb_node0001 | t | -1

default_subcluster | v_verticadb_node0002 | t | -1

default_subcluster | v_verticadb_node0003 | t | -1

analytics | v_verticadb_node0004 | f | 4

analytics | v_verticadb_node0005 | f | 4

analytics | v_verticadb_node0006 | f | 4

analytics | v_verticadb_node0007 | f | 4

analytics | v_verticadb_node0008 | f | 4

analytics | v_verticadb_node0009 | f | 4

analytics | v_verticadb_node0010 | f | 4

analytics | v_verticadb_node0011 | f | 4

(11 rows)

=> SELECT SET_CONTROL_SET_SIZE('analytics',-1);

SET_CONTROL_SET_SIZE

----------------------

Control size set

(1 row)

=> SELECT REALIGN_CONTROL_NODES('analytics');

REALIGN_CONTROL_NODES

---------------------------------------------------------------------------------------

The new control node assignments can be viewed in vs_nodes. Call reload_spread(true).

If the subcluster is critical, restart the database. Otherwise, restart the subcluster

(1 row)

=> SELECT RELOAD_SPREAD(true);

RELOAD_SPREAD

---------------

Reloaded

(1 row)

-- After restarting the analytics subcluster...

=> SELECT * FROM V_CATALOG.LARGE_CLUSTER_CONFIGURATION_STATUS;

node_name | spread_host_name | control_node_name

----------------------+----------------------+----------------------

v_verticadb_node0001 | v_verticadb_node0001 | v_verticadb_node0001

v_verticadb_node0002 | v_verticadb_node0002 | v_verticadb_node0002

v_verticadb_node0003 | v_verticadb_node0003 | v_verticadb_node0003

v_verticadb_node0004 | v_verticadb_node0004 | v_verticadb_node0004

v_verticadb_node0005 | v_verticadb_node0005 | v_verticadb_node0005

v_verticadb_node0006 | v_verticadb_node0006 | v_verticadb_node0006

v_verticadb_node0007 | v_verticadb_node0007 | v_verticadb_node0007

v_verticadb_node0008 | v_verticadb_node0008 | v_verticadb_node0008

v_verticadb_node0009 | v_verticadb_node0009 | v_verticadb_node0009

v_verticadb_node0010 | v_verticadb_node0010 | v_verticadb_node0010

v_verticadb_node0011 | v_verticadb_node0011 | v_verticadb_node0011

(11 rows)

=> SELECT subcluster_name,node_name,is_primary,control_set_size FROM subclusters;

subcluster_name | node_name | is_primary | control_set_size

--------------------+----------------------+------------+------------------

default_subcluster | v_verticadb_node0001 | t | -1

default_subcluster | v_verticadb_node0002 | t | -1

default_subcluster | v_verticadb_node0003 | t | -1

analytics | v_verticadb_node0004 | f | -1

analytics | v_verticadb_node0005 | f | -1

analytics | v_verticadb_node0006 | f | -1

analytics | v_verticadb_node0007 | f | -1

analytics | v_verticadb_node0008 | f | -1

analytics | v_verticadb_node0009 | f | -1

analytics | v_verticadb_node0010 | f | -1

analytics | v_verticadb_node0011 | f | -1

(11 rows)

5.4 - Monitoring large clusters

Monitor large cluster traits by querying the following system tables:

-

V_CATALOG.LARGE_CLUSTER_CONFIGURATION_STATUS—Shows the current spread hosts and the control designations in the catalog so you can see if they match.

-

V_MONITOR.CRITICAL_HOSTS—Lists the hosts whose failure would cause the database to become unsafe and force a shutdown.

Tip

The CRITICAL_HOSTS view is especially useful for large cluster arrangements. For non-large clusters, query the CRITICAL_NODES table. -

In an Eon Mode database, the CONTROL_SET_SIZE column of the V_CATALOG.SUBCLUSTERS system table shows the number of control nodes set for each subcluster.

You might also want to query the following system tables:

-

V_CATALOG.FAULT_GROUPS—Shows fault groups and their hierarchy in the cluster.

-

V_CATALOG.CLUSTER_LAYOUT—Shows the relative position of the actual arrangement of the nodes participating in the database cluster and the fault groups that affect them.

6 - Multiple databases on a cluster

Vertica allows you to manage your database workloads by running multiple databases on a single cluster. However, databases cannot share the same node while running.

Example

If you have an 8-node cluster, with database 1 running on nodes 1, 2, 3, 4 and database 2 running on nodes 5, 6, 7, 8, you cannot create a new database in this cluster because all nodes are occupied. But if you stop database 1, you can create a database 3 using nodes 1, 2, 3, 4. Or if you stop both databases 1 and 2, you can create a database 3 using nodes 3, 4, 5, 6. In this latter case, database 1 and database 2 cannot be restarted unless you stop database 3, as they occupy the same nodes.

7 - Fault groups

Note

You cannot create fault groups for an Eon Mode database. Rather, Vertica automatically creates fault groups on a large cluster Eon database; these fault groups are configured around the control nodes and their dependents of each subcluster. These fault groups are managed internally by Vertica and are not accessible to users.Fault groups let you configure an Enterprise Mode database for your physical cluster layout. Sharing your cluster topology lets you use terrace routing to reduce the buffer requirements of large queries. It also helps to minimize the risk of correlated failures inherent in your environment, usually caused by shared resources.

Vertica automatically creates fault groups around control nodes (servers that run spread) in large cluster arrangements, placing nodes that share a control node in the same fault group. Automatic and user-defined fault groups do not include ephemeral nodes because such nodes hold no data.

Consider defining your own fault groups specific to your cluster's physical layout if you want to:

-

Use terrace routing to reduce the buffer requirements of large queries.

-

Reduce the risk of correlated failures. For example, by defining your rack layout, Vertica can better tolerate a rack failure.

-

Influence the placement of control nodes in the cluster.

Vertica supports complex, hierarchical fault groups of different shapes and sizes. The database platform provides a fault group script (DDL generator), SQL statements, system tables, and other monitoring tools.

See High availability with fault groups for an overview of fault groups with a cluster topology example.

7.1 - About the fault group script

To help you define fault groups on your cluster, Vertica provides a script named fault_group_ddl_generator.py in the

/opt/vertica/scripts directory. This script generates the SQL statements you need to run to create fault groups.

The fault_group_ddl_generator.py script does not create fault groups for you, but you can copy the output to a file. Then, when you run the helper script, you can use \i or vsql–f commands to pass the cluster topology to Vertica.

The fault group script takes the following arguments:

-

The database name

-

The fault group input file

For example:

$ python /opt/vertica/scripts/fault_group_ddl_generator.py VMartdb fault_grp_input.out

See also

7.2 - Creating a fault group input file

Use a text editor to create a fault group input file for the targeted cluster.

The following example shows how you can create a fault group input file for a cluster that has 8 racks with 8 nodes on each rack—for a total of 64 nodes in the cluster.

-

On the first line of the file, list the parent (top-level) fault groups, delimited by spaces.

rack1 rack2 rack3 rack4 rack5 rack6 rack7 rack8 -

On the subsequent lines, list the parent fault group followed by an equals sign (=). After the equals sign, list the nodes or fault groups delimited by spaces.

<parent> = <child_1> <child_2> <child_n...>Such as:

rack1 = v_vmart_node0001 v_vmart_node0002 v_vmart_node0003 v_vmart_node0004 rack2 = v_vmart_node0005 v_vmart_node0006 v_vmart_node0007 v_vmart_node0008 rack3 = v_vmart_node0009 v_vmart_node0010 v_vmart_node0011 v_vmart_node0012 rack4 = v_vmart_node0013 v_vmart_node0014 v_vmart_node0015 v_vmart_node0016 rack5 = v_vmart_node0017 v_vmart_node0018 v_vmart_node0019 v_vmart_node0020 rack6 = v_vmart_node0021 v_vmart_node0022 v_vmart_node0023 v_vmart_node0024 rack7 = v_vmart_node0025 v_vmart_node0026 v_vmart_node0027 v_vmart_node0028 rack8 = v_vmart_node0029 v_vmart_node0030 v_vmart_node0031 v_vmart_node0032After the first row of parent fault groups, the order in which you write the group descriptions does not matter. All fault groups that you define in this file must refer back to a parent fault group. You can indicate the parent group directly or by specifying the child of a fault group that is the child of a parent fault group.

Such as:

rack1 rack2 rack3 rack4 rack5 rack6 rack7 rack8 rack1 = v_vmart_node0001 v_vmart_node0002 v_vmart_node0003 v_vmart_node0004 rack2 = v_vmart_node0005 v_vmart_node0006 v_vmart_node0007 v_vmart_node0008 rack3 = v_vmart_node0009 v_vmart_node0010 v_vmart_node0011 v_vmart_node0012 rack4 = v_vmart_node0013 v_vmart_node0014 v_vmart_node0015 v_vmart_node0016 rack5 = v_vmart_node0017 v_vmart_node0018 v_vmart_node0019 v_vmart_node0020 rack6 = v_vmart_node0021 v_vmart_node0022 v_vmart_node0023 v_vmart_node0024 rack7 = v_vmart_node0025 v_vmart_node0026 v_vmart_node0027 v_vmart_node0028 rack8 = v_vmart_node0029 v_vmart_node0030 v_vmart_node0031 v_vmart_node0032

After you create your fault group input file, you are ready to run the fault_group_ddl_generator.py. This script generates the DDL statements you need to create fault groups in Vertica.

If your Vertica database is co-located on a Hadoop cluster, and that cluster uses more than one rack, you can use fault groups to improve performance. See Configuring rack locality.

See also

Creating fault groups7.3 - Creating fault groups

When you define fault groups, Vertica distributes data segments across the cluster. This allows the cluster to be aware of your cluster topology so it can tolerate correlated failures inherent in your environment, such as a rack failure. For an overview, see High Availability With Fault Groups.

Important

Defining fault groups requires careful and thorough network planning, and a solid understanding of your network topology.Prerequisites

To define a fault group, you must have:

-

Superuser privileges

-

An existing database

Run the fault group script

-

As the database administrator, run the

fault_group_ddl_generator.pyscript:python /opt/vertica/scripts/fault_group_ddl_generator.py databasename fault-group-inputfile > sql-filenameFor example, the following command writes the Python script output to the SQL file

fault_group_ddl.sql.$ python /opt/vertica/scripts/fault_group_ddl_generator.py VMart fault_groups_VMart.out > fault_group_ddl.sqlAfter the script returns, you can run the SQL file, instead of multiple DDL statements individually.

Tip

Consider saving the input file so you can modify fault groups later—for example, after expanding the cluster or changing the distribution of control nodes. -

Using vsql, run the DDL statements in

fault_group_ddl.sqlor execute the commands in the file using vsql.=> \i fault_group_ddl.sql -

If large cluster is enabled, realign control nodes with REALIGN_CONTROL_NODES. Otherwise, skip this step.

=> SELECT REALIGN_CONTROL_NODES(); -

Save cluster changes to the Spread configuration file by calling RELOAD_SPREAD:

=> SELECT RELOAD_SPREAD(true); -

Use Administration tools to restart the database.

-

Save changes to the cluster's data layout by calling REBALANCE_CLUSTER:

=> SELECT REBALANCE_CLUSTER();

See also

7.4 - Monitoring fault groups

You can monitor fault groups by querying Vertica system tables or by logging in to the Management Console (MC) interface.

Monitor fault groups using system tables

Use the following system tables to view information about fault groups and cluster vulnerabilities, such as the nodes the cluster cannot lose without the database going down:

-

V_CATALOG.FAULT_GROUPS: View the hierarchy of all fault groups in the cluster.

-

V_CATALOG.CLUSTER_LAYOUT: Observe the arrangement of the nodes participating in the data business and the fault groups that affect them. Ephemeral nodes do not appear in the cluster layout ring because they hold no data.

Monitoring fault groups using Management Console

An MC administrator can monitor and highlight fault groups of interest by following these steps:

-

Click the running database you want to monitor and click Manage in the task bar.

-

Open the Fault Group View menu, and select the fault groups you want to view.

-

(Optional) Hide nodes that are not in the selected fault group to focus on fault groups of interest.

Nodes assigned to a fault group each have a colored bubble attached to the upper-left corner of the node icon. Each fault group has a unique color.If the number of fault groups exceeds the number of colors available, MC recycles the colors used previously.

Because Vertica supports complex, hierarchical fault groups of different shapes and sizes, MC displays multiple fault group participation as a stack of different-colored bubbles. The higher bubbles represent a lower-tiered fault group, which means that bubble is closer to the parent fault group, not the child or grandchild fault group.

For more information about fault group hierarchy, see High Availability With Fault Groups.

7.5 - Dropping fault groups

When you remove a fault group from the cluster, be aware that the drop operation removes the specified fault group and its child fault groups. Vertica places all nodes under the parent of the dropped fault group. To see the current fault group hierarchy in the cluster, query system table

FAULT_GROUPS.

Drop a fault group

Use the DROP FAULT GROUP statement to remove a fault group from the cluster. The following example shows how you can drops the group2 fault group:

=> DROP FAULT GROUP group2;

DROP FAULT GROUP

Drop all fault groups

Use the ALTER DATABASE statement to drop all fault groups, along with any child fault groups, from the specified database cluster.

The following command drops all fault groups from the current database.

=> ALTER DATABASE DEFAULT DROP ALL FAULT GROUP;

ALTER DATABASE

Add nodes back to a fault group

To add a node back to a fault group, you must manually reassign it to a new or existing fault group. To do so, use the CREATE FAULT GROUP and ALTER FAULT GROUP..ADD NODE statements.

See also

8 - Terrace routing

Important

Before you apply terrace routing to your database, be sure you are familiar with large cluster and fault groups.Terrace routing can significantly reduce message buffering on a large cluster database. The following sections describe how Vertica implements terrace routing on Enterprise Mode and Eon Mode databases.

Terrace routing on Enterprise Mode

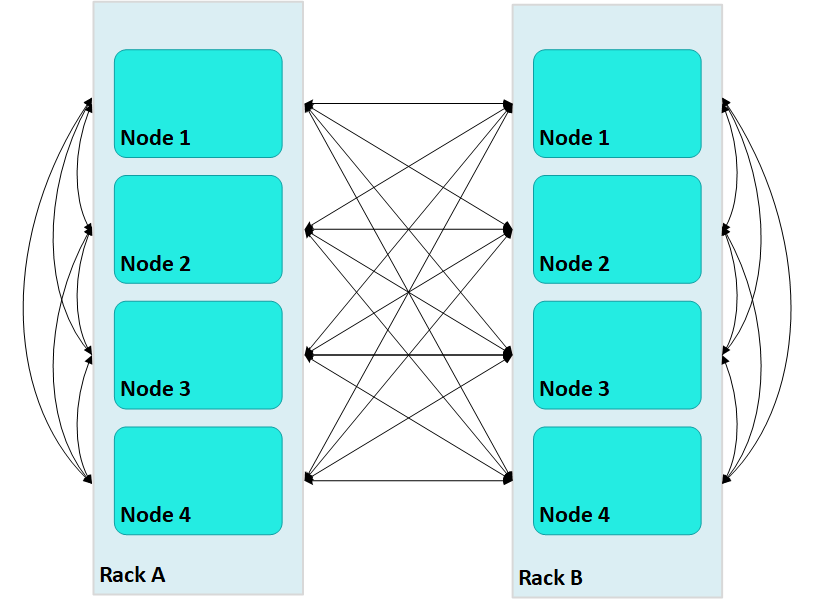

Terrace routing on an Enterprise Mode database is implemented through fault groups that define a rack-based topology. In a large cluster with terrace routing disabled, nodes in a Vertica cluster form a fully connected network, where each non-dependent (control) node sends messages across the database cluster through connections with all other non-dependent nodes, both within and outside its own rack/fault group:

In this case, large Vertica clusters can require many connections on each node, where each connection incurs its own network buffering requirements. The total number of buffers required for each node is calculated as follows:

(numRacks * numRackNodes) - 1

In a two-rack cluster with 4 nodes per rack as shown above, this resolves to 7 buffers for each node.

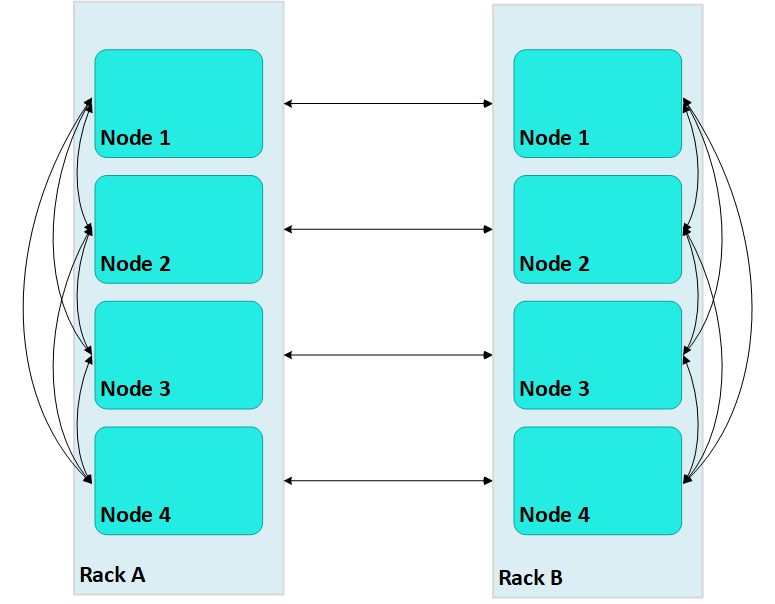

With terrace routing enabled, you can considerably reduce large cluster network buffering. Each nth node in a rack/fault group is paired with the corresponding nth node of all other fault groups. For example, with terrace routing enabled, messaging in the same two-rack cluster is now implemented as follows:

Thus, a message that originates from node 2 on rack A (A2) is sent to all other nodes on rack A; each rack A node then conveys the message to its corresponding node on rack B—A1 to B1, A2 to B2, and so on.

With terrace routing enabled, each node of a given rack avoids the overhead of maintaining message buffers to all other nodes. Instead, each node is only responsible for maintaining connections to:

-

All other nodes of the same rack (

numRackNodes- 1) -

One node on each of the other racks (

numRacks- 1)

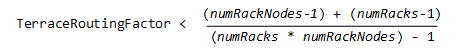

Thus, the total number of message buffers required for each node is calculated as follows:

(numRackNodes-1) + (numRacks-1)

In a two-rack cluster with 4 nodes as shown earlier, this resolves to 4 buffers for each node.

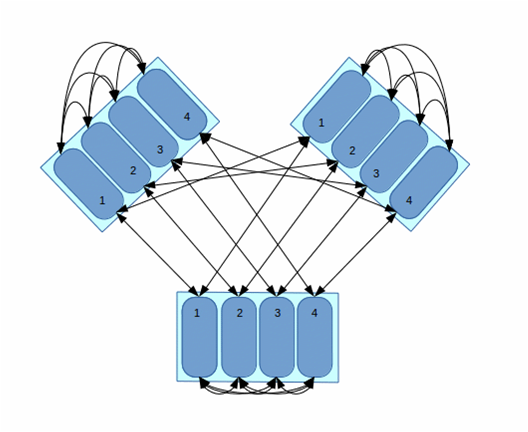

Terrace routing trades time (intra-rack hops) for space (network message buffers). As a cluster expands with additional racks and nodes, the argument favoring this trade off becomes increasingly persuasive:

In this three-rack cluster with 4 nodes per rack, without terrace routing the number of buffers required by each node would be 11. With terrace routing, the number of buffers per node is 5. As a cluster expands with the addition of racks and nodes per rack, the disparity between buffer requirements widens. For example, given a six-rack cluster with 16 nodes per rack, without terrace routing the number of buffers required per node is 95; with terrace routing, 20.

Enabling terrace routing

Terrace routing depends on fault group definitions that describe a cluster network topology organized around racks and their member nodes. As noted earlier, when terrace routing is enabled, Vertica first distributes data within the rack/fault group; it then uses nth node-to-nth node mappings to forward this data to all other racks in the database cluster.

You enable (or disable) terrace routing for any Enterprise Mode large cluster that implements rack-based fault groups through configuration parameter TerraceRoutingFactor. To enable terrace routing, set this parameter as follows:

where:

-

numRackNodes: Number of nodes in a rack -

numRacks: Number of racks in the cluster

For example:

| #Racks | Nodes/rack | #Connections |

Terrace routing enabled if TerraceRoutingFactor less than: | |

|---|---|---|---|---|

| Without terrace routing | With terrace routing | |||

| 2 | 16 | 31 | 16 | 1.94 |

| 4 | 16 | 63 | 18 | 3.5 |

| 6 | 16 | 95 | 20 | 4.75 |

| 8 | 16 | 127 | 22 | 5.77 |

By default, TerraceRoutingFactor is set to 2, which generally ensures that terrace routing is enabled for any Enterprise Mode large cluster that implements rack-based fault groups. Vertica recommends enabling terrace routing for any cluster that contains 64 or more nodes, or if queries often require excessive buffer space.

To disable terrace routing, set TerraceRoutingFactor to a large integer such as 1000:

=> ALTER DATABASE DEFAULT SET TerraceRoutingFactor = 1000;

Terrace routing on Eon Mode

As in Enterprise Mode mode, terrace routing is enabled by default on an Eon Mode database, and is implemented through fault groups. However, you do not create fault groups for an Eon Mode database. Rather, Vertica automatically creates fault groups on a large cluster database; these fault groups are configured around the control nodes and their dependents of each subcluster. These fault groups are managed internally by Vertica and are not accessible to users.

9 - Elastic cluster

Note

Elastic Cluster is an Enterprise Mode-only feature. For scaling your database under Eon Mode, see Scaling your Eon Mode database.You can scale your cluster up or down to meet the needs of your database. The most common case is to add nodes to your database cluster to accommodate more data and provide better query performance. However, you can scale down your cluster if you find that it is over-provisioned, or if you need to divert hardware for other uses.

You scale your cluster by adding or removing nodes. Nodes can be added or removed without shutting down or restarting the database. After adding a node or before removing a node, Vertica begins a rebalancing process that moves data around the cluster to populate the new nodes or move data off nodes about to be removed from the database. During this process, nodes can exchange data that are not being added or removed to maintain robust intelligent K-safety. If Vertica determines that the data cannot be rebalanced in a single iteration due to lack of disk space, then the rebalance operation spans multiple iterations.

To help make data rebalancing due to cluster scaling more efficient, Vertica locally segments data storage on each node so it can be easily moved to other nodes in the cluster. When a new node is added to the cluster, existing nodes in the cluster give up some of their data segments to populate the new node. They also exchange segments to minimize the number of nodes that any one node depends upon. This strategy minimizes the number of nodes that might become critical when a node fails. When a node is removed from the cluster, its storage containers are moved to other nodes in the cluster (which also relocates data segments to minimize how many nodes might become critical when a node fails). This method of breaking data into portable segments is referred to as elastic cluster, as it facilitates enlarging or shrinking the cluster.

The alternative to elastic cluster is re-segmenting all projection data and redistributing it evenly among all database nodes any time a node is added or removed. This method requires more processing and more disk space, as it requires all data in all projections to be dumped and reloaded.

Elastic cluster scaling factor

In a new installation, each node has a scaling factor that specifies the number of local segments (see Scaling factor). Rebalance efficiently redistributes data by relocating local segments provided that, after nodes are added or removed, there are sufficient local segments in the cluster to redistribute the data evenly (determined by MAXIMUM_SKEW_PERCENT). For example, if the scaling factor = 8, and there are initially 5 nodes, then there are a total of 40 local segments cluster-wide.

If you add two additional nodes (seven nodes) Vertica relocates five local segments on two nodes, and six such segments on five nodes, resulting in roughly a 16.7 percent skew. Rebalance relocates local segments only if the resulting skew is less than the allowed threshold, as determined by MAXIMUM_SKEW_PERCENT. Otherwise, segmentation space (and hence data, if uniformly distributed over this space) is evenly distributed among the seven nodes, and new local segment boundaries are drawn for each node, such that each node again has eight local segments.

Note

By default, the scaling factor only has an effect while Vertica rebalances the database. While rebalancing, each node breaks the projection segments it contains into storage containers, which it then moves to other nodes if necessary. After rebalancing, the data is recombined into ROS containers. It is possible to have Vertica always group data into storage containers. See Local data segmentation for more information.Enabling elastic cluster

You enable elastic cluster with ENABLE_ELASTIC_CLUSTER. Query the ELASTIC_CLUSTER system table to verify that elastic cluster is enabled:

=> SELECT is_enabled FROM ELASTIC_CLUSTER;

is_enabled

------------

t

(1 row)

9.1 - Scaling factor

To avoid an increased number of ROS containers, do not enable local segmentation and do not change the scaling factor.

9.2 - Viewing scaling factor settings

To view the scaling factor, query the ELASTIC_CLUSTER table:

=> SELECT scaling_factor FROM ELASTIC_CLUSTER;

scaling_factor

---------------

4

(1 row)

=> SELECT SET_SCALING_FACTOR(6);

SET_SCALING_FACTOR

--------------------

SET

(1 row)

=> SELECT scaling_factor FROM ELASTIC_CLUSTER;

scaling_factor

---------------

6

(1 row)

9.3 - Setting the scaling factor

The scaling factor determines the number of storage containers that Vertica uses to store each projection across the database during rebalancing when local segmentation is enabled. When setting the scaling factor, follow these guidelines:

-

The number of storage containers should be greater than or equal to the number of partitions multiplied by the number of local segments:

num-storage-containers>= (num-partitions*num-local-segments) -

Set the scaling factor high enough so rebalance can transfer local segments to satisfy the skew threshold, but small enough so the number of storage containers does not result in too many ROS containers, and cause ROS pushback. The maximum number of ROS containers (by default 1024) is set by configuration parameter ContainersPerProjectionLimit.

Use the SET_SCALING_FACTOR function to change your database's scaling factor. The scaling factor can be an integer between 1 and 32.

=> SELECT SET_SCALING_FACTOR(12);

SET_SCALING_FACTOR

--------------------

SET

(1 row)

9.4 - Local data segmentation

By default, the scaling factor only has an effect when Vertica rebalances the database. During rebalancing, nodes break the projection segments they contain into storage containers which they can quickly move to other nodes.

This process is more efficient than re-segmenting the entire projection (in particular, less free disk space is required), but it still has significant overhead, since storage containers have to be separated into local segments, some of which are then transferred to other nodes. This overhead is not a problem if you rarely add or remove nodes from your database.

However, if your database is growing rapidly and is constantly busy, you may find the process of adding nodes becomes disruptive. In this case, you can enable local segmentation, which tells Vertica to always segment its data based on the scaling factor, so the data is always broken into containers that are easily moved. Having the data segmented in this way dramatically speeds up the process of adding or removing nodes, since the data is always in a state that can be quickly relocated to another node. The rebalancing process that Vertica performs after adding or removing a node just has to decide which storage containers to relocate, instead of first having to first break the data into storage containers.

Local data segmentation increases the number of storage containers stored on each node. This is not an issue unless a table contains many partitions. For example, if the table is partitioned by day and contains one or more years. If local data segmentation is enabled, then each of these table partitions is broken into multiple local storage segments, which potentially results in a huge number of files which can lead to ROS "pushback." Consider your table partitions and the effect enabling local data segmentation may have before enabling the feature.

9.4.1 - Enabling and disabling local segmentation

To enable local segmentation, use the ENABLE_LOCAL_SEGMENTS function. To disable local segmentation, use the DISABLE_LOCAL_SEGMENTATION function:

=> SELECT ENABLE_LOCAL_SEGMENTS();

ENABLE_LOCAL_SEGMENTS

-----------------------

ENABLED

(1 row)

=> SELECT is_local_segment_enabled FROM elastic_cluster;

is_enabled

------------

t

(1 row)

=> SELECT DISABLE_LOCAL_SEGMENTS();

DISABLE_LOCAL_SEGMENTS

------------------------

DISABLED

(1 row)

=> SELECT is_local_segment_enabled FROM ELASTIC_CLUSTER;

is_enabled

------------

f

(1 row)

9.5 - Elastic cluster best practices

The following are some best practices with regard to local segmentation.

Note

You should always perform a database backup before and after performing any of the operations discussed in this topic. You need to back up before changing any elastic cluster or local segmentation settings to guard against a hardware failure causing the rebalance process to leave the database in an unusable state. You should perform a full backup of the database after the rebalance procedure to avoid having to rebalance the database again if you need to restore from a backup.When to enable local data segmentation

Local data segmentation can significantly speed up the process of resizing your cluster. You should enable local data segmentation if:

-

your database does not contain tables with hundreds of partitions.

-

the number of nodes in the database cluster is a power of two.

-

you plan to expand or contract the size of your cluster.

Local segmentation can result in an excessive number of storage containers with tables that have hundreds of partitions, or in clusters with a non-power-of-two number of nodes. If your database has these two features, take care when enabling local segmentation.

10 - Adding nodes

There are many reasons for adding one or more nodes to an installation of Vertica:

- Increase system performance. Add additional nodes due to a high query load or load latency or increase disk space without adding storage locations to existing nodes.

Note

The database response time depends on factors such as type and size of the application query, database design, data size and data types stored, available computational power, and network bandwidth. Adding nodes to a database cluster does not necessarily improve the system response time for every query, especially if the response time is already short, e.g., less then 10 seconds, or the response time is not hardware bound.-

Make the database K-safe (K-safety=1) or increase K-safety to 2. See Failure recovery for details.

-

Swap a node for maintenance. Use a spare machine to temporarily take over the activities of an existing node that needs maintenance. The node that requires maintenance is known ahead of time so that when it is temporarily removed from service, the cluster is not vulnerable to additional node failures.

-

Replace a node. Permanently add a node to remove obsolete or malfunctioning hardware.

Important

If you install Vertica on a single node without specifying the IP address or host name (or you usedlocalhost), you cannot expand the cluster. You must reinstall Vertica and specify an IP address or host name that is not localhost/127.0.0.1.

Adding nodes consists of the following general tasks:

-

Vertica strongly recommends that you back up the database before you perform this significant operation because it entails creating new projections, refreshing them, and then deleting the old projections. See Backing up and restoring the database for more information.

The process of migrating the projection design to include the additional nodes could take a while; however during this time, all user activity on the database can proceed normally, using the old projections.

-

Configure the hosts you want to add to the cluster.

See Before you Install Vertica. You will also need to edit the hosts configuration file on all of the existing nodes in the cluster to ensure they can resolve the new host.

-

Add the hosts you added to the cluster (in step 3) to the database.

Note

When you add a "host" to the database, it becomes a "node." You can add nodes to your database using either the Administration tools or the Management Console (See Monitoring with MC).

You can also add nodes using the

admintoolscommand line, which allows you to preserve the specific order of the nodes you add.

After you add nodes to the database, Vertica automatically distributes updated configuration files to the rest of the nodes in the cluster and starts the process of rebalancing data in the cluster. See Rebalancing data across nodes for details.

10.1 - Adding hosts to a cluster

After you have backed up the database and configured the hosts you want to add to the cluster, you can now add hosts to the cluster using the

update_vertica script.

You cannot use the MC to add hosts to a cluster in an on-premises environment. However, after the hosts are added to the cluster, the MC does allow you to add the hosts to a database as nodes.

Prerequisites and restrictions

If you installed Vertica on a single node without specifying the IP address or hostname (you used localhost), it is not possible to expand the cluster. You must reinstall Vertica and specify an IP address or hostname.

Procedure to add hosts

From one of the existing cluster hosts, run the update_vertica script with a minimum of the --add-hosts host(s) parameter (where host(s) is the hostname or IP address of the system(s) that you are adding to the cluster) and the --rpm or --deb parameter: